Polynomial Approximation by Least Squares

Distance in a Vector Space

The 2-norm of an integrable function $f$ over an interval $[a, b]$ is defined by

$$\|f\|_2^2 = \int_a^b f(x)^2 \, dx.$$

Least squares approximation minimizes

$$\|f - p_n\|_2^2 = \int_a^b \left( f(x) - p_n(x) \right)^2 dx$$

with respect to all polynomials $p_n$ of degree at most $n$.

We use the approximation

$$p_n(x) = \sum_{i=0}^{n} a_i x^i$$

and

$$\|f - p_n\|_2^2 = \int_a^b \left( f(x) - \sum_{i=0}^{n} a_i x^i \right)^2 dx.$$

The minimum value of the error follows from

$$\frac{\partial}{\partial a_j} \|f - p_n\|_2^2 = 0 = \frac{\partial}{\partial a_j} \int_a^b \left( f(x) - \sum_{i=0}^{n} a_i x^i \right)^2 dx$$

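which is

$$0 = \frac{\partial}{\partial a_j} \int_a^b \left[ f(x)^2 - 2 \sum_{i=0}^{n} a_i x^i f(x) + \left( \sum_{i=0}^{n} a_i x^i \right)^2 \right] dx$$

$$0 = \frac{\partial}{\partial a_j} \int_a^b f(x)^2 \, dx - 2 \frac{\partial}{\partial a_j} \sum_{i=0}^{n} a_i \int_a^b x^i f(x) \, dx + \frac{\partial}{\partial a_j} \int_a^b \left( \sum_{i=0}^{n} a_i x^i \right)^2 dx$$

$$0 = -2 \int_a^b x^j f(x) \, dx + 2 \sum_{i=0}^{n} a_i \int_a^b x^i x^j \, dx$$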
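The derivative of the quadratic term is used above without being spelled out. A short worked version, writing the square as a double sum (the second summation index $k$ is introduced here only for illustration), might read:

```latex
\left( \sum_{i=0}^{n} a_i x^i \right)^2
  = \sum_{i=0}^{n} \sum_{k=0}^{n} a_i a_k x^{i+k},
\qquad
\frac{\partial}{\partial a_j} \sum_{i=0}^{n} \sum_{k=0}^{n} a_i a_k x^{i+k}
  = \sum_{k=0}^{n} a_k x^{j+k} + \sum_{i=0}^{n} a_i x^{i+j}
  = 2 \sum_{i=0}^{n} a_i x^i x^j .
```

The term $f(x)^2$ does not depend on $a_j$ and drops out, and the mixed term contributes $-2 x^j f(x)$, which gives the two integrals in the last line of the derivation.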

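Dividing by 2 and abbreviating the integrals as $b_j = \int_a^b x^j f(x) \, dx$ and $c_{k,l} = \int_a^b x^{k+l-2} \, dx$, with matrix indices $k, l = 1, \dots, n+1$, gives

$$0 = -b_j + \sum_{i=0}^{n} a_i \, c_{j+1,i+1} \quad \text{with } j = 0, 1, 2, \dots, n,$$

that is,

$$\sum_{i=0}^{n} a_i \, c_{j+1,i+1} = b_j \quad \text{with } j = 0, 1, 2, \dots, n.$$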

2 | Lecture_004.nb 2012 Dr. G. Baumann

Matrix Representation

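The system determining the coefficients is

$$\begin{pmatrix}
c_{11} & c_{12} & c_{13} & \cdots & c_{1,n+1} \\
c_{21} & c_{22} & c_{23} & \cdots & c_{2,n+1} \\
\vdots & \vdots & \vdots & \ddots & \vdots \\
c_{n+1,1} & c_{n+1,2} & c_{n+1,3} & \cdots & c_{n+1,n+1}
\end{pmatrix}
\begin{pmatrix} a_0 \\ a_1 \\ \vdots \\ a_n \end{pmatrix}
=
\begin{pmatrix} b_0 \\ b_1 \\ \vdots \\ b_n \end{pmatrix}$$

where

$$c_{i,j} = \int_a^b x^{i+j-2} \, dx = \frac{1}{i + j - 1} \left[ b^{i+j-1} - a^{i+j-1} \right], \quad i, j = 1, \dots, n+1,$$

and

$$b_i = \int_a^b x^i f(x) \, dx, \quad i = 0, \dots, n.$$

Here $b$ without an index is the upper limit of the interval, while $b_i$ denotes a component of the right-hand side.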
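The formulas for $c_{i,j}$ and $b_i$ translate almost directly into Mathematica. The following is a minimal sketch, not part of the original notebook; the names `normalMatrix`, `rightHandSide` and `leastSquaresCoefficients` are made up for illustration, and `f` is assumed to be a function that `Integrate` can handle in closed form.

```mathematica
(* Sketch only: the function names below are illustrative, not from the lecture. *)

(* Matrix c with entries (b^(i+j-1) - a^(i+j-1))/(i+j-1), i, j = 1, ..., n+1 *)
normalMatrix[a_, b_, n_] :=
  Table[(b^(i + j - 1) - a^(i + j - 1))/(i + j - 1), {i, 1, n + 1}, {j, 1, n + 1}]

(* Right-hand side: integrals of t^i f[t] over [a, b], i = 0, ..., n *)
rightHandSide[f_, a_, b_, n_] :=
  Module[{t}, Table[Integrate[t^i f[t], {t, a, b}], {i, 0, n}]]

(* Coefficients {a0, ..., an} of the least squares polynomial *)
leastSquaresCoefficients[f_, a_, b_, n_] :=
  LinearSolve[normalMatrix[a, b, n], rightHandSide[f, a, b, n]]

(* Usage: best straight line for Sin on [0, Pi] *)
leastSquaresCoefficients[Sin, 0, Pi, 1]
```

For this usage example the system is small enough to check by hand: the solution is $a_0 = 2/\pi$ and $a_1 = 0$, so the best straight line for the symmetric sine arch is horizontal.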

While this looks like an easy and straightforward solution to the problem, there are some issues of concern.


Example: Conditioning of Least Square Approximation

If we take $a = 0$, $b = 1$, then the matrix in the equations of the least squares approximation is determined by

$$c_{ij} = \frac{1}{i + j - 1}, \quad i, j = 1, \dots, n+1,$$

and the matrix is a Hilbert matrix, which is very ill-conditioned. We should therefore expect that any attempt to solve the full least squares problem or the discrete version is likely to yield disappointing results, because small errors in the data $b_j$ are strongly amplified in the computed coefficients.
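To get a feeling for how quickly the conditioning deteriorates, one could tabulate the condition number of the Hilbert matrix for a few sizes. The sketch below is not from the lecture; the helper name `condInf` is made up, and the choice of the infinity norm is an assumption made here because it can be evaluated exactly for rational matrices.

```mathematica
(* Condition number in the infinity norm (an assumed choice of norm) *)
condInf[n_] := With[{h = HilbertMatrix[n]},
  Norm[h, Infinity] Norm[Inverse[h], Infinity]]

(* Sizes 2, ..., 8 correspond to polynomial degrees 1, ..., 7 on [0, 1] *)
TableForm[Table[{n, condInf[n]}, {n, 2, 8}],
  TableHeadings -> {None, {"size", "condition number"}}]
```

The first two entries can be verified by hand: 27 for the 2 x 2 matrix and 748 for the 3 x 3 matrix. The later entries grow by more than an order of magnitude with each additional row.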


Example: Least Square Approximation

Given $f(x) = e^x$ with $x \in [0, 1]$, find the best second-order polynomial $p_2(x)$ in the least squares sense. With zero-based indices, the matrix $c$ is given by

$$c_{ij} = \frac{1}{i + j + 1} \quad \text{with } i, j = 0, 1, 2, \dots, n$$

and the right-hand side by

$$b_j = \int_0^1 f(x) \, x^j \, dx \quad \text{with } j = 0, 1, 2, \dots, n.$$

The polynomial is

$$p_2(x) = a_0 + a_1 x + a_2 x^2.$$

First, let us generate the matrix c by

In[1]:= c = Table[1/(i + j + 1), {i, 0, 2}, {j, 0, 2}]; c // MatrixForm

Out[1]//MatrixForm=
1    1/2  1/3
1/2  1/3  1/4
1/3  1/4  1/5

The quantities $b_j$ can be collected in a vector of length 3:

In[2]:= b = Table[Integrate[Exp[x] x^j, {x, 0, 1}], {j, 0, 2}]

Out[2]= {-1 + E, 1, -2 + E}

The system of equations determining the coefficients is

In[4]:= eqs = Thread[c.{a0, a1, a2} == b]; eqs // TableForm

Out[4]//TableForm=
a0 + a1/2 + a2/3 == -1 + E
a0/2 + a1/3 + a2/4 == 1
a0/3 + a1/4 + a2/5 == -2 + E

The solution for the coefficients $a_j$ follows by applying Gaussian elimination:

In[6]:= sol = Solve[eqs, {a0, a1, a2}] // Flatten

Out[6]= {a0 -> 3 (-35 + 13 E), a1 -> 588 - 216 E, a2 -> -570 + 210 E}
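As a cross-check, the same 3 x 3 system can be solved directly as a linear system and the coefficients converted to decimal numbers. This is a small sketch under the assumption that the example function is $f(x) = e^x$, so that the right-hand side is {E - 1, 1, E - 2}; the variable name `coefficients` is chosen here for illustration.

```mathematica
(* Same 3x3 system, solved directly; assumes f(x) = Exp[x] on [0, 1] *)
coefficients = LinearSolve[HilbertMatrix[3], {E - 1, 1, E - 2}];

(* Decimal values of {a0, a1, a2} *)
N[coefficients]
```

Numerically the coefficients are approximately 1.0130, 0.8511 and 0.8392, so $p_2(x)$ stays close to the first terms of the series of $e^x$ without coinciding with them.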

Inserting this solution into the polynomial $p_2(x)$ gives

In[7]:= p2 = a0 + a1 x + a2 x^2 /. sol

Out[7]= 3 (-35 + 13 E) + (588 - 216 E) x + (-570 + 210 E) x^2

A graphical comparison of the function $f(x)$ and $p_2(x)$ shows the quality of the approximation.

In[8]:= Plot[Evaluate[{Exp[x], p2}], {x, 0, 1}, AxesLabel -> {"x", "f(x), p2(x)"}]

Out[8]= [Plot of f(x) and p2(x) for 0 ≤ x ≤ 1; horizontal axis x, vertical axis f(x), p2(x).]

The absolute deviation of $p_2(x)$ from $f(x)$ is shown in the following graph, giving the error of the approximation.

In[10]:= Plot[Evaluate[Abs[Exp[x] - p2]], {x, 0, 1}, AxesLabel -> {"x", "|f(x) - p2(x)|"}]

Out[10]= [Plot of |f(x) - p2(x)| for 0 ≤ x ≤ 1; horizontal axis x, vertical axis |f(x) - p2(x)|, with ticks from 0.002 to 0.014.]
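The error plot can be complemented by two numbers: the 2-norm of the error, which is the quantity the method minimizes, and the largest deviation on the interval. The sketch below is not from the lecture; it assumes $f(x) = e^x$, restates the coefficients of the example, and uses the made-up names `quadFit`, `errorTwoNorm` and `errorMax`. The maximum is only sampled on a grid, which is a simplification.

```mathematica
(* Quadratic from the example, assuming f(x) = Exp[x]; the name quadFit is illustrative *)
quadFit[x_] := 3 (-35 + 13 E) + (588 - 216 E) x + (-570 + 210 E) x^2

(* 2-norm of the error on [0, 1], the quantity minimized by the method *)
errorTwoNorm = Sqrt[NIntegrate[(Exp[x] - quadFit[x])^2, {x, 0, 1}]]

(* Largest sampled deviation on an equidistant grid with step 0.001 *)
errorMax = Max[Table[Abs[Exp[x] - quadFit[x]], {x, 0., 1., 0.001}]]
```

The largest deviation occurs at the right end of the interval and is about 0.015, in line with the scale of the error plot.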

|

6 | Lecture_004.nb 2012 Dr. G. Baumann

You might also like

- A-level Maths Revision: Cheeky Revision ShortcutsFrom EverandA-level Maths Revision: Cheeky Revision ShortcutsRating: 3.5 out of 5 stars3.5/5 (8)

- Numerical DifferentiationDocument17 pagesNumerical DifferentiationamoNo ratings yet

- Lemh106 PDFDocument53 pagesLemh106 PDFA/C Kapil Dev MishraNo ratings yet

- Summary of Syllabus Mathematics G100Document13 pagesSummary of Syllabus Mathematics G100Train_7113No ratings yet

- R (X) P (X) Q (X) .: 1.7. Partial Fractions 32Document7 pagesR (X) P (X) Q (X) .: 1.7. Partial Fractions 32RonelAballaSauzaNo ratings yet

- Application of Derivatives Class 12 IscDocument70 pagesApplication of Derivatives Class 12 Iscgourav97100% (1)

- Control System Lab Manual V 1Document173 pagesControl System Lab Manual V 1Engr. M. Farhan Faculty Member UET Kohat100% (1)

- Complex AnalysisDocument428 pagesComplex AnalysisAritra Lahiri100% (3)

- Mathematics TDocument62 pagesMathematics TRosdy Dyingdemon100% (1)

- Metal Detector-Project ReportDocument26 pagesMetal Detector-Project ReportTimK77% (52)

- m820 Sol 2011Document234 pagesm820 Sol 2011Tom DavisNo ratings yet

- Logarithmic TableDocument168 pagesLogarithmic TablechristopherjudepintoNo ratings yet

- 5.integral Calculus Objectives:: DX DyDocument16 pages5.integral Calculus Objectives:: DX DyAndyMavia100% (1)

- Oldexam Ws0708 SolutionDocument14 pagesOldexam Ws0708 SolutionhisuinNo ratings yet

- Integration: FXDX FX C S X X N FX Ix X NDocument3 pagesIntegration: FXDX FX C S X X N FX Ix X NSalvadora1No ratings yet

- Add Maths Study NotesDocument61 pagesAdd Maths Study NotesElisha James100% (1)

- Course 5: NNN N N N NDocument3 pagesCourse 5: NNN N N N NIustin CristianNo ratings yet

- Y MX B: Linearization and Newton's MethodDocument7 pagesY MX B: Linearization and Newton's MethodTony CaoNo ratings yet

- Numerical Differentiation: X X X H X H X HDocument19 pagesNumerical Differentiation: X X X H X H X HDini AryantiNo ratings yet

- Hw2 - Raymond Von Mizener - Chirag MahapatraDocument13 pagesHw2 - Raymond Von Mizener - Chirag Mahapatrakob265No ratings yet

- Lecture Note 2Document7 pagesLecture Note 2Vasudha KhannaNo ratings yet

- CH2. Locating Roots of Nonlinear EquationsDocument17 pagesCH2. Locating Roots of Nonlinear Equationsbytebuilder25No ratings yet

- Mathematics ReviewerDocument10 pagesMathematics Reviewerian naNo ratings yet

- 2024S 2014a MT1v1 - Sample MT 1Document5 pages2024S 2014a MT1v1 - Sample MT 1Takhir TashmatovNo ratings yet

- Selected Solutions, Griffiths QM, Chapter 1Document4 pagesSelected Solutions, Griffiths QM, Chapter 1Kenny StephensNo ratings yet

- Solutions To Selected Problems-Duda, HartDocument12 pagesSolutions To Selected Problems-Duda, HartTiep VuHuu67% (3)

- Zeashan Zaidi: Lecturer BiostatisticsDocument34 pagesZeashan Zaidi: Lecturer BiostatisticszeashanzaidiNo ratings yet

- Math 53 LE 3 Reviewer ProblemsDocument14 pagesMath 53 LE 3 Reviewer ProblemsJc QuintosNo ratings yet

- Griffiths CH 3 Selected Solutions in Quantum Mechanics Prob 2,5,7,10,11,12,21,22,27Document11 pagesGriffiths CH 3 Selected Solutions in Quantum Mechanics Prob 2,5,7,10,11,12,21,22,27Diane BeamerNo ratings yet

- Some Problems Illustrating The Principles of DualityDocument22 pagesSome Problems Illustrating The Principles of DualityPotnuru VinayNo ratings yet

- 2012 Approximate IntegrationDocument19 pages2012 Approximate IntegrationNguyễn Quốc ThúcNo ratings yet

- SelectionDocument15 pagesSelectionMuhammad KamranNo ratings yet

- Solutions To Home Practice Test-5/Mathematics: Differential Calculus - 2 HWT - 1Document12 pagesSolutions To Home Practice Test-5/Mathematics: Differential Calculus - 2 HWT - 1varunkohliinNo ratings yet

- Principles of Econometrics 4e Chapter 2 SolutionDocument33 pagesPrinciples of Econometrics 4e Chapter 2 SolutionHoyin Kwok84% (19)

- Math013 Calculus I Final Exam Solution, Fall 08Document13 pagesMath013 Calculus I Final Exam Solution, Fall 08JessicaNo ratings yet

- Quadratic Equations Workbook By: Ben McgaheeDocument8 pagesQuadratic Equations Workbook By: Ben Mcgaheemaihuongmaihuong1905No ratings yet

- Solucionario Metodos Numericos V 3Document24 pagesSolucionario Metodos Numericos V 3Javier Ignacio Velasquez BolivarNo ratings yet

- BITSAT 2023 Paper Memory BasedDocument19 pagesBITSAT 2023 Paper Memory Basedkrishbhatia1503No ratings yet

- Lecture11 HandoutDocument9 pagesLecture11 HandoutShahnaz GazalNo ratings yet

- Solutions To Home Work Test/Mathematics: Functions HWT - 1Document7 pagesSolutions To Home Work Test/Mathematics: Functions HWT - 1varunkohliinNo ratings yet

- Numerical Solutions of Fredholm Integral Equation of Second Kind Using Piecewise Bernoulli PolynomialsDocument9 pagesNumerical Solutions of Fredholm Integral Equation of Second Kind Using Piecewise Bernoulli Polynomialsmittalnipun2009No ratings yet

- Time-Dependent Spin Problem From Midterm. WKB Revisited.: Z S S S B S B S H EhDocument4 pagesTime-Dependent Spin Problem From Midterm. WKB Revisited.: Z S S S B S B S H Ehpimpampum111No ratings yet

- Some Inequalities Involving Ratios and Products of The Gamma Function Mridula Garg - Ajay Sharma - Pratibha ManoharDocument7 pagesSome Inequalities Involving Ratios and Products of The Gamma Function Mridula Garg - Ajay Sharma - Pratibha ManoharPePeeleNo ratings yet

- ANother Lecture NotesDocument59 pagesANother Lecture NotesLeilani ManalaysayNo ratings yet

- IntegralDocument30 pagesIntegralWilliam AgudeloNo ratings yet

- Chapter 8. Quadratic Equations and Functions: X A B C A XDocument21 pagesChapter 8. Quadratic Equations and Functions: X A B C A XJoy CloradoNo ratings yet

- Finite Difference Method 10EL20Document34 pagesFinite Difference Method 10EL20muhammad_sarwar_2775% (8)

- Individual Tutorial 2 - BBA3274 Quantitative Method For BusinessDocument8 pagesIndividual Tutorial 2 - BBA3274 Quantitative Method For BusinessHadi Azfar Bikers PitNo ratings yet

- Very Important Q3Document24 pagesVery Important Q3Fatima Ainmardiah SalamiNo ratings yet

- Exercise2 Submission Group 12 Yalcin MehmetDocument10 pagesExercise2 Submission Group 12 Yalcin MehmetMehmet YalçınNo ratings yet

- Final Matlab ManuaDocument23 pagesFinal Matlab Manuaarindam samantaNo ratings yet

- Section 2.7 Polynomials and Rational InequalitiesDocument14 pagesSection 2.7 Polynomials and Rational InequalitiesdavogezuNo ratings yet

- Chapter 4 Numerical Differentiation and Integration 4.3 Elements of Numerical IntegrationDocument9 pagesChapter 4 Numerical Differentiation and Integration 4.3 Elements of Numerical Integrationmasyuki1979No ratings yet

- Problem Solutions - Chapter 4: Yates and Goodman: Probability and Stochastic Processes Solutions ManualDocument37 pagesProblem Solutions - Chapter 4: Yates and Goodman: Probability and Stochastic Processes Solutions ManualAl FarabiNo ratings yet

- Kseeb 2019Document27 pagesKseeb 2019Arif ShaikhNo ratings yet

- F (X) DX F (X) +C Symbol of IntegrationDocument13 pagesF (X) DX F (X) +C Symbol of IntegrationSyed Mohammad AskariNo ratings yet

- Gradient Methods For Minimizing Composite Objective FunctionDocument31 pagesGradient Methods For Minimizing Composite Objective Functionegv2000No ratings yet

- MAS103Document77 pagesMAS103EskothNo ratings yet

- Round 2 SolutionsDocument10 pagesRound 2 Solutionskepler1729No ratings yet

- Green's Functions For The Stretched String Problem: D. R. Wilton ECE DeptDocument34 pagesGreen's Functions For The Stretched String Problem: D. R. Wilton ECE DeptSri Nivas ChandrasekaranNo ratings yet

- Instructor Dr. Karuna Kalita: Finite Element Methods in Engineering ME 523Document40 pagesInstructor Dr. Karuna Kalita: Finite Element Methods in Engineering ME 523Nitesh SinghNo ratings yet

- 4 - Lecture IV (Tort Law) PDFDocument18 pages4 - Lecture IV (Tort Law) PDFMahmoud El-MahdyNo ratings yet

- Numerical Analysis MATH-602 Quiz 001 Time 20 Minutes: Mathematics Department Prof. Dr. Gerd BaumannDocument2 pagesNumerical Analysis MATH-602 Quiz 001 Time 20 Minutes: Mathematics Department Prof. Dr. Gerd BaumannMahmoud El-MahdyNo ratings yet

- 2 - Lecture II - Law and EthicsDocument43 pages2 - Lecture II - Law and EthicsMahmoud El-MahdyNo ratings yet

- Control Lect1Document12 pagesControl Lect1Mahmoud El-MahdyNo ratings yet

- Final Revision Part2Document8 pagesFinal Revision Part2Mahmoud El-MahdyNo ratings yet

- 1 - Legislation, Contracts & Engineering Ethics - Lecture 1 - October 17-2013 PDFDocument18 pages1 - Legislation, Contracts & Engineering Ethics - Lecture 1 - October 17-2013 PDFMahmoud El-MahdyNo ratings yet

- Final Revision Part1Document12 pagesFinal Revision Part1Mahmoud El-MahdyNo ratings yet

- Lect 2Document25 pagesLect 2Mahmoud El-MahdyNo ratings yet

- Lecture 009Document23 pagesLecture 009Mahmoud El-MahdyNo ratings yet

- Orthogonal Polynomials Extra ProblemsDocument6 pagesOrthogonal Polynomials Extra ProblemsMahmoud El-MahdyNo ratings yet

- Lecture 008Document15 pagesLecture 008Mahmoud El-MahdyNo ratings yet

- 1.1 Gaussian Numerical Integration ContinuedDocument33 pages1.1 Gaussian Numerical Integration ContinuedMahmoud El-MahdyNo ratings yet

- Roots of Equations: 1.0.1 Newton's MethodDocument20 pagesRoots of Equations: 1.0.1 Newton's MethodMahmoud El-MahdyNo ratings yet

- Roots of Equations: K K K + K K K + K KDocument17 pagesRoots of Equations: K K K + K K K + K KMahmoud El-MahdyNo ratings yet

- Lecture 011Document58 pagesLecture 011Mahmoud El-MahdyNo ratings yet

- DC Part 2Document29 pagesDC Part 2Mahmoud El-Mahdy100% (1)

- IM Part-1Document14 pagesIM Part-1Mahmoud El-MahdyNo ratings yet

- Tcs 230Document10 pagesTcs 230MuseByKeaneNo ratings yet

- DC Part 1-1Document19 pagesDC Part 1-1Mahmoud El-Mahdy100% (1)

- Lecture 005Document17 pagesLecture 005Mahmoud El-MahdyNo ratings yet

- HMC5843Document19 pagesHMC5843Cihat Sami GüngörNo ratings yet

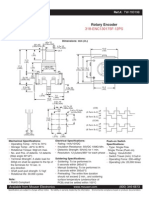

- Rotary EncoderDocument1 pageRotary EncoderMahmoud El-MahdyNo ratings yet

- Roots of Equations: K K K + K K K + K KDocument17 pagesRoots of Equations: K K K + K K K + K KMahmoud El-MahdyNo ratings yet

- 10 1 1 116 1392Document142 pages10 1 1 116 1392Mahmoud El-MahdyNo ratings yet

- May 7, 2009 18:13 WSPC - Proceedings Trim Size: 9in X 6in Autonomous ClimbingDocument8 pagesMay 7, 2009 18:13 WSPC - Proceedings Trim Size: 9in X 6in Autonomous ClimbingMahmoud El-MahdyNo ratings yet

- Paper70 ICARA2004 401 407Document7 pagesPaper70 ICARA2004 401 407Mahmoud El-MahdyNo ratings yet

- Paper70 ICARA2004 401 407Document7 pagesPaper70 ICARA2004 401 407Mahmoud El-MahdyNo ratings yet

- Technical Report - Circuits (Fall 2007)Document5 pagesTechnical Report - Circuits (Fall 2007)Mahmoud El-MahdyNo ratings yet

- SANKALP TEST: Common Monthly Test of 2022-24 (Class-XII) For Cult Metamorphosis BatchesDocument1 pageSANKALP TEST: Common Monthly Test of 2022-24 (Class-XII) For Cult Metamorphosis BatchesPBD ShortsNo ratings yet

- Introduction To Arithmetic SequencesDocument44 pagesIntroduction To Arithmetic SequencesAji PauloseNo ratings yet

- A Boltzmann Based Estimation of Distribution Algorithm: Information Sciences July 2013Document22 pagesA Boltzmann Based Estimation of Distribution Algorithm: Information Sciences July 2013f1095No ratings yet

- DRW Questions 2Document16 pagesDRW Questions 2Natasha Elena TarunadjajaNo ratings yet

- Class 4 Zeroth Law of ThermodynamicsDocument29 pagesClass 4 Zeroth Law of ThermodynamicsBharathiraja MoorthyNo ratings yet

- Lesson 8-Differentiation of Inverse Trigonometric FunctionsDocument10 pagesLesson 8-Differentiation of Inverse Trigonometric FunctionsLuis BathanNo ratings yet

- WebDocument329 pagesWebDon ShineshNo ratings yet

- 3-D Rotations: Using Euler AnglesDocument17 pages3-D Rotations: Using Euler AnglestafNo ratings yet

- Chapter 2: Equations, Inequalities and Absolute ValuesDocument31 pagesChapter 2: Equations, Inequalities and Absolute Valuesjokydin92No ratings yet

- JWB Soal UTS Soal No 2Document102 pagesJWB Soal UTS Soal No 2Sutrisno DrsNo ratings yet

- Putting Differentials Back Into CalculusDocument14 pagesPutting Differentials Back Into Calculus8310032914No ratings yet

- Mathematica Slovaca: Dragan Marušič Tomaž Pisanski The Remarkable Generalized Petersen GraphDocument6 pagesMathematica Slovaca: Dragan Marušič Tomaž Pisanski The Remarkable Generalized Petersen GraphgutterbcNo ratings yet

- CSE330 Assignment1 SolutionDocument7 pagesCSE330 Assignment1 Solutionnehal hasnain refathNo ratings yet

- BS Synopsis-1Document10 pagesBS Synopsis-1Fida HussainNo ratings yet

- Chapter 2 and 3 10Document13 pagesChapter 2 and 3 10iwsjcqNo ratings yet

- MST129 - Applied Calculus 2021-2022 / Spring 1Document13 pagesMST129 - Applied Calculus 2021-2022 / Spring 1Hatem AlsofiNo ratings yet

- Quadratic EquationsDocument8 pagesQuadratic EquationsRishabh AgarwalNo ratings yet

- PM Shri KV Gachibowli Maths Class XII 11 Sample Papers For PracticeDocument66 pagesPM Shri KV Gachibowli Maths Class XII 11 Sample Papers For Practiceamanupadhyay20062007No ratings yet

- Mathematics 1994 Paper 2Document20 pagesMathematics 1994 Paper 2api-38266290% (1)

- Probablistic Number TheoryDocument85 pagesProbablistic Number TheoryShreerang ThergaonkarNo ratings yet

- G8 Mathematics Q3Document6 pagesG8 Mathematics Q3Angelo ReyesNo ratings yet

- Classwiz CW CatalogoDocument2 pagesClasswiz CW CatalogoDionel josue Salzar moyaNo ratings yet

- M53 E3 Reviewer PDFDocument92 pagesM53 E3 Reviewer PDFGen Ira TampusNo ratings yet

- NMTC-at-Junior-level-IX-X-Standards (Level2)Document6 pagesNMTC-at-Junior-level-IX-X-Standards (Level2)asha jalanNo ratings yet