On Random Weights and Unsupervised Feature Learning

Andrew M. Saxe, Pang Wei Koh, Zhenghao Chen, Maneesh Bhand, Bipin Suresh, and Andrew Y. Ng

Stanford University, Stanford, CA 94305

{asaxe,pangwei,zhenghao,mbhand,bipins,ang}@cs.stanford.edu

Abstract

Recently two anomalous results in the literature have shown that certain feature learning architectures can perform very well on object recognition tasks, without training. In this paper we pose the question, why do random weights sometimes do so well? Our answer is that certain convolutional pooling architectures can be inherently frequency selective and translation invariant, even with random weights. Based on this we demonstrate the viability of extremely fast architecture search by using random weights to evaluate candidate architectures, thereby sidestepping the time-consuming learning process. We then show that a surprising fraction of the performance of certain state-of-the-art methods can be attributed to the architecture alone.

1 Introduction

Recently two anomalous results in the literature have shown that certain feature learning architectures with random, untrained weights can do very well on object recognition tasks. In particular, Jarrett et al. [1] found that features from a one-layer convolutional pooling architecture with completely random filters, when passed to a linear classifier, could achieve an average recognition rate of 53% on Caltech101, while unsupervised pretraining and discriminative finetuning of the filters improved performance only modestly to 54.2%. This surprising finding has also been noted by Pinto et al. [2], who evaluated thousands of architectures on a number of object recognition tasks and again found that random weights performed only slightly worse than pretrained weights (James DiCarlo, personal communication and see [3]).

This leads us to two questions: (1) Why do random weights sometimes do so well? and (2) Given the remarkable performance of architectures with random weights, what is the contribution of unsupervised pretraining and discriminative finetuning?

We start by studying the basis of the good performance of these convolutional pooling object recognition systems. Section 2 gives two theorems which show that convolutional pooling architectures can be inherently frequency selective and translation invariant, even when initialized with random weights. We argue that these properties underlie their performance.

In answer to the second question, we show in Section 3 that, for a xed architecture, unsupervised

pretraining and discriminative netuning improve classication performance relative to untrained

random weights. However, we nd that the performance improvement can be modest and some-

times smaller than the performance differences due to architectural parameters. The key to good

performance, then, lies not only in improving the learning algorithms but also in searching for the

most suitable architectures [1]. This motivates a fast heuristic for architecture search in Section 4,

based on our observed empirical correlation between the random-weight performance and the pre-

trained/netuned performance of any given network architecture. This method allows us to sidestep

1

f

Convolution layer

Input layer

Pooling layer

x

n

()

2

k

f

n

k

Valid

Circular

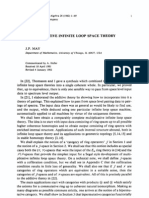

Figure 1: Left: Convolutional square pooling architecture. In our notation x is the portion of the input layer

seen by a particular pooling unit (shown in red), and f is the convolutional lter applied in the rst layer (shown

in green). Right: Valid convolution applies the lter only at locations where f ts entirely; circular convolution

applies f at every position, and permits f to wrap around.

the time-consuming learning process by evaluating candidate architectures using random weights as

a proxy for learned weights.

We conclude by showing that a surprising fraction of performance can be contributed by the architecture alone. In particular, we present a convolutional square-pooling architecture with random weights that achieves competitive results on the NORB dataset, without any feature learning. This demonstrates that a sizeable component of a system's performance can come from the intrinsic properties of the architecture, and not from the learning system. We suggest distinguishing the contributions of architectures from those of learning systems by reporting random weight performance.

2 Analytical characterization of the optimal input

Why might random weights perform so well? As Jarrett et al. [1] found that only some architectures yielded good performance with random weights, a reasonable hypothesis is that particular architectures can naturally compute features well-suited to object recognition tasks. Indeed, Jarrett et al. numerically computed the optimal input to each neuron using gradient descent in the input space, and found that the optimal inputs were often sinusoidal and insensitive to translations. To better understand what features of the input these random-weight architectures might compute, we analytically characterize the optimal input to each neuron for the case of convolutional square-pooling architectures.

The convolutional square-pooling architecture can be envisaged as a two layer neural network (Fig. 1). In the first convolution layer, a bank of filters is applied at each position in the input image. In the second pooling layer, neighboring filter responses are combined together by squaring and then summing them. Intuitively, this architecture incorporates both selectivity for a specific feature of the input due to the convolution stage, and robustness to small translations of the input due to the pooling stage. In response to a single image $I \in \mathbb{R}^{l \times l}$, the convolutional square-pooling architecture will generate many pooling unit outputs. Here we consider a single pooling unit which views a restricted subregion $x \in \mathbb{R}^{n \times n}$, $n \le l$ of the original input image, as shown in Fig. 1. The activation of this pooling unit $p_v(x)$ is calculated as follows: First, a filter $f \in \mathbb{R}^{k \times k}$, $k \le n$ is convolved with $x$ using valid convolution. Valid convolution means that $f$ is applied only at each position inside $x$ such that $f$ lies entirely within $x$ (Fig. 1, top right). This produces a convolution layer of size $(n-k+1) \times (n-k+1)$ which feeds into the pooling layer. The final activation of the pooling unit is the sum of the squares of the elements in the convolution layer. This transformation from the input $x$ to the activation can be written as

$$p_v(x) = \sum_{i=1}^{n-k+1} \sum_{j=1}^{n-k+1} (f *_v x)^2_{ij}$$

where $*_v$ denotes the valid convolution operation.
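As a minimal sketch of the activation formula above (not the authors' implementation), the following NumPy function computes $p_v(x)$ for a single filter. The function name `square_pool_valid` and the example sizes are assumptions made purely for illustration.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def square_pool_valid(f, x):
    """Sum of squared valid-convolution responses of filter f on input x."""
    k = f.shape[0]
    # All k x k windows of x: shape (n - k + 1, n - k + 1, k, k).
    windows = sliding_window_view(x, (k, k))
    # True convolution flips the filter; plain correlation would omit the flip.
    flipped = f[::-1, ::-1]
    conv = np.einsum("ijab,ab->ij", windows, flipped)
    return float(np.sum(conv ** 2))


# Hypothetical sizes: a random 6 x 6 filter on a 20 x 20 input region.
rng = np.random.default_rng(0)
f = rng.standard_normal((6, 6))
x = rng.standard_normal((20, 20))
activation = square_pool_valid(f, x)
```

Because the unit squares a linear response, doubling the input multiplies the activation by four, which is why the analysis that follows restricts attention to norm-one inputs.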

To understand the sort of input features preferred by this architecture, it would be useful to know the set of inputs that maximally activate the pooling unit. For example, intuitively, this architecture should exhibit some translation invariance due to the pooling operation. This would reveal itself as a family of optimal inputs, each differing only by a translation. While the translation invariance of this architecture is simple enough to grasp intuitively, one might also expect that the selectivity of the architecture will be approximately similar to that of the filter $f$ used in the convolution. That is, if the filter $f$ is highly frequency selective, we might expect that the optimal input would be close to a sinusoid at the maximal frequency in $f$, and if the filter $f$ were diffuse or random, we might think that the optimal input would be diffuse or random. Our analysis shows that this latter intuition is in fact false; regardless of the filter $f$, the optimal input will be near a sinusoid at the maximal frequency or frequencies present in the filter.

Figure 2: Left to right: Randomly sampled filter used in the convolution. Square of the magnitude of the Fourier coefficients of the filter. Input that would maximally activate a pooling unit in the case of circular convolution. Magnitude of the Fourier transform of the optimal input. Optimal input for valid convolution. Magnitude of the Fourier transform of the optimal input for valid convolution.

To achieve this result, we first make one modification to the convolutional square-pooling architecture to permit an analytical solution: rather than treating the case of valid convolution, we will instead consider circular convolution. Recall that valid convolution applies the filter $f$ only at locations where $f$ lies entirely within $x$, yielding a result of size $(n-k+1) \times (n-k+1)$. By contrast circular convolution applies the filter at every position in $x$, and allows the filter to wrap around in cases where it does not lie entirely within $x$, as depicted in Fig. 1, right. Both valid and circular convolution produce identical responses in the interior of the input region but differ at the edges, where circular convolution is clearly less natural; however with it we will be able to compute the optimal input exactly, and subsequently we will show that the optimal input for the case of circular convolution is near-optimal for the case of valid convolution.
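The claim that valid and circular convolution agree in the interior can be checked numerically. The sketch below is illustrative only: the helper names `conv_valid` and `conv_circular`, the filter size and the input size are all assumptions. Circular convolution is computed with the 2D FFT of the zero-padded filter, and the positions where the filter did not wrap around are compared with the valid result.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv_valid(f, x):
    """Valid 2D convolution: output has shape (n - k + 1, n - k + 1)."""
    k = f.shape[0]
    windows = sliding_window_view(x, (k, k))
    return np.einsum("ijab,ab->ij", windows, f[::-1, ::-1])


def conv_circular(f, x):
    """Circular 2D convolution of x with f zero-padded to the size of x."""
    n = x.shape[0]
    f_pad = np.zeros((n, n))
    f_pad[: f.shape[0], : f.shape[1]] = f
    # Convolution theorem: pointwise product of the 2D DFTs.
    return np.real(np.fft.ifft2(np.fft.fft2(f_pad) * np.fft.fft2(x)))


rng = np.random.default_rng(1)
k, n = 4, 12  # hypothetical filter and input sizes
f = rng.standard_normal((k, k))
x = rng.standard_normal((n, n))

valid = conv_valid(f, x)        # shape (n - k + 1, n - k + 1)
circular = conv_circular(f, x)  # shape (n, n)
# Output positions at which the filter did not wrap around the border.
interior = circular[k - 1:, k - 1:]
max_gap = np.max(np.abs(interior - valid))
```

With this indexing the two results differ only in the first $k-1$ rows and columns of the circular output, where wrap-around occurs.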

Theorem 2.1 Let $f \in \mathbb{R}^{k \times k}$ and an input dimension $n \ge k$ be given. Let $\tilde{f}$ be formed by zero-padding $f$ to size $n \times n$, let $M = \{(v_1, h_1), (v_2, h_2), \ldots, (v_q, h_q)\}$ be the set of frequencies of maximal magnitude in the discrete Fourier transform of $\tilde{f}$, and suppose that the zero frequency component is not maximal (see footnote 1). Then the set of norm-one inputs that maximally activate a circular convolution square-pooling unit $p_c$ is

$$\left\{ x^{opt} \;\middle|\; x^{opt}[m, s] = \frac{\sqrt{2}}{n} \sum_{j=1}^{q} a_j \cos\left( \frac{2\pi m v_j}{n} + \frac{2\pi s h_j}{n} + \phi_j \right),\ \|a\| = 1,\ a \in \mathbb{R}^q,\ \phi \in \mathbb{R}^q \right\}.$$

Proof The proof is given in Appendix A.

Although Theorem 2.1 allows for the case in which there are multiple maximal frequencies in $f$, if the coefficients of $f$ are drawn independently from a continuous probability distribution, then with probability one $M$ will have just one maximal frequency, $M = \{(v, h)\}$. In this case, the optimal input is simply a sinusoid at this frequency, of arbitrary phase:

$$x^{opt}[m, s] = \frac{\sqrt{2}}{n} \cos\left( \frac{2\pi m v}{n} + \frac{2\pi s h}{n} + \phi \right)$$

This equation reveals two key features of the circular convolution, square-pooling architecture.

1. The frequency of the optimal input is the frequency of maximum magnitude in the filter $f$. Hence the architecture is frequency selective.

2. The phase $\phi$ is unspecified, and hence the architecture is translation invariant.

Footnote 1: For notational simplicity we omit the case where the zero frequency component is maximal, which requires slightly different conditions on $a$ and $\phi$.

Even if the filter $f$ contains a number of frequencies of moderate magnitude, such as might occur in a random filter, the best input will still come from the maximum magnitude frequency. An example of this optimization for one random filter is shown in Fig. 2. In particular, note that the frequency of the optimal input (as shown in the plot of the spectrum) corresponds to the maximum in the spectrum of the random filter.
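Theorem 2.1 can be checked numerically for one random filter. The sketch below is an illustration under stated assumptions rather than the experiment behind Fig. 2: the filter is made zero-mean so that the zero frequency is not maximal, the input size $n$ is odd so that no other frequency coincides with its own negative, and the helper names `pool_circular` and `sinusoid` are hypothetical.

```python
import numpy as np


def pool_circular(f_pad, x):
    """Sum of squared circular-convolution responses, computed via the 2D FFT."""
    conv = np.fft.ifft2(np.fft.fft2(f_pad) * np.fft.fft2(x))
    return float(np.sum(np.abs(conv) ** 2))


rng = np.random.default_rng(0)
k, n = 6, 21  # assumption: odd n, so only the zero frequency is self-conjugate
f = rng.standard_normal((k, k))
f -= f.mean()  # assumption: zero-mean filter, so the DC term is not maximal
f_pad = np.zeros((n, n))
f_pad[:k, :k] = f

# Frequency (v, h) of maximal magnitude in the DFT of the zero-padded filter.
power = np.abs(np.fft.fft2(f_pad)) ** 2
v, h = np.unravel_index(np.argmax(power), power.shape)

m, s = np.meshgrid(np.arange(n), np.arange(n), indexing="ij")


def sinusoid(phase):
    """Norm-one sinusoid at frequency (v, h) with the given phase."""
    return (np.sqrt(2) / n) * np.cos(2 * np.pi * (m * v + s * h) / n + phase)


best = pool_circular(f_pad, sinusoid(0.0))
shifted = pool_circular(f_pad, sinusoid(1.3))  # a different, arbitrary phase

# Random unit-norm inputs should never beat the sinusoid.
trials = rng.standard_normal((200, n, n))
trials /= np.linalg.norm(trials, axis=(1, 2), keepdims=True)
best_random = max(pool_circular(f_pad, t) for t in trials)
```

Here `best` matches the peak of `power`, `shifted` matches `best` (phase does not matter), and `best_random` stays below both, which is the frequency selectivity and translation invariance described above.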

2.1 Optimal input for circular convolution is near-optimal for normal convolution

The above analysis computes the optimal input for the case of circular convolution; object recognition systems, however, typically use valid convolution. Because circular convolution and valid convolution differ only near the edge of the input region, we might expect that the optimal input for one would be quite good for the other. In Fig. 2, the optimal input for valid and circular convolution differs dramatically only near the border of the input region. We make this rigorous in the result below.

Theorem 2.2 Let $p_v(x)$ denote the activation of a single pooling unit in a valid convolution, square-pooling architecture in response to an input $x$, and let $x_v^{opt}$ and $x_c^{opt}$ denote the optimal norm-one inputs for valid and circular convolution, respectively. Then if $x_c^{opt}$ is composed of a single sinusoid,

$$\lim_{n \to \infty} \left| p_v\left(x_v^{opt}\right) - p_v\left(x_c^{opt}\right) \right| = 0.$$

Proof The proof is supplied in Appendix B (deferred to the supplementary material due to space

constraints).

Figure 3: Empirical demonstration of the convergence.

An empirical example illustrating the convergence is given in Fig. 3. This result, in combination with Theorem 2.1, shows that valid convolutional square-pooling architectures will respond near-optimally to sinusoids at the maximum frequency present in the filter. These results hold for arbitrary filters, and hence they hold for random filters. We suggest that these two properties contribute to the good performance reported by Jarrett et al. [1] and Pinto et al. [2]. Frequency selectivity and translation invariance are ingredients well-known to produce high-performing object recognition systems. Although many systems attain frequency selectivity via oriented, band-pass convolutional filters such as Gabor filters, this is not necessary for convolutional square-pooling architectures; even with random weights, these architectures are sensitive to Gabor-like features of their input.
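The comparison in Theorem 2.2 can be explored numerically for small input sizes. The following sketch is not the experiment behind Fig. 3; the helper names, the filter size and the list of input sizes are assumptions. For each $n$ it builds the matrix of the valid convolution explicitly, takes its squared spectral norm as the optimal valid activation, and compares that with the valid activation produced by the circular-optimal sinusoid.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv_valid(f, x):
    """Valid 2D convolution: output has shape (n - k + 1, n - k + 1)."""
    k = f.shape[0]
    windows = sliding_window_view(x, (k, k))
    return np.einsum("ijab,ab->ij", windows, f[::-1, ::-1])


def valid_gap(f, n):
    """Return (p_v at the valid optimum, p_v at the circular optimum)."""
    k = f.shape[0]
    # Matrix of the valid convolution, built column by column from basis images.
    basis = np.eye(n * n).reshape(n * n, n, n)
    A_v = np.stack([conv_valid(f, e).ravel() for e in basis], axis=1)
    # Largest eigenvalue of A_v^T A_v equals the squared spectral norm of A_v.
    p_valid_opt = np.linalg.norm(A_v, 2) ** 2

    # Circular optimum: sinusoid at the peak of the zero-padded filter's DFT.
    f_pad = np.zeros((n, n))
    f_pad[:k, :k] = f
    power = np.abs(np.fft.fft2(f_pad)) ** 2
    v, h = np.unravel_index(np.argmax(power), power.shape)
    m, s = np.meshgrid(np.arange(n), np.arange(n), indexing="ij")
    x_c = np.cos(2 * np.pi * (m * v + s * h) / n)
    x_c /= np.linalg.norm(x_c)  # normalize explicitly to unit norm
    p_valid_circ = float(np.sum(conv_valid(f, x_c) ** 2))
    return p_valid_opt, p_valid_circ


rng = np.random.default_rng(0)
f = rng.standard_normal((4, 4))  # hypothetical 4 x 4 random filter
results = {n: valid_gap(f, n) for n in (8, 12, 16, 20)}
for n, (p_opt, p_circ) in results.items():
    print(f"n={n:2d}  valid optimum={p_opt:.4f}  circular-optimal input={p_circ:.4f}")
```

The second number can never exceed the first, since the first is the maximum over all norm-one inputs; the theorem concerns how the difference between them behaves as $n$ grows.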

2.2 Empirical evaluation of the effect of convolution

To show that convolutional networks indeed detect more salient features, we tested the classification performance of both convolutional and non-convolutional square-pooling networks across a variety of architectural parameters on variants of the NORB and CIFAR-10 datasets. For NORB, we took the left side of the stereo images in the normalized-uniform set and downsized them to 32x32, and for CIFAR-10, we converted each 32x32 image from RGB to grayscale. We term these modified datasets NORB-mono and CIFAR-10-mono.

Classification experiments were run on 11 different architectures with varying filter sizes {4x4, 8x8, 12x12, 16x16}, pooling sizes {3x3, 5x5, 9x9} and filter strides {1, 2}. On NORB-mono, we used 10 unique sets of convolutional and 10 unique sets of non-convolutional random weights for each architecture, giving a total of 220 networks, while we used 5/5 sets on CIFAR-10-mono, for a total of 110 networks. The classification accuracies of the sets of random weights were averaged to give a final score for the convolutional and non-convolutional versions of each architecture. We used a linear SVM [4] for classification, with regularization parameter $C$ determined by cross-validation over $\{10^{-3}, 10^{-1}, 10^{1}\}$. Algorithms were initially prototyped on the GPU with Accelereyes Jacket.

Figure 4: Classification performance of convolutional architectures vs non-convolutional architectures

As expected, the convolutional random-weight networks outperformed the non-convolutional random-weight networks by an average of 3.8 ± 0.29% on NORB-mono (Fig. 4) and 1.5 ± 0.93% on CIFAR-10-mono. We found that the distribution the random weights were drawn from (e.g. uniform, Laplacian, Gaussian) did not affect their classification performance, so long as the distribution was centered about 0.

Finally, we note that non-convolutional networks still performed significantly better than the raw-pixel baseline of 72.6% on NORB-mono and 28.2% on CIFAR-10-mono. Moreover, on CIFAR-10-mono, some networks performed equally well with or without convolution, even though convolutional networks did better on average. While we would expect an improvement over the raw-pixel baseline just from the fact that the neural networks work in a higher-dimensional space (e.g., see [5]), preliminary experiments indicate that the observed performance of non-convolutional random networks stems from other architectural factors such as filter locality and the form of the nonlinearities applied to each hidden unit activation. This suggests that convolution is just one among many different architectural features that play a part in providing good classification performance.

3 What is the contribution of pretraining and fine tuning?

The unexpectedly high performance of random-weight networks on NORB-mono leads us to a central question: are unsupervised pretraining and discriminative finetuning necessary, or can the right architecture compensate?

We investigate this by comparing the performance of pretrained and finetuned convolutional networks with random-weight convolutional networks on the NORB and CIFAR-10 datasets. Our convolutional networks were pretrained in an unsupervised fashion through local receptive field Topographic Independent Components Analysis (TICA), a feature-learning algorithm introduced by Le et al. [6] that was shown to achieve high performance on both NORB and CIFAR-10, and which operates on the same class of square-pooling architectures as our networks. We use the same experimental settings as before, with 11 architectures and 10 random initializations per architecture for the NORB dataset and 5 random initializations per architecture for the CIFAR-10 dataset. Pretraining these with TICA and then finetuning them gives us a total of 110 random-weight and 110 trained networks for NORB, and likewise 55 / 55 networks for CIFAR-10. Finetuning was carried out by backpropagating softmax error signals. For speed, we terminate after just 80 iterations of L-BFGS [7].

As one would expect, the top-performing networks had trained weights, and pretraining and finetuning invariably increased the performance of a given architecture. This was especially true for CIFAR-10, where the top architecture using trained weights achieved an accuracy of 59.5 ± 0.3%, while the top architecture using random weights only achieved 53.2 ± 0.3%. Within our range of parameters, at least, pretraining and finetuning were necessary to attain near-top classification performance on CIFAR-10.

More surprising, however, was finding that many learnt networks lose out in terms of performance to random-weight networks with different architectural parameters. For instance, the architecture with 8x8 filters, a convolutional stride size of 1 and a pooling region of 5x5 gets 89.6 ± 0.3% with random weights on NORB, while the (8x8, 2, 3x3) architecture gets 86.5 ± 0.5% with pretrained and finetuned weights. Likewise, on CIFAR-10, the (4x4, 1, 3x3) architecture gets 53.2 ± 0.3% with random weights, while the (16x16, 1, 3x3) architecture gets 50.9 ± 1.7% with trained weights.

We can hence conclude that to get good performance, one cannot focus solely on the learning algorithm and neglect a search over a range of architectural parameters, especially since we often only have a broad architectural prior to work with. An important consideration, then, is to be able to perform this search efficiently. In the next section, we present and justify a heuristic for doing so.

Figure 5: Classification performance of random-weight networks vs pretrained and finetuned networks. Left: NORB-mono. Right: CIFAR-10-mono. (Error bars represent a 95% confidence interval about the mean.)

4 Fast architecture selection

When we plot the classification performance of random-weight architectures against trained-weight architectures, a distinctive trend emerges: we see that architectures which perform well with random weights also tend to perform well with pretrained and finetuned weights, and vice versa (Fig. 5). Intuitively, our analysis in Section 2 suggests that random-weight performance is not truly random but should correlate with the corresponding trained-weight performance, as both are linked to intrinsic properties of the architecture. Indeed, this happens in practice.

This suggests that large-scale searches of the space of possible network architectures can be carried out by evaluating the mean performance of such architectures over several random initializations. This would allow us to determine suitable values for architecture-specific parameters without the need for computationally expensive pretraining and finetuning. A subset of the best performing architectures could then be selected to be pretrained and finetuned (see footnote 2).

As classification is significantly faster than pretraining and finetuning, this process of heuristic architecture search provides a commensurate speedup over the normal approach of evaluating each pretrained and finetuned network, as shown in Table 1 (see footnote 3).

Table 1: Time comparison between normal architecture search and random weight architecture search

As a concrete example, we see from Fig. 5 that if we had performed this random-weight architectural search and selected the top three random-weight architectures for pretraining and finetuning, we would have found the top-performing overall architecture for both NORB-mono and CIFAR-10-mono.
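The search heuristic of this section can be sketched in a few lines. Everything named here is hypothetical: `random_weight_search` is an illustrative helper, the candidate list is the full Cartesian product of the parameter values from Section 2.2 (the paper evaluated 11 of these combinations), and `toy_evaluate` returns made-up placeholder scores that stand in for training a linear classifier on one random-weight network. None of the numbers are results from the paper.

```python
from statistics import mean


def random_weight_search(architectures, evaluate, n_seeds=5, top_k=3):
    """Rank architectures by mean random-weight accuracy and keep the top_k."""
    scores = {
        arch: mean(evaluate(arch, seed) for seed in range(n_seeds))
        for arch in architectures
    }
    ranked = sorted(architectures, key=lambda arch: scores[arch], reverse=True)
    return ranked[:top_k], scores


# Candidates in (filter size, stride, pooling size) notation.
architectures = [
    (filter_size, stride, pool_size)
    for filter_size in (4, 8, 12, 16)
    for stride in (1, 2)
    for pool_size in (3, 5, 9)
]


def toy_evaluate(arch, seed):
    """Placeholder score, NOT real data: a synthetic stand-in for the accuracy
    of a linear classifier on features from one random-weight network."""
    filter_size, stride, pool_size = arch
    jitter = 0.01 * ((seed % 3) - 1)  # mimics variation across random seeds
    penalty = 0.01 * abs(filter_size - 8) + 0.02 * stride + 0.005 * pool_size
    return 0.9 - penalty + jitter


# Only the shortlisted architectures would go on to pretraining and finetuning.
shortlist, scores = random_weight_search(architectures, toy_evaluate)
```

Averaging over several seeds before ranking follows footnote 2, which notes that a single random initialization is not a reliable guide to an architecture's quality.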

5 Distinguishing the contributions of architecture and learning

We round off the paper by presenting evidence that current state-of-the-art feature detection systems derive a surprising amount of performance from their architecture alone. In particular, we focus once again on local receptive field TICA [6], which has achieved classification performance superior to many other methods reported in the literature. As TICA involves a square-pooling architecture with sparsity-maximizing pretraining, we investigate how well a simple convolutional square-pooling architecture can perform without any learning. It turns out that a convolutional and expanded version (see footnote 4) of Le et al.'s top-performing architecture is sufficient to obtain highly competitive results on NORB, as shown in Table 2 below.

Footnote 2: We note that it is insufficient to evaluate an architecture based on a single random initialization because the performance difference between random initializations is not generally negligible. Also, while there was a significant correlation between the mean performance of an architecture using random weights and its performance after training, a particular high performing initialization did not generally perform well after pretraining and finetuning.

Footnote 3: Different architectures have different numbers of hidden units and consequently take different amounts of time to train, but the trend is similar across all architectures, so here we only report the speedup with one representative architecture.

With such results, it becomes important to distinguish the contributions of architectures from those of learning systems by reporting the performance of random weights. At the least, recognizing these separate contributions will help us to home in on the good learning algorithms, which might not always give the best reported performances if they are paired with bad architectures; similarly, we will be better able to sift out the good architectures independently from the learning algorithms.

Table 2: Classification results for various methods on NORB

6 Conclusion

The performance of random-weight networks with convolutional pooling architectures demonstrates the important role architecture plays in feature representation for object recognition. We find that random-weight networks reflect intrinsic properties of their architecture; for instance, convolutional pooling architectures enable even random-weight networks to be frequency selective, and we prove this in the case of square pooling.

One major practical result from this study is a new method for fast architectural selection, as experimental results show that the performance of random-weight networks is significantly correlated with the performance of such architectures after pretraining and finetuning. While random weights are no substitute for pretraining and finetuning, we hope their use for architecture search will improve the performance of state-of-the-art systems.

Acknowledgments This work was supported by the DARPA Deep Learning program under contract number FA8650-10-C-7020. AMS is supported by NDSEG and Stanford Graduate fellowships. We warmly thank Quoc Le, Will Zou, Chenguang Zhu, and three anonymous reviewers for helpful comments.

A Optimal input for circular convolution

The appendices provide proofs of Theorems 2.1 and 2.2. They assume some familiarity with Fourier transforms and linear algebra. Because of space constraints, please refer to the supplementary material for Appendix B.

The activation of a single pooling unit is determined by first convolving a filter $\tilde{f} \in \mathbb{R}^{n \times n}$ with a portion of the input image $\tilde{x} \in \mathbb{R}^{n \times n}$, and then computing the sum of the squares of the convolution coefficients. Since convolution is a linear operator, we can recast the above problem as a matrix norm problem

$$\max_{x \in \mathbb{R}^{n^2}} \|A_c x\|_2^2 \qquad (1)$$

$$\text{subject to } \|x\|_2 = 1,$$

where the matrix $A_c$ implements the two-dimensional circular convolution of $f$ and $x$, where $f$ and $x$ are formed by flattening $\tilde{f}$ and $\tilde{x}$, respectively, into a column vector in column-major order.

Footnote 4: The architectural parameters of this network are the same as that of the 96.2%-scoring architecture in [6], with the exception that ours is convolutional, and has 48 maps instead of 16, since without supervised finetuning, random-weight networks are less prone to overfitting.

The matrix $A_c$ has special structure that we can exploit to find the optimal solution. In particular, $A_c$ is block circulant, i.e., each row of blocks is a circular shift of the previous row,

$$A_c = \begin{bmatrix} A_1 & A_2 & \cdots & A_{n-1} & A_n \\ A_n & A_1 & \cdots & A_{n-2} & A_{n-1} \\ \vdots & \vdots & \ddots & \vdots & \vdots \\ A_3 & A_4 & \cdots & A_1 & A_2 \\ A_2 & A_3 & \cdots & A_n & A_1 \end{bmatrix}.$$

Additionally each block $A_i$ is itself circulant, i.e., each row is a circular shift of the previous row,

$$A_i = \begin{bmatrix} a_1 & a_2 & \cdots & a_{n-1} & a_n \\ a_n & a_1 & \cdots & a_{n-2} & a_{n-1} \\ \vdots & \vdots & \ddots & \vdots & \vdots \\ a_3 & a_4 & \cdots & a_1 & a_2 \\ a_2 & a_3 & \cdots & a_n & a_1 \end{bmatrix},$$

where each row contains an appropriate subset of the filter coefficients used in the convolution. Hence the matrix $A_c$ is doubly block circulant. Returning to (1), we obtain the optimization problem

$$\max_{x \in \mathbb{R}^{n^2},\ x \neq 0} \frac{x^T A_c^T A_c x}{x^T x}. \qquad (2)$$

This is a positive semidefinite quadratic form, for which the solution is the eigenvector associated with the maximal eigenvalue of $A_c^T A_c$. To obtain an analytical solution we will reparametrize the optimization to diagonalize $A_c^T A_c$ so that the eigenvalues and eigenvectors may be read off of the diagonal. We change variables to $z = Fx$ where $F$ is the unitary 2D discrete Fourier transform matrix. The objective then becomes

$$\frac{x^T A_c^T A_c x}{x^T x} = \frac{z^* F A_c^T A_c F^* z}{z^* F F^* z} \qquad (3)$$

$$= \frac{z^* F A_c^T F^* F A_c F^* z}{z^* z} \qquad (4)$$

$$= \frac{z^* D^* D z}{z^* z} \qquad (5)$$

where from (3) to (4) we have twice used the fact that the Fourier transform is unitary ($F^* F = I$), and from (4) to (5) we have used the fact that the Fourier transform diagonalizes block circulant matrices (which corresponds to the convolution theorem $\mathcal{F}\{f * x\} = \mathcal{F}f \cdot \mathcal{F}x$), that is, $F A_c F^* = D$. The matrix $D$ is diagonal, with coefficients $D_{ii} = n(Ff)_i$, equal to the Fourier coefficients of the convolution filter $f$ scaled by $n$. Hence we obtain the equivalent optimization problem

$$\max_{z \in \mathbb{C}^{n^2},\ z \neq 0} \frac{z^* |D|^2 z}{z^* z}, \qquad (6)$$

$$\text{subject to } F^* z \in \mathbb{R}^{n^2} \qquad (7)$$

where the matrix $|D|^2$ is diagonal with entries $|D|^2_{ii} = n^2 |(Ff)_i|^2$, the square of the magnitude of the Fourier coefficients of $f$ scaled by $n^2$. The constraint $F^* z \in \mathbb{R}^{n^2}$ ensures that $x$ is real, despite $z$ being complex. Because the coefficient matrix is diagonal, we can read off the eigenvalues as $\lambda_i = |D|^2_{ii} = n^2 |(Ff)_i|^2$ with corresponding eigenvector $e_i$, the $i$th unit vector. The global solution to the optimization problem, setting aside the reality condition, is any mixture of eigenvectors corresponding to maximal eigenvalues, scaled to have unit norm.

To establish the optimal input taking account of the reality constraint, we must ensure that Fourier coefficients corresponding to negative frequencies are the complex conjugates of those at the corresponding positive frequencies, that is, the solution must satisfy the reality condition $z_{-i} = \bar{z}_i$. One choice for satisfying the reality condition is to take

$$z_j = \begin{cases} \dfrac{a_{|j|}}{\sqrt{2}}\, e^{i\,\mathrm{sgn}(j)\,\phi_{|j|}} & \text{if } |j| \text{ indexes a maximal frequency} \\ 0 & \text{otherwise} \end{cases}$$

where $a, \phi \in \mathbb{R}^q$ are vectors of arbitrary coefficients, one for each maximal frequency in $Ff$, and $\|a\| = 1$. Then, for nonzero $z_j$,

$$z_{-j} = \frac{a_{|-j|}}{\sqrt{2}}\, e^{i\,\mathrm{sgn}(-j)\,\phi_{|-j|}} = \frac{a_{|j|}}{\sqrt{2}}\, e^{-i\,\mathrm{sgn}(j)\,\phi_{|j|}} = \bar{z}_j$$

so the reality condition holds. Since this attains the maximum for the problem without the reality constraint, it must also be the maximum for the complete problem. Converting $z$ back to the time domain proves the result.
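The diagonalization step of the proof can be verified numerically on a small instance. This is a sketch under assumed sizes ($n = 5$, $k = 3$) with a hypothetical helper `conv_circular`: it builds $A_c$ explicitly using column-major flattening, forms the unitary 2D DFT matrix as a Kronecker product, and checks that $F A_c F^*$ is diagonal with entries $n(Ff)_i$.

```python
import numpy as np


def conv_circular(f_pad, x):
    """Circular 2D convolution via the convolution theorem."""
    return np.real(np.fft.ifft2(np.fft.fft2(f_pad) * np.fft.fft2(x)))


rng = np.random.default_rng(0)
k, n = 3, 5  # hypothetical sizes, small enough to build A_c explicitly
f_pad = np.zeros((n, n))
f_pad[:k, :k] = rng.standard_normal((k, k))

# Build A_c column by column; images are flattened in column-major order.
A_c = np.zeros((n * n, n * n))
for j in range(n * n):
    e = np.zeros(n * n)
    e[j] = 1.0
    image = e.reshape((n, n), order="F")
    A_c[:, j] = conv_circular(f_pad, image).flatten(order="F")

# Unitary 2D DFT matrix acting on column-major vectors.
F1 = np.fft.fft(np.eye(n)) / np.sqrt(n)
F = np.kron(F1, F1)

D = F @ A_c @ F.conj().T
off_diagonal = D - np.diag(np.diag(D))

# Unnormalized 2D DFT of the padded filter, which equals n * (F f).
f_hat = np.fft.fft2(f_pad).flatten(order="F")

# Largest eigenvalue of A_c^T A_c, to compare with max |D_ii|^2.
top_eigenvalue = np.linalg.eigvalsh(A_c.T @ A_c).max()
```

In this instance `off_diagonal` vanishes to numerical precision, the diagonal of `D` matches `f_hat`, and `top_eigenvalue` equals the largest squared magnitude in `f_hat`, in line with the eigenvalues read off in the proof.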


References

[1] K. Jarrett, K. Kavukcuoglu, M. Ranzato, and Y. LeCun. What is the best multi-stage architecture for object recognition? In ICCV, 2009.

[2] N. Pinto, D. Doukhan, J. J. DiCarlo, and D. D. Cox. A high-throughput screening approach to discovering good forms of biologically inspired visual representation. PLoS Computational Biology, 5(11), November 2009.

[3] N. Pinto and D. D. Cox. An evaluation of the invariance properties of a biologically-inspired system for unconstrained face recognition. In BIONETICS, 2010.

[4] R.-E. Fan, K.-W. Chang, C.-J. Hsieh, X.-R. Wang, and C.-J. Lin. LIBLINEAR: A library for large linear classification. Journal of Machine Learning Research, 9:1871-1874, 2008.

[5] A. Rahimi and B. Recht. Weighted sums of random kitchen sinks: Replacing minimization with randomization. In NIPS, 2008.

[6] Q. V. Le, J. Ngiam, Z. Chen, P. Koh, D. Chia, and A. Ng. Tiled convolutional neural networks. In NIPS, in press, 2010.

[7] M. Schmidt. minFunc, http://www.cs.ubc.ca/~schmidtm/Software/minFunc.html. 2005.

[8] Y. LeCun, F. J. Huang, and L. Bottou. Learning methods for generic object recognition with invariance to pose and lighting. In CVPR, 2004.

[9] Y. Boureau, J. Ponce, and Y. LeCun. A Theoretical Analysis of Feature Pooling in Visual Recognition. In ICML, 2010.

[10] G. J. Tee. Eigenvectors of block circulant and alternating circulant matrices. New Zealand Journal of Mathematics, 36:195-211, 2007.

[11] R. M. Gray. Toeplitz and Circulant Matrices: A review. Foundations and Trends in Communications and Information Theory, 2(3), 2005.

[12] N. K. Bose and K. J. Boo. Asymptotic Eigenvalue Distribution of Block-Toeplitz Matrices. Signal Processing, 44(2):858-861, 1998.

[13] A. Krizhevsky. Learning multiple layers of features from tiny images. Master's thesis, Technical report, Computer Science Department, University of Toronto, 2009.

[14] D. Erhan, Y. Bengio, A. Courville, P. Manzagol, P. Vincent, and S. Bengio. Why does unsupervised pre-training help deep learning? Journal of Machine Learning Research, 11:625-660, 2010.

[15] G. Hinton, S. Osindero, and Y. Teh. A fast learning algorithm for deep belief nets. Neural Computation, 18(7):1527-1554, 2006.

[16] V. Nair and G. Hinton. 3D Object Recognition with Deep Belief Nets. In NIPS, 2009.

[17] Y. Bengio and Y. LeCun. Scaling learning algorithms towards A.I. In Large-Scale Kernel Machines. MIT Press, 2007.

[18] R. Salakhutdinov and H. Larochelle. Efficient learning of deep Boltzmann machines. In AISTATS, 2010.


- Fast Discrete Curvelet TransformsDocument44 pagesFast Discrete Curvelet TransformsjiboyvNo ratings yet

- Annals of MathematicsDocument12 pagesAnnals of Mathematicskehao chengNo ratings yet

- Dynamic Logistic RegressionDocument7 pagesDynamic Logistic Regressiongchagas6019No ratings yet

- CNN Notes on Convolutional Neural NetworksDocument8 pagesCNN Notes on Convolutional Neural NetworksGiri PrakashNo ratings yet

- Competition and Self-Organisation - Kohonen NetsDocument12 pagesCompetition and Self-Organisation - Kohonen NetsAnonymous I0bTVBNo ratings yet

- Interpolation PDFDocument7 pagesInterpolation PDFeduliborioNo ratings yet

- Line of Sight Rate Estimation With PFDocument5 pagesLine of Sight Rate Estimation With PFAlpaslan KocaNo ratings yet

- Notes On Micro Controller and Digital Signal ProcessingDocument70 pagesNotes On Micro Controller and Digital Signal ProcessingDibyajyoti BiswasNo ratings yet

- Robust Uncertainty Principles: Exact Signal Reconstruction From Highly Incomplete Frequency InformationDocument21 pagesRobust Uncertainty Principles: Exact Signal Reconstruction From Highly Incomplete Frequency InformationGilbertNo ratings yet

- Multiplicative Infinite Loop Space Theory: Department of Mathematics. University of Chicago, IL 60637, USADocument69 pagesMultiplicative Infinite Loop Space Theory: Department of Mathematics. University of Chicago, IL 60637, USAEpic WinNo ratings yet

- Unit 2aDocument31 pagesUnit 2aAkshaya GopalakrishnanNo ratings yet

- Neural Networks and Deep Learning (PE - V) (18CSE23) Unit - 4Document11 pagesNeural Networks and Deep Learning (PE - V) (18CSE23) Unit - 4Sathwik ChandraNo ratings yet

- e Suppl Cor BerteroDocument68 pagese Suppl Cor BerteroArvind VishnubhatlaNo ratings yet

- MCMC OriginalDocument6 pagesMCMC OriginalAleksey OrlovNo ratings yet

- Maximum Likelihood Sequence Estimators:: A Geometric ViewDocument9 pagesMaximum Likelihood Sequence Estimators:: A Geometric ViewGeorge MathaiNo ratings yet

- Identification of Surface Defects in Textured Materials Using Wavelet PacketsDocument5 pagesIdentification of Surface Defects in Textured Materials Using Wavelet Packetsapi-3706534No ratings yet

- Function Approximation Using Robust Wavelet Neural Networks: Sheng-Tun Li and Shu-Ching ChenDocument6 pagesFunction Approximation Using Robust Wavelet Neural Networks: Sheng-Tun Li and Shu-Ching ChenErezwaNo ratings yet

- Optimally Rotation-Equivariant DirectionalDocument8 pagesOptimally Rotation-Equivariant DirectionalEloisa PotrichNo ratings yet

- Mean Shift Algo 3Document5 pagesMean Shift Algo 3ninnnnnnimoNo ratings yet

- Class03 Rkhs ScribeDocument8 pagesClass03 Rkhs ScribeSergio Pérez-ElizaldeNo ratings yet

- Mathematical Foundations of Information TheoryFrom EverandMathematical Foundations of Information TheoryRating: 3.5 out of 5 stars3.5/5 (9)

- An Introduction To Support Vector Machines and Other Kernel-Based Learning MethodsDocument17 pagesAn Introduction To Support Vector Machines and Other Kernel-Based Learning MethodsJônatas Oliveira SilvaNo ratings yet

- Summary of Quantum Field Theory: 1 What Is QFT?Document4 pagesSummary of Quantum Field Theory: 1 What Is QFT?shivsagardharamNo ratings yet

- Computing Persistent Homology: Afra Zomorodian and Gunnar CarlssonDocument15 pagesComputing Persistent Homology: Afra Zomorodian and Gunnar CarlssonPatricia CortezNo ratings yet

- Bob Coecke - Quantum Information-Flow, Concretely, AbstractlyDocument17 pagesBob Coecke - Quantum Information-Flow, Concretely, AbstractlyGholsasNo ratings yet

- The Physical Meaning of Replica Symmetry BreakingDocument15 pagesThe Physical Meaning of Replica Symmetry BreakingpasomagaNo ratings yet

- A Group Theoretic Perspective On Unsupervised Deep LearningDocument2 pagesA Group Theoretic Perspective On Unsupervised Deep LearningTroyNo ratings yet

- Hybrid Control of Load Simulator For Unmanned Aerial Vehicle Based On Wavelet NetworksDocument5 pagesHybrid Control of Load Simulator For Unmanned Aerial Vehicle Based On Wavelet NetworksPhuc Pham XuanNo ratings yet

- Kernels and Distances For Structured DataDocument28 pagesKernels and Distances For Structured DataRazzNo ratings yet

- FAQs Wavelets Transform ApplicationsDocument10 pagesFAQs Wavelets Transform ApplicationsAndyBasuNo ratings yet

- Neural Network Ensembles, Cross Validation, and Active LearningDocument8 pagesNeural Network Ensembles, Cross Validation, and Active LearningMalim Muhammad SiregarNo ratings yet

- The Genetic Algorithm For A Signal EnhancementDocument5 pagesThe Genetic Algorithm For A Signal EnhancementCarlyleEnergyNo ratings yet

- Schrodinger 1936Document8 pagesSchrodinger 1936base1No ratings yet

- Advanced QM Lecture NotesDocument92 pagesAdvanced QM Lecture Noteslinfv sjdnfos djfms kjdiNo ratings yet

- International Journal of Pure and Applied Mathematics No. 3 2013, 365-375Document12 pagesInternational Journal of Pure and Applied Mathematics No. 3 2013, 365-375KarthiNo ratings yet

- Fourier Sampling & Simon's Algorithm: 4.1 Reversible ComputationDocument11 pagesFourier Sampling & Simon's Algorithm: 4.1 Reversible Computationtoto_bogdanNo ratings yet

- Quantum Bounds on Detector Efficiencies for Violating Bell InequalitiesDocument10 pagesQuantum Bounds on Detector Efficiencies for Violating Bell InequalitiesJerimiahNo ratings yet

- Recursion: Unit 10Document18 pagesRecursion: Unit 10Aila DarNo ratings yet

- Articulo Distribución en RedesDocument6 pagesArticulo Distribución en RedesCristian Alejandro Vejar BarajasNo ratings yet

- Sketching Valuation FunctionsDocument11 pagesSketching Valuation Functionspmarlowe98No ratings yet

- Comparasion of Pain Intensity of AMSA Injection With Infiltation Anesthetic Technique in Maxillary Periodontal SurDocument5 pagesComparasion of Pain Intensity of AMSA Injection With Infiltation Anesthetic Technique in Maxillary Periodontal SurAbbé BusoniNo ratings yet

- Comparasion of The Pain Levels of Computer Controlled and Conventional Alaesthesia Techniques in Prosthodontic TreDocument7 pagesComparasion of The Pain Levels of Computer Controlled and Conventional Alaesthesia Techniques in Prosthodontic TreAbbé BusoniNo ratings yet

- Comparasion of The Pain Levels of Computer Controlled and Conventional Alaesthesia Techniques in Prosthodontic TreDocument7 pagesComparasion of The Pain Levels of Computer Controlled and Conventional Alaesthesia Techniques in Prosthodontic TreAbbé BusoniNo ratings yet

- Comparasion of Pain Intensity of AMSA Injection With Infiltation Anesthetic Technique in Maxillary Periodontal SurDocument5 pagesComparasion of Pain Intensity of AMSA Injection With Infiltation Anesthetic Technique in Maxillary Periodontal SurAbbé BusoniNo ratings yet

- CompuDent Anesthetic Efficacy Burns PDFDocument8 pagesCompuDent Anesthetic Efficacy Burns PDFAbbé BusoniNo ratings yet

- Comparasion of The Pain Levels of Computer Controlled and Conventional Alaesthesia Techniques in Prosthodontic TreDocument7 pagesComparasion of The Pain Levels of Computer Controlled and Conventional Alaesthesia Techniques in Prosthodontic TreAbbé BusoniNo ratings yet

- A Cilinical Assessment On The Efficacy of The Anterior and Middle Superior Alveolar Nerve Block Tehnique During eDocument6 pagesA Cilinical Assessment On The Efficacy of The Anterior and Middle Superior Alveolar Nerve Block Tehnique During eAbbé BusoniNo ratings yet

- AMSA Alternate To Multole Infiltrations in Maxillary AmaesthesiaDocument6 pagesAMSA Alternate To Multole Infiltrations in Maxillary AmaesthesiaAbbé BusoniNo ratings yet

- CompuDent Anesthetic Efficacy Burns PDFDocument8 pagesCompuDent Anesthetic Efficacy Burns PDFAbbé BusoniNo ratings yet

- Tree of Latent Mixtures for Bayesian Modelling of High Dimensional DataDocument8 pagesTree of Latent Mixtures for Bayesian Modelling of High Dimensional DataAbbé BusoniNo ratings yet

- A Cilinical Assessment On The Efficacy of The Anterior and Middle Superior Alveolar Nerve Block Tehnique During eDocument6 pagesA Cilinical Assessment On The Efficacy of The Anterior and Middle Superior Alveolar Nerve Block Tehnique During eAbbé BusoniNo ratings yet

- 77navier-Ispitni ZadaciDocument4 pages77navier-Ispitni ZadaciAbbé BusoniNo ratings yet

- UputstvoDocument54 pagesUputstvoAbbé BusoniNo ratings yet

- Competitive Mixtures of Simple Neurons: Karthik Sridharan Matthew J. Beal Venu GovindarajuDocument4 pagesCompetitive Mixtures of Simple Neurons: Karthik Sridharan Matthew J. Beal Venu GovindarajuAbbé BusoniNo ratings yet

- Automatically Extracting Nominal Mentions of Events With A Bootstrapped Probabilistic ClassifierDocument8 pagesAutomatically Extracting Nominal Mentions of Events With A Bootstrapped Probabilistic ClassifierAbbé BusoniNo ratings yet

- Variational Bayesian Learning of Directed Graphical Models With Hidden VariablesDocument44 pagesVariational Bayesian Learning of Directed Graphical Models With Hidden VariablesAbbé BusoniNo ratings yet

- On Profiling Mobility and Predicting Locations of Campus-Wide Wireless Network UsersDocument15 pagesOn Profiling Mobility and Predicting Locations of Campus-Wide Wireless Network UsersAbbé BusoniNo ratings yet

- Multimodal Deep Learning for Audio-Visual Speech ClassificationDocument9 pagesMultimodal Deep Learning for Audio-Visual Speech ClassificationAbbé BusoniNo ratings yet

- Hierarchical Dirichlet Processes: Ywteh@eecs - Berkeley.eduDocument34 pagesHierarchical Dirichlet Processes: Ywteh@eecs - Berkeley.eduAbbé BusoniNo ratings yet

- Low-Cost Accelerometers For Robotic Manipulator PerceptionDocument7 pagesLow-Cost Accelerometers For Robotic Manipulator PerceptionAbbé BusoniNo ratings yet

- Grasping Novel Objects with Depth SegmentationDocument8 pagesGrasping Novel Objects with Depth SegmentationAbbé BusoniNo ratings yet

- Multi-Camera Object Detection For Robotics: Adam Coates Andrew Y. NGDocument8 pagesMulti-Camera Object Detection For Robotics: Adam Coates Andrew Y. NGAbbé BusoniNo ratings yet

- Probabilistic Model for Learning Semantic Word VectorsDocument8 pagesProbabilistic Model for Learning Semantic Word VectorsAbbé BusoniNo ratings yet

- Icml11 EncodingVsTrainingDocument8 pagesIcml11 EncodingVsTrainingAbbé BusoniNo ratings yet

- LDA ModelDocument30 pagesLDA ModelTrần Văn HiếnNo ratings yet

- Icml11 EncodingVsTrainingDocument8 pagesIcml11 EncodingVsTrainingAbbé BusoniNo ratings yet

- Manual de Vigas HP 50GDocument9 pagesManual de Vigas HP 50GamadoromanNo ratings yet

- Multinomijalna RaspodelaDocument5 pagesMultinomijalna RaspodelaAbbé BusoniNo ratings yet

- Raspored Pocetka KursevaDocument1 pageRaspored Pocetka KursevaAbbé BusoniNo ratings yet

- Performance: Lenovo Legion 5 Pro 16ACH6HDocument5 pagesPerformance: Lenovo Legion 5 Pro 16ACH6HsonyNo ratings yet

- Maximabook PDFDocument121 pagesMaximabook PDFWarley GramachoNo ratings yet

- Modumate Raises $1.5M Seed Funding To Automate Drafting For ArchitectsDocument2 pagesModumate Raises $1.5M Seed Funding To Automate Drafting For ArchitectsPR.comNo ratings yet

- Boyce/Diprima 9 Ed, CH 2.1: Linear Equations Method of Integrating FactorsDocument15 pagesBoyce/Diprima 9 Ed, CH 2.1: Linear Equations Method of Integrating FactorsAnonymous OrhjVLXO5sNo ratings yet

- LG CM8440 PDFDocument79 pagesLG CM8440 PDFALEJANDRONo ratings yet

- t370hw02 VC AuoDocument27 pagest370hw02 VC AuoNachiket KshirsagarNo ratings yet

- Jean Abreu CVDocument3 pagesJean Abreu CVABREUNo ratings yet

- LIT1126 ACT20M Datasheet 03 14Document16 pagesLIT1126 ACT20M Datasheet 03 14alltheloveintheworldNo ratings yet

- V11 What's New and Changes PDFDocument98 pagesV11 What's New and Changes PDFGNo ratings yet

- Frontiers in Advanced Control SystemsDocument290 pagesFrontiers in Advanced Control SystemsSchreiber_DiesesNo ratings yet

- Berjuta Rasanya Tere Liye PDFDocument5 pagesBerjuta Rasanya Tere Liye PDFaulia nurmalitasari0% (1)

- Medical Image ProcessingDocument24 pagesMedical Image ProcessingInjeti satish kumarNo ratings yet

- LogDocument57 pagesLogEva AriantiNo ratings yet

- International Technology Roadmap For Semiconductors: 2008 Itrs OrtcDocument23 pagesInternational Technology Roadmap For Semiconductors: 2008 Itrs Ortcvishal garadNo ratings yet

- Hardware WorksheetDocument4 pagesHardware WorksheetNikita KaurNo ratings yet

- Baby Shark Color by Number Printables!Document2 pagesBaby Shark Color by Number Printables!siscaNo ratings yet

- Easychair Preprint: Adnene Noughreche, Sabri Boulouma and Mohammed BenbaghdadDocument8 pagesEasychair Preprint: Adnene Noughreche, Sabri Boulouma and Mohammed BenbaghdadTran Quang Thai B1708908No ratings yet

- Low Cost PCO Billing Meter PDFDocument1 pageLow Cost PCO Billing Meter PDFJuber ShaikhNo ratings yet

- The Apache OFBiz ProjectDocument2 pagesThe Apache OFBiz ProjecthtdvulNo ratings yet

- Churn Consultancy For V Case SharingDocument13 pagesChurn Consultancy For V Case SharingObeid AllahNo ratings yet

- Audio Encryption Optimization: Harsh Bijlani Dikshant Gupta Mayank LovanshiDocument5 pagesAudio Encryption Optimization: Harsh Bijlani Dikshant Gupta Mayank LovanshiAman Kumar TrivediNo ratings yet

- Satelec-X Mind AC Installation and Maintainance GuideDocument50 pagesSatelec-X Mind AC Installation and Maintainance Guideagmho100% (1)

- Imvu Free Credits-Fast-Free-Usa-Ios-Pc-AndroidDocument8 pagesImvu Free Credits-Fast-Free-Usa-Ios-Pc-Androidapi-538330524No ratings yet

- Function X - A Universal Decentralized InternetDocument24 pagesFunction X - A Universal Decentralized InternetrahmahNo ratings yet

- LivewireDocument186 pagesLivewireBlessing Tendai ChisuwaNo ratings yet

- Oed 2,0 2022Document139 pagesOed 2,0 2022Ellish ErisNo ratings yet

- Blockchain Systems and Their Potential Impact On Business ProcessesDocument19 pagesBlockchain Systems and Their Potential Impact On Business ProcessesHussain MoneyNo ratings yet

- Wind Turbine Generator SoftwareDocument3 pagesWind Turbine Generator SoftwareamitNo ratings yet

- Max Heap C ImplementationDocument11 pagesMax Heap C ImplementationKonstantinos KonstantopoulosNo ratings yet

- 3 Process Fundamentals Chapter 2 and 3Document74 pages3 Process Fundamentals Chapter 2 and 3Terry Conrad KingNo ratings yet