Copyright

© Attribution Non-Commercial (BY-NC)


Linear Regression: An approach for forecasting

Neeraj Bhatia, Oracle Corporation

We often encounter situations in which we are asked to forecast the performance of Oracle-based systems. Questions such as the following come to mind:

How much load from a particular business activity can our existing system support before running out of gas?

What is the optimal volume of a business activity that the system can support without any performance problem?

If the workload grows by x percent every quarter, when will we need to add more capacity to the system?

The questions you are asked to answer may vary according to your business requirements, but the bottom line remains the same. As a Capacity Planner, you are asked to do the capacity planning and inform management of additional capacity requirements in a proactive fashion.

This paper is about forecasting the performance of Oracle-based systems. Throughout the paper, I explain a statistical method called Linear Regression, an industry-proven, easy and time-saving technique for answering such questions. I hope that after reading the paper you will feel more confident when forecasting Oracle performance.

The approach followed here is based on a statistical technique, so if you do not have a statistics background, the text and terminology may at times feel a bit hard to digest. Don't worry! I have tried to explain the statistical terms in detail, at times diverting from the main theme to do so.

What is Linear Regression?

In very simple words, regression analysis is a method for investigating relationships among variables. In the context of Oracle, examples of such relationships are number of sessions vs. memory utilization, physical I/O vs. disk subsystem utilization, and so on. Regression relations can be classified as linear or nonlinear, and as simple or multiple. For the sake of applicability, here we are concerned only with simple linear regression (or simply, Linear Regression).

Linear Regression tries to find a linear relationship between two variables. The general form of such a relation is y = mx + c, which is the equation of a straight line (don't be put off by the mathematical terminology). Here c represents the y-intercept of the line and m represents the slope.

If this seems a bit complex, let me explain it in simple words. As performance analysts, we need to find a relationship between two technical metrics, such as user calls and CPU utilization. Such a relationship can be expressed as an equation. Here the variable user calls is used to predict the value of CPU utilization and is known as the explanatory variable, or simply the predictor. The variable whose value is to be predicted is known as the response variable or dependent variable. We generally denote the response variable by Y and the predictor variable by X. Applying this notation to the above example, we can write the relation as:

CPU utilization = m * user calls + c

Here m and c are known as regression coefficients or parameters, whose values we need to determine. Once we have these values, we can predict the system's CPU utilization from the value of user calls.

Sample data for the case study discussed in the following sections (31 samples):

Sample#                      1      2      3      4      5      6      7      8      9     10     11     12     13     14     15     16
Order Lines/day          16483  13142  12015  11986   1119      0      0  12259   6531  14086  12797  13141    454      1   5971  10901
Avg CPU Utilization (%)  27.01  32.43  21.74  20.56   2.85   1.41   1.45  46.38  21.95  29.55  30.04  28.08   3.26   1.62  29.41  40.02

Sample#                     17     18     19     20     21     22     23     24     25     26     27     28     29     30     31
Order Lines/day          14271  13728  12938   1158      0  11450   5311  17073  11336   7340  11330      0  10679  12803  12827
Avg CPU Utilization (%)  29.86  28.34  34.82   3.22   1.43  34.22  23.58  33.60  23.36  26.76   4.31   2.62  33.44  29.19  28.11

Exploring relationships with Scatters and Correlations

Before we use linear regression to predict the value of a response variable, two conditions should be met.

There is a linear relationship between the response and predictor variables. In other words, if we draw a scatter diagram of X against Y, the points should fall roughly along a straight line.

The correlation between the response and predictor variables should be strong.

Together, these two conditions indicate a strong linear relation between the response and predictor variables. Let's discuss them one by one using an example.

Throughout this paper, we will discuss a data warehouse support system. The major workload is warehouse orders, so the key business metric is identified as the number of order lines. We wish to find a relation that lets us predict CPU utilization from the number of order lines entered into the system.

Here the response variable (Y) is CPU utilization and the predictor variable (X) is the number of order lines.

We have 31 samples of CPU utilization and number of order lines, collected throughout the month (see the sample data table).


Exploring relationships with Scatter plot

A scatter plot is a two-dimensional graph that displays pairs of data, one pair per sample, in (x, y) format. We can use Excel's Chart Wizard to plot a scatter diagram for the above example.

Figure 1: Scatter plot of Order lines vs. CPU Utilization (x-axis: Number of Order Lines (X); y-axis: CPU Utilization (Y))

Here you can see that the relationship appears to follow a straight line, except for some points where CPU utilization does not follow the trend. These points are known as Outliers, and we will discuss them later in the paper.

Now that the linearity condition is confirmed, we need to check how strong the relation is.

Exploring relationships with Correlation Coefficient

The correlation coefficient (r) measures the strength and direction of the linear relationship between the response and predictor variables. It is a number between -1 and +1. The higher the absolute value of r, the stronger the linear relationship between the variables. A negative correlation coefficient represents a negative relation between the response and predictor variables, meaning that if X increases then Y decreases, and vice versa. We can calculate the correlation coefficient of a set of x and y values using the following formula:

$$ r = \frac{\sum_i (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_i (x_i - \bar{x})^2 \, \sum_i (y_i - \bar{y})^2}} $$

where $\bar{x}$ and $\bar{y}$ are the means of the x-values and y-values.

Again, there is no need to solve this equation by hand; Excel's predefined function CORREL() is available for us (good news!).

The square of the correlation coefficient (r²) denotes how much of the variation in the response variable can be explained by the predictor variable. In our example, the correlation coefficient is r = 0.818 and r² = 0.669, so we can say that 66.9% of the variation in CPU utilization can be explained by the order lines/day data. The remaining 33.1% cannot be explained by the predictor variable under observation, but by some other variables (other technical metrics). To forecast precisely, we need to find the variable that best explains the response variable, in other words, the one whose correlation strength is highest.

A correlation coefficient of +1 or -1 indicates perfect correlation between the response and predictor variables (you will rarely find it in real environments), while zero (0) indicates the absence of correlation. Any other value between -1 and +1 indicates a limited degree of correlation. The following table* gives the practical meaning of various correlation coefficients:

Correlation Coefficient (r)    Practical Meaning
0.0 to 0.2                     Very weak
0.2 to 0.4                     Weak
0.4 to 0.7                     Moderate
0.7 to 0.9                     Strong
0.9 to 1.0                     Very strong

* Reference 1
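As a rough illustration of the correlation formula and the table above, here is a minimal Python sketch. Python is not used in the original paper, and the names pearson_r and strength_label are hypothetical. The sketch assumes that each range in the table includes its lower bound, which the table itself does not specify.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient r for paired samples xs and ys."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined when one variable is constant")
    return sxy / math.sqrt(sxx * syy)


def strength_label(r):
    """Practical meaning of r, following the table above.

    Assumption: each range includes its lower bound.
    """
    magnitude = abs(r)
    if magnitude < 0.2:
        return "very weak"
    if magnitude < 0.4:
        return "weak"
    if magnitude < 0.7:
        return "moderate"
    if magnitude < 0.9:
        return "strong"
    return "very strong"


# Toy data (not from the paper): y falls by exactly 2 for each unit of x,
# so the relation is perfectly linear and negative.
r = pearson_r([1, 2, 3, 4], [8, 6, 4, 2])
print(r, strength_label(r))
# The paper's r before outlier removal falls in the 0.7 to 0.9 band.
print(strength_label(0.818))
```

The sign of r carries the direction of the relation, so the label is derived from the absolute value only.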

Regression Coefficients Estimation

Now that we have established a strong linear relationship between the response variable and the predictor, we are ready to estimate the regression coefficients. This is equivalent to finding the equation of the straight line that best fits the points on the scatter diagram of the response variable versus the predictor variable. Various methods are available, one of which is known as the least squares method. It gives the equation of the straight line that minimizes the sum of squares of the vertical distances from each point to the line.

Using the least squares method, the slope m and y-intercept c are given by:

$$ m = \frac{\sum_i (x_i - \bar{x})(y_i - \bar{y})}{\sum_i (x_i - \bar{x})^2} $$

$$ c = \bar{y} - m \, \bar{x} $$

where $\bar{x}$ is the mean of the x-values and $\bar{y}$ is the mean of the y-values.

If we don't want to evaluate these formulas by hand, Microsoft Excel is there to help. Its predefined statistical functions SLOPE() and INTERCEPT() take the Y and X values and return the slope and y-intercept of the regression line, respectively.

Excel can also plot the best-fit line along with its equation. Select the graph's points, right-click and choose Add Trendline. Select a linear trend line and, in the Options tab, check the 'Display equation on chart' box.
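For readers who prefer code to spreadsheets, the least squares formulas can be sketched in Python as follows. This is an illustrative sketch rather than the paper's own method of calculation (the paper uses Excel), and fit_line is a hypothetical name.

```python
def fit_line(xs, ys):
    """Return (slope, intercept) of the least-squares line y = m*x + c."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of squared deviations of x, and of cross-products of deviations.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; the slope is undefined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Toy data (not from the paper) lying exactly on the line y = 2x + 1.
slope, intercept = fit_line([0, 1, 2, 3], [1, 3, 5, 7])
print(slope, intercept)
```

Passing the order lines/day values as xs and the CPU utilization values as ys would play the same role as Excel's SLOPE() and INTERCEPT().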


Here is the scatter plot for our example along with the best-fit line and its equation:

Figure 2: Best-fit line for Order lines vs. CPU Utilization plot

In this case, slope m = 0.0019 and y-intercept c = 4.6317 (0.001944 and 4.631658 before rounding, as shown in the trend-line equation on the chart).

Forecasting through Linear Regression

Now we have all the data we need for forecasting: the equation of the straight line that best fits the response and predictor variables.

Suppose we want to predict the CPU utilization when there are 13141 order lines/day. Using the unrounded coefficients from the trend-line equation, we can calculate this as follows:

Y-estimated = 0.001944 * 13141 + 4.631658 = 30.18%

From the sample data, we know that at 13141 order lines/day the observed CPU utilization is 28.08%, so the actual and estimated CPU utilization differ by 2.10 percentage points. This difference is known as the residual. Formally, the residual is the observed value of y minus the estimated value of y; here it is 28.08 - 30.18 = -2.10. In general, a positive residual means you have underestimated y at that point, and a negative residual means you have overestimated y.
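The forecast and residual for this sample can be reproduced with a few lines of Python. This is a small sketch, assuming the unrounded trend-line coefficients shown on the Figure 2 chart (y = 0.001944x + 4.631658); the function names are hypothetical.

```python
# Coefficients taken from the trend-line equation on the Figure 2 chart.
SLOPE = 0.001944
INTERCEPT = 4.631658


def predict_cpu(order_lines, slope=SLOPE, intercept=INTERCEPT):
    """Estimated CPU utilization (%) for a given number of order lines/day."""
    return slope * order_lines + intercept


def residual(observed, estimated):
    """Residual = observed y minus estimated y."""
    return observed - estimated


estimate = predict_cpu(13141)
print(round(estimate, 2))                    # 30.18
print(round(residual(28.08, estimate), 2))   # -2.1 (the model overestimated)
```

Using the rounded slope 0.0019 instead would give about 29.60, which is why the unrounded coefficients are used here.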

Residuals are expected in any data set. Some data points, however, lie far from the main data trend; such data points are known as Outliers. In a real production environment, Outliers may have various causes, such as backups, one-time report generation, or problems in data collection. Any process that is not part of the routine workload can produce Outliers. While Outliers distort the regression coefficients, they sometimes alert us to problems in the data collection process. The bottom line is that Outlier removal is the most important part of forecasting through regression analysis. When we detect Outliers, we need to consider whether they are part of the normal workload. If they are, we should include them in our analysis; otherwise, we should remove them. The important thing to note is that we should have proper documentation and justification for every data point that is removed.

Figure 2 (chart details): Scatter Plot (CPU utilization vs Order Lines/day); x-axis: Order Lines per day (X); y-axis: CPU Utilization (Y); trend line y = 0.001944x + 4.631658, R² = 0.668916

Outlier Removal Process

The Outlier removal process can be frustrating and time-consuming, and we should know when to stop. Discussion with business/system specialists may help us identify outliers, that is, points that are not part of the normal workload. Statistically, Outliers can be detected using the following method.

First, standardize the residuals: subtract the mean (average) of the residuals from each residual and divide the result by the standard deviation of the residuals. Least-squares residuals have the property that their mean is zero. In other words, the positive residuals (points above the regression line) and the negative residuals (points below it) sum to zero.

The following table contains the standardized residuals for the case study we are discussing:

Sample  Order Lines (X)  CPU Utilization (Y)  Estimated Y  Residual  Residual Square  Stnd Residual
    27            11330                 4.31        26.66    -22.35         499.5241          -2.87
     8            12259                46.38        29.42     16.95         287.3514           2.53
    16            10901                40.02        25.83     14.19         201.4710           1.84
    15             5971                29.41        16.24     13.17         173.4282           1.70
     1            16483                27.01        36.68     -9.67          93.4851          -1.25
    23             5311                23.58        14.96      8.62          74.3461           1.12
    29            10679                33.44        25.39      8.05          64.7328           1.04
    26             7340                26.76        18.90      7.86          61.7408           1.02
     4            11986                20.56        27.94     -7.38          54.3975          -0.95
    22            11450                34.22        26.89      7.33          53.6799           0.95
     3            12015                21.74        27.99     -6.25          39.0856          -0.81
    19            12938                34.82        29.79      5.03          25.3372           0.65
     9             6531                21.95        17.33      4.62          21.3484           0.60
    24            17073                33.60        37.83     -4.23          17.8580          -0.55
     5             1119                 2.85         6.81     -3.96          15.6600          -0.51
    20             1158                 3.22         6.88     -3.66          13.4183          -0.47
    25            11336                23.36        26.67     -3.31          10.9674          -0.43
     6                0                 1.41         4.63     -3.22          10.3791          -0.42
    21                0                 1.43         4.63     -3.20          10.2506          -0.41
     7                0                 1.45         4.63     -3.18          10.1229          -0.41
    14                1                 1.62         4.63     -3.01           9.0818          -0.39
    18            13728                28.34        31.32     -2.98           8.8944          -0.39
    17            14271                29.86        32.38     -2.52           6.3407          -0.33
    10            14086                29.55        32.02     -2.47           6.0930          -0.32
    13              454                 3.26         5.51     -2.25           5.0821          -0.29
     2            13142                32.43        30.18      2.25           5.0489           0.29
    12            13141                28.08        30.18     -2.10           4.4145          -0.27
    28                0                 2.62         4.63     -2.01           4.0468          -0.26
    31            12827                28.11        29.57     -1.46           2.1333          -0.19
    11            12797                30.04        29.51      0.53           0.2785           0.07
    30            12803                29.19        29.52     -0.33           0.1115          -0.04

Sum 0.0 0.0 0.0
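The standardization step can be sketched in Python as shown below. This is only an illustration: the paper does not say whether the sample or the population standard deviation was used, so the sketch assumes the sample standard deviation (n - 1 denominator), and the name standardize is hypothetical.

```python
import statistics


def standardize(residuals):
    """Return (r - mean) / sd for each residual r.

    Assumption: sd is the sample standard deviation (n - 1 denominator).
    """
    mean = statistics.mean(residuals)
    sd = statistics.stdev(residuals)
    if sd == 0:
        raise ValueError("all residuals are identical; nothing to standardize")
    return [(r - mean) / sd for r in residuals]


# Toy residuals (not from the paper) that already sum to zero.
toy_residuals = [-3.0, -1.0, 0.0, 1.0, 3.0]
z = standardize(toy_residuals)
print([round(value, 2) for value in z])
```

After standardization the values have mean zero and standard deviation one, which is what makes a fixed threshold such as 3 meaningful across data sets.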


After calculating the standardized residuals, plot them against the estimated values of the response variable. This is known as a standardized residual plot. The motive behind plotting standardized residuals is to check the normality* of the residuals. Most of the standardized residuals should be close to zero, and as we move away from zero the frequency of the residuals should decrease. Further, a standardized residual at or beyond -3 or +3 is not acceptable (due to the Empirical Rule of standard scores). Such points should be considered Outliers and investigated further.

Secondly, residuals should occur at random; they shouldn't follow a pattern. A pattern in the residual plot implies that the regression line may not fit the data well.

* Note: Normality and the empirical rule are covered in another document of mine titled 'Statistics basics for forecasting'.

An alternative way of detecting Outliers is to square the residuals and sort the data set in descending order of the squared residual. This brings all the data points with large residuals (positive or negative) to the top. Further investigation of these data points will tell us whether we should exclude them from our analysis.
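The squared-residual ranking described above might look like this in Python. The sketch uses four rows copied from the residual table (sample number, CPU utilization, estimated Y); the function name is hypothetical.

```python
def rank_by_squared_residual(samples):
    """Sort samples by squared residual, largest first.

    samples: iterable of (sample_id, observed_y, estimated_y) tuples.
    Returns a list of (sample_id, residual, squared_residual) tuples.
    """
    rows = []
    for sample_id, observed, estimated in samples:
        residual = observed - estimated
        rows.append((sample_id, residual, residual ** 2))
    return sorted(rows, key=lambda row: row[2], reverse=True)


# Four rows copied from the residual table: (sample, CPU utilization, estimated Y).
samples = [
    (11, 30.04, 29.51),
    (27, 4.31, 26.66),
    (30, 29.19, 29.52),
    (8, 46.38, 29.42),
]
ranked = rank_by_squared_residual(samples)
for sample_id, residual, squared in ranked:
    print(sample_id, round(residual, 2), round(squared, 2))
```

Squaring removes the sign, so large overestimates and large underestimates both rise to the top of the list; here samples 27 and 8 come first.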

Figure 3: Standardized residual plots (x-axis: Estimated Y values (CPU Utilization); y-axis: Standardized Residual), (a) before Outliers removal and (b) after Outliers removal

From the above table and Figure 3(a), we can see that sample numbers 27 and 8 can be treated as Outliers: the absolute values of their standardized residuals are greater than 2.5, and their squared residuals are very high compared to the other data points. Now it's time to investigate the reason for these observations.

In this particular case, I found that sample number 8 was collected on a Sunday, when some statistics-gathering processes were running, which resulted in high CPU utilization. That's why we have a positive residual (we underestimated CPU utilization). Since this is not part of the standard business workload, we can safely remove this data point from our data set after proper documentation. Digging further into the details for sample number 27, I found that there was no significant CPU utilization at any time during the day. The overall workload was low, so even though 11000+ order lines were served, the resulting average CPU utilization was only about 4%. We need to discuss the reason for the low workload with the system specialist. It may be due to a skewness effect in the CPU utilization data (CPU utilization is not evenly distributed throughout the day). We can avoid the skewness effect by increasing the data collection frequency (in this particular case, to once per hour, for example). It should be noted that, while increasing the data collection frequency will eliminate the skewness problem, it may result in high resource consumption on production servers. However, an optimized data collection process can save precious resources (like memory and CPU cycles).

Proper Outlier detection and removal is a very important step in regression analysis, since Outliers can affect the regression formula and make the overall forecasting process less precise.

In our example, after removing sample numbers 27 and 8 from our analysis, the correlation coefficient (r) increases to 0.884 and r² = 0.782. This means that 78.2% of the variation in CPU utilization can now be explained by order lines/day. Compare these values with the previous ones, r = 0.818 and r² = 0.669.

Figure 4 shows the scatter plot of the data set without the Outliers. Clearly, the regression line fits better without the Outliers, with more data points close to the line.

Figure 4: Scatter plot of CPU Utilization vs. Order Lines/day after Outliers removal

Avoiding Extrapolation

In linear regression, we end up with the equation of a straight line that best fits our data. We substitute a value for x and get a predicted value for y. Plugging in x values that fall outside a reasonable bound is known as extrapolation. We should avoid extrapolation in linear regression; if we extrapolate, the results are likely to be wrong. This can be considered the main drawback of linear regression: it can forecast only over the range in which the relation is linear, because system performance is not linear in general. For example, in our case, an increase in the number of order lines increases host CPU utilization. At around 70-75% CPU utilization, queueing behavior comes into the picture, and the relationship no longer remains linear beyond that point. Thus we can forecast through Linear Regression only over the range in which x and y are linearly related. In our case, the minimum and maximum observed values of order lines per day are 0 and 17073 respectively. Forecasting for order lines/day between 0 and 17073 is reasonable, but forecasting beyond these limits (values greater than 17073) isn't a good idea. We can't be sure that the same linear relationship between order lines/day and CPU utilization will hold beyond 17073 order lines/day.

So the bottom line is: never forecast in nonlinear areas. For the CPU subsystem, this limit is around 75% utilization, and for the I/O subsystem it is around 65%.
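One way to enforce these limits in practice is to wrap the forecast in a guard that refuses to extrapolate. The Python sketch below is an assumption-laden illustration, not part of the original paper: the function name and its parameters are hypothetical, the coefficients are those of the trend line fitted after Outlier removal (y = 0.001940x + 4.825653), and the 75% CPU ceiling is the rule of thumb quoted above.

```python
def forecast_cpu(order_lines, slope, intercept, x_min, x_max, cpu_limit=75.0):
    """Forecast CPU utilization (%), refusing to extrapolate.

    Raises ValueError if order_lines lies outside the observed range
    [x_min, x_max] or if the estimate exceeds cpu_limit, where the
    relation is assumed to stop being linear.
    """
    if not (x_min <= order_lines <= x_max):
        raise ValueError(
            f"{order_lines} is outside the observed range [{x_min}, {x_max}]"
        )
    estimate = slope * order_lines + intercept
    if estimate > cpu_limit:
        raise ValueError(
            f"estimate {estimate:.1f}% exceeds the {cpu_limit}% linearity limit"
        )
    return estimate


# Trend line after Outlier removal and the observed range of order lines/day.
SLOPE, INTERCEPT = 0.001940, 4.825653
X_MIN, X_MAX = 0, 17073

print(round(forecast_cpu(15000, SLOPE, INTERCEPT, X_MIN, X_MAX), 2))  # 33.93
try:
    forecast_cpu(20000, SLOPE, INTERCEPT, X_MIN, X_MAX)
except ValueError as err:
    print("refused:", err)
```

Raising an error rather than returning a number makes it harder for an out-of-range forecast to slip unnoticed into a capacity report.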

Forecasting in the absence of a single strongly correlated variable

In our example, we found that order lines/day is correlated with CPU utilization and, after Outlier removal, explains 78.2% of the variation in CPU utilization (a correlation coefficient of 0.884 is strong).

Figure 4 (chart details): Scatter Plot (CPU utilization vs Order Lines/day); x-axis: Order Lines/day (X); y-axis: CPU Utilization (Y); trend line y = 0.001940x + 4.825653, R² = 0.781843

We need to collect various business workload metrics and check which one correlates most strongly with the response variable. Identifying such metrics is sometimes frustrating and may fail. A good approach is to discuss the system's behavior with everyone concerned (business/system specialists, application engineers, etc.), as they know the business and its impact on the system workload best. It's always better to end the discussion with a good set of identified metrics; no one wants to arrange another meeting because the initial metrics turned out not to be correlated with the response variable.

There may be cases in which no single metric is strongly correlated with the response variable. We can then select a set of variables, each of which is moderately correlated with the response variable. A statistical regression approach in which we predict the response variable on the basis of multiple predictor variables is known as Multiple Regression Analysis. Discussion of that approach is beyond the scope of this paper; I have a separate document that explains the concept behind multiple regression.

Linear Regression Functions in Oracle

Oracle has built-in linear regression functions that use the least squares method for regression coefficient estimation. Each function takes the dependent variable (Y) as its first argument and the independent variable (X) as its second.

The functions are as follows:

REGR_COUNT: Returns the number of non-null number pairs used to fit the regression line.

REGR_AVGY and REGR_AVGX: Compute the averages of the dependent variable and the independent variable of the regression line, respectively. REGR_AVGY computes the average of its first argument (dependent variable Y) after eliminating number pairs where either of the variables is null. Similarly, REGR_AVGX computes the average of its second argument (independent variable X) after null elimination.

REGR_SLOPE and REGR_INTERCEPT: REGR_SLOPE computes the slope of the regression line fitted to the non-null number pairs. REGR_INTERCEPT computes the y-intercept of the regression line.

REGR_R2: Computes the coefficient of determination (usually called "R-squared" or "goodness of fit") for the regression line.
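To make the null-elimination behaviour concrete, here is a rough Python analogue of what these aggregates compute. It is not Oracle code and does not claim to reproduce Oracle's handling of edge cases (for example a constant X); regr_summary is a hypothetical name, and None stands in for a SQL null.

```python
def regr_summary(ys, xs):
    """Rough analogue of the REGR_* aggregates (dependent variable first).

    Pairs in which either value is None are dropped before any
    calculation, mirroring the null elimination described above.
    """
    pairs = [(x, y) for y, x in zip(ys, xs) if x is not None and y is not None]
    n = len(pairs)
    if n < 2:
        raise ValueError("need at least two non-null pairs")
    avg_x = sum(x for x, _ in pairs) / n
    avg_y = sum(y for _, y in pairs) / n
    sxx = sum((x - avg_x) ** 2 for x, _ in pairs)
    syy = sum((y - avg_y) ** 2 for _, y in pairs)
    sxy = sum((x - avg_x) * (y - avg_y) for x, y in pairs)
    if sxx == 0 or syy == 0:
        raise ValueError("this sketch does not handle a constant variable")
    slope = sxy / sxx
    return {
        "count": n,                           # cf. REGR_COUNT
        "avgx": avg_x,                        # cf. REGR_AVGX
        "avgy": avg_y,                        # cf. REGR_AVGY
        "slope": slope,                       # cf. REGR_SLOPE
        "intercept": avg_y - slope * avg_x,   # cf. REGR_INTERCEPT
        "r2": sxy ** 2 / (sxx * syy),         # cf. REGR_R2
    }


# Toy data (not from the paper): the third pair has a missing Y and is dropped.
summary = regr_summary([1, 3, None, 7], [0, 1, 2, 3])
print(summary)
```

Note that the averages are taken over the surviving pairs only, so the X value 2 in the toy data does not contribute to avgx.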

Sample code: Automating Linear Regression through Oracle functions

The following sample code can be used for linear regression analysis in Oracle performance forecasting. It is meant as an example of how to use the Oracle linear regression functions.

The sample code uses the database workload metric 'Logical Reads Per Sec' as the predictor variable. You can customize the code to use other metrics as well, such as user calls per sec or executions per sec.

Description:

Table regression_data: Stores the database technical metrics and the regression data.

Table outlier_data: Stores the outlier data. Note that outliers are deleted from regression_data and stored in this table for further investigation and documentation.

View dba_hist_sysmetric_summary: This database view is used to fetch historical AWR data.

create table regression_data(
  snap_id        number,
  begin_time     date,
  end_time       date,
  logical_reads  number,
  host_cpu       number,
  proj_cpu       number,
  residual       number,
  residual_sqr   number,
  stnd_residual  number);

create table outlier_data(
  snap_id        number,
  begin_time     date,
  end_time       date,
  logical_reads  number,
  host_cpu       number);

Table population:

-- Load the predictor (logical reads per second) for the chosen period.
insert into regression_data
  (snap_id, begin_time, end_time, logical_reads)
select v.snap_id, v.begin_time, v.end_time, v.average
  from dba_hist_sysmetric_summary v, gv$instance i
 where v.metric_name = 'Logical Reads Per Sec'
   and v.begin_time >= to_date('1-oct-08 00:00:00', 'dd-mon-yy hh24:mi:ss')
   and v.end_time <= to_date('31-oct-08 00:00:00', 'dd-mon-yy hh24:mi:ss')
   and v.instance_number = i.instance_number;

declare
  -- Average host CPU utilization for each AWR snapshot.
  cursor c2 is
    select snap_id, average
      from dba_hist_sysmetric_summary
     where metric_name = 'Host CPU Utilization (%)';
begin
  -- Attach the response variable (host CPU) to the matching snapshot.
  for table_scan in c2 loop
    update regression_data
       set host_cpu = table_scan.average
     where snap_id = table_scan.snap_id;
  end loop;
end;
/

Regression analysis code:

declare
  outlier_count number;
  intercept     number;
  slope         number;
  stnd_dev      number;
  avg_res       number;
begin
  -- Force at least one pass through the loop.
  outlier_count := 1;

  while outlier_count > 0 loop
    -- Least-squares coefficients: first argument is Y, second is X.
    select round(regr_intercept(host_cpu, logical_reads), 8),
           round(regr_slope(host_cpu, logical_reads), 8)
      into intercept, slope
      from regression_data;

    -- Projected CPU, residual (observed minus estimated) and its square.
    update regression_data
       set proj_cpu = slope * logical_reads + intercept;
    update regression_data
       set residual = host_cpu - proj_cpu;
    update regression_data
       set residual_sqr = residual * residual;

    -- Standardize the residuals.
    select round(stddev(residual), 8), round(avg(residual), 8)
      into stnd_dev, avg_res
      from regression_data;

    -- nullif avoids a divide-by-zero error when the fit is perfect.
    update regression_data
       set stnd_residual = (residual - avg_res) / nullif(stnd_dev, 0);

    select count(*)
      into outlier_count
      from regression_data
     where abs(stnd_residual) > 3;

    -- Move outliers to outlier_data, then refit on the next pass.
    if outlier_count > 0 then
      insert into outlier_data
        (snap_id, begin_time, end_time, logical_reads, host_cpu)
      select snap_id, begin_time, end_time, logical_reads, host_cpu
        from regression_data
       where abs(stnd_residual) > 3;

      delete from regression_data
       where abs(stnd_residual) > 3;
    end if;
  end loop;

  commit;
end;
/
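The iterative idea behind the PL/SQL block (fit, standardize the residuals, move points beyond the threshold aside, refit until none remain) can also be sketched outside the database. The Python below is a simplified illustration under stated assumptions, not a port of the code above: the function names are hypothetical, residuals are taken as observed minus estimated, and the sample standard deviation is used.

```python
import statistics


def fit_line(points):
    """Least-squares (slope, intercept) for a list of (x, y) points."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    if sxx == 0:
        raise ValueError("all x values are identical; the slope is undefined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def remove_outliers(points, threshold=3.0):
    """Refit repeatedly, setting aside points whose |standardized residual|
    exceeds threshold. Returns (slope, intercept, kept, removed)."""
    kept, removed = list(points), []
    while len(kept) >= 3:
        slope, intercept = fit_line(kept)
        residuals = [y - (slope * x + intercept) for x, y in kept]
        sd = statistics.stdev(residuals)
        if sd == 0:          # perfect fit: nothing left to flag
            break
        mean = statistics.mean(residuals)
        flagged = [abs((r - mean) / sd) > threshold for r in residuals]
        if not any(flagged):
            break
        removed.extend(p for p, bad in zip(kept, flagged) if bad)
        kept = [p for p, bad in zip(kept, flagged) if not bad]
    slope, intercept = fit_line(kept)
    return slope, intercept, kept, removed


# Toy data (not from the paper): 20 points on y = 2x + 1 plus one bad reading.
data = [(x, 2 * x + 1) for x in range(20)] + [(10, 121)]
slope, intercept, kept, removed = remove_outliers(data)
print(slope, intercept, removed)
```

As in the paper, the removed points are returned rather than discarded, so that each one can be investigated and documented before it is excluded for good.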

References

1. Statistics Without Tears: A Primer for Non-Mathematicians by Derek Rowntree (Macmillan Publishing Company, 1981)

2. Forecasting Oracle Performance by Craig Shallahamer (Apress, 2007)

3. Intermediate Statistics for Dummies by Deborah Rumsey (Wiley Publishing, 2007)

4. Regression Analysis by Example, fourth edition, by Samprit Chatterjee and Ali S. Hadi (John Wiley & Sons, 2006)

5. Oracle9i Data Warehousing Guide Release 2 (9.2), Part Number A96520-01

About the Author

Neeraj Bhatia is a Senior Technical Analyst at Oracle India, based in Noida, India. He has been working with Oracle databases for five years. Currently he is responsible for capacity planning for Oracle-based systems, including Oracle Database, Oracle Application Server, PeopleSoft and Oracle Applications. Prior to this, he worked as a Performance Analyst for VLDBs. When not working with Oracle, he likes to listen to music, watch movies and spend time with his family. He can be reached at: neeraj.dba@gmail.com


- TestDocument31 pagesTestMs. Vandana MohantyNo ratings yet

- Week 5 - EViews Practice NoteDocument16 pagesWeek 5 - EViews Practice NoteChip choiNo ratings yet

- كيف تجتاز امتحانات البنوك PDFDocument60 pagesكيف تجتاز امتحانات البنوك PDFwhoamiNo ratings yet

- Analyzing Performance of Moroccan Pension Plans Using Case-Type ApproachDocument21 pagesAnalyzing Performance of Moroccan Pension Plans Using Case-Type ApproachYoussef JabirNo ratings yet

- 06 Simple Linear Regression Part1Document8 pages06 Simple Linear Regression Part1Rama DulceNo ratings yet

- UNIt-3 TYDocument67 pagesUNIt-3 TYPrathmesh Mane DeshmukhNo ratings yet

- Machine LearningDocument34 pagesMachine LearningsriramasettyvijaykumarNo ratings yet

- Alumine FB 111 EnglishDocument1 pageAlumine FB 111 EnglishsvrbikkinaNo ratings yet

- Toton New Housing PlanDocument52 pagesToton New Housing PlansvrbikkinaNo ratings yet

- Regenerate Scalp Serum Fuller HairDocument1 pageRegenerate Scalp Serum Fuller HairsvrbikkinaNo ratings yet

- Vmware Hadoop Deployment GuideDocument34 pagesVmware Hadoop Deployment GuidesvrbikkinaNo ratings yet

- Regenerate Scalp Serum Fuller HairDocument1 pageRegenerate Scalp Serum Fuller HairsvrbikkinaNo ratings yet

- Alumine FB 113 EnglishDocument1 pageAlumine FB 113 EnglishsvrbikkinaNo ratings yet

- Alumine FB 110 EnglishDocument1 pageAlumine FB 110 EnglishsvrbikkinaNo ratings yet

- Alumine FB 106 107 EnglishDocument1 pageAlumine FB 106 107 EnglishsvrbikkinaNo ratings yet

- Alumine FB 112 EnglishDocument1 pageAlumine FB 112 EnglishsvrbikkinaNo ratings yet

- Bose LS35II GuideDocument46 pagesBose LS35II GuidesvrbikkinaNo ratings yet

- Alumine FB 114 EnglishDocument1 pageAlumine FB 114 EnglishsvrbikkinaNo ratings yet

- Alumine FB 115 EnglishDocument1 pageAlumine FB 115 EnglishsvrbikkinaNo ratings yet

- Detoxifying Citrus C Facial Cleanser: Cleans, Tones and DetoxifiesDocument1 pageDetoxifying Citrus C Facial Cleanser: Cleans, Tones and DetoxifiessvrbikkinaNo ratings yet

- ACN PresentationDocument2 pagesACN PresentationsvrbikkinaNo ratings yet

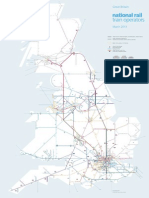

- National Rail Network Map ZoomDocument1 pageNational Rail Network Map ZoomsvrbikkinaNo ratings yet

- How Do You Configure Transaction Timeout For BPEL On SOA 11gDocument2 pagesHow Do You Configure Transaction Timeout For BPEL On SOA 11gsvrbikkinaNo ratings yet

- Broadband Pricelist UKDocument3 pagesBroadband Pricelist UKsvrbikkinaNo ratings yet

- Scottish Power Business in Voic ExplainedDocument20 pagesScottish Power Business in Voic ExplainedsvrbikkinaNo ratings yet

- 0509 FOSSIL BW 70 Upgrade To BW 73 Success Story and Lessons Learnt For Customers Looking To UpgradeDocument22 pages0509 FOSSIL BW 70 Upgrade To BW 73 Success Story and Lessons Learnt For Customers Looking To UpgradeManoj KumarNo ratings yet

- DAMA-Financial Management Reference ModelDocument6 pagesDAMA-Financial Management Reference ModelsvrbikkinaNo ratings yet

- UK Visas & Immigration ContactDocument3 pagesUK Visas & Immigration ContactsvrbikkinaNo ratings yet

- SAP Testing ContentDocument2 pagesSAP Testing ContentsvrbikkinaNo ratings yet

- ForecastingDocument30 pagesForecastingp_nayaksNo ratings yet

- SAP Testing ContentDocument2 pagesSAP Testing ContentsvrbikkinaNo ratings yet

- Change Run Failed Because of The RollupsDocument2 pagesChange Run Failed Because of The RollupssvrbikkinaNo ratings yet

- SAP BW Demo - AllDocument14 pagesSAP BW Demo - AllsvrbikkinaNo ratings yet

- Change Run Failed Because of The RollupsDocument2 pagesChange Run Failed Because of The RollupssvrbikkinaNo ratings yet

- Sapbpcnw10 0consolidationsownershipandminorityinterestcalculationsv2 120610223124 Phpapp02Document65 pagesSapbpcnw10 0consolidationsownershipandminorityinterestcalculationsv2 120610223124 Phpapp02svrbikkinaNo ratings yet

- Owners Guide Lifestyle 135 235 System V25 V35 System Lifestyle T10 T20 System AM342774 00 Tcm6 36063Document34 pagesOwners Guide Lifestyle 135 235 System V25 V35 System Lifestyle T10 T20 System AM342774 00 Tcm6 36063svrbikkinaNo ratings yet
