Package BayesTree

August 29, 2013

Version 0.3-1.1

Date 2009-5-23

Title Bayesian Methods for Tree Based Models

Author Hugh Chipman <hugh.chipman@gmail.com>, Robert McCulloch <robert.mcculloch1@gmail.com>

Maintainer Robert McCulloch <robert.mcculloch1@gmail.com>

Depends nnet

Description Implementation of BART: Bayesian Additive Regression Trees, Chipman, George, McCulloch (2009)

License GPL (>= 2)

URL http://www.r-project.org, http://www.rob-mcculloch.org

Repository CRAN

Date/Publication 2010-05-07 16:10:16

NeedsCompilation yes

R topics documented:

bart

makeind

pdbart

Index

bart Bayesian Additive Regression Trees

Description

BART is a Bayesian sum-of-trees model.

For numeric response y, we have $y = f(x) + \epsilon$, where $\epsilon \sim N(0, \sigma^2)$.

For a binary response y, P(Y = 1|x) = F(f(x)), where F denotes the standard normal cdf (probit

link).

In both cases, f is the sum of many tree models. The goal is to have very flexible inference for the unknown function f.

In the spirit of ensemble models, each tree is constrained by a prior to be a weak learner so that it contributes a small amount to the overall fit.

Usage

bart(
   x.train, y.train, x.test=matrix(0.0,0,0),
   sigest=NA, sigdf=3, sigquant=.90,
   k=2.0,
   power=2.0, base=.95,
   binaryOffset=0,
   ntree=200,
   ndpost=1000, nskip=100,
   printevery=100, keepevery=1, keeptrainfits=TRUE,
   usequants=FALSE, numcut=100, printcutoffs=0,
   verbose=TRUE)

## S3 method for class 'bart'
plot(
   x,
   plquants=c(.05,.95), cols=c('blue','black'),
   ...)

Arguments

x.train Explanatory variables for training (in sample) data.

May be a matrix or a data frame, with (as usual) rows corresponding to observations and columns to variables.

If a variable is a factor in a data frame, it is replaced with dummies. Note that q dummies are created if q>2 and one dummy is created if q=2, where q is the number of levels of the factor. makeind is used to generate the dummies. bart will generate draws of f(x) for each x which is a row of x.train.

y.train Dependent variable for training (in sample) data.

If y is numeric, a continuous response model is fit (normal errors).

If y is a factor (or just has values 0 and 1) then a binary response model with a probit link is fit.

x.test Explanatory variables for test (out of sample) data.

Should have same structure as x.train.

bart will generate draws of f(x) for each x which is a row of x.test.

sigest The prior for the error variance ($\sigma^2$) is inverted chi-squared (the standard conditionally conjugate prior). The prior is specified by choosing the degrees of freedom, a rough estimate of the corresponding standard deviation, and a quantile at which to place this rough estimate. If sigest=NA then the rough estimate will be the usual least squares estimator. Otherwise the supplied value will be used. Not used if y is binary.

sigdf Degrees of freedom for error variance prior. Not used if y is binary.

sigquant The quantile of the prior that the rough estimate (see sigest) is placed at. The closer the quantile is to 1, the more aggressive the fit will be, as you are putting more prior weight on error standard deviations ($\sigma$) less than the rough estimate. Not used if y is binary.

k For numeric y, k is the number of prior standard deviations E(Y|x) = f(x) is away from +/-.5. The response (y.train) is internally scaled to range from -.5 to .5. For binary y, k is the number of prior standard deviations f(x) is away from +/-3. In both cases, the bigger k is, the more conservative the fitting will be.

power Power parameter for tree prior.

base Base parameter for tree prior.

binaryOffset Used for binary y.

The model is P(Y = 1|x) = F(f(x) + binaryOffset).

The idea is that f is shrunk towards 0, so the offset allows you to shrink towards

a probability other than .5.

ntree The number of trees in the sum.

ndpost The number of posterior draws after burn-in; ndpost/keepevery draws will actually be returned.

nskip Number of MCMC iterations to be treated as burn in.

printevery As the MCMC runs, a message is printed every printevery draws.

keepevery Every keepevery-th draw is kept to be returned to the user.

A draw will consist of values of the error standard deviation ($\sigma$) and $f^*(x)$ at x = rows from the train (optionally) and test data, where $f^*$ denotes the current draw of f.

keeptrainfits If true the draws of f(x) for x = rows of x.train are returned.

usequants Decision rules in the tree are of the form $x \le c$ vs. $x > c$ for each variable corresponding to a column of x.train. usequants determines how the set of possible c is determined. If usequants is true, then the c are a subset of the values (xs[i]+xs[i+1])/2 where xs is the vector of unique sorted values obtained from the corresponding column of x.train. If usequants is false, the cutoffs are equally spaced across the range of values taken on by the corresponding column of x.train.

numcut The number of possible values of c (see usequants). If a single number is given, this is used for all variables. Otherwise a vector with length equal to ncol(x.train) is required, where the $i^{th}$ element gives the number of c used for the $i^{th}$ variable in x.train. If usequants is false, numcut equally spaced cutoffs are used covering the range of values in the corresponding column of x.train. If usequants is true, then min(numcut, the number of unique values in the corresponding column of x.train - 1) c values are used.

printcutoffs The number of cutoff rules c to be printed to screen before the MCMC is run. Given a single integer, the same value will be used for all variables. If 0, nothing is printed.

verbose Logical, if FALSE suppress printing.

x Value returned by bart which contains the information to be plotted.

plquants In the plots, beliefs about f(x) are indicated by plotting the posterior median

and a lower and upper quantile. plquants is a double vector of length two giving

the lower and upper quantiles.

cols Vector of two colors. First color is used to plot the median of f(x) and the

second color is used to plot the lower and upper quantiles.

... Additional arguments passed on to plot.
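The sigest, sigdf and sigquant entries above describe a calibration: pick the prior scale so that the rough estimate sits at the chosen prior quantile of $\sigma$. The following is a minimal base R sketch of that idea, assuming the prior has the scaled form $\sigma^2 \sim \nu\lambda/\chi^2_\nu$. The function name calibrate_sigma_prior and the scale lambda are illustrative names and are not part of the BayesTree interface.

```r
# Sketch only: calibrate a scaled inverse chi-squared prior,
#   sigma^2 ~ nu * lambda / chisq(nu),
# so that P(sigma < sigest) = sigquant under the prior.
# `calibrate_sigma_prior` and `lambda` are hypothetical names.
calibrate_sigma_prior <- function(sigest, sigdf = 3, sigquant = 0.90) {
  # P(sigma^2 < sigest^2) = P(chisq(nu) > nu * lambda / sigest^2) = sigquant
  lambda <- sigest^2 * qchisq(1 - sigquant, df = sigdf) / sigdf
  list(nu = sigdf, lambda = lambda)
}

sigest <- 1.5
prior <- calibrate_sigma_prior(sigest)

# Check by simulation: the share of prior draws of sigma below sigest
# should be close to sigquant.
set.seed(1)
sigma_draws <- sqrt(prior$nu * prior$lambda / rchisq(1e5, df = prior$nu))
prior_mass_below <- mean(sigma_draws < sigest)
```

Raising sigquant towards 1 shrinks lambda, which concentrates the prior on values of $\sigma$ below the rough estimate, in line with the "more aggressive" behaviour described for sigquant.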

Details

BART is a Bayesian MCMC method. At each MCMC iteration, we produce a draw from the joint posterior $(f, \sigma) | (x, y)$ in the numeric y case and just f in the binary y case.

Thus, unlike a lot of other modelling methods in R, we do not produce a single model object from which fits and summaries may be extracted. The output consists of values $f^*(x)$ (and $\sigma^*$ in the numeric case) where * denotes a particular draw. The x is either a row from the training data (x.train) or the test data (x.test).
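Because the output is a collection of draws rather than a fitted model object, point estimates and intervals are computed by summarising over draws. The sketch below uses a simulated matrix as a stand-in for a draws matrix such as yhat.train (rows are kept draws, columns are observations); the object names draws, true_f and summary_tab are illustrative only.

```r
# Stand-in for a bart draws matrix: 200 kept draws of f*(x) at 5 rows of x.
# matrix() fills by column, so each column gets its own mean.
set.seed(42)
n_draws <- 200
true_f <- c(-1, 0, 0.5, 2, 3)
draws <- matrix(rnorm(n_draws * 5, mean = rep(true_f, each = n_draws), sd = 0.3),
                nrow = n_draws, ncol = 5)

# Posterior mean per observation (the analogue of yhat.train.mean).
post_mean <- colMeans(draws)

# 5% and 95% posterior quantiles per observation: a 2 x 5 matrix.
post_int <- apply(draws, 2, quantile, probs = c(0.05, 0.95))

summary_tab <- data.frame(mean = post_mean,
                          lower = post_int[1, ],
                          upper = post_int[2, ])
```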

Value

The plot method sets mfrow to c(1,2) and makes two plots.

The first plot is the sequence of kept draws of $\sigma$ including the burn-in draws. Initially these draws will decline as BART finds a fit and then level off when the MCMC has burnt in.

The second plot has y on the horizontal axis and posterior intervals for the corresponding f(x) on

the vertical axis.

bart returns a list assigned class bart. In the numeric y case, the list has components:

yhat.train A matrix with (ndpost/keepevery) rows and nrow(x.train) columns. Each row corresponds to a draw $f^*$ from the posterior of f and each column corresponds to a row of x.train. The (i, j) value is $f^*(x)$ for the $i^{th}$ kept draw of f and the $j^{th}$ row of x.train.

Burn-in is dropped.

yhat.test Same as yhat.train but now the xs are the rows of the test data.

yhat.train.mean train data fits = mean of yhat.train columns.

yhat.test.mean test data fits = mean of yhat.test columns.

sigma post burn in draws of sigma, length = ndpost/keepevery.

first.sigma burn-in draws of sigma.

varcount A matrix with (ndpost/keepevery) rows and ncol(x.train) columns. Each row is for a draw. For each variable (corresponding to the columns), the total count of the number of times that variable is used in a tree decision rule (over all trees) is given.

sigest The rough error standard deviation ($\sigma$) used in the prior.

y The input vector of values for the dependent variable.

This is used in plot.bart.

In the binary y case, the returned list has the components yhat.train, yhat.test, and varcount as above.

In addition the list has a binaryOffset component giving the value used.

Note that in the binary y case, yhat.train and yhat.test are f(x) + binaryOffset. If you want draws of the probability P(Y = 1|x) you need to apply the normal cdf (pnorm) to these values.
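As a small illustration of the conversion described above, the sketch below applies pnorm to a simulated stand-in for yhat.test in the binary case. The matrix latent and the other object names are illustrative; only pnorm, colMeans, apply and quantile are real base R functions.

```r
# Stand-in for yhat.test in the binary case: 300 draws of
# f(x) + binaryOffset at 4 test rows (one column per row of x.test).
set.seed(7)
latent <- matrix(rnorm(300 * 4, mean = rep(c(-2, -0.5, 0.5, 2), each = 300), sd = 0.4),
                 nrow = 300)

# pnorm is applied elementwise, so each draw becomes a draw of P(Y = 1 | x).
prob_draws <- pnorm(latent)

# Summarise on the probability scale: average the transformed draws
# rather than transforming the averaged latent values.
prob_mean <- colMeans(prob_draws)
prob_int <- apply(prob_draws, 2, quantile, probs = c(0.05, 0.95))
```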

Note

There was a bug in BayesTree_0.1-0 (now fixed, of course).

If the number of test observations was less than the number of trees (200 is the default), the yhat.test

and yhat.test.mean components were suspect.

Author(s)

Hugh Chipman: <hugh.chipman@gmail.com>

Robert McCulloch: <robert.mcculloch1@gmail.com>.

References

Chipman, H., George, E., and McCulloch, R. (2009) BART: Bayesian Additive Regression Trees.

Chipman, H., George, E., and McCulloch, R. (2006) Bayesian Ensemble Learning. Advances in Neural Information Processing Systems 19, Scholkopf, Platt and Hoffman, Eds., MIT Press, Cambridge, MA, 265-272.

Both of the above at: http://www.rob-mcculloch.org

Friedman, J.H. (1991) Multivariate adaptive regression splines. The Annals of Statistics, 19, 1-67.

See Also

pdbart

Examples

library(BayesTree)
##simulate data (example from Friedman MARS paper)
f = function(x){
10*sin(pi*x[,1]*x[,2]) + 20*(x[,3]-.5)^2+10*x[,4]+5*x[,5]
}
sigma = 1.0 #y = f(x) + sigma*z , z~N(0,1)
n = 100 #number of observations
set.seed(99)
x=matrix(runif(n*10),n,10) #10 variables, only first 5 matter
Ey = f(x)
y=Ey+sigma*rnorm(n)
lmFit = lm(y~.,data.frame(x,y)) #compare lm fit to BART later
##run BART
set.seed(99)
bartFit = bart(x,y)
plot(bartFit) # plot bart fit
##compare BART fit to linear model and truth = Ey
fitmat = cbind(y,Ey,lmFit$fitted,bartFit$yhat.train.mean)
colnames(fitmat) = c('y','Ey','lm','bart')
print(cor(fitmat))

makeind Build x matrix from x data frame (convert factors to dummies)

Description

Converts factors to dummies.

Note that with more than two levels, BART needs a dummy for each level of a factor (unlike in linear regression where one of the dummies is dropped).

Usage

makeind(

x,

all=TRUE)

Arguments

x Data frame of explanatory variables.

all If all=TRUE, a factor with p levels will be replaced by all p dummies. If

all=FALSE, the pth dummy is dropped.

Details

Uses function class.ind from the nnet library. Note that if you have train and test data frames, it may

be best to rbind the two together, apply makeind to the result, and then pull them back apart.
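The advice to rbind train and test data before creating dummies matters when a factor shows different levels in the two data frames. The sketch below illustrates this in base R. The helper all_dummies is a hypothetical stand-in written for this example (it is not makeind, and it keeps columns in their original order), and the data frames train and test are made up.

```r
# Hypothetical stand-in for a dummy-coding helper: one 0/1 column per
# factor level, numeric columns passed through unchanged.
all_dummies <- function(df) {
  cols <- lapply(names(df), function(nm) {
    v <- df[[nm]]
    if (is.factor(v)) {
      m <- sapply(levels(v), function(l) as.numeric(v == l))
      matrix(m, nrow = length(v),
             dimnames = list(NULL, paste0(nm, ".", levels(v))))
    } else {
      matrix(v, ncol = 1, dimnames = list(NULL, nm))
    }
  })
  do.call(cbind, cols)
}

train <- data.frame(x1 = c(1.2, 0.7, 3.1), g = factor(c("a", "b", "a")))
test  <- data.frame(x1 = c(2.0, 0.1),      g = factor(c("b", "c")))

# Coded separately, the two matrices have different columns.
cols_train_alone <- colnames(all_dummies(train))  # x1, g.a, g.b
cols_test_alone  <- colnames(all_dummies(test))   # x1, g.b, g.c

# rbind merges the factor levels, so coding the stacked data gives one
# consistent set of columns; then split the rows back apart.
both <- rbind(train, test)
x_all <- all_dummies(both)
x_train <- x_all[seq_len(nrow(train)), , drop = FALSE]
x_test  <- x_all[nrow(train) + seq_len(nrow(test)), , drop = FALSE]
```

With the stacked approach, x_train and x_test share the same columns, which is what "same structure as x.train" requires for x.test.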

Value

A matrix.

Numerical variables come first, and then the appended dummies.

Author(s)

Hugh Chipman: <hugh.chipman@acadiau.ca>

Robert McCulloch: <robert.mcculloch@chicagogsb.edu>.

See Also

bart


Examples

x1 = 1:10
x2 = as.factor(c(rep(1,5),rep(2,5)))
x3 = as.factor(c(1,1,1,2,2,2,4,4,4,4))
levels(x3) = c('rob','hugh','ed')
x = data.frame(x1,x2,x3)
junk = makeind(x)

pdbart Partial Dependence Plots for BART

Description

Run bart at test observations constructed so that a plot can be created displaying the effect of a single variable (pdbart) or pair of variables (pd2bart). Note that if y is binary with P(Y = 1|x) = F(f(x)), with F the standard normal cdf, then the plots are all on the f scale.

Usage

pdbart(
   x.train, y.train,
   xind=1:ncol(x.train), levs=NULL, levquants=c(.05,(1:9)/10,.95),
   pl=TRUE, plquants=c(.05,.95), ...)

## S3 method for class 'pdbart'
plot(
   x,
   xind = 1:length(x$fd),
   plquants=c(.05,.95), cols=c('black','blue'), ...)

pd2bart(
   x.train, y.train,
   xind=1:2, levs=NULL, levquants=c(.05,(1:9)/10,.95),
   pl=TRUE, plquants=c(.05,.95), ...)

## S3 method for class 'pd2bart'
plot(
   x,
   plquants=c(.05,.95), contour.color='white',
   justmedian=TRUE, ...)

Arguments

x.train Explanatory variables for training (in sample) data.

Must be a matrix (typeof double) with (as usual) rows corresponding to observations and columns to variables.

Note that for a categorical variable you need to use dummies and, if there are more than two categories, you need to put all the dummies in (unlike linear regression).

y.train Dependent variable for training (in sample) data.

Must be a vector (typeof double) with length equal to the number of observations

(equal to the number of rows of x.train).

xind Integer vector indicating which variables are to be plotted.

In pdbart, variables (columns of x.train) for which a plot is to be constructed.

In plot.pdbart, indices in the list returned by pdbart for which a plot is to be constructed.

In pd2bart, integer vector of length 2, indicating the pair of variables (columns

of x.train) to plot.

levs Gives the values of a variable at which the plot is to be constructed.

List, where the $i^{th}$ component gives the values for the $i^{th}$ variable.

In pdbart, should have same length as xind.

In pd2bart, should have length 2.

See also argument levquants.

levquants If levs is NULL, the values of each variable used in the plot are set to the quantiles (in x.train) indicated by levquants.

Double vector.

pl For pdbart and pd2bart, if true, plot is made (by calling plot.*).

plquants In the plots, beliefs about f(x) are indicated by plotting the posterior median

and a lower and upper quantile. plquants is a double vector of length two giving

the lower and upper quantiles.

... Additional arguments.

In pdbart,pd2bart, passed on to bart.

In plot.pdbart, passed on to plot.

In plot.pd2bart, passed on to image.

x For plot.*, object returned from pdbart or pd2bart.

cols Vector of two colors.

First color is for median of f, second color is for the upper and lower quantiles.

contour.color Color for contours plotted on top of the image.

justmedian Boolean, if true just one plot is created for the median of f(x) draws. If false,

three plots are created one for the median and two additional ones for the lower

and upper quantiles. In this case, mfrow is set to c(1,3).

Details

We divide the predictor vector x into a subgroup of interest, $x_s$, and the complement $x_c = x \setminus x_s$. A prediction f(x) can then be written as $f(x_s, x_c)$. To estimate the effect of $x_s$ on the prediction, Friedman suggests the partial dependence function

$$f_s(x_s) = \frac{1}{n} \sum_{i=1}^{n} f(x_s, x_{ic})$$

where $x_{ic}$ is the $i^{th}$ observation of $x_c$ in the data. Note that $(x_s, x_{ic})$ will generally not be one of the observed data points. Using BART it is straightforward to then estimate and even obtain uncertainty bounds for $f_s(x_s)$. A draw of $f_s^*(x_s)$ from the induced BART posterior on $f_s(x_s)$ is obtained by simply computing $f_s^*(x_s)$ as a byproduct of each MCMC draw $f^*$. The median (or average) of these MCMC draws $f_s^*(x_s)$ then yields an estimate of $f_s(x_s)$, and lower and upper quantiles can be used to obtain intervals for $f_s(x_s)$.
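The partial dependence function can be sketched in a few lines of base R for any prediction function. This is an illustration of the averaging formula only, not the code used inside pdbart; the helper name partial_dependence and its arguments are assumptions made for this example, and f here is a known function rather than a BART draw.

```r
# Sketch: partial dependence of f on column s of x, evaluated at each
# value in levs. For each value, x_s is overwritten in every row while
# the observed x_c values are kept, and the predictions are averaged.
partial_dependence <- function(f, x, s, levs) {
  sapply(levs, function(v) {
    x_mod <- x
    x_mod[, s] <- v
    mean(f(x_mod))
  })
}

# Known test function so the result can be checked by hand.
f <- function(x) 0.5 * x[, 1] + 2 * x[, 2] * x[, 3]
set.seed(27)
x <- matrix(2 * runif(100 * 3) - 1, ncol = 3)
levs <- seq(-1, 1, by = 0.5)

pd <- partial_dependence(f, x, s = 1, levs = levs)

# For this f, averaging over x_c leaves 0.5 * x_s plus a constant.
expected <- 0.5 * levs + 2 * mean(x[, 2] * x[, 3])
```

Applying the same helper to each posterior draw of f, and then taking the median and quantiles across draws at each level, would give the estimate and intervals described above.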

In pdbart $x_s$ consists of a single variable in x and in pd2bart it is a pair of variables.

This is a computationally intensive procedure. For example, in pdbart, to compute the partial dependence plot for 5 $x_s$ values, we need to compute $f(x_s, x_c)$ for all possible $(x_s, x_{ic})$ and there would be 5n of these where n is the sample size. All of that computation would be done for each kept BART draw. For this reason running BART with keepevery larger than 1 (e.g. 10) makes the procedure much faster.
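The cost argument above is simple arithmetic, sketched here in base R. The helper pd_cost is a hypothetical name, and the count assumes that the number of kept draws is ndpost/keepevery, as stated for the ndpost argument of bart.

```r
# Sketch: number of f evaluations needed for one partial dependence plot.
# n        = sample size
# n_levels = number of x_s values at which the plot is evaluated
pd_cost <- function(n, n_levels, ndpost, keepevery) {
  kept <- ndpost %/% keepevery
  c(kept_draws = kept,
    evals_per_draw = n_levels * n,
    total_evals = kept * n_levels * n)
}

cost_keep1  <- pd_cost(n = 100, n_levels = 5, ndpost = 1000, keepevery = 1)
cost_keep10 <- pd_cost(n = 100, n_levels = 5, ndpost = 1000, keepevery = 10)
```

With these illustrative numbers, keepevery = 10 reduces the evaluations for the plot by a factor of 10, at the price of summarising fewer draws.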

Value

The plot methods produce the plots and do not return anything.

pdbart and pd2bart return lists with components given below. The list returned by pdbart is

assigned class pdbart and the list returned by pd2bart is assigned class pd2bart.

fd A matrix whose (i, j) value is the $i^{th}$ draw of $f_s(x_s)$ for the $j^{th}$ value of $x_s$. "fd" is for "function draws".

For pdbart fd is actually a list whose $k^{th}$ component is the matrix described above corresponding to the $k^{th}$ variable chosen by argument xind. The number of columns in each matrix will equal the number of values given in the corresponding component of argument levs (or number of values in levquants).

For pd2bart, fd is a single matrix. The columns correspond to all possible pairs of values for the pair of variables indicated by xind. That is, all possible $(x_i, x_j)$ where $x_i$ is a value in the levs component corresponding to the first x and $x_j$ is a value in the levs component corresponding to the second one. The first x changes first.

levs The list of levels used, each component corresponding to a variable.

If argument levs was supplied it is unchanged.

Otherwise, the levels in levs are as constructed using argument levquants.

xlbs vector of character strings which are the plotting labels used for the variables.
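The column ordering of fd in the pd2bart case ("the first x changes first") matches the row order produced by base R's expand.grid, which makes it easy to reshape per-column summaries into a surface. The sketch below uses a simulated stand-in for fd; the object names and the level values are illustrative.

```r
# Two illustrative sets of levels, as in the levs component.
levs <- list(c(-1, 0, 1), c(10, 20))

# expand.grid varies its first argument fastest, i.e. the first x
# changes first, so row k of grid describes column k of fd.
grid <- expand.grid(x1 = levs[[1]], x2 = levs[[2]])

# Stand-in for fd: 50 draws for each of the 6 (x1, x2) pairs, centred
# on x1 + x2 so the reshaped result is easy to check.
set.seed(3)
fd <- matrix(rnorm(50 * nrow(grid), mean = rep(grid$x1 + grid$x2, each = 50), sd = 0.1),
             nrow = 50)

# Median over draws for each pair, then reshape: rows index the first
# variable's levels and columns index the second variable's levels.
med <- apply(fd, 2, median)
surface <- matrix(med, nrow = length(levs[[1]]), ncol = length(levs[[2]]),
                  dimnames = list(as.character(levs[[1]]), as.character(levs[[2]])))
```

A matrix in this layout can be passed, together with the two level vectors, to base R's image or contour.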

The remaining components returned in the list are the same as in the value of bart. They are simply

passed on from the BART run used to create the partial dependence plot. The function plot.bart

can be applied to the object returned by pdbart or pd2bart to examine the BART run.

Author(s)

Hugh Chipman: <hugh.chipman@acadiau.ca>.

Robert McCulloch: <robert.mcculloch@chicagogsb.edu>.

References

Chipman, H., George, E., and McCulloch, R. (2006) BART: Bayesian Additive Regression Trees.

Chipman, H., George, E., and McCulloch, R. (2006) Bayesian Ensemble Learning.

Both of the above at: http://faculty.chicagogsb.edu/robert.mcculloch/research/rob-mcculloch-cv.html

Friedman, J.H. (2001) Greedy function approximation: A gradient boosting machine. The Annals of Statistics, 29, 1189-1232.

Examples

library(BayesTree)
##simulate data
f = function(x) { return(.5*x[,1] + 2*x[,2]*x[,3]) }
sigma=.2 # y = f(x) + sigma*z
n=100 #number of observations
set.seed(27)
x = matrix(2*runif(n*3)-1,ncol=3) ; colnames(x) = c('rob','hugh','ed')
Ey = f(x)
y = Ey + sigma*rnorm(n)
lmFit = lm(y~.,data.frame(x,y)) #compare lm fit to BART later
par(mfrow=c(1,3)) #first two for pdbart, third for pd2bart
##pdbart: one dimensional partial dependence plot
set.seed(99)
pdb1 = pdbart(x,y,xind=c(1,2),
   levs=list(seq(-1,1,.2),seq(-1,1,.2)),pl=FALSE,
   keepevery=10,ntree=100)
plot(pdb1,ylim=c(-.6,.6))
##pd2bart: two dimensional partial dependence plot
set.seed(99)
pdb2 = pd2bart(x,y,xind=c(2,3),
   levquants=c(.05,.1,.25,.5,.75,.9,.95),pl=FALSE,
   ntree=100,keepevery=10,verbose=FALSE)
plot(pdb2)
##compare BART fit to linear model and truth = Ey
fitmat = cbind(y,Ey,lmFit$fitted,pdb1$yhat.train.mean)
colnames(fitmat) = c('y','Ey','lm','bart')
print(cor(fitmat))
## plot.bart(pdb1) displays the BART run used to get the plot.

Index

Topic dplot: pdbart

Topic nonlinear: bart, makeind, pdbart

Topic nonparametric: bart, makeind, pdbart

Topic regression: bart, makeind, pdbart

Topic tree: bart, makeind, pdbart

bart

image

makeind

pd2bart (pdbart)

pdbart

plot

plot.bart (bart)

plot.pd2bart (pdbart)

plot.pdbart (pdbart)

11

You might also like

- Interaction Effects-Part 2Document5 pagesInteraction Effects-Part 2Ashwini Kumar PalNo ratings yet

- Sequence To Sequence Learning With Neural NetworksDocument9 pagesSequence To Sequence Learning With Neural NetworksIonel Alexandru HosuNo ratings yet

- Fine GrainedDocument8 pagesFine GrainedAshwini Kumar PalNo ratings yet

- Adlec 4Document20 pagesAdlec 4Raman IyerNo ratings yet

- Hermite Interpolation and Piecewise Polynomial ApproximationDocument4 pagesHermite Interpolation and Piecewise Polynomial ApproximationHarshavardhan ReddyNo ratings yet

- Learning Sentiment LexiconsDocument6 pagesLearning Sentiment LexiconsAshwini Kumar PalNo ratings yet

- Central Banks As Agents of Economic DevelopmentDocument24 pagesCentral Banks As Agents of Economic DevelopmentFatima AhmedNo ratings yet

- EM Algorithm for Mixtures of GaussiansDocument4 pagesEM Algorithm for Mixtures of GaussiansAndres Tuells JanssonNo ratings yet

- Numerical Method For Linear AlgebraDocument16 pagesNumerical Method For Linear AlgebraAshwini Kumar PalNo ratings yet

- The Feynman-Kac TheoremDocument7 pagesThe Feynman-Kac TheoremAshwini Kumar PalNo ratings yet

- Cubic Spline InterpolationDocument4 pagesCubic Spline InterpolationAshwini Kumar PalNo ratings yet

- QR Decomposition: Numerical Analysis Massoud MalekDocument4 pagesQR Decomposition: Numerical Analysis Massoud MalekAshwini Kumar PalNo ratings yet

- The Moment Generating Function of The Normal Distribution: Msc. Econ: Mathematical Statistics, 1996Document2 pagesThe Moment Generating Function of The Normal Distribution: Msc. Econ: Mathematical Statistics, 1996hamdimouhhiNo ratings yet

- Three Introductory Lectures On FourierDocument59 pagesThree Introductory Lectures On FouriercooldudepaneendraNo ratings yet

- Interfacing Python With Fortran - F2pyDocument56 pagesInterfacing Python With Fortran - F2pyAnonymous 1rLNlqUNo ratings yet

- Rainbow OptionsDocument6 pagesRainbow OptionsAshwini Kumar PalNo ratings yet

- The QR Method For EigenvaluesDocument5 pagesThe QR Method For EigenvaluesAshwini Kumar PalNo ratings yet

- Game Theory 4Document6 pagesGame Theory 4Ashwini Kumar PalNo ratings yet

- Categorical DataDocument38 pagesCategorical DataJoanne WongNo ratings yet

- C EssentialsDocument45 pagesC EssentialssuchitraprasannaNo ratings yet

- A Suitable BoyDocument2 pagesA Suitable BoyAshwini Kumar PalNo ratings yet

- BayesclustDocument18 pagesBayesclustAshwini Kumar PalNo ratings yet

- Miss ForestDocument10 pagesMiss ForestAshwini Kumar PalNo ratings yet

- Interest Rate ModelsDocument32 pagesInterest Rate ModelsAshwini Kumar PalNo ratings yet

- Kuhn Tucker ConditionDocument1 pageKuhn Tucker ConditionAvinash VasudeoNo ratings yet

- Support Vector Machines and RNNDocument6 pagesSupport Vector Machines and RNNAshwini Kumar PalNo ratings yet

- G. Value Analysis and Value EngineeringDocument5 pagesG. Value Analysis and Value EngineeringMaaz FarooquiNo ratings yet

- AdabagDocument21 pagesAdabagAshwini Kumar PalNo ratings yet

- PRTDocument24 pagesPRTmohitshuklamarsNo ratings yet

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (890)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (587)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (265)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2219)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (119)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- Hubungan Beban Kerja Fisik Dan Kelelahan Kerja Dengan Unsafe Actions Pada Pekerja Bagian Tanning Upt Industri Kulit MagetanDocument6 pagesHubungan Beban Kerja Fisik Dan Kelelahan Kerja Dengan Unsafe Actions Pada Pekerja Bagian Tanning Upt Industri Kulit MagetanAnnisaNo ratings yet

- ECON 136 Syllabus BreakdownDocument6 pagesECON 136 Syllabus Breakdowncothom_3192No ratings yet

- Hasil Analisis SPSS Regresi Dan Uji TurkeyDocument11 pagesHasil Analisis SPSS Regresi Dan Uji TurkeyFianiNo ratings yet

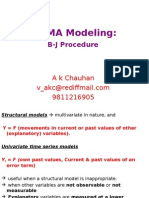

- ARIMA Modeling:: B-J ProcedureDocument26 pagesARIMA Modeling:: B-J ProcedureMayank RawatNo ratings yet

- Assignment No. 2 Subject: Educational Statistics (8614) (Units 1-4) SubjectDocument7 pagesAssignment No. 2 Subject: Educational Statistics (8614) (Units 1-4) Subjectrida batoolNo ratings yet

- Stats 1Document5 pagesStats 1Bob BernsteineNo ratings yet

- Analyze Cost Behavior with Fixed, Variable & Mixed Costs (40chDocument37 pagesAnalyze Cost Behavior with Fixed, Variable & Mixed Costs (40chMaulana HasanNo ratings yet

- Continuous BBN Copula-VineDocument8 pagesContinuous BBN Copula-VineMoisesOsornoPichardoNo ratings yet

- Experiment 5Document6 pagesExperiment 5Nesamanikandan SNo ratings yet

- 36-401 Modern Regression HW #6 Solutions: Problem 1 (32 Points)Document26 pages36-401 Modern Regression HW #6 Solutions: Problem 1 (32 Points)SNo ratings yet

- ID Analisis Klaster Kecamatan Di KabupatenDocument10 pagesID Analisis Klaster Kecamatan Di Kabupatennurul qatijahNo ratings yet

- Multiple Regression AnalysisDocument15 pagesMultiple Regression AnalysisBibhush MaharjanNo ratings yet

- MMW Questions Answer 1. T-Test For Correlated SampleDocument21 pagesMMW Questions Answer 1. T-Test For Correlated SampleMarcoNo ratings yet

- ReportDocument55 pagesReportSidharthNo ratings yet

- Statistics For Managers Using Microsoft Excel: 7 EditionDocument68 pagesStatistics For Managers Using Microsoft Excel: 7 EditionDinesh SainiNo ratings yet

- Dat Science: CLASS 11: Clustering and Dimensionality ReductionDocument30 pagesDat Science: CLASS 11: Clustering and Dimensionality Reductionashishamitav123No ratings yet

- Eco 173 Regression Part IDocument4 pagesEco 173 Regression Part INadim IslamNo ratings yet

- Session 1-Discriminant Analysis-1Document52 pagesSession 1-Discriminant Analysis-1devaki reddyNo ratings yet

- Chapter3 Classification Summary FinalDocument11 pagesChapter3 Classification Summary FinalZubair NajimNo ratings yet

- Jurnal EkonomiDocument13 pagesJurnal EkonomiAsrul MinanjarNo ratings yet

- Neural Networks: 16-385 Computer Vision (Kris Kitani)Document20 pagesNeural Networks: 16-385 Computer Vision (Kris Kitani)juan jose100% (1)

- Peningkatan Laba Operasi Dengan Pengendalian Biaya Produksi: Sustainable Competitive Advantage-9 (Sca-9) Feb UnsoedDocument15 pagesPeningkatan Laba Operasi Dengan Pengendalian Biaya Produksi: Sustainable Competitive Advantage-9 (Sca-9) Feb Unsoedgina anggiNo ratings yet

- Case Study NewDocument16 pagesCase Study Newashishamitav123No ratings yet

- Data Science Related Interview QuestionDocument77 pagesData Science Related Interview QuestionTanvir Rashid100% (1)

- Assignment 02Document5 pagesAssignment 02Raja RaviNo ratings yet

- FMD PRACTICAL FILEDocument61 pagesFMD PRACTICAL FILEMuskan AroraNo ratings yet

- Module 4 - Theory and MethodsDocument161 pagesModule 4 - Theory and MethodsMahmoud ElnahasNo ratings yet

- Project Presentation 03-01-2023Document10 pagesProject Presentation 03-01-2023Syed Tariq NaqshbandiNo ratings yet

- Data Mining BKTI 1Document47 pagesData Mining BKTI 1SilogismNo ratings yet