Parallel Processing - Openfoam

Disclaimer

This offering is not approved or endorsed by OpenCFD Limited, the producer of the OpenFOAM software and owner of the OPENFOAM and OpenCFD trade marks.

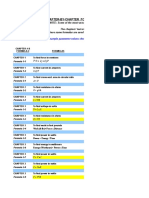

Introductory OpenFOAM Course

University of Genoa, DICCA

Dipartimento di Ingegneria Civile, Chimica e Ambientale

From 17th to 21st February, 2014

Your Lecturer

Joel GUERRERO

joel.guerrero@unige.it

guerrero@wolfdynamics.com

Today's lecture

1. Running in parallel

2. Running in a cluster using a job scheduler

3. Running with a GPU

4. Hands-on session

Running in parallel

The method of parallel computing used by OpenFOAM is known as domain decomposition, in which the geometry and associated fields are broken into pieces and distributed among different processors.

Shared memory architectures: workstations and portable computers.

Distributed memory architectures: clusters and supercomputers.

Running in parallel

Some facts about running OpenFOAM in parallel:

Applications generally do not require parallel-specific coding. The parallel programming implementation is hidden from the user.

Most of the applications and utilities run in parallel.

If you write a new solver, it will run in parallel (most of the time).

I have been able to run in parallel on up to 4096 processors.

I have been able to run OpenFOAM using a single GPU and multiple GPUs.

Do not ask me about scalability; that is problem and hardware specific.

If you want to learn more about MPI and GPU programming, do not look in my direction.

Did I forget to mention that OpenFOAM is free?

Running in parallel

To run OpenFOAM in parallel you will need to:

Decompose the domain. To do so we use the decomposePar utility. You will also need a dictionary named decomposeParDict, which is located in the system directory of the case.

Distribute the jobs among the processors or computing nodes. To do so, OpenFOAM uses the open-source Open MPI implementation of the standard message passing interface (MPI). By using MPI, each processor runs a copy of the solver on a separate part of the decomposed domain.

Finally, the solution is reconstructed to obtain the final result. This is done by using the reconstructPar utility.

Running in parallel

Domain Decomposition

The mesh and fields are decomposed using the decomposePar utility.

The main goal is to break up the domain with minimal effort, but in such a way as to guarantee a fairly economic solution.

The geometry and fields are broken up according to a set of parameters specified in a dictionary named decomposeParDict, which must be located in the system directory of the case.

Running in parallel

Domain Decomposition

In the decomposeParDict file the user must set the number of subdomains into which the case should be decomposed. Usually it corresponds to the number of cores available for the calculation.

numberOfSubdomains NP;

where NP is the number of cores/processors.

The user has a choice of seven methods of decomposition, specified by the method keyword in the decomposeParDict dictionary.

On completion, a set of subdirectories will have been created, one for each processor. The directories are named processorN, where N = 0, 1, 2, 3, and so on. Each directory contains the decomposed fields.
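As an illustration of the two entries described above, the following sketch writes a minimal system/decomposeParDict from the shell. The function name write_decompose_dict, the choice of the simple method, and the delta value are assumptions made for this example; they are not taken from the course material. For the simple method the product of the three entries in n has to equal numberOfSubdomains, so the function checks that first.

```shell
#!/bin/sh
# Write a minimal system/decomposeParDict for a case directory.
# Arguments: case directory, number of subdomains, split in x, y and z.
# (Hypothetical helper for illustration only.)
write_decompose_dict() {
    case_dir=$1; np=$2; nx=$3; ny=$4; nz=$5
    # For the simple method, nx*ny*nz must equal the number of subdomains.
    if [ $((nx * ny * nz)) -ne "$np" ]; then
        echo "error: $nx*$ny*$nz does not equal $np" >&2
        return 1
    fi
    mkdir -p "$case_dir/system"
    cat > "$case_dir/system/decomposeParDict" <<EOF
FoamFile
{
    version     2.0;
    format      ascii;
    class       dictionary;
    object      decomposeParDict;
}

numberOfSubdomains $np;

method          simple;

simpleCoeffs
{
    n           ($nx $ny $nz);
    delta       0.001;
}
EOF
}
```

For instance, write_decompose_dict . 4 2 2 1 would request four subdomains, split twice in x and twice in y. Running decomposePar afterwards should then create the directories processor0 to processor3.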

Running in parallel

Domain Decomposition Methods

simple: simple geometric decomposition in which the domain is split into pieces by direction.

hierarchical: hierarchical geometric decomposition, which is the same as simple except that the user specifies the order in which the directional split is done.

manual: manual decomposition, where the user directly specifies the allocation of each cell to a particular processor.

multiLevel: similar to hierarchical, but all methods can be used in a nested form.

structured: 2D decomposition of a structured mesh (special case).

Running in parallel

Domain Decomposition Methods

scotch: requires no geometric input from the user and attempts to minimize the number of processor boundaries. Similar to metis but with a more flexible open-source license.

metis: requires no geometric input from the user and attempts to minimize the number of processor boundaries. You will need to install metis as it is not distributed with OpenFOAM. Also, you will need to compile the metisDecomp library.

Please be aware that there are license restrictions on using metis.

Running in parallel

We will now run a case in parallel. From now on follow me.

Go to the $ptofc/parallel_tut/rayleigh_taylor directory. In the terminal type:

cd $ptofc/parallel_tut/rayleigh_taylor/c1
blockMesh
checkMesh
cp 0/alpha1.org 0/alpha1
funkySetFields -time 0

(If you do not have this tool, copy the file alpha1.init to alpha1. The file is located in the directory 0.)

decomposePar
mpirun -np 8 interFoam -parallel

Here I am using 8 processors. In your case, use the maximum number of processors available in your laptop. To do so, modify the decomposeParDict dictionary located in the system folder: set the entry numberOfSubdomains to the number of processors you want to use.
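The manual edit of numberOfSubdomains can also be scripted. The sketch below is one possible way to do it with sed; the function name set_subdomains is made up for this example. It only touches the numberOfSubdomains entry, so if the dictionary uses the simple or hierarchical method, the n entry of the coefficients still has to be adjusted by hand to match.

```shell
#!/bin/sh
# Set numberOfSubdomains in a decomposeParDict to a given value.
# Arguments: path to the dictionary, number of subdomains.
# (Hypothetical helper for illustration only.)
set_subdomains() {
    dict=$1; np=$2
    # Replace the number on the line that starts with the keyword,
    # writing to a temporary file so no in-place sed option is needed.
    sed "s/^\([[:space:]]*numberOfSubdomains[[:space:]]*\)[0-9][0-9]*;/\1$np;/" \
        "$dict" > "$dict.tmp" && mv "$dict.tmp" "$dict"
}

# Example, run from the case directory: use every core the machine reports.
# nproc is part of GNU coreutils and may not exist on every system.
# set_subdomains system/decomposeParDict "$(nproc)"
```

The value passed to mpirun -np must then be the same number.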

Running in parallel

By following the instructions, you should get something like this:

[Figure: mesh and VOF fraction]

Running in parallel

After the simulation has finished, type in the terminal:

paraFoam -builtin

to post-process the decomposed case directly.

Alternatively, you can reconstruct the case and then post-process it:

reconstructPar
paraFoam

This post-processes the reconstructed case; reconstructPar will reconstruct all the saved time steps.

Both methods are valid, but the first one does not require reconstructing the case.

More about post-processing in parallel in the next slides.

Running in parallel

Notice the syntax used to run OpenFOAM in parallel:

mpirun -np 8 solver_name -parallel

where mpirun is the launcher provided by the MPI library, -np sets the number of processors you want to use, solver_name is the OpenFOAM solver you want to use, and -parallel is a flag you must always use if you want to run in parallel.
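Since the number given to -np has to agree with the decomposition, one option is to read it back from the dictionary instead of typing it twice. The following is a minimal sketch under that assumption; get_subdomains and parallel_command are hypothetical names, and the second function only prints the command line rather than executing it.

```shell
#!/bin/sh
# Print the value of numberOfSubdomains found in a decomposeParDict.
# (Hypothetical helper for illustration only.)
get_subdomains() {
    sed -n 's/^[[:space:]]*numberOfSubdomains[[:space:]]*\([0-9][0-9]*\);.*/\1/p' "$1"
}

# Print (not run) the parallel command line for a solver, so that -np
# always matches the decomposition stored in the dictionary.
# Arguments: solver name, path to the dictionary.
parallel_command() {
    solver=$1; dict=$2
    echo "mpirun -np $(get_subdomains "$dict") $solver -parallel"
}
```

With a dictionary that contains numberOfSubdomains 8; the call parallel_command interFoam system/decomposeParDict prints the same command used in the Rayleigh-Taylor case above.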

Running in parallel

Almost all OpenFOAM utilities can be run in parallel. The syntax is as follows:

mpirun -np 2 utility -parallel

(Notice that I am using 2 processors.)

Running in parallel

When post-processing cases that have been run in parallel, the user has three options:

Reconstruction of the mesh and field data to recreate the complete domain and fields.

reconstructPar
paraFoam

Running in parallel

Post-processing each decomposed domain individually

paraFoam -case processor0

To load all processor directories, the user will need to manually create the file processorN.OpenFOAM (where N is the processor number) in each processor folder and then load each file into paraFoam.

Running in parallel

Reading the decomposed case without reconstructing it. For this you will need to type:

paraFoam -builtin

This uses the OpenFOAM reader built into ParaView, which lets you read either a decomposed case or a reconstructed case.

Running in parallel

Let us post-process each decomposed domain individually. From now on follow me.

Go to the $ptofc/parallel_tut/yf17 directory. In the terminal type:

cd $ptofc/parallel_tut/yf17

In this directory you will find two directories, namely hierarchical and scotch. Let us decompose both geometries and visualize the partitioning. Let us start with the scotch partitioning method. In the terminal type:

cd $ptofc/parallel_tut/yf17/scotch
decomposePar

By the way, we do not need to run this case. We just need to decompose it to visualize the partitions.

Running in parallel

cd $ptofc/parallel_tut/yf17/scotch
decomposePar
touch processor0.OpenFOAM
touch processor1.OpenFOAM
touch processor2.OpenFOAM
touch processor3.OpenFOAM
cp processor0.OpenFOAM processor0
cp processor1.OpenFOAM processor1
cp processor2.OpenFOAM processor2
cp processor3.OpenFOAM processor3
paraFoam

Running in parallel

By following the instructions, you should get something like this:

[Figure: mesh partitioning, scotch method]

Running in parallel

In paraFoam, open each *.OpenFOAM file within the processor* directories. By doing this, we are opening the mesh partition for each processor. Now choose a different color for each set you just opened, and visualize the partition for each processor.

Now do the same with the directory hierarchical, and compare both partitioning methods.

If you partitioned the mesh with many processors, creating the *.OpenFOAM files manually can be extremely time consuming. To do this automatically, you can create a small shell script.

Also, changing the color for each set in paraFoam can be extremely time consuming. To automate this, you can write a Python script.
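A small shell script of the kind mentioned above could look like the following sketch. It is not the script used in the course; the function name make_processor_markers is made up for this example. It creates the empty marker file directly inside each processor directory, which gives the same result as the touch and cp commands typed by hand earlier.

```shell
#!/bin/sh
# Create an empty processorN.OpenFOAM marker file inside every processorN
# directory of a decomposed case, so each partition can be opened in paraFoam.
# Argument: case directory (defaults to the current directory).
# (Hypothetical helper for illustration only.)
make_processor_markers() {
    case_dir=${1:-.}
    for dir in "$case_dir"/processor[0-9]*; do
        [ -d "$dir" ] || continue      # nothing to do if no directory matches
        name=$(basename "$dir")
        touch "$dir/$name.OpenFOAM"
    done
}
```

After decomposePar has finished, calling make_processor_markers from the case directory prepares all partitions in one go, whatever the number of processors.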

Running in parallel

By following the instructions, you should get something like this:

[Figure: mesh partitioning, hierarchical method]

Running in parallel

By following the instructions, you should get something like this:

[Figure: mesh partitioning, scotch method and hierarchical method]

Running in parallel

By the way, if you do not want to post-process each decomposed domain individually, you can use the option -cellDist. This option applies to all decomposition methods. In the terminal type:

cd $ptofc/parallel_tut/yf17/scotch_celldist
decomposePar -cellDist

The option -cellDist will write the cell distribution as a volScalarField for post-processing. The new field will be saved in the file cellDist located in the time directory 0.

Then launch paraFoam and post-process the case as you usually do. You will need to play with transparency and volume rendering to see the cell distribution among the processors.

Running in parallel

Decomposing big meshes

One final word: the utility decomposePar does not run in parallel. So, it is not possible to distribute the mesh among different computing nodes to do the partitioning in parallel.

If you need to partition big meshes, you will need a computing node with enough memory to handle the mesh. I have been able to decompose meshes with up to 300,000,000 elements, but I used a computing node with 512 GB of memory.

For example, in a computing node with 16 GB of memory, it is not possible to decompose a mesh with 30,000,000 elements; you will need to use a computing node with at least 32 GB of memory.

The same applies to the utility reconstructPar.

Running in a cluster using a job scheduler

Running OpenFOAM in a cluster is similar to running in a normal workstation with shared memory.

The only difference is that you will need to launch your job using a job scheduler.

Common job schedulers are:

Terascale Open-Source Resource and Queue Manager (TORQUE).

Simple Linux Utility for Resource Management (SLURM).

Portable Batch System (PBS).

Sun Grid Engine (SGE).

Maui Cluster Scheduler.

BlueGene LoadLeveler (LL).

Ask your system administrator which job scheduler is installed on your system.

Hereafter I will assume that you will run using PBS.

Running in a cluster using a job scheduler

To launch a job in a cluster with PBS, you will need to write a small shell script where you tell the job scheduler the resources you want to use and what you want to do.

#!/bin/bash
#
# Simple PBS batch script that reserves 16 nodes and runs an
# MPI program on 128 processors (8 processors on each node).
# The walltime is 24 hours.
#
# Name of the job
#PBS -N openfoam_simulation
# Resources requested: 16 nodes, maximum execution time of 24 hours
#PBS -l nodes=16,walltime=24:00:00
# Send an email when the job is launched, terminated, or aborted
#PBS -m abe -M joel.guerrero@unige.it

# Go to the case directory
cd PATH_TO_DIRECTORY

# Decompose the case (this line is commented out)
#decomposePar

# Run OpenFOAM in parallel
mpirun -np 128 pimpleFoam -parallel > log

Lines starting with #PBS are directives read by the job scheduler. Every other line starting with the number character (#) is a comment.
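Rather than hard-coding the processor count in the mpirun line, a job script can ask the scheduler how many slots it was given. The sketch below assumes a PBS or TORQUE installation that sets the PBS_NODEFILE environment variable inside a running job and writes one host name per assigned slot to that file; check with your system administrator whether this holds on your cluster. The function name count_slots is made up for this example.

```shell
#!/bin/sh
# Count the processor slots assigned to the current job.
# Assumes PBS_NODEFILE points to a file with one host name per slot.
# (Hypothetical helper for illustration only.)
count_slots() {
    if [ -n "${PBS_NODEFILE:-}" ] && [ -r "$PBS_NODEFILE" ]; then
        # tr strips the padding that some wc implementations add
        wc -l < "$PBS_NODEFILE" | tr -d ' '
    else
        echo 1      # not inside a PBS job: fall back to a single process
    fi
}

# Inside the job script, the launch line could then read:
# mpirun -np "$(count_slots)" pimpleFoam -parallel > log
```

The case still has to be decomposed into the same number of subdomains beforehand, since decomposePar and the solver must agree on the processor count.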

Running in a cluster using a job scheduler

To launch your job you need to use the qsub command (part of the PBS job scheduler). The command will send your job to the queue.

qsub script_name

Remember, running in a cluster is no different from running in your workstation or portable computer. The only difference is that you need to schedule your jobs.

Depending on the current demand for resources on the system, the resources you request, and your job priority, you can sometimes be in the queue for hours, even days, so be patient and wait for your turn.

Remember to always double check your scripts.

Running in a cluster using a job scheduler

Finally, remember to plan how you will use the resources available.

For example, if each computing node has 8 GB of memory available and 8 cores, you will need to distribute the workload so as not to exceed the maximum resources available per computing node.

Running in a cluster using a job scheduler

So if you are running a simulation that requires 32 GB of memory, the following options are valid:

Use 4 computing nodes and ask for 32 cores. Each node will use 8 GB of memory and 8 cores.

Use 8 computing nodes and ask for 32 cores. Each node will use 4 GB of memory and 4 cores.

Use 8 computing nodes and ask for 64 cores. Each node will use 4 GB of memory and 8 cores.

But the following options are not valid:

Use 2 computing nodes. Each node will need 16 GB of memory.

Use 16 computing nodes and ask for 256 cores. The maximum number of cores for this job is 128.

NOTE: In this example each computing node has 8 GB of memory available and 8 cores.
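The arithmetic behind the valid and not valid options can be checked with a few lines of shell. This is only a sketch: the function name check_plan is made up, and it assumes that memory and cores are spread evenly over the requested nodes, with the per-node limits of 8 GB and 8 cores taken from the example.

```shell
#!/bin/sh
# Check a resource request against the per-node limits of the example:
# 8 GB of memory and 8 cores per computing node.
# Arguments: total memory needed (GB), number of nodes, number of cores.
# (Hypothetical helper for illustration only.)
check_plan() {
    mem_total=$1; nodes=$2; cores=$3
    node_mem=8; node_cores=8
    # Ceiling division, so a fractional share still counts against the limit.
    mem_per_node=$(( (mem_total + nodes - 1) / nodes ))
    cores_per_node=$(( (cores + nodes - 1) / nodes ))
    if [ "$mem_per_node" -le "$node_mem" ] && [ "$cores_per_node" -le "$node_cores" ]; then
        echo "valid: $mem_per_node GB and $cores_per_node cores per node"
    else
        echo "not valid: $mem_per_node GB and $cores_per_node cores per node"
        return 1
    fi
}
```

For the 32 GB simulation, check_plan 32 4 32 reports 8 GB and 8 cores per node, which fits, while check_plan 32 2 16 reports 16 GB per node and check_plan 32 16 256 reports 16 cores per node, both above the limits.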

Running with a GPU

The official release of OpenFOAM (version 2.2.0) does not support GPU computing.

To use your GPU with OpenFOAM, you will need to install an external library.

I currently use the cufflink library. There are a few more options available, but I like this one and it is open source.

To test OpenFOAM GPU capabilities with cufflink, you will need to install the latest extend version (OpenFOAM-1.6-ext).

Running with a GPU

You can download cufflink from the following link (as of 15 May 2013):

http://code.google.com/p/cufflink-library/

Additionally, you will need to install the following libraries:

Cusp: a library for sparse linear algebra and graph computations on CUDA.

http://code.google.com/p/cusp-library/

Thrust: a parallel algorithms library which resembles the C++ Standard Template Library (STL).

http://code.google.com/p/thrust/

Latest NVIDIA CUDA library.

https://developer.nvidia.com/cuda-downloads

Running with a GPU

Cufflink Features

Currently only supports the OpenFOAM-extend fork of the OpenFOAM code.

Single GPU support.

Multi-GPU support via OpenFOAM coarse grained parallelism achieved through domain decomposition (experimental).

A conjugate gradient solver based on Cusp for symmetric matrices (e.g. pressure), with a choice of Diagonal Preconditioner, Sparse Approximate Inverse Preconditioner, Algebraic Multigrid (AMG) based on Smoothed Aggregation Preconditioner.

A bi-conjugate gradient stabilized solver based on Cusp for asymmetric matrices (e.g. velocity, epsilon, k), with a choice of Diagonal Preconditioner, Sparse Approximate Inverse Preconditioner.

Single Precision (sm_10), Double Precision (sm_13), and Fermi Architecture (sm_20) supported.

Running with a GPU

Running cufflink in OpenFOAM-extend project

Once the cufflink library has been compiled, to use the library in OpenFOAM one needs to include the line

libs ("libCufflink.so");

in the controlDict dictionary. In addition, a solver must be chosen in the fvSolution dictionary:

p
{
    solver          cufflink_CG;
    preconditioner  none;
    tolerance       1e-10;
    //relTol        1e-08;
    maxIter         10000;
    storage         1;  //COO=0 CSR=1 DIA=2 ELL=3 HYB=4 all other numbers use default CSR
    gpusPerMachine  2;  //for multi gpu version on a machine with 2 gpus per machine node
    AinvType        ;
    dropTolerance   ;
    linStrategy     ;
}

This particular setup uses an un-preconditioned conjugate gradient solver on a single GPU; compressed sparse row (CSR) matrix storage; 1e-10 absolute tolerance and 0 relative tolerance; with a maximum number of inner iterations of 10000.

Running with a GPU

There is another open source library named paralution. I am currently testing this one.

Paralution can be installed in OpenFOAM 2.2.0.

For more information about paralution visit the following link: www.paralution.com.

Mesh generation using open source tools

Additional tutorials

In the directory $ptofc/parallel_tut you will find many tutorials. Try to go through each one to understand how to set up a parallel case in OpenFOAM.

Thank you for your attention

Hands-on session

In the course directory ($ptofc) you will find many tutorials (which are different from those that come with the OpenFOAM installation). Let us try to go through each one to understand and get functional using OpenFOAM.

If you have a case of your own, let me know and I will do my best to help you set up your case. But remember, the physics is yours.

This offering is not approved or endorsed by OpenCFD Limited, the producer of the OpenFOAM software and owner of the OPENFOAM and OpenCFD trade marks.

You might also like

- Crane Foundation DesignDocument14 pagesCrane Foundation Designway2saleem100% (2)

- Design and Analysis of Bucket Elevator: I. Ii. Literature ReviewDocument6 pagesDesign and Analysis of Bucket Elevator: I. Ii. Literature ReviewRescuemanNo ratings yet

- Answers About HubSpotDocument1 pageAnswers About HubSpotPrasetyaNo ratings yet

- Open FoamDocument1,006 pagesOpen FoamChristian Poveda Gonzalez100% (1)

- Pass1 and 2 CompilerDocument22 pagesPass1 and 2 CompilerPrincess deepika100% (1)

- VNX Power UP Down ProcedureDocument8 pagesVNX Power UP Down ProcedureShahulNo ratings yet

- KSB Megaflow V: Pumps For Sewage, Effuents and MisturesDocument18 pagesKSB Megaflow V: Pumps For Sewage, Effuents and MisturesKorneliusNo ratings yet

- Introductory Openfoam® Course From 2 To6 July, 2012: Joel Guerrero University of Genoa, DicatDocument24 pagesIntroductory Openfoam® Course From 2 To6 July, 2012: Joel Guerrero University of Genoa, DicatSaurabhTripathiNo ratings yet

- 14 Tips and TricksDocument47 pages14 Tips and TricksTarak Nath NandiNo ratings yet

- Open Foarm - OverviewDocument122 pagesOpen Foarm - Overviewsuresh_501No ratings yet

- 1 Introduction-OpenFaomDocument39 pages1 Introduction-OpenFaomsuresh_501No ratings yet

- Open Form Post ProcessingDocument101 pagesOpen Form Post Processingsuresh_501No ratings yet

- 14 Tipsandtricks OFDocument47 pages14 Tipsandtricks OFguilhermehsssNo ratings yet

- OpenFOAM - 12 Tips and TricksDocument85 pagesOpenFOAM - 12 Tips and Trickssina_mechNo ratings yet

- Openbravo ERP Custom Installation GuideDocument4 pagesOpenbravo ERP Custom Installation GuideDows WinNo ratings yet

- Supplement TipsandtricksDocument83 pagesSupplement TipsandtricksVladimir JovanovicNo ratings yet

- Introduction To OpenMPDocument46 pagesIntroduction To OpenMPmceverin9No ratings yet

- Getting StartedDocument14 pagesGetting StartedAva BaranNo ratings yet

- Unit 3 - Programming Multi-Core and Shared MemoryDocument100 pagesUnit 3 - Programming Multi-Core and Shared MemorySupreetha G SNo ratings yet

- OpenFoam Tips and TricksDocument85 pagesOpenFoam Tips and TricksLohengrin Van BelleNo ratings yet

- OpenFoam IntroductionDocument20 pagesOpenFoam Introductionptl_hinaNo ratings yet

- Molpro InstguideDocument16 pagesMolpro Instguiderhle5xNo ratings yet

- Supplement: Tips and TricksDocument78 pagesSupplement: Tips and TricksRajeshChaurasiyaNo ratings yet

- Lec 12 OpenMPDocument152 pagesLec 12 OpenMPAvinashNo ratings yet

- Running in ParallelDocument24 pagesRunning in ParallelmortezagashtiNo ratings yet

- Debugging PDFDocument18 pagesDebugging PDFalejandrovelezNo ratings yet

- Concurrent and Parallel Programming Unit V-Notes Unit V Openmp, Opencl, Cilk++, Intel TBB, Cuda 5.1 OpenmpDocument10 pagesConcurrent and Parallel Programming Unit V-Notes Unit V Openmp, Opencl, Cilk++, Intel TBB, Cuda 5.1 OpenmpGrandhi ChandrikaNo ratings yet

- E3Preptofoam: A Mesh Generator For OpenfoamDocument54 pagesE3Preptofoam: A Mesh Generator For OpenfoamSaurabhTripathiNo ratings yet

- Introduction to OpenMP Parallel ProgrammingDocument47 pagesIntroduction to OpenMP Parallel ProgrammingcaptainnsaneNo ratings yet

- Parallel Programming for Multicore Machines Using OpenMP and MPIDocument77 pagesParallel Programming for Multicore Machines Using OpenMP and MPIThomas YueNo ratings yet

- Multiprocessors: Cs 152 L1 5 .1 DAP Fa97, U.CBDocument38 pagesMultiprocessors: Cs 152 L1 5 .1 DAP Fa97, U.CBIsaacMedeirosNo ratings yet

- Tips and TricksDocument77 pagesTips and TrickskarthikeyaNo ratings yet

- OpenmpDocument21 pagesOpenmpMark VeltzerNo ratings yet

- Free Pascal ManualDocument172 pagesFree Pascal ManualCristian CopceaNo ratings yet

- WINSEM2022-23 CSE4001 ETH VL2022230504003 Reference Material I 22-12-2022 M-2 1parallel ArchitecturesDocument18 pagesWINSEM2022-23 CSE4001 ETH VL2022230504003 Reference Material I 22-12-2022 M-2 1parallel ArchitecturesnehaNo ratings yet

- Selenium GridDocument17 pagesSelenium GridBabeh MugeniNo ratings yet

- A Tutorial On Parallel Computing On Shared Memory SystemsDocument23 pagesA Tutorial On Parallel Computing On Shared Memory SystemsSwadhin SatapathyNo ratings yet

- Introduction To Open MPDocument42 pagesIntroduction To Open MPahmad.nawazNo ratings yet

- Installing Hyperledger Fabric and Composer: Ser/latest/installing/development-To Ols - HTMLDocument13 pagesInstalling Hyperledger Fabric and Composer: Ser/latest/installing/development-To Ols - HTMLVidhi jainNo ratings yet

- OpenmpDocument127 pagesOpenmpivofrompisaNo ratings yet

- Modular ProgrammingDocument14 pagesModular ProgrammingLohith B M BandiNo ratings yet

- Openerp ServerDocument99 pagesOpenerp ServerCarlo ImpagliazzoNo ratings yet

- D74508GC20 Les21Document14 pagesD74508GC20 Les21Belu IonNo ratings yet

- UNWRAPPING TOOLSDocument12 pagesUNWRAPPING TOOLSminojnextNo ratings yet

- Lecture 25-27Document64 pagesLecture 25-27Kripansh mehraNo ratings yet

- Running in Parallel Luc Chin IDocument13 pagesRunning in Parallel Luc Chin INashrif KarimNo ratings yet

- Curso OpenFOAMDocument25 pagesCurso OpenFOAMefcarrionNo ratings yet

- Java Decompiler HOWTODocument8 pagesJava Decompiler HOWTOkrystalklearNo ratings yet

- Parallel Programming Using OpenMPDocument76 pagesParallel Programming Using OpenMPluiei1971No ratings yet

- Odoo Openerp Docs DevelopmentDocument83 pagesOdoo Openerp Docs DevelopmentCoko Mirindi MusazaNo ratings yet

- Printrbot FirmwareDocument5 pagesPrintrbot FirmwareblablablouNo ratings yet

- Installation Guide OpenPPM Complete (ENG) Cell V 4.6.1Document57 pagesInstallation Guide OpenPPM Complete (ENG) Cell V 4.6.1Leonardo ChuquiguancaNo ratings yet

- Compiler in 7 HoursDocument318 pagesCompiler in 7 HoursgitanjaliNo ratings yet

- Odoo DocsDocument83 pagesOdoo DocsNanang HidayatullohNo ratings yet

- P51 - Lab 1, Introduction To Netfpga: DR Noa Zilberman Lent, 2018/19Document15 pagesP51 - Lab 1, Introduction To Netfpga: DR Noa Zilberman Lent, 2018/19Yatheesh KaggereNo ratings yet

- OpenFOAM Exercise: Running a Dam Break SimulationDocument15 pagesOpenFOAM Exercise: Running a Dam Break SimulationFadma FatahNo ratings yet

- Getting Started With Openfoam: Eric PatersonDocument41 pagesGetting Started With Openfoam: Eric PatersonReza Nazari0% (1)

- Openmp: John H. Osorio RíosDocument24 pagesOpenmp: John H. Osorio RíosJuan SuarezNo ratings yet

- Hands On Opencl: Created by Simon Mcintosh-Smith and Tom DeakinDocument258 pagesHands On Opencl: Created by Simon Mcintosh-Smith and Tom DeakinLinh NguyenNo ratings yet

- ManualDocument97 pagesManualdege88No ratings yet

- Day 1 1-12 Intro-OpenmpDocument57 pagesDay 1 1-12 Intro-OpenmpJAMEEL AHMADNo ratings yet

- Boot Time Labs PDFDocument28 pagesBoot Time Labs PDFVaibhav DhinganiNo ratings yet

- Evo Lute Tools For RhinoDocument155 pagesEvo Lute Tools For RhinoNosthbeer HVNo ratings yet
