Uploaded by Jishu Twaddler D'Crux


STATISTICS FOR MANAGEMENT

Q: What is the definition of statistics?

A: Statistics is usually defined as:

1. A collection of numerical data that measure something.

2. The science of recording, organising, analysing and reporting quantitative information.

Q: What are the different components of statistics?

A: There are four components, as per Croxton & Cowden:

1. Collection of Data.

2. Presentation of Data

3. Analysis of Data

4. Interpretation of Data

Q: What is the use of correlation and regression?

A: Correlation and regression are statistical tools used to measure the strength of the relationship between two variables.

Q. What is the need for Statistics?

Statistics gives us a technique to obtain, condense, analyze and relate numerical data.

Statistical methods are of great value in education and psychology.

Q. How is statistics used in everyday life?

Statistics are everywhere. Election predictions are statistics, and any food product that claims to contain x% more or less of a certain ingredient is quoting a statistic. Life expectancy is a statistic. If you play card games, card counting is using statistics. There are statistics everywhere you look.

Statistical Survey

1. What is statistical survey?

Statistical surveys are used to collect quantitative information about items in a

population. A survey may focus on opinions or factual information depending on its

purpose, and many surveys involve administering questions to individuals. When the

questions are administered by a researcher, the survey is called a structured interview or

a researcher-administered survey. When the questions are administered by

the respondent, the survey is referred to as a questionnaire or a self-administered

survey.

2. What are the advantages of survey?

Efficient way of collecting information

Wide range of information can be collected

Easy to administer

Cheaper to run

3. What are the disadvantages of survey?

Responses may be subjective

Motivation may be low to answer

Errors due to sampling

If the question is not specific, it may lead to vague data.

4. What are the various modes of data collection?

Telephone

Online surveys

Personal survey

Mall intercept survey

5. What is sampling?

Sampling means selecting people or objects from a population in order to test the population for something. For example, we might want to find out how people are going to vote at the next election. Obviously we cannot ask everyone in the country, so we ask a sample.
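
The election example above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the population of 10,000 voter IDs, the sample size of 100 and the fixed seed are all hypothetical choices, and the sketch draws a simple random sample without replacement.

```python
import random

# Hypothetical population: voter IDs 1..10000 (illustrative only).
population = list(range(1, 10001))

# Fixed seed so that the illustration is reproducible.
rng = random.Random(42)

# Simple random sample of 100 voters, drawn without replacement.
sample = rng.sample(population, k=100)

print(len(sample), "voters selected; first five IDs:", sample[:5])
```

Every voter has the same chance of being selected, which is what makes conclusions about the sample transferable to the population.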

Classification, Tabulation & Presentation of data

1. What are the types of data?

Qualitative data

   Nominal, attribute or categorical data

   Ordinal or ranked data

Quantitative or interval data

   Discrete data

   Continuous measurements

2. What is tabulation of data?

Tabulation refers to the systematic arrangement of information in rows and

columns. Rows are the horizontal arrangement and columns are the vertical arrangement. In simple words, tabulation is a layout

of figures in rectangular form with appropriate headings to explain different rows and

columns. The main purpose of the table is to simplify the presentation and to facilitate

comparisons.

3. What is presentation of data?

Descriptive statistics can be illustrated in an understandable fashion by presenting them

graphically using statistical and data presentation tools.

4. What are the different elements of tabulation?

Tabulation:

Table Number

Title

Captions and Stubs

Headnotes

Body

Source

5. What are the forms of presentation of the data?

Grouped and ungrouped data may be presented as :

Pie Charts

Frequency Histograms

Frequency Polygons

Ogives

Boxplots

Measures used to summarise data

1. What are the measures of summarizing data?

Measures of Central tendency: Mean, median, mode

Measures of Dispersion: Range, Variance, Standard Deviation

2. Define mean, median, and mode?

Mean: The mean value is what we typically call the average. You calculate the mean by

adding up all of the measurements in a group and then dividing by the number of

measurements.

Median: The median is the middle value in a series when it is arranged in ascending or descending order.

Mode: The most repeated value in a series.
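
As a small illustration of the three definitions above, the following sketch uses Python's standard `statistics` module on a made-up list of scores (the data are hypothetical and chosen so the arithmetic is easy to check by hand).

```python
import statistics

# Made-up data for illustration.
scores = [2, 3, 3, 5, 7, 10]

# Mean: sum of the values divided by their count, 30 / 6 = 5.
mean_value = statistics.mean(scores)

# Median: with an even count, the average of the two middle values (3 and 5).
median_value = statistics.median(scores)

# Mode: the most frequently repeated value (3 appears twice).
mode_value = statistics.mode(scores)

print(mean_value, median_value, mode_value)
```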

3. Which measure of central tendency is to be used?

The measure to be used differs in different contexts. If your results involve categories

instead of continuous numbers, then the best measure of central tendency will probably

be the most frequent outcome (the mode). On the other hand, sometimes it is an advantage to have a measure of central tendency that is less sensitive to changes in the extremes of the data; in that case the median is usually preferred to the mean.

4. Define range, variance and standard deviation?

Range: The range is the difference between the largest and smallest data values in the set.

Variance: The variance ($\sigma^2$) is a measure of how far each value in the data set is from the mean; it is the average of the squared deviations from the mean.

Standard Deviation: It is the square root of the variance.
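
A minimal sketch of these three measures, assuming the data are treated as a whole population (so the variance divides by the number of values, not by one less). The data set is made up for illustration.

```python
import statistics

# Made-up data with mean 5.
data = [2, 4, 4, 4, 5, 5, 7, 9]

# Range: largest value minus smallest value.
data_range = max(data) - min(data)

# Population variance: average squared deviation from the mean, 32 / 8 = 4.
variance = statistics.pvariance(data)

# Standard deviation: square root of the variance.
std_dev = statistics.pstdev(data)

print(data_range, variance, std_dev)
```

If the data were a sample rather than a full population, `statistics.variance` and `statistics.stdev` (which divide by one less than the count) would be the usual choice.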

5. How can standard deviation be used?

The standard deviation has proven to be an extremely useful measure of spread in part

because it is mathematically tractable.

Probability

1. What is Probability?

Probability is a way of expressing knowledge or belief that an event will occur or has

occurred.

2. What is a random experiment?

An experiment is said to be a random experiment if its outcome cannot be predicted with certainty.

3. What is a sample space?

The set of all possible outcomes of an experiment is called the sample space. It is denoted by S, and the number of its elements by n(S).

Example: In throwing a dice, the number that appears on top is any one of 1, 2, 3, 4, 5, 6. So here:

S = {1,2,3,4,5,6} and n(S) = 6

Similarly, in the case of a coin, S = {Head, Tail} or {H, T} and n(S) = 2.

4. What is an event? What are the different kinds of event?

Event: Every subset of a sample space is an event. It is denoted by E.

Example: In throwing a dice, S={1,2,3,4,5,6}, the appearance of an even number will be the event E={2,4,6}.

Clearly E is a subset of S.

Simple event: An event, consisting of a single sample point is called a simple event.

Example: In throwing a dice, S={1,2,3,4,5,6}, so each of {1},{2},{3},{4},{5} and {6} are

simple events.

Compound event: A subset of the sample space which has more than one element is called a compound event.

Example: In throwing a dice, the event of appearing of odd numbers is a compound

event, because E={1,3,5} which has 3 elements.

5. What is the definition of probability?

If S is the sample space and all outcomes are equally likely, then the probability of occurrence of an event E is defined as:

$P(E) = \frac{n(E)}{n(S)} = \frac{\text{number of elements in } E}{\text{number of elements in sample space } S}$
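
The counting definition above can be sketched with Python sets, using the dice example from the earlier questions. The helper name `probability` is hypothetical, and the sketch assumes every outcome in the sample space is equally likely.

```python
from fractions import Fraction

# Sample space for one throw of a dice.
S = {1, 2, 3, 4, 5, 6}

# Event: an even number appears.
E = {x for x in S if x % 2 == 0}


def probability(event, sample_space):
    """Return n(E) / n(S), assuming equally likely outcomes."""
    favourable = event & sample_space  # ignore anything outside S
    return Fraction(len(favourable), len(sample_space))


p_even = probability(E, S)
print(p_even)
```

Using `Fraction` keeps the result exact instead of a rounded decimal.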

Theoretical Distributions

1. What are theoretical distributions?

Theoretical distributions are based on mathematical formulae and logic. They are used in

statistics to define statistics. When empirical and theoretical distributions correspond,

you can use the theoretical one to determine probabilities of an outcome, which will lead

to inferential statistics.

2. What are the various types of theoretical distributions?

Rectangular distribution (or Uniform Distribution)

Binomial distribution

Normal distribution

3. Define rectangular distribution and binomial distribution?

Rectangular distribution: Distribution in which all possible scores have the same

probability of occurrence.

Binomial distribution: Distribution of the frequency of events that can have only two

possible outcomes.

4. What is normal distribution?

The normal distribution is a bell-shaped theoretical distribution that predicts the

frequency of occurrence of chance events. The probability of an event or a group of

events corresponds to the area of the theoretical distribution associated with the event

or group of events. The distribution is asymptotic: its curve continually approaches but

never reaches a specified limit. The curve is symmetrical: half of the total area is to the

left and the other half to the right.

5. What is the central limit theorem?

This theorem states that when an infinite number of successive random samples are taken from a population, the sampling distribution of the means of those samples will become approximately normally distributed with mean $\mu$ and standard deviation $\sigma / \sqrt{N}$ as the sample size (N) becomes larger, irrespective of the shape of the population distribution.
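
A rough simulation can make the theorem concrete. The sketch below is an illustration under stated assumptions, not a proof: it draws from an exponential population (strongly skewed, with mean 1 and standard deviation 1), and the sample size of 50, the 5000 repetitions and the seed are arbitrary choices.

```python
import math
import random
import statistics

rng = random.Random(0)   # fixed seed for reproducibility
sample_size = 50         # N in the statement of the theorem
num_samples = 5000       # number of repeated samples (arbitrary)

# Mean of each repeated sample from an exponential population (mu = 1, sigma = 1).
sample_means = [
    statistics.fmean([rng.expovariate(1.0) for _ in range(sample_size)])
    for _ in range(num_samples)
]

observed_mean = statistics.fmean(sample_means)
observed_sd = statistics.pstdev(sample_means)
expected_sd = 1.0 / math.sqrt(sample_size)   # sigma / sqrt(N)

print("mean of sample means:", round(observed_mean, 3))
print("sd of sample means:  ", round(observed_sd, 3), "expected about", round(expected_sd, 3))
```

Although the population is far from normal, the sample means cluster around the population mean with a spread close to $\sigma / \sqrt{N}$.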

Sampling & Sampling Distributions

1. What is sampling distribution?

Suppose that we draw all possible samples of size n from a given population. Suppose

further that we compute a statistic (mean, proportion, standard deviation) for each

sample. The probability distribution of this statistic is called Sampling Distribution.

2. What is variability of a sampling distribution?

The variability of sampling distribution is measured by its variance or its standard

deviation. The variability of a sampling distribution depends on three factors:

N: the number of observations in the population.

n: the number of observations in the sample.

The way that the random sample is chosen.

3. How to create the sampling distribution of the mean?

Suppose that we draw all possible samples of size n from a population of size N. Suppose

further that we compute a mean score for each sample. In this way we create the

sampling distribution of the mean.

We know the following. The mean of the population ($\mu$) is equal to the mean of the sampling distribution ($\mu_{\bar{x}}$). And the standard error of the sampling distribution ($\sigma_{\bar{x}}$) is determined by the standard deviation of the population ($\sigma$), the population size, and the sample size. These relationships are shown in the equations below:

$\mu_{\bar{x}} = \mu$ and $\sigma_{\bar{x}} = \sigma \sqrt{1/n - 1/N}$

4. What is the sampling distribution of the proportion?

In a population of size N, suppose that the probability of the occurrence of an event (dubbed a "success") is P, and the probability of the event's non-occurrence (dubbed a "failure") is Q. From this population, suppose that we draw all possible samples of

size n. And finally, within each sample, suppose that we determine the proportion of

successes p and failures q. In this way, we create a sampling distribution of the

proportion.

5. Show the mathematical expression of the sampling distribution of the proportion.

We find that the mean of the sampling distribution of the proportion ($\mu_p$) is equal to the probability of success in the population (P). And the standard error of the sampling distribution ($\sigma_p$) is determined by the standard deviation of the population ($\sigma$), the population size, and the sample size. These relationships are shown in the equations below:

$\mu_p = P$ and $\sigma_p = \sigma \sqrt{1/n - 1/N} = \sqrt{PQ/n - PQ/N}$

where $\sigma = \sqrt{PQ}$.
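
As a quick numerical check of the expression above, the following sketch evaluates the standard error of a proportion. The function name and the input values (P = 0.4, n = 100, N = 10,000) are hypothetical, chosen only for illustration.

```python
import math


def proportion_standard_error(P, n, N):
    """Standard error of a sample proportion: sqrt(PQ/n - PQ/N), with Q = 1 - P."""
    Q = 1 - P
    return math.sqrt(P * Q / n - P * Q / N)


# Hypothetical inputs: 40% success rate, samples of 100 from a population of 10,000.
se = proportion_standard_error(P=0.4, n=100, N=10_000)
print(round(se, 4))
```

When N is very large relative to n, the second term becomes negligible and the result approaches $\sqrt{PQ/n}$.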

Estimation

1. When will the sampling distribution be normally distributed?

Generally, the sampling distribution will be approximately normally distributed if any of

the following conditions apply.

The population distribution is normal.

The sampling distribution is symmetric, unimodal, without outliers, and the sample

size is 15 or less.

The sampling distribution is moderately skewed, unimodal, without outliers, and the

sample size is between 16 and 40.

The sample size is greater than 40, without outliers.

2. Get the variability of the sample mean.

Suppose k possible samples of size n can be selected from a population of size N. The standard deviation of the sampling distribution is the average deviation between the k sample means and the true population mean, $\mu$. The standard deviation of the sample mean $\sigma_{\bar{x}}$ is:

$\sigma_{\bar{x}} = \sigma \sqrt{(1/n)(1 - n/N)[N/(N-1)]}$

where $\sigma$ is the standard deviation of the population, N is the population size, and n is the sample size. When the population size is much larger (at least 10 times larger) than the sample size, the standard deviation can be approximated by:

$\sigma_{\bar{x}} = \sigma / \sqrt{n}$
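
The exact formula can be checked by brute force on a tiny population. The sketch below is an illustration under stated assumptions: the five-element population is made up, samples are drawn without replacement, and $\sigma$ is taken as the population standard deviation (dividing by N).

```python
import itertools
import math
import statistics

# Made-up population of size N = 5; samples of size n = 2 without replacement.
population = [2, 4, 6, 8, 10]
N = len(population)
n = 2

# Mean of every one of the k = C(5, 2) = 10 possible samples.
sample_means = [statistics.fmean(s) for s in itertools.combinations(population, n)]

mu = statistics.fmean(population)
sigma = statistics.pstdev(population)   # population standard deviation

mean_of_means = statistics.fmean(sample_means)
sd_of_means = statistics.pstdev(sample_means)
formula_sd = sigma * math.sqrt((1 / n) * (1 - n / N) * (N / (N - 1)))

print(mean_of_means, mu)          # the two means agree
print(sd_of_means, formula_sd)    # the two standard deviations agree
```

Enumerating all samples is only feasible for very small populations, which is why the closed-form expression is used in practice.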

3. How can the standard error be calculated?

When the standard deviation of the population is unknown, the standard deviation of

the sampling distribution cannot be calculated. Under these circumstances, use the

standard error. The standard error (SE) provides an unbiased estimate of the standard

deviation. It can be calculated from the equation below.

$SE_{\bar{x}} = s \sqrt{(1/n)(1 - n/N)[N/(N-1)]}$

where s is the standard deviation of the sample, N is the population size, and n is the

sample size. When the population size is much larger (at least 10 times larger) than the

sample size, the standard error can be approximated by:

$SE_{\bar{x}} = s / \sqrt{n}$

4. How to find the confidence interval of the mean?

Identify a sample statistic. Use the sample mean to estimate the population mean.

Select a confidence level. The confidence level describes the uncertainty of a sampling

method. Often, researchers choose 90%, 95%, or 99% confidence levels; but any percentage can

be used.

Specify the confidence interval. The range of the confidence interval is defined by the sample statistic ± margin of error. And the uncertainty is denoted by the confidence level.
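
The three steps above can be sketched as follows. This is a simplified illustration, not a definitive procedure: the sample values are made up, the population is assumed to be much larger than the sample, and the critical value is taken from the standard normal distribution (for a sample this small, a t critical value would normally be used instead).

```python
import math
import statistics

# Made-up sample of 10 measurements.
sample = [12.1, 11.8, 12.4, 12.0, 11.9, 12.3, 12.2, 11.7, 12.5, 12.1]
confidence_level = 0.95

# Step 1: the sample statistic is the sample mean.
n = len(sample)
sample_mean = statistics.fmean(sample)

# Standard error of the mean: s / sqrt(n).
standard_error = statistics.stdev(sample) / math.sqrt(n)

# Step 2: critical value for the chosen confidence level (about 1.96 for 95%).
z = statistics.NormalDist().inv_cdf(1 - (1 - confidence_level) / 2)

# Step 3: interval = sample statistic +/- margin of error.
margin_of_error = z * standard_error
interval = (sample_mean - margin_of_error, sample_mean + margin_of_error)

print(round(sample_mean, 2), tuple(round(v, 2) for v in interval))
```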

Testing of Hypothesis in case of large & small samples

1. What is a statistical hypothesis?

A statistical hypothesis is an assumption about a population parameter. This

assumption may or may not be true.

2. What are the types of statistical hypothesis?

There are two types of statistical hypotheses.

Null hypothesis. The null hypothesis, denoted by H0, is usually the hypothesis that

sample observations result purely from chance.

Alternative hypothesis. The alternative hypothesis, denoted by H1 or Ha, is the

hypothesis that sample observations are influenced by some non-random cause.

3. What is hypothesis testing?

Statisticians follow a formal process to determine whether to reject a null hypothesis,

based on sample data. This process is called hypothesis testing.

4. Define the steps of hypothesis testing?

Hypothesis testing consists of four steps.

State the hypotheses. This involves stating the null and alternative hypotheses. The

hypotheses are stated in such a way that they are mutually exclusive. That is, if one is

true, the other must be false.

Formulate an analysis plan. The analysis plan describes how to use sample data to

evaluate the null hypothesis. The evaluation often focuses around a single test

statistic.

Analyze sample data. Find the value of the test statistic (mean score, proportion, t-score, z-score, etc.) described in the analysis plan.

Interpret results. Apply the decision rule described in the analysis plan. If the value

of the test statistic is unlikely, based on the null hypothesis, reject the null

hypothesis.

5. What are decision errors?

Two types of errors can result from a hypothesis test.

Type I error. A Type I error occurs when the researcher rejects a null hypothesis

when it is true. The probability of committing a Type I error is called the significance level. This probability is also called alpha, and is often denoted by $\alpha$.

Type II error. A Type II error occurs when the researcher fails to reject a null

hypothesis that is false. The probability of committing a Type II error is called beta, and is often denoted by $\beta$. The probability of not committing a Type II error is called the power of the test.

6. How to arrive at a decision on hypothesis?

The decision rule can be stated in two ways: with reference to a P-value or with reference to a region of acceptance.

P-value. The strength of evidence in support of a null hypothesis is measured by

the P-value. Suppose the test statistic is equal to S. The P-value is the probability of

observing a test statistic as extreme as S, assuming the null hypothesis is true. If the

P-value is less than the significance level, we reject the null hypothesis.

Region of acceptance. The region of acceptance is a range of values. If the test

statistic falls within the region of acceptance, the null hypothesis is not rejected. The

region of acceptance is defined so that the chance of making a Type I error is equal to

the significance level. The set of values outside the region of acceptance is called the region of rejection. If the test statistic falls within the region of rejection, the null hypothesis is rejected. In such cases, we say that the hypothesis has been rejected at the $\alpha$ level of significance.

7. Explain one-tailed and two-tailed tests?

A test of a statistical hypothesis, where the region of rejection is on only one side of

the sampling distribution, is called a one-tailed test. For example, suppose the null

hypothesis states that the mean is less than or equal to 10. The alternative hypothesis

would be that the mean is greater than 10. The region of rejection would consist of a

range of numbers located on the right side of the sampling distribution; that is, a set

of numbers greater than 10.

A test of a statistical hypothesis, where the region of rejection is on both sides of the

sampling distribution, is called a two-tailed test. For example, suppose the null

hypothesis states that the mean is equal to 10. The alternative hypothesis would be that

the mean is less than 10 or greater than 10. The region of rejection would consist of a

range of numbers located on both sides of the sampling distribution; that is, the

region of rejection would consist partly of numbers that were less than 10 and partly of

numbers that were greater than 10.
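
The difference between the two kinds of test shows up directly in the P-value. The sketch below is a minimal illustration assuming a z-test with a known population standard deviation; the function name and the numbers (sample mean 10.5, hypothesized mean 10, standard deviation 2, sample size 64) are hypothetical.

```python
import math
from statistics import NormalDist


def z_test_p_values(sample_mean, hypothesized_mean, sigma, n):
    """Return the z-score with its right-tailed and two-tailed P-values."""
    z = (sample_mean - hypothesized_mean) / (sigma / math.sqrt(n))
    std_normal = NormalDist()
    # One-tailed test (alternative: mean > hypothesized mean): area to the right of z.
    p_right = 1 - std_normal.cdf(z)
    # Two-tailed test (alternative: mean != hypothesized mean): area in both tails.
    p_two = 2 * (1 - std_normal.cdf(abs(z)))
    return z, p_right, p_two


z, p_one_tailed, p_two_tailed = z_test_p_values(10.5, 10, 2, 64)
print(round(z, 2), round(p_one_tailed, 4), round(p_two_tailed, 4))
```

For a positive z-score, the two-tailed P-value is twice the right-tailed one, so the same data give weaker evidence against the null hypothesis in a two-tailed test.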

F-Distribution and Analysis of variance (ANOVA)

1. What is ANOVA?

Analysis of variance (ANOVA) is a collection of statistical models and their associated

procedures in which the observed variance is partitioned into components due to

different sources of variation. ANOVA provides a statistical test of whether or not the

means of several groups are all equal.

2. What are the assumptions in ANOVA?

The following assumptions are made to perform ANOVA:

Independence of cases: this is an assumption of the model that simplifies the

statistical analysis.

Normality: the distributions of the residuals are normal.

Equality (or homogeneity) of variances, called homoscedasticity: the variance of

data in groups should be the same. Model-based approaches usually assume that the

variance is constant. The constant-variance property also appears in the

randomization (design-based) analysis of randomized experiments, where it is a

necessary consequence of the randomized design and the assumption of unit

treatment additivity (Hinkelmann and Kempthorne): If the responses of a

randomized balanced experiment fail to have constant variance, then the assumption

of unit treatment additivity is necessarily violated. It has been shown, however, that

the F-test is robust to violations of this assumption.

3. What is the logic of ANOVA?

Partitioning of the sum of squares

The fundamental technique is a partitioning of the total sum of squares (abbreviated SS)

into components related to the effects used in the model. For example, we show the model for a simplified ANOVA with one type of treatment at different levels:

$SS_{Total} = SS_{Error} + SS_{Treatments}$

So, the number of degrees of freedom (abbreviated df) can be partitioned in a similar way and specifies the chi-square distribution which describes the associated sums of squares:

$df_{Total} = df_{Error} + df_{Treatments}$

4. What is the F-test?

The F-test is used for comparisons of the components of the total deviation. For example, in one-way, or single-factor ANOVA, statistical significance is tested for by comparing the F test statistic (the treatment mean square divided by the error mean square)

$F = \frac{SS_{Treatments} / (I - 1)}{SS_{Error} / (n_T - I)}$

where

I = number of treatments

and

$n_T$ = total number of cases

to the F-distribution with $I - 1$, $n_T - I$ degrees of freedom. Using the F-distribution is a natural candidate because the test statistic is the quotient of two mean sums of squares which have a chi-square distribution.
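
A minimal sketch of the one-way F statistic follows, assuming the usual definition as the between-treatment mean square divided by the within-treatment (error) mean square. The function name and the three small groups are hypothetical; looking up the critical value of the F-distribution is left out.

```python
def one_way_anova_f(groups):
    """Return (F, df_treatments, df_error) for a one-way ANOVA."""
    all_values = [v for g in groups for v in g]
    n_total = len(all_values)        # n_T: total number of cases
    num_treatments = len(groups)     # I: number of treatments
    grand_mean = sum(all_values) / n_total
    group_means = [sum(g) / len(g) for g in groups]

    # Between-treatment sum of squares.
    ss_treatments = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means)
    )
    # Within-treatment (error) sum of squares.
    ss_error = sum((v - m) ** 2 for g, m in zip(groups, group_means) for v in g)

    df_treatments = num_treatments - 1
    df_error = n_total - num_treatments
    f_stat = (ss_treatments / df_treatments) / (ss_error / df_error)
    return f_stat, df_treatments, df_error


# Made-up data: three treatments with three cases each.
groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_stat, df1, df2 = one_way_anova_f(groups)
print(round(f_stat, 2), df1, df2)
```

Here the treatment sum of squares is 26 and the error sum of squares is 6, giving F = (26/2) / (6/6) = 13 with 2 and 6 degrees of freedom.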

5. Why is ANOVA helpful?

ANOVAs are helpful because they possess a certain advantage over a two-sample t-test.

Doing multiple two-sample t-tests would result in a largely increased chance of

committing a type I error. For this reason, ANOVAs are useful in comparing three or

more means.

Simple correlation and Regression

1. What is correlation?

Correlation is a measure of association between two variables. The variables are not

designated as dependent or independent.

2. What can be the values for correlation coefficient?

The value of a correlation coefficient can vary from -1 to +1. A -1 indicates a perfect negative correlation and a +1 indicates a perfect positive correlation. A correlation coefficient of zero means there is no linear relationship between the two variables.

3. What is the interpretation of the correlation coefficient values?

When there is a negative correlation between two variables, as the value of one variable

increases, the value of the other variable decreases, and vice versa. In other words, for a

negative correlation, the variables work opposite each other. When there is a positive

correlation between two variables, as the value of one variable increases, the value of the

other variable also increases. The variables move together.
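
The two extreme cases can be illustrated with a short sketch of the Pearson correlation coefficient. The function name and the data are hypothetical; the data were chosen so that one pair moves together exactly and the other moves in exactly opposite directions.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    covariation = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    variation_x = sum((a - mean_x) ** 2 for a in x)
    variation_y = sum((b - mean_y) ** 2 for b in y)
    return covariation / math.sqrt(variation_x * variation_y)


x = [1, 2, 3, 4, 5]
r_positive = pearson_r(x, [2, 4, 6, 8, 10])   # variables move together
r_negative = pearson_r(x, [10, 8, 6, 4, 2])   # variables move in opposite directions
print(r_positive, r_negative)
```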

4. What is simple regression?

Simple regression is used to examine the relationship between one dependent and one

independent variable. After performing an analysis, the regression statistics can be used

to predict the dependent variable when the independent variable is known. Regression

goes beyond correlation by adding prediction capabilities.

5. Explain the mathematical analysis of regression?

In the regression equation, y is always the dependent variable and x is always the

independent variable. Here are three equivalent ways to mathematically describe a

linear regression model.

y = intercept + (slope × x) + error

y = constant + (coefficient × x) + error

y = a + bx + e

The significance of the slope of the regression line is determined from the t-statistic. It is

the probability that the observed correlation coefficient occurred by chance if the true

correlation is zero. Some researchers prefer to report the F-ratio instead of the t-

statistic. The F-ratio is equal to the t-statistic squared.
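
To show how a and b in y = a + bx + e can be estimated, here is a minimal least-squares sketch. The function name and the five data points are hypothetical, and the sketch covers only the fitting and prediction steps, not the t-statistic or F-ratio.

```python
def fit_simple_regression(x, y):
    """Least-squares estimates of intercept a and slope b in y = a + b*x + e."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    slope = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y)) / sum(
        (xi - mean_x) ** 2 for xi in x
    )
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Made-up observations of an independent variable x and a dependent variable y.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]

a, b = fit_simple_regression(x, y)
predicted_at_6 = a + b * 6   # predict y for a known value of x
print(round(a, 2), round(b, 2), round(predicted_at_6, 2))
```

For these data the slope is 6/10 = 0.6 and the intercept is 4 - 0.6 × 3 = 2.2, so the prediction at x = 6 is 5.8.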

Business Forecasting

1. What is forecasting?

Forecasting is a prediction of what will occur in the future, and it is an uncertain

process. Because of the uncertainty, the accuracy of a forecast is as important as the

outcome predicted by the forecast.

2. What are the various business forecasting techniques?

3. How to model the Causal time series?

With multiple regressions, we can use more than one predictor. It is always best,

however, to be parsimonious, that is to use as few variables as predictors as necessary to

get a reasonably accurate forecast. Multiple regressions are best modeled with a commercial package such as SAS or SPSS. The forecast takes the form:

$Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_n X_n$,

where $\beta_0$ is the intercept, and $\beta_1, \beta_2, \dots, \beta_n$ are coefficients representing the contribution of the independent variables $X_1, X_2, \dots, X_n$.

4. What are the various smoothing techniques?

Simple Moving Average: One of the best-known forecasting methods is the moving average: simply take a certain number of past periods, add them together, and divide by the number of periods. The simple moving average (MA) is an effective and efficient approach provided the time series is stationary in both mean and variance. The following formula is used to find the moving average of order n, MA(n), for period t+1:

$MA_{t+1} = [D_t + D_{t-1} + \dots + D_{t-n+1}] / n$

where $D_t$ is the actual value in period t and n is the number of observations used in the calculation.

Weighted Moving Average: Weighted moving averages are very powerful and economical. They are widely used where repeated forecasts are required, using methods such as sum-of-the-digits and trend adjustment. As an example, a weighted moving average of order 3 is:

Weighted MA(3) $= w_1 D_t + w_2 D_{t-1} + w_3 D_{t-2}$

where the weights are any positive numbers such that $w_1 + w_2 + w_3 = 1$.
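
Both formulas can be sketched in a few lines. The function names, the demand series and the weights (0.5, 0.3, 0.2) are hypothetical; the weights are applied to the most recent observation first, matching the order of the terms in the weighted formula.

```python
def simple_moving_average_forecast(demand, n):
    """Forecast for period t+1: the mean of the last n observations."""
    if len(demand) < n:
        raise ValueError("need at least n observations")
    return sum(demand[-n:]) / n


def weighted_moving_average_forecast(demand, weights):
    """Forecast for period t+1; weights[0] applies to D_t, weights[1] to D_(t-1), ..."""
    if len(demand) < len(weights):
        raise ValueError("need at least as many observations as weights")
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    most_recent_first = demand[::-1][: len(weights)]
    return sum(w * d for w, d in zip(weights, most_recent_first))


# Made-up demand history, oldest first.
demand = [10, 12, 14, 16, 18]

sma = simple_moving_average_forecast(demand, 3)                   # (18 + 16 + 14) / 3
wma = weighted_moving_average_forecast(demand, [0.5, 0.3, 0.2])   # 9 + 4.8 + 2.8
print(sma, round(wma, 2))
```

Because the weighted version puts more weight on the latest observation, its forecast (16.6) reacts faster to the upward movement than the simple average (16).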

5. Explain exponential smoothing techniques?

Single Exponential Smoothing: It calculates the smoothed series as a damping

coefficient times the actual series plus 1 minus the damping coefficient times the lagged

value of the smoothed series. The extrapolated smoothed series is a constant, equal to

the last value of the smoothed series during the period when actual data on the

underlying series are available.

$F_{t+1} = \alpha D_t + (1 - \alpha) F_t$

where:

$D_t$ is the actual value

$F_t$ is the forecasted value

$\alpha$ is the weighting factor, which ranges from 0 to 1

t is the current time period.

Double Exponential Smoothing: It applies the process described above twice to

account for linear trend. The extrapolated series has a constant growth rate, equal to the

growth of the smoothed series at the end of the data period.
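
Single exponential smoothing can be sketched as a simple loop over the recurrence above. The function name and the demand series are hypothetical, and starting the recursion with the first observation as the first forecast is an assumption made for this sketch (other initialisations are possible).

```python
def single_exponential_smoothing(demand, alpha, initial_forecast=None):
    """Return forecasts F_1 .. F_(T+1) using F_(t+1) = alpha*D_t + (1 - alpha)*F_t."""
    if not 0 <= alpha <= 1:
        raise ValueError("alpha must lie between 0 and 1")
    # Assumption: the first forecast equals the first observation unless given.
    forecast = demand[0] if initial_forecast is None else initial_forecast
    forecasts = [forecast]
    for actual in demand:
        forecast = alpha * actual + (1 - alpha) * forecast
        forecasts.append(forecast)
    return forecasts


# Made-up demand history, oldest first.
forecasts = single_exponential_smoothing([10, 12, 14], alpha=0.5)
print(forecasts)   # the last entry is the forecast for the period after the data
```

A larger weighting factor makes the forecast follow recent values more closely; a smaller one smooths more heavily.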

6. What are time series models?

A time series is a set of numbers that measures the status of some activity over time. It is

the historical record of some activity, with measurements taken at equally spaced

intervals (exception: monthly) with a consistency in the activity and the method of

measurement.

Time Series Analysis

1. What is time series forecasting?

A time series can be represented as a curve that evolves over time. Forecasting the time series means that we extend the historical values into the future, where measurements are not yet available.

2. What are the different models in time series forecasting?

Simple moving average

Weighted moving average

Simple exponential smoothing

Holt's double exponential smoothing

Winters' triple exponential smoothing

Forecast by linear regression

3. Explain simple moving average and weighted moving average models?

Simple Moving Average: One of the best-known forecasting methods is the moving average: simply take a certain number of past periods, add them together, and divide by the number of periods. The simple moving average (MA) is an effective and efficient approach provided the time series is stationary in both mean and variance. The following formula is used to find the moving average of order n, MA(n), for period t+1:

$MA_{t+1} = [D_t + D_{t-1} + \dots + D_{t-n+1}] / n$

where $D_t$ is the actual value in period t and n is the number of observations used in the calculation.

Weighted Moving Average: Weighted moving averages are very powerful and economical. They are widely used where repeated forecasts are required, using methods such as sum-of-the-digits and trend adjustment. As an example, a weighted moving average of order 3 is:

Weighted MA(3) $= w_1 D_t + w_2 D_{t-1} + w_3 D_{t-2}$

where the weights are any positive numbers such that $w_1 + w_2 + w_3 = 1$.

4. Explain the exponential smoothing techniques?

Single Exponential Smoothing: It calculates the smoothed series as a damping

coefficient times the actual series plus 1 minus the damping coefficient times the lagged

value of the smoothed series. The extrapolated smoothed series is a constant, equal to

the last value of the smoothed series during the period when actual data on the

underlying series are available.

$F_{t+1} = \alpha D_t + (1 - \alpha) F_t$

where:

$D_t$ is the actual value

$F_t$ is the forecasted value

$\alpha$ is the weighting factor, which ranges from 0 to 1

t is the current time period.

Double Exponential Smoothing: It applies the process described above twice to account for linear trend. The extrapolated series has a constant growth rate, equal to the growth of the smoothed series at the end of the data period.

Triple Exponential Smoothing: It applies the process described above three times to

account for nonlinear trend.

5. How should one forecast by linear regression?

Regression is the study of relationships among variables, a principal purpose of which is

to predict, or estimate the value of one variable from known or assumed values of other

variables related to it.

Types of Analysis

Simple Linear Regression: A regression using only one predictor is called a simple

regression.

Multiple Regression: Where there are two or more predictors, multiple regression

analysis is employed.

Index Numbers

1. What are index numbers?

Index numbers are used to measure changes in some quantity which we cannot observe

directly, e.g., changes in business activity.

2. Describe the classification of index numbers?

Index numbers are classified in terms of the variables that they are intended to measure. In business, index number techniques are commonly used to measure four groups of variables: i) price, ii) quantity, iii) value, and iv) business activity.

3. What are simple and composite index numbers?

Simple index numbers: A simple index number is a number that measures a relative

change in a single variable with respect to a base.

Composite index numbers: A composite index number is a number that measures

an average relative change in a group of related variables with respect to a base.
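
The two definitions can be illustrated with a short sketch. The function names and the prices are hypothetical, the base period is given the conventional value of 100, and the composite index shown here is an unweighted average of the individual relatives, which is only one of several possible ways to build a composite index.

```python
def simple_index(current, base):
    """Relative change in a single variable, with the base period equal to 100."""
    return 100 * current / base


def composite_index_average_of_relatives(current_values, base_values):
    """Unweighted average of the simple indexes of several related variables."""
    relatives = [simple_index(c, b) for c, b in zip(current_values, base_values)]
    return sum(relatives) / len(relatives)


# Made-up prices: item 1 rises from 50 to 60, item 2 from 20 to 30.
price_index = simple_index(current=60, base=50)
composite_index = composite_index_average_of_relatives([60, 30], [50, 20])
print(price_index, composite_index)
```

An index of 120 means the price is 20% above its base-period level; the composite value of 135 is the average of the two item indexes, 120 and 150.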

4. What are price index numbers?

Price index numbers measure the relative changes in the prices of commodities between

two periods. Prices can be retail or wholesale.

5. What are quantity index numbers?

These index numbers are considered to measure changes in the physical quantity of

goods produced, consumed, or sold of an item or a group of items.

You might also like

- Aesthetic ElementsDocument137 pagesAesthetic ElementsJishu Twaddler D'CruxNo ratings yet

- Store Atmosphere in Web RetailingDocument14 pagesStore Atmosphere in Web RetailingJishu Twaddler D'CruxNo ratings yet

- E Commerce in IndiaDocument88 pagesE Commerce in Indiavivacaramirez17No ratings yet

- Introduction To Mathematics of FinanceDocument19 pagesIntroduction To Mathematics of FinanceMehroze ElahiNo ratings yet

- Eular Method TheoryDocument5 pagesEular Method Theory064 Jayapratha P RNo ratings yet

- PLSQL Programs (RDBMS)Document11 pagesPLSQL Programs (RDBMS)Devanshi PatelNo ratings yet

- Senior Section - First Round - SMO Singapore Mathematical Olympiad 2015Document7 pagesSenior Section - First Round - SMO Singapore Mathematical Olympiad 2015Steven RadityaNo ratings yet

- Thomas Fermi Model 1Document6 pagesThomas Fermi Model 1shankshendeNo ratings yet

- Sheet#2 - Cost Equation&Breakeven AnalysisDocument35 pagesSheet#2 - Cost Equation&Breakeven AnalysisMuhammad RakibNo ratings yet

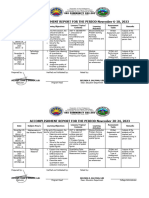

- Mike Ranada Accomplishment Report November 2023Document3 pagesMike Ranada Accomplishment Report November 2023MARICSON TEOPENo ratings yet

- CPP: Parabola: X Y Ax A at at B at at Acab T T Y AxDocument3 pagesCPP: Parabola: X Y Ax A at at B at at Acab T T Y AxSamridh GuptaNo ratings yet

- Ee-Module 1 PDFDocument22 pagesEe-Module 1 PDFravitejNo ratings yet

- W.P.energy Final ColourDocument89 pagesW.P.energy Final Colourmanan100% (1)

- Bra Ket & Linear AlgebraDocument4 pagesBra Ket & Linear AlgebracrguntalilibNo ratings yet

- IME634: Management Decision AnalysisDocument82 pagesIME634: Management Decision AnalysisBeHappy2106No ratings yet

- GB Sir Calculs PrbomDocument607 pagesGB Sir Calculs PrbomAnish VijayNo ratings yet

- Worksheet and Assignment TemplateDocument3 pagesWorksheet and Assignment TemplateJason PanganNo ratings yet

- Crystallography Notes11Document52 pagesCrystallography Notes11AshishKumarNo ratings yet

- MLT Assignment 1Document13 pagesMLT Assignment 1Dinky NandwaniNo ratings yet

- COT-Division of Integers-Lesson-Plan - 4 - 013117Document13 pagesCOT-Division of Integers-Lesson-Plan - 4 - 013117Melanie Briones CalizoNo ratings yet

- UNIT - I-FinalDocument67 pagesUNIT - I-FinalKarthiNo ratings yet

- The Simplex Algorithm and Goal ProgrammingDocument73 pagesThe Simplex Algorithm and Goal ProgrammingmithaNo ratings yet

- P2 Chp5 RadiansDocument28 pagesP2 Chp5 RadiansWaqas KhanNo ratings yet

- Lesson 4: 4. IntegrationDocument34 pagesLesson 4: 4. IntegrationChathura LakmalNo ratings yet

- GCSE Maths Paper 4 (Calculator) - Higher TierDocument20 pagesGCSE Maths Paper 4 (Calculator) - Higher TierO' Siun100% (12)

- Probability Theory Masc467: Prof. Amina SalehDocument39 pagesProbability Theory Masc467: Prof. Amina SalehmadaNo ratings yet

- Parabola Exercise 2 - ADocument19 pagesParabola Exercise 2 - AAtharva Sheersh PandeyNo ratings yet

- Unit - 1.2-15.07.2020-EC8533 - DTSP-Basics of DFTDocument13 pagesUnit - 1.2-15.07.2020-EC8533 - DTSP-Basics of DFTmakNo ratings yet

- Sigworth MatrixDocument10 pagesSigworth MatrixJeannette CraigNo ratings yet

- Algebra I Practice Test With AnswersDocument42 pagesAlgebra I Practice Test With AnswersSarazahidNo ratings yet

- Math1070 130notes PDFDocument6 pagesMath1070 130notes PDFPrasad KharatNo ratings yet

- The Golden Section and The Piano Sonatas of Mozart PDFDocument9 pagesThe Golden Section and The Piano Sonatas of Mozart PDFSanath SrivastavaNo ratings yet

- Final Copy - MD-UD Book List 2014-2015Document33 pagesFinal Copy - MD-UD Book List 2014-2015robokrish6No ratings yet