
Publisher: Taylor & Francis

Informa Ltd Registered in England and Wales Registered Number: 1072954 Registered office: Mortimer

House, 37-41 Mortimer Street, London W1T 3JH, UK

Journal of the American Statistical Association

Publication details, including instructions for authors and subscription information:

http://www.tandfonline.com/loi/uasa20

A Bayesian Semiparametric Model for Random-Effects Meta-Analysis

Deborah Burr and Hani Doss

Deborah Burr is Assistant Professor, Division of Epidemiology and Biometrics, School of Public Health (E-mail: burr@stat.ohio-state.edu), and Hani Doss is Professor, Department of Statistics (E-mail: doss@stat.ohio-state.edu), Ohio State University, Columbus, OH 43210. We are very grateful to the two reviewers, whose thorough reading and constructive criticism improved the paper. The research of Burr was supported by NIH/NIOSH grants 5 R01 OH03913 and 5 U01 OH07313. The research of Doss was supported by National Security Agency grant MDA904-03-1-0021 and NIH/NCI grant 03 CA10337-01.

Published online: 31 Dec 2011.

To cite this article: Deborah Burr and Hani Doss (2005): A Bayesian Semiparametric Model for Random-Effects Meta-

Analysis, Journal of the American Statistical Association, 100:469, 242-251

To link to this article: http://dx.doi.org/10.1198/016214504000001024

In meta-analysis, there is an increasing trend to explicitly acknowledge the presence of study variability through random-effects models. That is, one assumes that for each study there is a study-specific effect and one is observing an estimate of this latent variable. In a random-effects model, one assumes that these study-specific effects come from some distribution, and one can estimate the parameters of this distribution, as well as the study-specific effects themselves. This distribution is most often modeled through a parametric family, usually a family of normal distributions. The advantage of using a normal distribution is that the mean parameter plays an important role, and much of the focus is on determining whether or not this mean is 0. For example, it may be easier to justify funding further studies if it is determined that this mean is not 0. Typically, this normality assumption is made for the sake of convenience, rather than from some theoretical justification, and may not actually hold. We present a Bayesian model in which the distribution of the study-specific effects is modeled through a certain class of nonparametric priors. These priors can be designed to concentrate most of their mass around the family of normal distributions but still allow for any other distribution. The priors involve a univariate parameter that plays the role of the mean parameter in the normal model, and they give rise to robust inference about this parameter. We present a Markov chain algorithm for estimating the posterior distributions under the model. Finally, we give two illustrations of the use of the model.

KEY WORDS: Conditional Dirichlet process; Dirichlet process; Likelihood ratio; Markov chain Monte Carlo; Meta-analysis; Random effects.

1. INTRODUCTION

The following situation arises frequently in medical studies. Each of $m$ centers reports the outcome of a study that investigates the same medical issue, which for the sake of concreteness we will think of as a comparison between a new treatment and an old treatment. The results are inconsistent, with some studies being favorable to the new treatment and others indicating less promise, and one would like to arrive at an overall conclusion regarding the benefits of the new treatment.

Early work in meta-analysis involved pooling of effect-size estimates or combining of p values. However, because the centers may differ in their patient pool (e.g., overall health level, age, genetic makeup) or the quality of the health care they provide, it is now widely recognized that it is important to explicitly deal with the heterogeneity of the studies through random-effects models, in which for each center $i$ there is a center-specific "true" effect, represented by a parameter $\psi_i$. Suppose that, for each $i$, center $i$ gathers data $D_i$ from a distribution $P_i(\psi_i)$. This distribution depends on $\psi_i$ and also on other quantities, for example, the sample size as well as nuisance parameters specific to the $i$th center. For instance, $\psi_i$ might be the regression coefficient for the indicator of treatment in a Cox model, with $D_i$ the estimate of this parameter. As another example, $\psi_i$ might be the ratio of the survival probabilities at a fixed time for the new and old treatments, with $D_i$ a ratio of estimates of survival probabilities based on censored data. A third example, which is very common in epidemiological studies, is one in which $\psi_i$ is the odds ratio arising in case-control studies and $D_i$ is either an adjusted odds ratio based on a logistic regression model that involves relevant covariates or simply the usual odds ratio based on a $2 \times 2$ table.

A very commonly used random-effects model for dealing with this kind of situation is the following:

$$\text{conditional on } \psi_i, \quad D_i \stackrel{\text{ind}}{\sim} N(\psi_i, \sigma_i^2), \quad i = 1, \ldots, m, \tag{1a}$$

and

$$\psi_i \stackrel{\text{iid}}{\sim} N(\mu, \tau^2), \quad i = 1, \ldots, m. \tag{1b}$$

In (1b), $\mu$ and $\tau$ are unknown parameters. (The $\sigma_i$'s are also unknown, but we usually will have estimates $\hat{\sigma}_i$ along with the $D_i$'s, and estimation of the $\sigma_i$'s is secondary. This is discussed further in Sec. 2.)
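As an illustration of how data arise under model (1), the following minimal sketch simulates the latent effects and the observed estimates. The function name `simulate_model_1`, the seed, and the numerical values are hypothetical choices made for this example only; the within-study standard deviations $\sigma_i$ are treated as known.

```python
import random

def simulate_model_1(mu, tau, sigmas, seed=0):
    """Simulate model (1): psi_i ~ N(mu, tau^2), then D_i | psi_i ~ N(psi_i, sigma_i^2)."""
    rng = random.Random(seed)
    # (1b): latent study-specific effects
    psi = [rng.gauss(mu, tau) for _ in sigmas]
    # (1a): observed estimate for each study, given its latent effect
    data = [rng.gauss(p, s) for p, s in zip(psi, sigmas)]
    return psi, data

# Hypothetical values: four studies with differing precision
psi, data = simulate_model_1(mu=0.2, tau=0.5, sigmas=[0.1, 0.3, 0.2, 0.4])
```

In practice only `data` and estimates of the `sigmas` are available; `psi`, `mu`, and `tau` are the unknowns about which inference is made.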

Model (1) has been considered extensively in the meta-analysis literature, with much of the work focused on the case where $\psi_i$ is the difference between two binomial probabilities or an odds ratio based on two binomial probabilities. In the frequentist setting, the classic article of DerSimonian and Laird (1986) gave formulas for the maximum likelihood estimates of $\mu$ and $\tau$ and a test for the null hypothesis that $\mu = 0$. In a Bayesian analysis, a joint prior is put on the pair $(\mu, \tau)$. Bayesian approaches have been developed by a number of authors, including Skene and Wakefield (1990), DuMouchel (1990), Morris and Normand (1992), Carlin (1992), and Smith, Spiegelhalter, and Thomas (1995). As is discussed by these authors, a key advantage of the Bayesian approach is that inference concerning the center-specific effects $\psi_i$ is carried out in a natural manner through consideration of the posterior distributions of these parameters. [When Markov chain Monte Carlo (MCMC) is used, estimates of these posterior distributions typically arise as part of the output. From the frequentist perspective, estimation of the study-specific effects $\psi_i$ is much more difficult. An important application arises in small area estimation; see Ghosh and Rao 1994 for a review.]

The approximation of $P_i(\psi_i)$ by a normal distribution in (1a) is typically supported by some theoretical result, for example,

the asymptotic normality of maximum likelihood estimates. By contrast, the normality statement in (1b) is a modeling assumption, which generally is made for the sake of convenience and does not have any theoretical justification. One would like to replace (1) with a model of the following sort:

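$$\text{conditional on } \psi_i, \quad D_i \stackrel{\text{ind}}{\sim} N(\psi_i, \sigma_i^2), \quad i = 1, \ldots, m, \tag{2a}$$

$$\psi_i \stackrel{\text{iid}}{\sim} F, \quad i = 1, \ldots, m, \tag{2b}$$

and

$$F \sim \pi, \tag{2c}$$

where $\pi$ is a nonparametric prior.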

This article is motivated by a situation that we recently encountered (Burr, Doss, Cooke, and Goldschmidt-Clermont 2003), in which we considered 12 articles appearing in medical journals, each of which reported on a case-control study that aimed to determine whether or not the presence of a certain genetic trait was associated with an increased risk of coronary heart disease. Each study considered a group of individuals with coronary heart disease and another group with no history of heart disease. The proportion having the genetic trait in each group was noted, and an odds ratio was calculated. The studies gave rather inconsistent results (with p values for the two-sided test that the log odds ratio is 0 ranging from .005 to .999, and the reported log odds ratios themselves ranging from +1.06 to −.38), giving rise to sharply conflicting opinions on whether or not there could exist an association between the genetic trait and susceptibility to heart disease. It was clear that the studies had different study-specific effects, and it appeared that these did not follow a normal distribution, so that it was more appropriate to use a model like (2) than a model based on (1). In our analysis, in the terminology of model (1), the issue of main interest was not estimation of the center-specific $\psi_i$'s, but rather resolving the basic question of whether the overall mean $\mu$ is different from 0, because this will determine whether or not carrying out further studies is justified.

To deal with this issue when considering a model of the form (2), the prior $\pi$ in (2c) must involve a univariate parameter that can play a role analogous to that of $\mu$ in (1b). The purpose of this article is to present a simple such model based on mixtures of "conditional Dirichlet processes." The article is organized as follows. In Section 2 we review a standard model based on mixtures of Dirichlet processes and explain its limitations in the meta-analysis setting that we have in mind. In Section 3 we present the model based on mixtures of conditional Dirichlet processes, explain its rationale, and describe an MCMC algorithm for estimating the posterior distribution. We also discuss the connection between the posterior distributions under this model and the standard model. In Section 4 we give two examples that illustrate various issues. We give a proof of a likelihood ratio formula stated in Section 3 in the Appendix.

2. A NONPARAMETRIC BAYESIAN MODEL FOR RANDOM EFFECTS

In a Bayesian version of model (1) where a prior is put on the pair $(\mu, \tau)$, the most common choice is the "normal/inverse gamma" prior [see, e.g., Berger 1985, p. 288, and also the description in (3)], which is conjugate to the family $N(\mu, \tau^2)$. For the problem where the $\psi_i$'s are actually observed, the posterior distribution of $(\mu, \tau)$ is available in closed form. In the present situation in which the $\psi_i$'s are latent variables on which we have only partial information, there is no closed-form expression for the posterior, although it is very easy to write an MCMC algorithm to estimate this posterior, for example, in BUGS (Spiegelhalter, Thomas, Best, and Gilks 1996).

A convenient choice for a nonparametric Bayesian version of this is a model based on mixtures of Dirichlet processes (Antoniak 1974). Before proceeding, we give a brief review of this class of priors. Let $\{H_\theta;\ \theta \in \Theta \subset \mathbb{R}^k\}$ be a parametric family of distributions on the real line, and let $\lambda$ be a distribution on $\Theta$. Suppose that $M_\theta > 0$ for each $\theta$, and define $\alpha_\theta = M_\theta H_\theta$. If $\theta$ is chosen from $\lambda$, and then $F$ is chosen from $\mathcal{D}_{\alpha_\theta}$, the Dirichlet process with parameter measure $\alpha_\theta$ (Ferguson 1973, 1974), then we say that the prior on $F$ is a mixture of Dirichlet processes [with parameter $(\{\alpha_\theta\}_{\theta \in \Theta}, \lambda)$]. Although it is sometimes useful to allow $M_\theta$ to depend on $\theta$, for the sake of clarity of exposition we assume that $M_\theta$ does not vary with $\theta$, and we denote the common value by $M$. In this case, $M$ can be interpreted as a precision parameter that indicates the degree of concentration of the prior on $F$ around the parametric family $\{H_\theta;\ \theta \in \Theta\}$. In a somewhat oversimplified but nevertheless useful view of this class of priors, we think of the family $\{H_\theta;\ \theta \in \Theta\}$ as a "line" (of dimension $k$) in the infinite-dimensional space of cdfs, and imagine "tubes" around this line. For large values of $M$, the mixture of Dirichlet processes puts most of its mass in narrow tubes, whereas for small values of $M$, the prior is more diffuse.

If we take the parametric family $\{H_\theta\}$ to be the $N(\mu, \tau^2)$ family and $\lambda$ to be the normal/inverse gamma conjugate prior, then the model is expressed hierarchically as follows:

$$\text{conditional on } \psi_i, \quad D_i \stackrel{\text{ind}}{\sim} N(\psi_i, \sigma_i^2), \quad i = 1, \ldots, m; \tag{3a}$$

$$\text{conditional on } F, \quad \psi_i \stackrel{\text{iid}}{\sim} F, \quad i = 1, \ldots, m; \tag{3b}$$

$$\text{conditional on } \mu, \tau, \quad F \sim \mathcal{D}_{M N(\mu, \tau^2)}; \tag{3c}$$

$$\text{conditional on } \tau, \quad \mu \sim N(c, d\tau^2); \tag{3d}$$

and

$$\gamma = 1/\tau^2 \sim \text{gamma}(a, b). \tag{3e}$$

In (3d) and (3e), $a, b, d > 0$, and $-\infty < c < \infty$ are arbitrary but fixed. The $\sigma_i$'s are unknown, and it is common to use estimates $\hat{\sigma}_i$ instead. If the studies do not involve small samples, then this substitution has little effect (DerSimonian and Laird 1986); otherwise, one will want to also put priors on the $\sigma_i$'s. This kind of model has been used successfully to model random effects in many situations. An early version of the model and a Gibbs sampling algorithm for estimating posterior distributions were developed by Escobar (1988, 1994). Let $\boldsymbol{\psi} = (\psi_1, \ldots, \psi_m)$ and $\mathbf{D} = (D_1, \ldots, D_m)$. In essence, the Gibbs sampler runs over the vector of latent variables $\boldsymbol{\psi}$ and the pair $(\mu, \tau)$, and the result is a sample $(\boldsymbol{\psi}^{(g)}, \mu^{(g)}, \tau^{(g)})$, $g = 1, \ldots, G$, which are approximately distributed according to the conditional distribution of $(\boldsymbol{\psi}, \mu, \tau)$ given the data. (See the papers in Dey, Müller, and Sinha 1998 and Neal 2000 for recent developments concerning models of this kind.)
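To make the role of the precision parameter $M$ in (3b) and (3c) concrete, the sketch below draws $\psi_1, \ldots, \psi_m$ marginally from a Dirichlet process with base measure $M\,N(\mu, \tau^2)$ using the Pólya urn scheme of Blackwell and MacQueen (1973). It is only an illustration of the prior, not the Gibbs sampler discussed in the text; the function name and the numerical settings are hypothetical.

```python
import random

def polya_urn_sample(m, M, mu, tau, seed=0):
    """Draw psi_1..psi_m iid from F, with F ~ DP(M * N(mu, tau^2)) integrated out."""
    rng = random.Random(seed)
    psi = []
    for i in range(m):
        # With probability M / (M + i) take a fresh draw from the base
        # distribution; otherwise repeat one of the i earlier values.
        if rng.random() < M / (M + i):
            psi.append(rng.gauss(mu, tau))
        else:
            psi.append(rng.choice(psi))
    return psi

# Small M gives few distinct values (heavy clustering); large M gives
# draws that are close to an iid normal sample.
clustered = polya_urn_sample(m=50, M=0.1, mu=0.0, tau=1.0)
diffuse = polya_urn_sample(m=50, M=1000.0, mu=0.0, tau=1.0)
```

Comparing `len(set(clustered))` with `len(set(diffuse))` shows the "tube" picture in terms of ties: the smaller $M$ is, the further typical draws of $F$ are from a normal distribution.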

In this article we use $\mathcal{L}$ generically to denote distribution or law. We adopt the convention that subscripting a distribution indicates conditioning. Thus if $U$ and $V$ are two random variables, then both $\mathcal{L}(U \mid V, \mathbf{D})$ and $\mathcal{L}_{\mathbf{D}}(U \mid V)$ mean the same thing. However, we use $\mathcal{L}_{\mathbf{D}}(U \mid V)$ when we want to focus attention on the conditioning on $V$. This is useful in describing steps in a Gibbs sampler, for example, when $\mathbf{D}$ is fixed throughout.

As mentioned earlier, in the kind of meta-analysis that we have in mind, the question of principal interest is whether or not the mean of $F$, the distribution of the study-specific effect, is different from 0. Note that in model (3), $\mu$ is not equal to $\eta = \eta(F) = \int x \, dF(x)$, the mean of $F$, and so inference on $\eta$ is not given automatically as part of the Gibbs sampler output.

The method of Gelfand and Kottas (2002) (see also Muliere and Tardella 1998) can be used to estimate the posterior distribution of $\eta$. This method is based on Sethuraman's (1994) construction, which represents the Dirichlet process as an infinite sum of atoms, with an explicit description of their locations and sizes. In brief, the method of Gelfand and Kottas (2002) involves working with a truncated version of this infinite sum. How far out into the sum one needs to go to get accurate results depends on $M + m$, the parameters of the model, and on the data. In particular, the truncation point needs to grow with the quantity $M + m$, and the algorithm gives good results when this quantity is small or moderate but can be quite slow when it is large. In principle, the truncation point can be chosen through informal calculations, but it is difficult to do so when implementing an MCMC algorithm, because then the parameters of the Dirichlet process are perpetually changing. [We mention that we have used Sethuraman's construction to generate a Gibbs sampler in previous work (Doss 1994). The situation encountered in that article was quite different, however, in that there, we needed to generate random variables from an $F$ with a Dirichlet distribution. The algorithm that we used required us to generate only a segment of $F$ in which the number of terms was finite but random, and the resulting random variables turned out to have the distribution $F$ exactly.]
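The dependence of the truncation point on the total mass can be seen in a minimal sketch of a truncated Sethuraman (stick-breaking) sum for the mean of $F$. This is not the Gelfand and Kottas (2002) algorithm itself; it only draws one $F$ from a Dirichlet process with a normal base distribution and total mass `M`, truncates at `n_atoms` terms, and reports the probability mass left unassigned. The function name and settings are hypothetical.

```python
import random

def truncated_dp_mean(M, mu, tau, n_atoms=500, seed=0):
    """Approximate eta = integral of x dF(x) for one draw F ~ DP(M * N(mu, tau^2))."""
    rng = random.Random(seed)
    remaining = 1.0  # stick length not yet assigned to any atom
    eta = 0.0
    for _ in range(n_atoms):
        v = rng.betavariate(1.0, M)        # stick-breaking proportion
        weight = remaining * v             # size of this atom
        eta += weight * rng.gauss(mu, tau) # atom location from the base distribution
        remaining *= 1.0 - v
    return eta, remaining

# With the same number of atoms, the leftover mass is negligible for a
# small total mass but not for a large one.
eta_small, rem_small = truncated_dp_mean(M=1.0, mu=0.0, tau=1.0)
eta_large, rem_large = truncated_dp_mean(M=200.0, mu=0.0, tau=1.0)
```

In a posterior computation the total mass is $M + m$ rather than $M$, which is why a fixed truncation point becomes inadequate as $M + m$ grows.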

3. A SEMIPARAMETRIC MODEL AND ALGORITHM FOR RANDOM-EFFECTS META-ANALYSIS

As an alternative to the class of mixtures of Dirichlet processes in model (3), we may use a class of mixtures of conditional Dirichlet processes, in which $\mu$ is the median of $F$ with probability 1. This can be done by using a construction given by Doss (1985), which is reviewed here. Conditional Dirichlets have also been used to deal with identifiability issues by Newton, Czado, and Chappell (1996).

3.1 A Model Based on Mixtures of Conditional Dirichlet Processes

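Let $\alpha$ be a finite measure on the real line, and let $\mu \in (-\infty, \infty)$ be fixed. Let $\alpha^{\mu}_{-}$ and $\alpha^{\mu}_{+}$ be the restrictions of $\alpha$ to $(-\infty, \mu)$ and $(\mu, \infty)$, in the following sense: For any set $A$,

$$\alpha^{\mu}_{-}(A) = \alpha\{A \cap (-\infty, \mu)\} + \tfrac{1}{2}\,\alpha\bigl(A \cap \{\mu\}\bigr) \quad \text{and} \quad \alpha^{\mu}_{+}(A) = \alpha\{A \cap (\mu, \infty)\} + \tfrac{1}{2}\,\alpha\bigl(A \cap \{\mu\}\bigr).$$

Choose $F_{-} \sim \mathcal{D}_{\alpha^{\mu}_{-}}$ and $F_{+} \sim \mathcal{D}_{\alpha^{\mu}_{+}}$ independently, and form $F$ by

$$F(t) = \tfrac{1}{2} F_{-}(t) + \tfrac{1}{2} F_{+}(t). \tag{4}$$

The distribution of $F$ is denoted by $\mathcal{D}^{\mu}_{\alpha}$. Note that with probability 1, the median of $F$ is $\mu$. The prior $\mathcal{D}^{\mu}_{\alpha}$ has the following interpretation: If $F \sim \mathcal{D}_{\alpha}$, then $\mathcal{D}^{\mu}_{\alpha}$ is the conditional distribution of $F$ given that the median of $F$ is $\mu$. Note that if $\alpha$ has median $\mu$, then $E(F(t)) = \alpha(t)/\alpha\{(-\infty, \infty)\}$, as before, and furthermore, the quantity $\alpha\{(-\infty, \infty)\}$ continues to play the role of a precision parameter.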
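The construction in (4) can be sketched numerically by building $F_{-}$ and $F_{+}$ from two truncated stick-breaking sums and giving each half of the total probability. The sketch assumes $\alpha = M\,N(\mu, \tau^2)$, so that each restriction has total mass $M/2$ and a half-normal base distribution on its side of $\mu$; the function name, the truncation level, and the numerical settings are hypothetical.

```python
import random

def sample_conditional_dp(M, mu, tau, n_atoms=200, seed=0):
    """One (truncated) draw of F from the conditional Dirichlet process with
    alpha = M * N(mu, tau^2), returned as a list of (location, weight) atoms."""
    rng = random.Random(seed)
    atoms = []
    for sign in (-1.0, 1.0):          # sign -1 builds F_minus, +1 builds F_plus
        remaining = 1.0
        for _ in range(n_atoms):
            v = rng.betavariate(1.0, M / 2.0)   # each half has total mass M/2
            # half-normal location on the chosen side of mu
            location = mu + sign * abs(rng.gauss(0.0, tau))
            # factor 0.5 implements F = (1/2) F_minus + (1/2) F_plus
            atoms.append((location, 0.5 * remaining * v))
            remaining *= 1.0 - v
    return atoms

atoms = sample_conditional_dp(M=2.0, mu=0.3, tau=1.0)
mass_below = sum(w for x, w in atoms if x < 0.3)
```

Up to truncation error, `mass_below` equals 1/2, which is the statement that the median of $F$ is $\mu$ with probability 1.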

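We use a conditional Dirichlet process instead of a Dirichlet process; thus model (3) is replaced by the following:

$$\text{conditional on } \psi_i, \quad D_i \stackrel{\text{ind}}{\sim} N(\psi_i, \sigma_i^2), \quad i = 1, \ldots, m; \tag{5a}$$

$$\text{conditional on } F, \quad \psi_i \stackrel{\text{iid}}{\sim} F, \quad i = 1, \ldots, m; \tag{5b}$$

$$\text{conditional on } \mu, \tau, \quad F \sim \mathcal{D}^{\mu}_{M N(\mu, \tau^2)}; \tag{5c}$$

$$\text{conditional on } \tau, \quad \mu \sim N(c, d\tau^2); \tag{5d}$$

and

$$\gamma = 1/\tau^2 \sim \text{gamma}(a, b). \tag{5e}$$

If we wish to have a dispersed prior on $(\mu, \tau)$, then we may take $a$ and $b$ small, $c = 0$, and $d$ large.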

Before proceeding, we remark on the objectives when using a model of the form of (5). Obtaining good estimates of the entire mixture distribution $F$ usually requires very large sample sizes (here the number of studies). It will often be the case that the number of studies is not particularly large, and when using model (5), the focus will then be on inference concerning the univariate parameter $\mu$. For the case where the $\psi_i$'s are observed completely (equivalent to the model where each study involves an infinite sample size), a model of the form of (5) reduces to the model studied by Doss (1985), which was introduced for the purpose of robust estimation of $\mu$. For this case, the posterior distribution of $\mu$ is given in Proposition 1 in the next section. Assuming that the $\psi_i$'s are all distinct, this posterior has a density that is essentially a product of two terms, one that shrinks it toward the mean of the $\psi_i$'s and another that shrinks it toward their median. The mean of this posterior has good small sample (i.e., small $m$) frequentist properties (Doss 1983, 1985). We discuss this in more detail in Section 3.2, where we also discuss the ramifications for model (5), where the $\psi_i$'s are not necessarily all distinct. The general effect is illustrated in Section 4.1, where we compare models (3) and (5).

To conclude, there are two reasons to use this model. First, as mentioned earlier, in (5) the parameter $\mu$ has a well-defined role, and as we discuss in Section 3.2, is easily estimated because it emerges as part of the Gibbs sampler output. Second, for model (5), the posterior distribution of $\mu$ is not heavily influenced by a few outlying studies.

3.2 A Gibbs Sampler for Estimating the Posterior Distribution

There is no known way to obtain the posterior distribution in closed form for model (5); one must use MCMC. Markov chain methods for estimating the posterior distribution of $F$ given $D_i$,

$i = 1, \ldots, m$, in a model like (3) are now well established and are based on Escobar's (1994) use of the Pólya urn scheme of Blackwell and MacQueen (1973). It is possible to improve Escobar's (1994) original algorithm to substantially speed up convergence; the recent article by Neal (2000) reviewed previous work and presented new ideas. Here we describe a basic Gibbs sampling algorithm for model (5). It is possible to develop versions of some of the algorithms described by Neal (2000) that can be implemented for model (5), using as a basis the formulas developed in this section, but we do not do this here.

We are interested primarily in the posterior distribution of $\mu$, but also are interested in other posterior distributions, such as $\mathcal{L}_{\mathbf{D}}(\psi_{m+1})$ and $\mathcal{L}_{\mathbf{D}}(F)$. Here $\psi_{m+1}$ denotes the study-specific effect for a future study, so that $\mathcal{L}_{\mathbf{D}}(\psi_{m+1})$ is a predictive distribution. All of these can be estimated if we can generate a sample from $\mathcal{L}_{\mathbf{D}}(\boldsymbol{\psi}, \mu, \tau)$. Our Gibbs sampler on $(\boldsymbol{\psi}, \mu, \tau)$ has cycle $m + 1$ and proceeds by updating $\psi_1, \ldots, \psi_m$ and then the pair $(\mu, \tau)$.

One of the steps of our Gibbs sampling algorithm requires the following result, which gives the posterior distribution of the mixing parameter for the situation in which the $\psi_i$'s are known. Let $H$ be a distribution function. Define $H_\theta(x) = H\bigl((x - \mu)/\tau\bigr)$ for $\theta = (\mu, \tau)$. Let $\boldsymbol{\psi} = (\psi_1, \ldots, \psi_m)$. We use $\boldsymbol{\psi}^{(-i)}$ to denote $(\psi_1, \ldots, \psi_{i-1}, \psi_{i+1}, \ldots, \psi_m)$. (For the sake of completeness, we give the result for the general case, where $M_\theta$ depends on $\theta$.)

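Proposition 1. Assume that $H$ is absolutely continuous, with continuous density $h$, and that the median of $H$ is 0. If $\psi_1, \ldots, \psi_m$ are iid $\sim F$, and if the prior on $F$ is the mixture of conditional Dirichlets $\int \mathcal{D}^{\mu}_{M_\theta H_\theta} \, \lambda(d\theta)$, then the posterior distribution of $\theta$ given $\psi_1, \ldots, \psi_m$ is absolutely continuous with respect to $\lambda$ and is given by

$$\lambda_{\boldsymbol{\psi}}(d\theta) = c(\boldsymbol{\psi}) \Bigl\{ \prod^{\text{dist}} h\Bigl(\frac{\psi_i - \mu}{\tau}\Bigr) \Bigr\} K(\boldsymbol{\psi}, \theta) \Bigl[ \frac{(M_\theta)^{\#(\boldsymbol{\psi})} \, \Gamma(M_\theta)}{\Gamma(M_\theta + n)} \Bigr] \lambda(d\theta), \tag{6}$$

where

$$K(\boldsymbol{\psi}, \theta) = \Bigl[ \Gamma\Bigl(M_\theta/2 + \sum_{i=1}^{m} I(\psi_i < \mu)\Bigr) \, \Gamma\Bigl(M_\theta/2 + \sum_{i=1}^{m} I(\psi_i > \mu)\Bigr) \Bigr]^{-1}, \tag{7}$$

the "dist" in the product indicates that the product is taken over distinct values only, $\#(\boldsymbol{\psi})$ is the number of distinct values in the vector $\boldsymbol{\psi}$, $\Gamma$ is the gamma function, and $c(\boldsymbol{\psi})$ is a normalizing constant.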

Proposition 1 is proved through a computation much like the one used to prove theorem 1 of Doss (1985). We need to proceed with that calculation with the linear Borel set $A$ replaced by the product of Borel sets $A_1 \times A_2$, where $A_1$ is a subset of the reals and $A_2$ is a subset of the strictly positive reals, and use the fact that these rectangles form a determining class.

Note that if $M_\theta$ does not depend on $\theta$, then in (6) the term in square brackets is a constant that can be absorbed into the overall normalizing constant, and $K(\boldsymbol{\psi}, \theta)$ depends on $\theta$ only through $\mu$ [by slight abuse of notation we then write $K(\boldsymbol{\psi}, \mu)$]. In this case, (6) is similar to the familiar formula that says that the posterior is proportional to the likelihood times the prior, except that the likelihood is based on the distinct observations only, and we now also have the multiplicative factor $K(\boldsymbol{\psi}, \mu)$. If the prior on $F$ was the mixture of Dirichlets $\int \mathcal{D}_{M H_\theta} \, \lambda(d\theta)$, as opposed to a mixture of conditional Dirichlets, then the posterior distribution of $\theta$ would be the same as (6), but without the factor $K(\boldsymbol{\psi}, \mu)$ (lemma 1 of Antoniak 1974).

The factor $K(\boldsymbol{\psi}, \mu)$ plays an interesting role. Viewed as a function of $\mu$, $K(\boldsymbol{\psi}, \mu)$ has a maximum when $\mu$ is at the median of the $\psi_i$'s, and as $\mu$ moves away from the sample median in either direction, it is constant between the observations and decreases by jumps at each observation. It has the general effect of shrinking the posterior distribution of $\mu$ toward the sample median, and does so more strongly when $M$ is small.
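The step-function behavior of $K(\boldsymbol{\psi}, \mu)$ can be checked directly from (7). The sketch below evaluates $\log K$ for the case in which $M$ does not depend on $\theta$, working on the log scale with `math.lgamma` to avoid overflow; the function name and the example values are hypothetical.

```python
import math

def log_K(psi, mu, M):
    """Log of K(psi, mu) in (7), assuming a constant precision parameter M."""
    n_below = sum(1 for p in psi if p < mu)
    n_above = sum(1 for p in psi if p > mu)
    # K is the reciprocal of a product of two gamma functions
    return -(math.lgamma(M / 2 + n_below) + math.lgamma(M / 2 + n_above))

psi = [-1.0, 0.0, 0.5, 2.0, 10.0]   # sample median is 0.5
at_median = log_K(psi, 0.5, M=1.0)
just_right = log_K(psi, 0.6, M=1.0)
far_right = log_K(psi, 5.0, M=1.0)
```

Here `at_median` exceeds `just_right`, which in turn exceeds `far_right`, and the value is unchanged as `mu` moves between two adjacent observations. Note that the outlying value 10.0 affects $K$ only through its sign relative to `mu`, which is the source of the robustness discussed in the text.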

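Consider the term $L(\boldsymbol{\psi}, \theta) = \prod^{\text{dist}} h\bigl((\psi_i - \mu)/\tau\bigr)$ in (6). When implementing the Gibbs sampler, the effect of $L(\boldsymbol{\psi}, \theta)$ diminishes as $M$ decreases. This is because when $M$ is small, the vector $\boldsymbol{\psi}$ is partitioned into a batch of clusters, with the $\psi_i$'s in the same cluster being equal. As a consequence, for small $M$, inference on $\mu$ is stable in model (5) [because of the presence of the term $K(\boldsymbol{\psi}, \mu)$] but not in model (3). It is an interesting fact that for small $M$, the decreased relevance of the term $L(\boldsymbol{\psi}, \theta)$ creates problems for the estimation of $\mu$, but not for the estimation of $\eta = \int x \, dF(x)$. We see this as follows. The conditional distribution of $F$ given $\psi_1, \ldots, \psi_m$ is equal to

$$\mathcal{L}_{\boldsymbol{\psi}}(F) = \int \mathcal{L}_{\boldsymbol{\psi}}(F \mid \theta) \, \lambda_{\boldsymbol{\psi}}(d\theta) = \int \mathcal{D}_{M H_\theta + \sum_{i=1}^{m} \delta_{\psi_i}} \, \lambda_{\boldsymbol{\psi}}(d\theta),$$

and for small $M$, this is essentially equal to $\mathcal{D}_{\sum_{i=1}^{m} \delta_{\psi_i}}$; that is, the mixing parameter plays no role.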

Before giving the detailed description of the algorithm, we outline the main steps involved.

Step 1. Update $\boldsymbol{\psi}$. For $i = 1, \ldots, m$, we generate successively $\psi_i$ given the current values of $\psi_j$, $j \neq i$, $\mu$, $\tau$, and the data. The conditional distribution involved is a mixture of a normal truncated to the interval $(-\infty, \mu)$, another normal truncated to the interval $(\mu, \infty)$, and point masses at the $\psi_j$, $j \neq i$.

Step 2. Update $(\mu, \tau)$. To generate $(\mu, \tau)$ given $\boldsymbol{\psi}$, we perform two steps:

a. Generate $\mu$ from its marginal distribution given $\boldsymbol{\psi}$ ($\tau$ being integrated out). This is proportional to a t-distribution times an easily calculated factor.

b. Generate $\tau$ from its conditional distribution given $\mu$ and $\boldsymbol{\psi}$. The distribution of $1/\tau^2$ is a gamma.

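We now describe the algorithm in detail. We first discuss $\mathcal{L}_{\mathbf{D}}(\psi_i \mid \boldsymbol{\psi}^{(-i)}, \mu, \tau)$, where $\boldsymbol{\psi}^{(-i)} = (\psi_1, \ldots, \psi_{i-1}, \psi_{i+1}, \ldots, \psi_m)$. Rewriting this as

$$\mathcal{L}_{\{\boldsymbol{\psi}^{(-i)}, \mu, \tau\}}(\psi_i \mid \mathbf{D}) = \mathcal{L}_{\{\boldsymbol{\psi}^{(-i)}, \mu, \tau\}}(\psi_i \mid D_i)$$

makes it simple to see that we can calculate this using a standard formula for the posterior distribution (i.e., the posterior is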

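proportional to the likelihood times the prior). Using the well-known fact that

$$\text{if } X_1, \ldots, X_m \text{ are iid} \sim F, \ F \sim \mathcal{D}_{MH}, \quad \text{then} \quad \mathcal{L}\bigl(X_i \mid X^{(-i)}\bigr) = \frac{MH + \sum_{j \neq i} \delta_{X_j}}{M + m - 1},$$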

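it is not too difficult to see that the prior is

$$\mathcal{L}_{\{\boldsymbol{\psi}^{(-i)}, \mu, \tau\}}(\psi_i) = \frac{1}{2} \, \frac{M N^{\mu}_{-}(\mu, \tau^2) + \sum_{j \neq i;\ \psi_j < \mu} \delta_{\psi_j}}{M/2 + m_{-}} + \frac{1}{2} \, \frac{M N^{\mu}_{+}(\mu, \tau^2) + \sum_{j \neq i;\ \psi_j > \mu} \delta_{\psi_j}}{M/2 + m_{+}}, \tag{8}$$

where $m_{-} = \sum_{j \neq i} I(\psi_j < \mu)$ and $m_{+} = \sum_{j \neq i} I(\psi_j > \mu)$, and we use the notation $N^{\mu}_{-}(a, b)$ and $N^{\mu}_{+}(a, b)$ to denote the restrictions (without renormalization) of the $N(a, b)$ distribution to $(-\infty, \mu)$ and $(\mu, \infty)$. The likelihood is

$$\mathcal{L}_{\{\boldsymbol{\psi}^{(-i)}, \mu, \tau\}}(D_i \mid \psi_i) = \mathcal{L}(D_i \mid \psi_i) = N(\psi_i, \sigma_i^2), \tag{9}$$

where the first equality in (9) follows because $\boldsymbol{\psi}^{(-i)}$, $\mu$, and $\tau$ affect $D_i$ only through their effect on $\psi_i$, and the second equality in (9) is just the model statement (3a).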

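To combine (8) and (9), we note that the densities of the subdistribution functions $N^{\mu}_{-}(\mu, \tau^2)$ and $N^{\mu}_{+}(\mu, \tau^2)$ are just the density of the $N(\mu, \tau^2)$ distribution multiplied by the indicators of the sets $(-\infty, \mu)$ and $(\mu, \infty)$. When we multiply these by the density of the $N(\psi_i, \sigma_i^2)$ distribution, we can complete the square, resulting in constants times the density of a new normal distribution. This gives

$$\mathcal{L}_{\mathbf{D}}\bigl(\psi_i \mid \boldsymbol{\psi}^{(-i)}, \mu, \tau\bigr) \propto C_{-} N^{\mu}_{-}(A, B^2) + C_{+} N^{\mu}_{+}(A, B^2) + \sum_{j \neq i,\ \psi_j < \mu} \frac{\frac{1}{\sqrt{2\pi}\,\sigma_i} \exp[-(D_i - \psi_j)^2/(2\sigma_i^2)]}{M/2 + m_{-}} \, \delta_{\psi_j} + \sum_{j \neq i,\ \psi_j > \mu} \frac{\frac{1}{\sqrt{2\pi}\,\sigma_i} \exp[-(D_i - \psi_j)^2/(2\sigma_i^2)]}{M/2 + m_{+}} \, \delta_{\psi_j}, \tag{10}$$

where

$$A = \frac{\mu \sigma_i^2 + D_i \tau^2}{\sigma_i^2 + \tau^2}, \qquad B^2 = \frac{\sigma_i^2 \tau^2}{\sigma_i^2 + \tau^2},$$

$$C_{-} = \frac{M/(M/2 + m_{-})}{\sqrt{2\pi(\sigma_i^2 + \tau^2)}} \exp\Bigl\{ -\frac{(D_i - \mu)^2}{2(\sigma_i^2 + \tau^2)} \Bigr\},$$

and

$$C_{+} = \frac{M/(M/2 + m_{+})}{\sqrt{2\pi(\sigma_i^2 + \tau^2)}} \exp\Bigl\{ -\frac{(D_i - \mu)^2}{2(\sigma_i^2 + \tau^2)} \Bigr\}.$$

The normalizing constant in (10) is

$$C_{-} \, \Phi\Bigl(\frac{\mu - A}{B}\Bigr) + C_{+} \Bigl[ 1 - \Phi\Bigl(\frac{\mu - A}{B}\Bigr) \Bigr] + \sum_{j \neq i,\ \psi_j < \mu} \frac{\frac{1}{\sqrt{2\pi}\,\sigma_i} \exp[-(D_i - \psi_j)^2/(2\sigma_i^2)]}{M/2 + m_{-}} + \sum_{j \neq i,\ \psi_j > \mu} \frac{\frac{1}{\sqrt{2\pi}\,\sigma_i} \exp[-(D_i - \psi_j)^2/(2\sigma_i^2)]}{M/2 + m_{+}},$$

where $\Phi$ is the standard normal cumulative distribution function, and this enables us to sample from $\mathcal{L}_{\mathbf{D}}(\psi_i \mid \boldsymbol{\psi}^{(-i)}, \mu, \tau)$.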
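A minimal sketch of one draw from (10) follows. It computes the unnormalized weights of the two truncated-normal components and of the point masses, selects a component, and, for a truncated normal, draws by inverting the cdf of $N(A, B^2)$ on the chosen side of $\mu$. The function name `update_psi_i`, its argument layout, and the numerical safeguards are assumptions of this illustration; values of $\psi_j$ exactly equal to $\mu$ are ignored, since they occur with probability 0.

```python
import random
from statistics import NormalDist

def update_psi_i(i, psi, D_i, sigma_i, mu, tau, M, rng):
    """Draw psi_i from its full conditional (10), given the other psi_j, mu, tau."""
    others = [p for j, p in enumerate(psi) if j != i]
    below = [p for p in others if p < mu]
    above = [p for p in others if p > mu]
    den_minus = M / 2 + len(below)          # M/2 + m_minus
    den_plus = M / 2 + len(above)           # M/2 + m_plus
    s2, t2 = sigma_i ** 2, tau ** 2
    A = (mu * s2 + D_i * t2) / (s2 + t2)
    B = (s2 * t2 / (s2 + t2)) ** 0.5
    post = NormalDist(A, B)
    p_left = post.cdf(mu)                   # Phi((mu - A) / B)
    marginal = NormalDist(mu, (s2 + t2) ** 0.5).pdf(D_i)
    lik = NormalDist(D_i, sigma_i)          # lik.pdf(p) is the N(p, sigma_i^2) density at D_i
    components = [
        (M / den_minus * marginal * p_left, ("left", None)),        # C_minus * Phi(.)
        (M / den_plus * marginal * (1 - p_left), ("right", None)),  # C_plus * (1 - Phi(.))
    ]
    components += [(lik.pdf(p) / den_minus, ("atom", p)) for p in below]
    components += [(lik.pdf(p) / den_plus, ("atom", p)) for p in above]
    weights = [w for w, _ in components]
    labels = [c for _, c in components]
    kind, value = rng.choices(labels, weights=weights)[0]
    if kind == "atom":
        return value                        # tie with an existing psi_j
    # Inverse-cdf draw from N(A, B^2) restricted to one side of mu
    u = rng.random()
    q = u * p_left if kind == "left" else p_left + u * (1 - p_left)
    q = min(max(q, 1e-12), 1 - 1e-12)       # keep inv_cdf argument inside (0, 1)
    return post.inv_cdf(q)

rng = random.Random(1)
psi = [-0.5, 0.1, 0.4, 1.2]
draws = [update_psi_i(0, psi, D_i=0.3, sigma_i=0.2, mu=0.0, tau=0.5, M=1.0, rng=rng)
         for _ in range(200)]
```

Repeated draws contain both exact copies of nearby $\psi_j$ values and fresh continuous values, which is how clusters form and dissolve over the course of the sampler.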

To generate $(\mu, \tau)$ from $\mathcal{L}_{\mathbf{D}}(\mu, \tau \mid \boldsymbol{\psi})$, we note that $\mathcal{L}_{\mathbf{D}}(\mu, \tau \mid \boldsymbol{\psi}) = \mathcal{L}(\mu, \tau \mid \boldsymbol{\psi})$, which is given by Proposition 1. Because of the factor $K(\boldsymbol{\psi}, \mu)$, (6) is not available in closed form. However, we are taking $\lambda$ to be given by (5d) and (5e), which is conjugate to the $N(\mu, \tau^2)$ family, and this simplifies the form of (6). It is possible to generate $(\mu, \tau)$ from (6) if we first generate $\mu$ from its marginal posterior distribution [which is proportional to a t distribution multiplied by the factor $K(\boldsymbol{\psi}, \mu)$] and then generate $\tau$ from its conditional posterior distribution given $\mu$ ($1/\tau^2$ is just a gamma).

In more detail, let $m^*$ be the number of distinct $\psi_i$'s and let $\bar\psi^* = (\sum^{\text{dist}} \psi_i)/m^*$, where the "dist" in the sum indicates that the sum is taken over distinct values only. Then $\mathcal{L}(\mu, \tau \mid \psi)$ has density proportional to the product

$$
g^*(\mu, \tau)\,K(\psi, \theta), \tag{11}
$$

where $g^*(\mu, \tau)$ has the form (5d)–(5e), with updated parameters $a'$, $b'$, $c'$, and $d'$ given by

$$
a' = a + m^*/2, \qquad
b' = b + \frac{1}{2}\sum^{\text{dist}} (\psi_i - \bar\psi^*)^2 + \frac{m^*(\bar\psi^* - c)^2}{2(1 + m^* d)},
$$

$$
c' = \frac{c + m^* d\,\bar\psi^*}{m^* d + 1}, \qquad \text{and} \qquad
d' = \frac{1}{m^* + d^{-1}}.
$$

This follows from the conjugacy (Berger 1985, p. 288). Integrating out $\tau$ in (11) [and noting that $K(\psi, \theta)$ does not depend on $\tau$], we see that the (marginal) conditional distribution of $\mu$ given $\psi$ has density proportional to

$$
t(2a', c', b'/a')(\mu)\,K(\psi, \theta). \tag{12}
$$

Here $t(d, l, s^2)$ denotes the density of the $t$ distribution with $d$ degrees of freedom, location $l$, and scale parameter $s$. Because $K(\psi, \theta)$ is a step function of $\mu$, it is possible to do exact random variable generation from this posterior density. [The real line is partitioned into the $m^* + 1$ disjoint intervals formed by the $m^*$ distinct $\psi_i$'s. Then the measures of these $m^* + 1$ intervals are calculated under (12) and renormalized to form a probability vector. One of these $m^* + 1$ intervals is chosen according to this probability vector, and finally a random variable is generated from the $t(2a', c', b'/a')$ distribution restricted to the interval and renormalized to be a probability measure.] The conditional distribution of $1/\tau^2$ given $\mu$ and $\psi$ is gamma$(a' + 1/2,\ b' + (\mu - c')^2/(2d'))$.
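The interval-based generation described in brackets above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, the form $K = 1/[\Gamma(M/2 + m_-)\Gamma(M/2 + m_+)]$ is an assumption inferred from the Appendix and should be checked against (7), and a standard normal stands in for the $t$ base distribution so that the sketch needs only the standard library. Any base distribution can be used by passing its CDF and inverse CDF.

```python
import math
import random
from statistics import NormalDist


def log_K(m_minus, m_plus, M):
    """log of the assumed step function 1 / [Gamma(M/2 + m_-) Gamma(M/2 + m_+)]."""
    return -(math.lgamma(M / 2 + m_minus) + math.lgamma(M / 2 + m_plus))


def sample_mu(cdf, inv_cdf, distinct, counts, M, rng):
    """Draw mu from a density proportional to base_density(mu) * K."""
    pairs = sorted(zip(distinct, counts))
    cuts = [value for value, _ in pairs]
    m = sum(count for _, count in pairs)
    # Base CDF at the ends of the m* + 1 intervals (-inf, cuts..., +inf).
    edges = [0.0] + [cdf(c) for c in cuts] + [1.0]
    log_w, below = [], 0
    for k in range(len(cuts) + 1):
        mass = edges[k + 1] - edges[k]
        log_mass = math.log(mass) if mass > 0 else -math.inf
        # K is constant on interval k: `below` psi's lie to the left of it.
        log_w.append(log_mass + log_K(below, m - below, M))
        if k < len(cuts):
            below += pairs[k][1]
    top = max(log_w)
    probs = [math.exp(lw - top) for lw in log_w]
    k = rng.choices(range(len(probs)), weights=probs)[0]
    # Inverse-CDF draw from the base distribution restricted to interval k.
    u = rng.uniform(edges[k], edges[k + 1])
    u = min(max(u, 1e-12), 1 - 1e-12)
    return inv_cdf(u)


base = NormalDist(0.0, 1.0)  # stand-in for the t distribution in (12)
rng = random.Random(1)
draws = [sample_mu(base.cdf, base.inv_cdf, [-1.0, 1.0], [1, 1], 0.1, rng)
         for _ in range(2000)]
share_inside = sum(-1.0 < d < 1.0 for d in draws) / len(draws)
```

With a small $M$, almost all draws fall between the two distinct values, because the step function heavily favors positions of $\mu$ that split the $\psi_i$'s evenly.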


The algorithm described earlier gives us a sequence $(\psi^{(g)}, \mu^{(g)}, \tau^{(g)})$, $g = 1, \ldots, G$, approximately distributed according to $\mathcal{L}_D(\psi, \mu, \tau)$. As mentioned earlier, various posterior distributions can be estimated from this sequence. For example, to estimate $\mathcal{L}_D(\psi_{m+1})$, we express this quantity as $\int \mathcal{L}_D(\psi_{m+1} \mid \psi, \mu, \tau)\, d\mathcal{L}_D(\psi, \mu, \tau)$, which we estimate by an average of $G$ distributions each of the form (8).

Shrinkage in the Posterior Distribution. If $F$ is chosen from a Dirichlet process prior and $\psi_1, \ldots, \psi_m$ are iid $\sim F$, then there will be ties among the $\psi_i$'s (i.e., they will form clusters). This tendency to form clusters is stronger when $M$ is smaller. This fact is well known and can be easily seen from Sethuraman's (1994) construction, for example. We now discuss the impact of this property on the posterior distribution of $\psi_1, \ldots, \psi_m$.
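The dependence of clustering on $M$ can be illustrated with a small simulation. The sketch below uses the Pólya urn representation of an ordinary Dirichlet process (Blackwell and MacQueen 1973) rather than Sethuraman's construction, and it ignores the median constraint of model (5); the function names are hypothetical. Under the urn scheme, the $(i+1)$st draw is a new value with probability $M/(M+i)$, so the expected number of distinct values among $m$ draws is $\sum_{i=0}^{m-1} M/(M+i)$.

```python
import random


def expected_clusters(M, m):
    """Expected number of distinct values among m draws (ordinary Dirichlet process)."""
    return sum(M / (M + i) for i in range(m))


def polya_urn_clusters(M, m, rng):
    """Simulate the number of distinct values among m Polya-urn draws with mass M."""
    sizes = []  # sizes of the clusters formed so far
    for i in range(m):
        if rng.random() < M / (M + i):
            sizes.append(1)  # a fresh value from the base distribution
        else:
            # Tie with an earlier value, chosen in proportion to cluster size.
            j = rng.choices(range(len(sizes)), weights=sizes)[0]
            sizes[j] += 1
    return len(sizes)


rng = random.Random(0)
m = 14
for M in (0.1, 1, 5, 20, 1000):
    sim = sum(polya_urn_clusters(M, m, rng) for _ in range(4000)) / 4000
    print(f"M={M:>6}: expected {expected_clusters(M, m):5.2f}, simulated {sim:5.2f}")
```

For 14 studies, the expected number of clusters rises from just over 1 when $M = .1$ to nearly 14 when $M = 1{,}000$, which is the sense in which large $M$ approaches the parametric model.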

In a standard parametric hierarchical model [i.e., (5) except with (5b) and (5c) replaced with the simpler $\psi_i \overset{\text{iid}}{\sim} N(\mu, \tau^2)$, $i = 1, \ldots, m$], the posterior distribution of $\psi_i$ involves shrinkage toward $D_i$ and toward a grand mean. If we consider (10), then we see that in the semiparametric model, the posterior distribution of $\psi_i$ is also shrunk toward those $\psi_j$'s that are close to $D_i$. When $M$ is small, the constants $C_-$ and $C_+$ are small, which means that this effect is stronger.

To conclude, the posterior distribution of $\psi_i$ is affected by the results of all studies, but is more heavily affected by studies with results similar to those of study $i$. We illustrate this effect in the example of Section 4.1.
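For reference, the shrinkage in the parametric model can be written out explicitly. With $D_i \mid \psi_i \sim N(\psi_i, \sigma_i^2)$ and $\psi_i \sim N(\mu, \tau^2)$, and with $\mu$ and $\tau$ held fixed, the standard normal-normal calculation gives the conditional posterior mean below. The weight $B_i$ is notation introduced here for illustration and does not appear in the paper.

$$
E(\psi_i \mid D_i, \mu, \tau) = (1 - B_i)\,D_i + B_i\,\mu,
\qquad
B_i = \frac{\sigma_i^2}{\sigma_i^2 + \tau^2}.
$$

Studies with large standard errors $\sigma_i$ are pulled more strongly toward $\mu$; the semiparametric model adds to this a pull toward nearby $\psi_j$'s.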

3.3 Connection Between Posterior Distributions Under Models (3) and (5)

It is natural to question the connection between the posterior distribution of $\mu$ under our model (5) and its posterior when the prior on $F$ is (3). One way to gain some insight into this question is as follows. Let $\nu^c(\psi, \mu, \tau)$ denote the distribution of $(\psi, \mu, \tau)$ under (5) for some specification of $(M, \{H_\theta\}, \lambda)$, and let $\nu^u(\psi, \mu, \tau)$ denote the distribution under (3) for the same specification of these hyperparameters. Let $\nu^c_D(\psi, \mu, \tau)$ and $\nu^u_D(\psi, \mu, \tau)$ denote the corresponding posterior distributions. Proposition 2 gives the relationship between these two posterior distributions.

Proposition 2. Assume that $H$ is absolutely continuous, with continuous density $h$, and that the median of $H$ is 0. Then the Radon–Nikodym derivative $[d\nu^c_D/d\nu^u_D]$ is given by

$$
\left[\frac{d\nu^c_D}{d\nu^u_D}\right](\psi, \mu, \tau) = A\,K(\psi, \theta),
$$

where $K$ is given in (7), and $A$ does not depend on $(\psi, \mu, \tau)$.

The proof is given in the Appendix. Here we remark on how this proposition can be used. In general, $A$ is difficult to compute, but this need not be a problem. Suppose that $(\psi^{(g)}, \mu^{(g)}, \tau^{(g)})$, $g = 1, \ldots, G$, is Markov chain output generated under model (3). Expectations with respect to model (5) can be estimated using a weighted average of the $(\psi^{(g)}, \mu^{(g)}, \tau^{(g)})$'s, where the vector $(\psi^{(g)}, \mu^{(g)}, \tau^{(g)})$ is given weight proportional to $K(\psi^{(g)}, \theta^{(g)})$ (Hastings 1970), and to do this we do not need to know $A$. Thus vectors $(\psi^{(g)}, \mu^{(g)}, \tau^{(g)})$ such that $\mu^{(g)}$ is far from the median of $\psi^{(g)}_1, \ldots, \psi^{(g)}_m$ are given lower weight than vectors for which $\mu^{(g)}$ is close to the median of $\psi^{(g)}_1, \ldots, \psi^{(g)}_m$. Burr et al. (2003) applied this reweighting scheme on the output of a simpler program that runs the Markov chain for model (3) to arrive at their estimates.
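A minimal sketch of this reweighting follows. It is illustrative only: the function names and the two-point "chain" are hypothetical, and the form $K = 1/[\Gamma(M/2 + m_-)\Gamma(M/2 + m_+)]$, with $m_-$ and $m_+$ the numbers of $\psi_i$'s below and above $\mu$, is an assumption inferred from the Appendix that should be checked against (7). Because the weights are normalized by their sum, the constant $A$ cancels.

```python
import math


def log_K(psi, mu, M):
    """log of the assumed weight 1 / [Gamma(M/2 + m_-) Gamma(M/2 + m_+)]."""
    m_minus = sum(1 for p in psi if p < mu)
    m_plus = sum(1 for p in psi if p > mu)
    return -(math.lgamma(M / 2 + m_minus) + math.lgamma(M / 2 + m_plus))


def reweighted_mean(draws, values, M):
    """Self-normalized weighted average of `values`, weights proportional to K."""
    log_w = [log_K(psi, mu, M) for psi, mu in draws]
    top = max(log_w)  # subtract the maximum before exponentiating, for stability
    w = [math.exp(lw - top) for lw in log_w]
    return sum(wi * v for wi, v in zip(w, values)) / sum(w)


# Hypothetical output under model (3): (psi vector, mu) pairs.
draws = [([-1.0, 0.5, 1.0, 2.0], 0.7),   # mu at the median of the psi's
         ([-1.0, 0.5, 1.0, 2.0], 5.0)]   # mu far above every psi
mu_values = [mu for _, mu in draws]
estimate = reweighted_mean(draws, mu_values, M=1.0)
```

The draw whose $\mu$ sits at the median of the $\psi_i$'s dominates, so the reweighted estimate of $E(\mu)$ lies much closer to 0.7 than the unweighted average of 2.85.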

A drawback of the reweighting approach is that when the two

distributions differ greatly, a few of the Markov chain points

will take up most of the weight, with the result that estimates are

unstable unless the chain is run for an extremely large number

of cycles.
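One common way to quantify this instability, not used in the paper and offered here only as a hedged illustration, is the Kish effective sample size $(\sum_g w_g)^2 / \sum_g w_g^2$ of the weights. It equals the number of draws when all weights are equal and collapses toward 1 when a single draw carries most of the weight. The function name is hypothetical.

```python
def effective_sample_size(weights):
    """Kish effective sample size of a set of nonnegative importance weights."""
    total = sum(weights)
    return total * total / sum(w * w for w in weights)


even = effective_sample_size([1.0] * 1000)              # all draws count equally
skewed = effective_sample_size([1000.0] + [1.0] * 999)  # one draw dominates
```

A small effective sample size relative to the chain length signals that the reweighted estimates rest on only a few points of the chain.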

4. ILLUSTRATIONS

Here we illustrate the use of our models on two meta-analyses. In the first example, the issue of main interest is the basic question of whether or not there is evidence of a treatment effect, and the focus is on the latent parameter $\mu$. In the second example, the principal interest is on the latent parameters, the $\psi_i$'s. In each case we ran our Gibbs sampler for 100,000 cycles and discarded the first 5,000 cycles.

4.1 Decontamination of the Digestive Tract

Infections acquired in intensive care units are an important cause of mortality. One strategy for dealing with this problem involves selective decontamination of the digestive tract. This is designed to prevent infection by preventing carriage of potentially pathogenic microorganisms from the oropharynx, stomach, and gut. A meta-analysis of 22 randomized trials to investigate the benefits of selective decontamination of the digestive tract was carried out in 1993 by an international collaborative group, the Selective Decontamination of the Digestive Tract Trialists' Collaborative Group (DTTCG). In each trial, patients in an intensive care unit were randomized to either a treatment group or a control group. The treatments varied, with some including a topical (nonabsorbable) antibiotic and others including in addition a systemic antibiotic. The antibiotics varied across trials. In each trial, the proportion of individuals who acquired an infection was recorded for the treatment and control groups, and an odds ratio was reported. The study used a fixed-effects model, in which the 22 trials were assumed to measure the same quantity. The 22 odds ratios were combined via the Mantel–Haenszel–Peto method. The results demonstrated overwhelming evidence that selective decontamination is effective in reducing the risk of infection; a 95% confidence interval for the common odds ratio was found to be (.31, .43). As expected, owing to the large variation in treatment across trials, a test of heterogeneity was significant (p value < .001); however, a frequentist random-effects analysis (DerSimonian and Laird 1986) gave similar results.

This dataset was reconsidered by Smith et al. (1995), who used a Bayesian hierarchical model in which for each trial there is a true log odds ratio $\psi_i$, viewed as a latent variable, and for which the observed log odds ratio is an estimate. The true log odds ratios are assumed to come from a normal distribution with mean $\mu$ and variance $\tau^2$, and a prior is put on $(\mu, \tau)$. Smith et al. (1995) showed that the posterior probability that $\mu$ is negative is extremely close to 1, confirming the results of DTTCG (1993), although with a different model.

An interesting later section of DTTCG (1993) considered mortality as the outcome variable. Each of the 22 trials also reported the proportion of individuals who died for the treatment


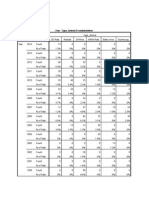

Table 1. Odds Ratios for 14 Studies

| Study no. | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Treat infected | 14 | 22 | 27 | 11 | 4 | 51 | 33 | 24 | 14 | 14 | 15 | 34 | 45 | 47 |
| Treat total | 45 | 55 | 74 | 75 | 28 | 131 | 91 | 161 | 49 | 48 | 51 | 162 | 220 | 220 |
| Cont infected | 23 | 33 | 40 | 16 | 12 | 65 | 40 | 32 | 15 | 14 | 14 | 31 | 40 | 40 |
| Cont total | 46 | 57 | 77 | 75 | 60 | 140 | 92 | 170 | 47 | 49 | 50 | 160 | 220 | 220 |
| Obs OR | .46 | .49 | .54 | .64 | .71 | .74 | .74 | .76 | .86 | 1.03 | 1.07 | 1.10 | 1.16 | 1.22 |
| Model (5) | | | | | | | | | | | | | | |
| M = .1 | .67 | .66 | .66 | .76 | .82 | .78 | .80 | .81 | .87 | .93 | .95 | 1.02 | 1.05 | 1.06 |
| M = 1 | .70 | .69 | .69 | .78 | .83 | .79 | .80 | .81 | .87 | .92 | .93 | .99 | 1.02 | 1.04 |
| M = 5 | .72 | .71 | .71 | .78 | .83 | .79 | .80 | .81 | .85 | .90 | .91 | .97 | 1.00 | 1.03 |
| M = 20 | .72 | .71 | .71 | .78 | .83 | .79 | .80 | .81 | .85 | .90 | .91 | .96 | 1.00 | 1.03 |
| M = 1,000 | .72 | .71 | .71 | .78 | .83 | .79 | .80 | .81 | .85 | .90 | .91 | .96 | 1.01 | 1.04 |
| Model (3) | | | | | | | | | | | | | | |
| M = 1 | .79 | .78 | .78 | .82 | .84 | .82 | .83 | .83 | .85 | .86 | .87 | .89 | .91 | .93 |
| M = 5 | .74 | .73 | .73 | .80 | .83 | .80 | .81 | .82 | .85 | .89 | .89 | .94 | .98 | 1.00 |
| M = 20 | .72 | .72 | .72 | .79 | .83 | .79 | .80 | .81 | .85 | .89 | .90 | .96 | 1.00 | 1.03 |

NOTE: Rows 2 and 3 give the number infected and the total number in the treatment group, and rows 4 and 5 give the same information for the control group. The row "Obs OR" gives the odds ratios observed in the 14 studies. The next five rows give the means of the posteriors under the semiparametric model (5) for five values of M, and the bottom three rows give posterior means for model (3).
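The "Obs OR" row can be recomputed from the counts in rows 2–5. The sketch below assumes that 0.5 is added to each cell of the 2 × 2 table before the odds ratio is formed; this convention is not stated in the text here, but it reproduces the tabulated values to two decimals, whereas the uncorrected cross-product ratio does not (for study 5 it gives about .67 rather than .71). Variable and function names are hypothetical.

```python
treat_events = [14, 22, 27, 11, 4, 51, 33, 24, 14, 14, 15, 34, 45, 47]
treat_total = [45, 55, 74, 75, 28, 131, 91, 161, 49, 48, 51, 162, 220, 220]
cont_events = [23, 33, 40, 16, 12, 65, 40, 32, 15, 14, 14, 31, 40, 40]
cont_total = [46, 57, 77, 75, 60, 140, 92, 170, 47, 49, 50, 160, 220, 220]
reported = [.46, .49, .54, .64, .71, .74, .74, .76, .86,
            1.03, 1.07, 1.10, 1.16, 1.22]


def odds_ratio(e1, n1, e0, n0, add=0.5):
    """Odds ratio (treatment vs control) with `add` added to every cell (assumed)."""
    a, b = e1 + add, n1 - e1 + add   # treatment: events, non-events
    c, d = e0 + add, n0 - e0 + add   # control: events, non-events
    return (a / b) / (c / d)


computed = [odds_ratio(*row) for row in
            zip(treat_events, treat_total, cont_events, cont_total)]
for study, (calc, rep) in enumerate(zip(computed, reported), start=1):
    print(f"study {study:2d}: computed {calc:.3f}, reported {rep:.2f}")
```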

and control groups, and again an odds ratio was reported. Using a fixed-effects model, DTTCG (1993) found that the results were much less clear cut. The common odds ratio was estimated as .90, with a 95% confidence interval of (.79, 1.04). In 14 of the studies, the treatment included both topical and systemic antibiotics, and medical considerations suggest that the effect of the treatment would be stronger in these studies. Indeed, for this subgroup, the common odds ratio was estimated as .80, with a 95% confidence interval of (.67, .97), which does not include 1. (Consideration of this subgroup had been planned before the analysis of the data.) The data for these 14 studies appear in rows 2–5 of Table 1, and the odds ratios appear in row 6 of the table. The studies are arranged in order of increasing odds ratio, rather than in the original order given in DTTCG (1993), to facilitate inspection of the table.

Smith et al. (1995) noted that for infection as the outcome variable, the data do not seem to follow a normal distribution, and for this reason also used a $t$ distribution. It is difficult to test for normality for this kind of data. (The studies had different sizes, and there are only 14 studies.) Nevertheless, the normal probability plot given in Figure 1 suggests some deviation from normality for our data as well. In meta-analysis studies, it is often the case that there is some kind of grouping in the data: studies that have similar designs may yield similar results. Some evidence of this appears in Figure 1.

We analyze these data using our semiparametric Bayesian model. We fit model (5) with a = b = .1, c = 0, and d = 1,000 and several values of M, including .1 and 1,000, to assess the effect of this hyperparameter on the conclusions. Large values of M essentially correspond to a parametric Bayesian model based on a normal distribution, and this asymptopia is for practical purposes reached for M as little as 20. The value M = .1 gives an extreme case, and would not ordinarily be used (see Sethuraman and Tiwari 1982); we include it here only to give some insight into the behavior of the model.

Figure 2 gives the posterior distribution of $\mu$ for M = 1,000 and 1. For M = 1,000, the posterior probability that $\mu$ is positive is .04, but for M = 1, the posterior probability is the nonsignificant figure of .16, which suggests that although the treatment reduces the rate of infection, there is not enough evidence to conclude that there is a corresponding reduction in

Figure 1. Normal Probability Plot for the Mortality Data for Studies Including Both Topical and Systemic Antibiotics. The areas of the circles are proportional to the inverse of the standard error.

Figure 2. Posterior Distribution of $\mu$ for M = 1,000 and M = 1.


mortality, even if the treatment involves both topical and systemic antibiotics.

The middle group of rows in Table 1 gives the means of the posterior distributions for five values of M. The average of the posterior means (over the 14 studies) is very close to .84 for each of the five values of M. From the table we see that for M = 1,000, the posterior means of the odds ratios are all shrunk from the value "Obs OR" toward .84. But for small values of M, the posterior means are also shrunk toward the average for the observations in their vicinity.

To gain some understanding of the difference in behavior between models (5) and (3), we ran a Gibbs sampler appropriate for model (3), from which we created the last three rows of Table 1. From the table, we make the following observations. First, the two models give virtually identical results for large M, as one would expect. Second, the shrinkage in model (3) seems to have a different character, with attraction toward an overall mean being much stronger for small values of M. To gain some insight into how the two models handle outliers, we performed the following experiment. We took the study with the largest observed odds ratio and changed that from 1.2 to 2.0 (keeping that observation's standard error and everything else the same), then reran the algorithms. For large M, for either model, $E(\psi_{14} \mid D)$ moved from 1.03 to 1.50. For M = 1, for model (5), $E(\psi_{14} \mid D)$ moved from 1.04 to 1.44, whereas for model (3), $E(\psi_{14} \mid D)$ moved from .93 to 1.52, a more significant change.

4.2 Clopidogrel versus Aspirin Trial

When someone suffers an atherosclerotic vascular event (such as a stroke or a heart attack), it is standard to administer an antiplatelet drug to reduce the risk of another event. The oldest such drug is aspirin, but there are many other drugs on the market, including clopidogrel. An important study (CAPRIE Steering Committee 1996) compared this drug with aspirin in a large-scale trial. In this study, 19,185 individuals who had suffered a recent atherosclerotic vascular event were randomized to receive either clopidogrel or aspirin. Patients were recruited over a 3-year period, and mean follow-up was 1.9 years. There were 939 events over 17,636 person-years for the clopidogrel group, compared with 1,021 events over 17,519 person-years for the aspirin group, giving a risk ratio for clopidogrel versus aspirin of .913 [with 95% confidence interval (.835, .997)], and clopidogrel was judged superior to aspirin, with a two-sided p value of .043. As a consequence of this landmark study, clopidogrel was favored over the much cheaper aspirin for patients who have had an atherosclerotic vascular event.
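The reported risk ratio and interval can be approximately recovered from the event counts and person-years quoted above. The sketch below is a crude calculation under stated assumptions: it treats the counts as Poisson, uses the usual large-sample standard error $\sqrt{1/e_1 + 1/e_2}$ for the log rate ratio, and takes 1.96 as the normal quantile. The published figures may come from a different analysis, so agreement to roughly two decimals is all that should be expected. The function name is hypothetical.

```python
import math


def rate_ratio_ci(events_a, years_a, events_b, years_b, z=1.96):
    """Rate ratio (a vs b) with a Wald interval computed on the log scale."""
    ratio = (events_a / years_a) / (events_b / years_b)
    se_log = math.sqrt(1 / events_a + 1 / events_b)
    lower = math.exp(math.log(ratio) - z * se_log)
    upper = math.exp(math.log(ratio) + z * se_log)
    return ratio, lower, upper


# Clopidogrel versus aspirin, using the counts quoted in the text.
ratio, lower, upper = rate_ratio_ci(939, 17636, 1021, 17519)
print(f"rate ratio {ratio:.3f}, 95% interval ({lower:.3f}, {upper:.3f})")
```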

A short section of the CAPRIE article (p. 1334) briefly discusses the outcomes for three subgroups of patients: those participating in the study because they had a stroke, a heart attack [myocardial infarction (MI)], or peripheral arterial disease (PAD). The results for the three groups differed; the risk ratios for clopidogrel versus aspirin were .927, 1.037, and .762 for the stroke, MI, and PAD groups. A test of homogeneity of the three risk ratios gives a p value of .042, providing mild evidence against the hypothesis that the three risk ratios are equal.

We analyze the data using the methods developed in this article. On the surface, this dataset does not fit our description in Section 1 of this article. The study was indeed designed as a multicenter trial involving 384 centers; however, the protocol was so well defined that it is reasonable to ignore the center effect. We focus instead on the patient subgroups. Of course, one may object to using a random-effects model when there are only three groups involved. However, there is nothing in our Bayesian formulation that requires us to have a large number of groups involved; we simply should not expect to be able to obtain accurate estimates of the overall parameter $\mu$ and especially of the mixing distribution $F$. We carry out this analysis for two reasons. First, an analysis by subgroup is of medical interest, in that for the MI subgroup, aspirin seems to outperform clopidogrel, or at the very least, the evidence in favor of clopidogrel is weaker. Second, there are studies currently planned comparing the two drugs for patients with several sets of gene profiles. Thus the kind of analysis we do here will apply directly to those studies, and the shrinkage discussed earlier may give useful information. Before proceeding, we remark that in this situation there is no particular reason for preferring model (5) over model (3) (the emphasis is on the $\psi_i$'s), and in any case the two models turn out to give virtually identical conclusions.

Let $\psi_{\text{stroke}}$, $\psi_{\text{MI}}$, and $\psi_{\text{PAD}}$ be the logs of the true risk ratios for the three groups. Their corresponding estimates are $(D_{\text{stroke}}, D_{\text{MI}}, D_{\text{PAD}}) = (-.076, .036, -.272)$. We work on the log scale because the normal approximation in (5a) is more accurate on this scale. The estimated standard errors of the $D$'s are .067, .083, and .091. (These are obtained from the number of events and the number of patient-years given on p. 1334 of the CAPRIE article, using standard formulas.) We fit model (5) with a, b, c, and d as in Section 4.1. The posterior distributions of the $\psi$'s, on the original scale, are shown in Figure 3 for M = 1 and 1,000, with the latter essentially corresponding to a parametric Bayesian model. As expected, there is shrinkage toward an overall value of .91. The shrinkage is stronger for smaller values of M.
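The three estimates and standard errors above are enough to check both the overall value of about .91 and the homogeneity p value of .042 quoted earlier. The sketch below assumes the test is Cochran's Q with inverse-variance weights on the log scale, which the text does not state; with three groups Q is referred to a chi-square distribution with 2 degrees of freedom, whose upper tail probability is exactly $\exp(-Q/2)$. Variable names are hypothetical.

```python
import math

# Log risk ratios and standard errors quoted in the text.
D = {"stroke": -0.076, "MI": 0.036, "PAD": -0.272}
se = {"stroke": 0.067, "MI": 0.083, "PAD": 0.091}

# Inverse-variance weights and the fixed-effect pooled log risk ratio.
w = {k: 1 / se[k] ** 2 for k in D}
pooled = sum(w[k] * D[k] for k in D) / sum(w.values())

# Cochran's Q statistic; for 2 degrees of freedom the p value is exp(-Q/2).
Q = sum(w[k] * (D[k] - pooled) ** 2 for k in D)
p_value = math.exp(-Q / 2)
pooled_rr = math.exp(pooled)

print(f"pooled risk ratio {pooled_rr:.3f}, Q = {Q:.2f}, p = {p_value:.3f}")
```

Under these assumptions the pooled risk ratio and the p value agree with the figures in the text to the precision reported there.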

Our conclusions are as follows. The superiority of clopidogrel over aspirin for PAD is unquestionable, no matter what analysis is done. For stroke, the situation is less clear; the posterior probability that clopidogrel is better is about .85, for a wide range of values of M. For MI, in the Bayesian model the posterior probability that clopidogrel is superior to aspirin is .38 for M = 1,000. Even after the stronger shrinkage occurring for M = 1, this probability is only .65, so there is insufficient evidence for preferring clopidogrel. The CAPRIE report states that "the administration of clopidogrel to patients with atherosclerotic vascular disease is more effective than aspirin . . . ." Our analysis suggests that this recommendation does not have an adequate basis for the MI subgroup.

Implementation of the Gibbs sampling algorithm of Section 3.2 is done through easy-to-use S-PLUS/R functions, available from us on request. All of the simulations can be done in S-PLUS/R, although a considerable gain in speed can be obtained by calling dynamically loaded C subroutines.

APPENDIX: PROOF OF PROPOSITION 2

A very brief outline of the proof is as follows. To calculate the Radon–Nikodym derivative $[d\nu^c_D/d\nu^u_D]$ at the point $(\psi^{(0)}, \mu^{(0)}, \tau^{(0)})$, we find the ratio of the probabilities, under $\nu^c_D$ and $\nu^u_D$, of the $(m+2)$-dimensional cubes centered at $(\psi^{(0)}, \mu^{(0)}, \tau^{(0)})$ and of width $\epsilon$, let $\epsilon$ tend to 0, and give a justification for why this yields the Radon–Nikodym derivative.


Figure 3. Posterior Distributions of Risk Ratios for the Three Subgroups (a) Stroke, (b) MI, and (c) PAD for Model (5) Using M = 1 and M = 1,000. In each plot, R-est is the estimate reported in the CAPRIE article, B-est is the mean of the posterior distribution, R-int is the 95% confidence interval reported in the article, B-int is the central 95% probability interval for the posterior distribution, and P(RR < 1) is the posterior probability that the risk ratio is less than 1. All posteriors are calculated for the case where M = 1, although the corresponding quantities for M = 1,000 are virtually identical.

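We now proceed with the calculation, which is not entirely trivial. Let $\theta^{(0)} = (\mu^{(0)}, \tau^{(0)})$ and $\psi^{(0)} = (\psi^{(0)}_1, \ldots, \psi^{(0)}_m) \in \mathbb{R}^m$ be fixed. Let $\psi^{(0)}_{(1)} < \cdots < \psi^{(0)}_{(r)}$ be the distinct values of $\psi^{(0)}_1, \ldots, \psi^{(0)}_m$, and let $m_1, \ldots, m_r$ be their multiplicities. (We write $\psi_{(j)}$ instead of $\psi^{(0)}_{(j)}$ and $\mu$ instead of $\mu^{(0)}$ to lighten the notation, whenever this will not cause confusion.) Let

$$
m_- = \sum_{i=1}^{m} I(\psi_i < \mu), \qquad m_+ = \sum_{i=1}^{m} I(\psi_i > \mu),
$$

and

$$
r_- = \sum_{j=1}^{r} I\bigl(\psi_{(j)} < \mu\bigr), \qquad r_+ = \sum_{j=1}^{r} I\bigl(\psi_{(j)} > \mu\bigr).
$$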

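For small $\epsilon > 0$, let $C^{\epsilon}_{\psi^{(0)}_i} = (\psi^{(0)}_i - \epsilon/2,\ \psi^{(0)}_i + \epsilon/2)$, and define $C^{\epsilon}_{\psi^{(0)}}$ to be the cube $C^{\epsilon}_{\psi^{(0)}_1} \times \cdots \times C^{\epsilon}_{\psi^{(0)}_m}$. Similarly define $B^{\epsilon}_{\theta^{(0)}}$ in $\theta$ space. To calculate the likelihood ratio, we first consider the probability of the set $\{\theta \in B^{\epsilon}_{\theta^{(0)}},\ \psi \in C^{\epsilon}_{\psi^{(0)}}\}$ under the two measures $\nu^c$ and $\nu^u$ (and not $\nu^c_D$ and $\nu^u_D$). We find it convenient to associate probability measures with their cumulative distribution functions and to use the same symbol to refer to both. Denoting the set of all probability measures on the real line by $\mathcal{P}$, and writing $\mathcal{D}^{\mu}_{MH_\theta}$ and $\mathcal{D}_{MH_\theta}$ for the conditional and ordinary Dirichlet process measures, we have

$$
\frac{\nu^c\{\theta \in B^{\epsilon}_{\theta^{(0)}},\ \psi \in C^{\epsilon}_{\psi^{(0)}}\}}{\nu^u\{\theta \in B^{\epsilon}_{\theta^{(0)}},\ \psi \in C^{\epsilon}_{\psi^{(0)}}\}}
= \frac{\nu^c\{\theta \in B^{\epsilon}_{\theta^{(0)}},\ F \in \mathcal{P},\ \psi \in C^{\epsilon}_{\psi^{(0)}}\}}{\nu^u\{\theta \in B^{\epsilon}_{\theta^{(0)}},\ F \in \mathcal{P},\ \psi \in C^{\epsilon}_{\psi^{(0)}}\}}
= \frac{\int_{B^{\epsilon}_{\theta^{(0)}}} \int_{\mathcal{P}} \prod_{i=1}^{m} F\{C^{\epsilon}_{\psi^{(0)}_i}\}\, \mathcal{D}^{\mu}_{MH_\theta}(dF)\, \lambda(d\theta)}{\int_{B^{\epsilon}_{\theta^{(0)}}} \int_{\mathcal{P}} \prod_{i=1}^{m} F\{C^{\epsilon}_{\psi^{(0)}_i}\}\, \mathcal{D}_{MH_\theta}(dF)\, \lambda(d\theta)}. \tag{A.1}
$$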

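Let

$$
g^{\epsilon}_{-,\psi^{(0)}}(\theta) = \int_{\mathcal{P}} \prod_{\substack{1 \le i \le m \\ \psi_i < \mu}} F\bigl\{C^{\epsilon}_{\psi^{(0)}_i}\bigr\}\, \mathcal{D}^{\mu}_{MH_\theta}(dF),
\qquad
g^{\epsilon}_{+,\psi^{(0)}}(\theta) = \int_{\mathcal{P}} \prod_{\substack{1 \le i \le m \\ \psi_i > \mu}} F\bigl\{C^{\epsilon}_{\psi^{(0)}_i}\bigr\}\, \mathcal{D}^{\mu}_{MH_\theta}(dF), \tag{A.2}
$$

and

$$
g^{\epsilon}_{\psi^{(0)}}(\theta) = \int_{\mathcal{P}} \prod_{1 \le i \le m} F\bigl\{C^{\epsilon}_{\psi^{(0)}_i}\bigr\}\, \mathcal{D}_{MH_\theta}(dF). \tag{A.3}
$$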

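Take $\epsilon$ small enough so that the sets $(\psi^{(0)}_{(j)} - \epsilon/2,\ \psi^{(0)}_{(j)} + \epsilon/2)$, $j = 1, \ldots, r$, are disjoint. Using the definition of $F$ given in (4), including the independence of $F_-$ and $F_+$, we rewrite the inner integral in the numerator of (A.1) and obtain

$$
\frac{\nu^c\{\theta \in B^{\epsilon}_{\theta^{(0)}},\ \psi \in C^{\epsilon}_{\psi^{(0)}}\}}{\nu^u\{\theta \in B^{\epsilon}_{\theta^{(0)}},\ \psi \in C^{\epsilon}_{\psi^{(0)}}\}}
= \frac{\int_{B^{\epsilon}_{\theta^{(0)}}} g^{\epsilon}_{-,\psi^{(0)}}(\theta)\, g^{\epsilon}_{+,\psi^{(0)}}(\theta)\, \lambda(d\theta)}{\int_{B^{\epsilon}_{\theta^{(0)}}} g^{\epsilon}_{\psi^{(0)}}(\theta)\, \lambda(d\theta)}
$$

$$
= \int_{B^{\epsilon}_{\theta^{(0)}}} \left[\frac{g^{\epsilon}_{-,\psi^{(0)}}(\theta)}{\epsilon^{r_-} \prod_{j=1}^{r_-} (m_j - 1)!}\right] \left[\frac{g^{\epsilon}_{+,\psi^{(0)}}(\theta)}{\epsilon^{r_+} \prod_{j=r_-+1}^{r} (m_j - 1)!}\right] \lambda(d\theta)
\times \left\{\int_{B^{\epsilon}_{\theta^{(0)}}} \left[\frac{g^{\epsilon}_{\psi^{(0)}}(\theta)}{\epsilon^{r} \prod_{j=1}^{r} (m_j - 1)!}\right] \lambda(d\theta)\right\}^{-1}. \tag{A.4}
$$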

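We may rewrite $g^{\epsilon}_{-,\psi^{(0)}}(\theta)$ and $g^{\epsilon}_{+,\psi^{(0)}}(\theta)$ in (A.2) as

$$
g^{\epsilon}_{-,\psi^{(0)}}(\theta) = \int_{\mathcal{P}} \prod_{\substack{1 \le j \le r_- \\ \psi_{(j)} < \mu}} \Bigl[F\bigl\{C^{\epsilon}_{\psi^{(0)}_{(j)}}\bigr\}\Bigr]^{m_j}\, \mathcal{D}^{\mu}_{MH_\theta}(dF) \tag{A.5a}
$$

and

$$
g^{\epsilon}_{+,\psi^{(0)}}(\theta) = \int_{\mathcal{P}} \prod_{\substack{r_- + 1 \le j \le r \\ \psi_{(j)} > \mu}} \Bigl[F\bigl\{C^{\epsilon}_{\psi^{(0)}_{(j)}}\bigr\}\Bigr]^{m_j}\, \mathcal{D}^{\mu}_{MH_\theta}(dF), \tag{A.5b}
$$

and rewrite $g^{\epsilon}_{\psi^{(0)}}(\theta)$ in (A.3) as

$$
g^{\epsilon}_{\psi^{(0)}}(\theta) = \int_{\mathcal{P}} \prod_{1 \le j \le r} \Bigl[F\bigl\{C^{\epsilon}_{\psi^{(0)}_{(j)}}\bigr\}\Bigr]^{m_j}\, \mathcal{D}_{MH_\theta}(dF). \tag{A.6}
$$

[Note that the conditions $\psi_{(j)} < \mu$ and $\psi_{(j)} > \mu$ in the products in (A.5a) and (A.5b) are redundant, because the $\psi_{(j)}$'s are ordered.]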

Let $A_j(\epsilon) = MH_\theta\{(\psi_{(j)} - \epsilon/2,\ \psi_{(j)} + \epsilon/2)\}$, $j = 1, \ldots, r_-$, and also define $A_{r_-+1}(\epsilon) = (M/2) - \sum_{j=1}^{r_-} A_j(\epsilon)$. Calculation of (A.5a) is routine, because it involves only the finite-dimensional Dirichlet distribution. The integral in (A.5a) is $E(U_1^{m_1} \cdots U_{r_-}^{m_{r_-}})$, where $(U_1, \ldots, U_{r_-}, U_{r_-+1}) \sim \text{Dirichlet}(A_1(\epsilon), \ldots, A_{r_-+1}(\epsilon))$, and we can calculate this expectation explicitly. We obtain

$$
g^{\epsilon}_{-,\psi^{(0)}}(\theta) = \left(\frac{1}{2}\right)^{m_-}
\frac{\Gamma\!\left(\frac{M}{2}\right)}{\Bigl(\prod_{j=1}^{r_-} \Gamma(A_j(\epsilon))\Bigr)\, \Gamma(A_{r_-+1}(\epsilon))}
\cdot
\frac{\Bigl(\prod_{j=1}^{r_-} \Gamma(A_j(\epsilon) + m_j)\Bigr)\, \Gamma(A_{r_-+1}(\epsilon))}{\Gamma\!\left(\frac{M}{2} + m_-\right)}.
$$
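The explicit expectation used here is the standard moment formula for the Dirichlet distribution, stated below for reference in generic notation ($\alpha_j$, $n_j$, and $k$ are symbols introduced only for this remark). If $(U_1, \ldots, U_k) \sim \text{Dirichlet}(\alpha_1, \ldots, \alpha_k)$ with $\alpha_0 = \sum_j \alpha_j$, and $n_1, \ldots, n_k$ are nonnegative integers with $n = \sum_j n_j$, then

$$
E\Bigl(\prod_{j=1}^{k} U_j^{n_j}\Bigr) = \frac{\Gamma(\alpha_0)}{\Gamma(\alpha_0 + n)} \prod_{j=1}^{k} \frac{\Gamma(\alpha_j + n_j)}{\Gamma(\alpha_j)}.
$$

Taking $k = r_- + 1$, $\alpha_j = A_j(\epsilon)$, $n_j = m_j$ for $j \le r_-$ and $n_{r_-+1} = 0$ gives $\alpha_0 = M/2$ and $n = m_-$, which matches the two Gamma-function ratios in the expression for $g^{\epsilon}_{-,\psi^{(0)}}(\theta)$.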

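Let

$$
f_{-,\psi^{(0)}}(\theta) = \left(\frac{1}{2}\right)^{m_-} \Bigl(\prod_{j=1}^{r_-} h_\theta\bigl(\psi_{(j)}\bigr)\Bigr) \frac{M^{r_-}\, \Gamma\!\left(\frac{M}{2}\right)}{\Gamma\!\left(\frac{M}{2} + m_-\right)},
$$

$$
f_{+,\psi^{(0)}}(\theta) = \left(\frac{1}{2}\right)^{m_+} \Bigl(\prod_{j=r_-+1}^{r} h_\theta\bigl(\psi_{(j)}\bigr)\Bigr) \frac{M^{r_+}\, \Gamma\!\left(\frac{M}{2}\right)}{\Gamma\!\left(\frac{M}{2} + m_+\right)},
$$

and

$$
f_{\psi^{(0)}}(\theta) = \Bigl(\prod_{j=1}^{r} h_\theta\bigl(\psi_{(j)}\bigr)\Bigr) \frac{M^{r}\, \Gamma(M)}{\Gamma(M + m)}.
$$

Here $h_\theta$ is the density of $H_\theta$. Using the recursion $\Gamma(x + 1) = x\Gamma(x)$ and the definition of the derivative, we see that

$$
\frac{g^{\epsilon}_{-,\psi^{(0)}}(\theta)}{\epsilon^{r_-} \prod_{j=1}^{r_-} (m_j - 1)!} \to f_{-,\psi^{(0)}}(\theta) \quad \text{for each } \theta.
$$

Similarly,

$$
\frac{g^{\epsilon}_{+,\psi^{(0)}}(\theta)}{\epsilon^{r_+} \prod_{j=r_-+1}^{r} (m_j - 1)!} \to f_{+,\psi^{(0)}}(\theta)
\quad \text{and} \quad
\frac{g^{\epsilon}_{\psi^{(0)}}(\theta)}{\epsilon^{r} \prod_{j=1}^{r} (m_j - 1)!} \to f_{\psi^{(0)}}(\theta) \quad \text{for each } \theta.
$$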
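The step that uses the Gamma recursion can be spelled out as follows; this is a sketch of the standard argument, filling in a detail the proof leaves implicit. For a positive integer $m_j$, repeated use of $\Gamma(x + 1) = x\Gamma(x)$ gives

$$
\frac{\Gamma(A_j(\epsilon) + m_j)}{\Gamma(A_j(\epsilon))} = A_j(\epsilon)\,\bigl(A_j(\epsilon) + 1\bigr) \cdots \bigl(A_j(\epsilon) + m_j - 1\bigr),
$$

and because $A_j(\epsilon) \to 0$ as $\epsilon \to 0$, the factors after the first tend to $1 \cdot 2 \cdots (m_j - 1) = (m_j - 1)!$. Since $A_j(\epsilon)$ is $M$ times the $H_\theta$-probability of an interval of width $\epsilon$ centered at $\psi_{(j)}$, continuity of the density gives $A_j(\epsilon)/\epsilon \to M\,h_\theta(\psi_{(j)})$. Hence

$$
\frac{1}{\epsilon\,(m_j - 1)!} \cdot \frac{\Gamma(A_j(\epsilon) + m_j)}{\Gamma(A_j(\epsilon))} \to M\,h_\theta\bigl(\psi_{(j)}\bigr),
$$

and taking the product over the distinct values yields the factors $M^{r_-} \prod_j h_\theta(\psi_{(j)})$, and their analogs, in the limits.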

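Furthermore, because $h$ is continuous, the convergence is uniform in small neighborhoods of $\theta^{(0)}$. Therefore, it is clear that (A.4) converges to

$$
\left(\frac{1}{2}\right)^{m} \frac{\Gamma^2\!\left(\frac{M}{2}\right) \Gamma(M + m)}{\Gamma(M)\, \Gamma\!\left(\frac{M}{2} + m_-\right) \Gamma\!\left(\frac{M}{2} + m_+\right)}
= \left[\left(\frac{1}{2}\right)^{m} \frac{\Gamma^2\!\left(\frac{M}{2}\right) \Gamma(M + m)}{\Gamma(M)}\right] K\bigl(\psi^{(0)}, \theta^{(0)}\bigr), \tag{A.7}
$$

and we note that the expression in brackets does not depend on $(\psi^{(0)}, \mu^{(0)}, \tau^{(0)})$.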
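The limit in (A.7) is easy to evaluate numerically, which gives a feel for how much the two priors differ. The sketch below is illustrative only: the function name is hypothetical, and it works on the log scale with `math.lgamma` to avoid overflow. For large M the ratio tends to 1 (its logarithm tends to 0), in line with the observation in Section 4.1 that the two models give virtually identical results for large M.

```python
import math


def log_limit_ratio(m_minus, m_plus, M):
    """log of the left side of (A.7) for given m_-, m_+, and M."""
    m = m_minus + m_plus
    return (m * math.log(0.5)
            + 2 * math.lgamma(M / 2) + math.lgamma(M + m)
            - math.lgamma(M) - math.lgamma(M / 2 + m_minus)
            - math.lgamma(M / 2 + m_plus))


# Fourteen psi's: an even 7/7 split around mu versus a lopsided 13/1 split.
for M in (0.1, 1.0, 20.0, 1e6):
    balanced = log_limit_ratio(7, 7, M)
    lopsided = log_limit_ratio(13, 1, M)
    print(f"M={M:>9}: log ratio balanced {balanced:8.3f}, lopsided {lopsided:8.3f}")
```

For small M the balanced split receives a much larger value than the lopsided one, which is the reweighting toward configurations in which $\mu$ is near the median of the $\psi_i$'s.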

We now return to $\nu^c_D$ and $\nu^u_D$. The posterior is proportional to the likelihood times the prior, and because the likelihood is the same under the two models [i.e., (3a) and (5a) are identical], we obtain

$$
\left[\frac{d\nu^c_D}{d\nu^u_D}\right]\bigl(\psi^{(0)}, \mu^{(0)}, \tau^{(0)}\bigr) = A\,K\bigl(\psi^{(0)}, \theta^{(0)}\bigr),
$$

where $A$ does not depend on $(\psi^{(0)}, \mu^{(0)}, \tau^{(0)})$, as stated in the proposition.

The calculation of the Radon–Nikodym derivatives $[d\nu^c/d\nu^u]$ and $[d\nu^c_D/d\nu^u_D]$ in this way is supported by a martingale construction (see, e.g., Durrett 1991, pp. 209–210) and the main theorem of Pfanzagl (1979).

[Received November 2002. Revised June 2004.]

REFERENCES

Antoniak, C. E. (1974), "Mixtures of Dirichlet Processes With Applications to Bayesian Nonparametric Problems," The Annals of Statistics, 2, 1152–1174.

Berger, J. O. (1985), Statistical Decision Theory and Bayesian Analysis (2nd ed.), New York: Springer-Verlag.

Blackwell, D., and MacQueen, J. B. (1973), "Ferguson Distributions via Pólya Urn Schemes," The Annals of Statistics, 1, 353–355.

Burr, D., Doss, H., Cooke, G., and Goldschmidt-Clermont, P. (2003), "A Meta-Analysis of Studies on the Association of the Platelet PlA Polymorphism of Glycoprotein IIIa and Risk of Coronary Heart Disease," Statistics in Medicine, 22, 1741–1760.

CAPRIE Steering Committee (1996), "A Randomised, Blinded Trial of Clopidogrel versus Aspirin in Patients at Risk of Ischaemic Events (CAPRIE)," Lancet, 348, 1329–1339.

Carlin, J. B. (1992), "Meta-Analysis for 2 × 2 Tables: A Bayesian Approach," Statistics in Medicine, 11, 141–158.

DerSimonian, R., and Laird, N. (1986), "Meta-Analysis in Clinical Trials," Controlled Clinical Trials, 7, 177–188.

Dey, D., Müller, P., and Sinha, D. (1998), Practical Nonparametric and Semiparametric Bayesian Statistics, New York: Springer-Verlag.

Doss, H. (1983), "Bayesian Nonparametric Estimation of Location," unpublished doctoral thesis, Stanford University.

Doss, H. (1985), "Bayesian Nonparametric Estimation of the Median: Part I: Computation of the Estimates," The Annals of Statistics, 13, 1432–1444.

Doss, H. (1994), "Bayesian Nonparametric Estimation for Incomplete Data via Successive Substitution Sampling," The Annals of Statistics, 22, 1763–1786.

DuMouchel, W. (1990), "Bayesian Metaanalysis," in Statistical Methodology in the Pharmaceutical Sciences, New York: Marcel Dekker, pp. 509–529.

Durrett, R. (1991), Probability: Theory and Examples, Pacific Grove, CA: Brooks/Cole.

Escobar, M. (1988), "Estimating the Means of Several Normal Populations by Nonparametric Estimation of the Distribution of the Means," unpublished doctoral thesis, Yale University.

Escobar, M. (1994), "Estimating Normal Means With a Dirichlet Process Prior," Journal of the American Statistical Association, 89, 268–277.

Ferguson, T. S. (1973), "A Bayesian Analysis of Some Nonparametric Problems," The Annals of Statistics, 1, 209–230.

Ferguson, T. S. (1974), "Prior Distributions on Spaces of Probability Measures," The Annals of Statistics, 2, 615–629.

Gelfand, A. E., and Kottas, A. (2002), "A Computational Approach for Full Nonparametric Bayesian Inference Under Dirichlet Process Mixture Models," Journal of Computational and Graphical Statistics, 11, 289–305.

Ghosh, M., and Rao, J. N. K. (1994), "Small Area Estimation: An Appraisal," Statistical Science, 9, 55–76.

Hastings, W. K. (1970), "Monte Carlo Sampling Methods Using Markov Chains and Their Applications," Biometrika, 57, 97–109.

Morris, C. N., and Normand, S. L. (1992), "Hierarchical Models for Combining Information and for Meta-Analyses," in Bayesian Statistics 4, eds. J. M. Bernardo, J. O. Berger, A. P. Dawid, and A. F. M. Smith, Oxford, U.K.: Clarendon Press, pp. 321–335.

Muliere, P., and Tardella, L. (1998), "Approximating Distributions of Random Functionals of Ferguson-Dirichlet Priors," The Canadian Journal of Statistics, 26, 283–297.

Neal, R. M. (2000), "Markov Chain Sampling Methods for Dirichlet Process Mixture Models," Journal of Computational and Graphical Statistics, 9, 249–265.

Newton, M. A., Czado, C., and Chappell, R. (1996), "Bayesian Inference for Semiparametric Binary Regression," Journal of the American Statistical Association, 91, 142–153.

Pfanzagl, J. (1979), "Conditional Distributions as Derivatives," The Annals of Probability, 7, 1046–1050.

Selective Decontamination of the Digestive Tract Trialists' Collaborative Group (DTTCG) (1993), "Meta-Analysis of Randomised Controlled Trials of Selective Decontamination of the Digestive Tract," British Medical Journal, 307, 525–532.

Sethuraman, J. (1994), "A Constructive Definition of Dirichlet Priors," Statistica Sinica, 4, 639–650.

Sethuraman, J., and Tiwari, R. C. (1982), "Convergence of Dirichlet Measures and the Interpretation of Their Parameter," in Statistical Decision Theory and Related Topics III, Vol. 2, eds. S. Gupta and J. Berger, New York: Academic Press, pp. 305–315.

Skene, A. M., and Wakefield, J. C. (1990), "Hierarchical Models for Multicentre Binary Response Studies," Statistics in Medicine, 9, 919–929.

Smith, T. C., Spiegelhalter, D. J., and Thomas, A. (1995), "Bayesian Approaches to Random-Effects Meta-Analysis: A Comparative Study," Statistics in Medicine, 14, 2685–2699.

Spiegelhalter, D. J., Thomas, A., Best, N. G., and Gilks, W. R. (1996), BUGS: Bayesian Inference Using Gibbs Sampling, Version 0.5 (Version ii), Cambridge, U.K.: MRC Biostatistics Unit.
