Congestion Control: CSE 123: Computer Networks Stefan Savage

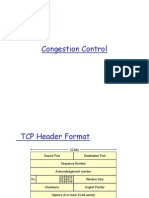

CSE 123: Computer Networks

Stefan Savage

Lecture 15: Congestion Control

Overview

Yesterday:

TCP & UDP overview

Connection setup

Flow control: resource exhaustion at end node

Today: Congestion control

Resource exhaustion within the network


Today

How fast should a sending host transmit data?

Not too fast, not too slow, just right

Should not be faster than the receiver can process

Flow control (last class)

Should not be faster than the sender's share

Bandwidth allocation

Should not be faster than the network can process

Congestion control

Congestion control and bandwidth allocation are separate ideas, but frequently combined

Resource Allocation

How much bandwidth should each flow from a source to a destination receive when they compete for resources?

What is a fair allocation?

Figure: Sources 1, 2, and 3 reach Destinations 1 and 2 through three routers; the links are labeled 10 Mbps, 4 Mbps, 10 Mbps, 6 Mbps, 100 Mbps, and 10 Mbps.

Statistical Multiplexing

1. The bigger the buffer, the lower the packet loss.

2. If the buffer never goes empty, the outgoing line is busy 100% of the time.

Congestion

Buffer intended to absorb bursts when input rate > output

But if sending rate is persistently > drain rate, queue builds

Dropped packets represent wasted work; goodput < throughput (goodput = useful throughput)

Figure: Source 2, a router, a 1.5-Mbps T1 link, and the destination, with the annotation "Packets dropped here".
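The buffering behavior on this slide can be illustrated with a minimal sketch of a drop-tail FIFO queue. The function name `drop_tail` and its tick-based model (arrivals first, then draining) are assumptions made for illustration, not something taken from the lecture.

```python
def drop_tail(arrivals, capacity, drain_per_tick):
    """Simulate a FIFO queue that drops arrivals once the buffer is full.

    arrivals       -- packets arriving in each tick (list of ints)
    capacity       -- buffer size in packets
    drain_per_tick -- packets the outgoing link can send per tick
    Returns (delivered, dropped, still_queued).
    """
    queued = delivered = dropped = 0
    for n in arrivals:
        accepted = min(n, capacity - queued)   # only free buffer slots are usable
        dropped += n - accepted                # the rest hit a full buffer
        queued += accepted
        sent = min(queued, drain_per_tick)     # link drains at a fixed rate
        queued -= sent
        delivered += sent
    return delivered, dropped, queued


if __name__ == "__main__":
    # A short burst is absorbed by the buffer: nothing is dropped.
    print(drop_tail([5, 0, 0, 0, 0], capacity=10, drain_per_tick=1))
    # A persistent overload fills the buffer and then drops every tick.
    print(drop_tail([3] * 10, capacity=5, drain_per_tick=2))
```

In the second call the sender offers 3 packets per tick to a link that drains 2, so once the buffer fills, one packet per tick is wasted work.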

Drop-Tail Queuing

Figure: goodput and latency plotted against network load, with the regions "Congestive packet loss" and "Congestion collapse" marked.

Congestion Collapse

Rough definition: When an increase in network load produces a decrease in useful work

Why does it happen?

Sender sends faster than bottleneck link speed

What's a bottleneck link?

Packets queue until dropped

In response to packets being dropped, sender retransmits

Retransmissions further congest link

All hosts repeat in steady state

Mitigation Options

Increase network resources

More buffers for queuing

Increase link speed

Pros/Cons of these approaches?

Reduce network load

Send data more slowly

How much more slowly?

When to slow down?

Designing a Control

Open loop

Explicitly reserve bandwidth in the network in advance of sending (next class, a bit)

Closed loop

Respond to feedback and adjust bandwidth allocation

Network-based

Network implements and enforces bandwidth allocation (next class)

Host-based

Hosts are responsible for controlling their sending rate to be no more than their share

What is typically used on the Internet? Why?

Proactive vs. Reactive

Figure: throughput and latency plotted against network load, with the knee and the cliff marked, along with the regions "Congestive packet loss" and "Congestion collapse".

Congestion avoidance: try to stay to the left of the knee

Congestion control: try to stay to the left of the cliff

Challenges to Address

How to detect congestion?

How to limit sending data rate?

How fast to send?

Detecting Congestion

Explicit congestion signaling

Source Quench: ICMP message from router to host

Problems?

DECBit / Explicit Congestion Notification (ECN):

Router marks packet based on queue occupancy (i.e., an indication that the packet encountered congestion along the way)

Receiver tells sender if queues are getting too full (typically in ACK)

Implicit congestion signaling

Packet loss

Assume congestion is primary source of packet loss

Lost packets (timeout, NAK) indicate congestion

Packet delay

Round-trip time increases as packets queue

Packet inter-arrival time is a function of bottleneck link

Throttling Options

Window-based (TCP)

Artificially constrain number of outstanding packets allowed in network

Increase window to send faster; decrease to send slower

Pro: Cheap to implement, good failure properties

Con: Creates traffic bursts (requires bigger buffers)

Rate-based (many streaming media protocols)

Two parameters (period, packets)

Allow sending of x packets in period y

Pro: smooth traffic

Con: fine-grained per-connection timers, what if receiver fails?
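The two throttling styles can be contrasted with a small sketch. The class names `WindowSender` and `RateSender` and their methods are hypothetical; the rate limiter simply restarts its period at the first send attempt after the previous period has expired, which is one of several reasonable choices.

```python
class WindowSender:
    """Window-based: cap the number of unacknowledged packets."""

    def __init__(self, window):
        self.window = window
        self.in_flight = 0

    def try_send(self):
        if self.in_flight < self.window:
            self.in_flight += 1
            return True
        return False                      # window full: wait for an ACK

    def on_ack(self):
        self.in_flight = max(0, self.in_flight - 1)


class RateSender:
    """Rate-based: allow at most `packets` sends in each `period` seconds."""

    def __init__(self, packets, period):
        self.packets = packets
        self.period = period
        self.period_start = 0.0
        self.sent = 0

    def try_send(self, now):
        if now - self.period_start >= self.period:
            self.period_start = now       # start a fresh period
            self.sent = 0
        if self.sent < self.packets:
            self.sent += 1
            return True
        return False                      # budget for this period is spent
```

The window sender needs no timer at all, since ACKs clock out new packets. If ACKs stop arriving, it stops sending by itself, while the rate sender keeps transmitting until something tells it to stop.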

Choosing a Send Rate

Ideally: Keep equilibrium at knee of power curve

Find knee somehow

Keep number of packets in flight the same

E.g., don't send a new packet into the network until you know one has left (i.e. by receiving an ACK)

What if you guess wrong, or if bandwidth availability changes?

Compromise: adaptive approximation

If congestion signaled, reduce sending rate by x

If data delivered successfully, increase sending rate by y

How to relate x and y? Most choices don't converge

TCP's Probing Approach

Each source independently probes the network to determine how much bandwidth is available

Changes over time, since everyone does this

Assume that packet loss implies congestion

Since errors are rare; also, requires no support from routers

Figure: a 100 Mbps Ethernet, a router, a 45 Mbps T3 link, and the sink.

Basic TCP Algorithm

Window-based congestion control

Allows congestion control and flow control mechanisms to be unified

rwin: advertised flow control window from receiver

cwnd: congestion control window estimated at sender

Estimate of how much outstanding data network can deliver in a round-trip time

Sender can only send MIN(rwin, cwnd) at any time

Idea: decrease cwnd when congestion is encountered; increase cwnd otherwise

Question: how much to adjust?
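As a small illustration of how the two windows combine, the sketch below computes how much new data a sender may put on the wire. The helper name `usable_window` and the `bytes_in_flight` parameter are assumptions for the example; the slide only states the MIN(rwin, cwnd) limit.

```python
def usable_window(rwin, cwnd, bytes_in_flight):
    """Bytes the sender may still transmit right now.

    The effective window is the smaller of the receiver's advertised
    window (flow control) and the congestion window (congestion control);
    data already in flight counts against it.
    """
    return max(0, min(rwin, cwnd) - bytes_in_flight)


if __name__ == "__main__":
    # The network is the bottleneck here: cwnd < rwin.
    print(usable_window(rwin=65535, cwnd=14600, bytes_in_flight=10000))
    # The receiver is the bottleneck here: rwin < cwnd.
    print(usable_window(rwin=4000, cwnd=14600, bytes_in_flight=10000))
```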

Congestion Avoidance

Goal: Adapt to changes in available bandwidth

Additive Increase, Multiplicative Decrease (AIMD)

Increase sending rate by a constant (e.g. by 1500 bytes)

Decrease sending rate by a linear factor (e.g. divide by 2)

Rough intuition for why this works

Let $L_i$ be queue length at time $i$

In steady state: $L_i = N$, where $N$ is a constant

During congestion, $L_i = N + y L_{i-1}$, where $y > 0$

Consequence: queue size increases multiplicatively

Must reduce sending rate multiplicatively as well
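One way to see why the decrease should be multiplicative is to let two flows share a link and compare policies. This is a toy model under stated assumptions: both flows see every congestion signal at the same moment, rates are in arbitrary units, and the function `share_link` is a hypothetical helper, not part of the lecture.

```python
def share_link(x1, x2, capacity, rounds, decrease):
    """Two flows add 1 per round until their sum exceeds capacity,
    then both apply `decrease`. Returns the final pair of rates."""
    for _ in range(rounds):
        if x1 + x2 > capacity:
            x1, x2 = decrease(x1), decrease(x2)   # congestion signaled
        else:
            x1, x2 = x1 + 1.0, x2 + 1.0           # additive increase
    return x1, x2


def halve(x):
    return x / 2.0              # multiplicative decrease


def minus_one(x):
    return max(x - 1.0, 0.0)    # additive decrease, for comparison


if __name__ == "__main__":
    print("AIMD:", share_link(1, 40, 50, 500, halve))
    print("AIAD:", share_link(1, 40, 50, 500, minus_one))
```

With halving, the gap between the two flows shrinks by half at every congestion event, so they drift toward equal shares. With additive decrease the initial gap of 39 never changes, so the unfair starting allocation persists.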

AIMD

Increase slowly while we believe there is bandwidth

Additive increase per RTT

cwnd += 1 full packet / RTT

Decrease quickly when there is loss (went too far!)

Multiplicative decrease

cwnd /= 2

Figure: packets exchanged between Source and Destination.
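The per-RTT rule produces the familiar sawtooth. The sketch below assumes a loss occurs in any RTT where cwnd exceeds a fixed `capacity` (in packets); real paths are not that tidy, and `aimd_trace` is a hypothetical name.

```python
def aimd_trace(capacity, rtts, cwnd=1.0):
    """Return cwnd at the start of each RTT under AIMD.

    Assumption: the path carries `capacity` packets per RTT, and sending
    more than that in one RTT causes exactly one loss signal.
    """
    trace = []
    for _ in range(rtts):
        trace.append(cwnd)
        if cwnd > capacity:
            cwnd /= 2          # multiplicative decrease after a loss
        else:
            cwnd += 1          # additive increase: one packet per RTT
    return trace


if __name__ == "__main__":
    print(aimd_trace(capacity=4, rtts=8))
```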

TCP Congestion Control

Only W packets may be outstanding

Rule for adjusting congestion window (W)

If an ACK is received: W ← W + 1/W

If a packet is lost: W ← W/2
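The per-ACK rule adds up to roughly one packet per round trip, because about W ACKs arrive per RTT and each adds 1/W. A minimal sketch, with hypothetical function names and W measured in packets:

```python
def on_ack(w):
    """Per-ACK additive increase: W <- W + 1/W."""
    return w + 1.0 / w


def on_loss(w):
    """Multiplicative decrease: W <- W/2."""
    return w / 2.0


def after_one_rtt(w):
    """Apply the ACK rule once for each packet in the current window."""
    for _ in range(int(w)):
        w = on_ack(w)
    return w


if __name__ == "__main__":
    # Ten ACKs at W = 10 grow the window by slightly less than one packet.
    print(after_one_rtt(10.0))
```

The growth is slightly under one packet because W increases during the round trip, so later ACKs add a little less than the first.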

Slow Start

Goal: quickly find the equilibrium sending rate

Quickly increase sending rate until congestion detected

Remember last rate that worked and don't overshoot it

Algorithm:

On new connection, or after timeout, set cwnd = 1 full pkt

For each segment acknowledged, increment cwnd by 1 pkt

If timeout then divide cwnd by 2, and set ssthresh = cwnd

If cwnd >= ssthresh then exit slow start

Why called slow? It's exponential after all
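The per-ACK increment is what makes slow start exponential: every segment sent in one RTT returns an ACK, and every ACK adds one packet. The sketch below assumes no loss and one ACK per segment; `slow_start` is a hypothetical helper with cwnd counted in packets.

```python
def slow_start(ssthresh, cwnd=1):
    """Return cwnd at the start of each RTT until slow start exits."""
    trace = [cwnd]
    while cwnd < ssthresh:
        for _ in range(cwnd):        # one ACK per segment sent this RTT
            if cwnd >= ssthresh:     # exit slow start at the threshold
                break
            cwnd += 1                # +1 packet per ACK
        trace.append(cwnd)
    return trace


if __name__ == "__main__":
    print(slow_start(ssthresh=8))
    print(slow_start(ssthresh=10))
```

The first call doubles each round trip (1, 2, 4, 8). The second stops at the threshold partway through a round trip rather than overshooting to 16.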

Slow Start Example

Figure: sender/receiver timeline in which cwnd grows through 1, 2, 4, and 8, next to a plot of cwnd (0 to 300) against round-trip times (0 to 8).

Basic Mechanisms

Slow Start + Congestion Avoidance

Figure: cwnd (0 to 18) plotted against round-trip times (0 to 40), annotated with "Timeout", "ssthresh", "Slow start", and "Congestion avoidance".
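The two mechanisms can be combined in a per-RTT sketch. Assumptions: losses are given as a set of RTT indices and are always detected by timeout, cwnd is in whole packets, and the floor of 2 packets on ssthresh is borrowed from common TCP practice rather than from the slides. The name `tcp_trace` is hypothetical.

```python
def tcp_trace(rtts, loss_at, ssthresh=16, cwnd=1):
    """Return cwnd at the start of each RTT.

    rtts    -- number of round trips to simulate
    loss_at -- set of RTT indices in which a timeout occurs (assumed input)
    """
    trace = []
    for rtt in range(rtts):
        trace.append(cwnd)
        if rtt in loss_at:
            ssthresh = max(cwnd // 2, 2)     # remember half the rate that failed
            cwnd = 1                         # restart from one packet
        elif cwnd < ssthresh:
            cwnd = min(cwnd * 2, ssthresh)   # slow start: double per RTT
        else:
            cwnd += 1                        # congestion avoidance: +1 per RTT
    return trace


if __name__ == "__main__":
    print(tcp_trace(rtts=10, loss_at={5}, ssthresh=8))
```

After the timeout in the example, slow start runs only up to the new ssthresh of 5 and then hands over to the linear increase.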

Fast Retransmit & Recovery

Fast retransmit

Timeouts are slow (1 second is fastest timeout on many TCPs)

When packet is lost, receiver still ACKs last in-order packet

Use 3 duplicate ACKs to indicate a loss; detect losses quickly

Why 3? When wouldn't this work?

Fast recovery

Goal: avoid stalling after loss

If there are still ACKs coming in, then no need for slow start

If a packet has made it through -> we can send another one

Divide cwnd by 2 after fast retransmit

Increment cwnd by 1 full pkt for each additional duplicate ACK
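The duplicate-ACK rules above can be sketched as a small state machine. This is a simplification under stated assumptions: ACK numbers are cumulative (the next segment the receiver expects), cwnd is in packets, and the step that resets cwnd to ssthresh when new data is finally acknowledged is not on the slide but follows standard Reno-style behavior. Normal window growth on new ACKs is left out, and the class name is hypothetical.

```python
class FastRecoverySender:
    DUP_ACK_THRESHOLD = 3

    def __init__(self, cwnd):
        self.cwnd = float(cwnd)
        self.ssthresh = float(cwnd)
        self.last_ack = 0
        self.dup_acks = 0
        self.in_recovery = False
        self.retransmitted = []          # segments resent by fast retransmit

    def on_ack(self, ack):
        if ack > self.last_ack:          # new data acknowledged
            self.last_ack = ack
            self.dup_acks = 0
            if self.in_recovery:         # loss repaired: deflate the window
                self.cwnd = self.ssthresh
                self.in_recovery = False
            return
        if ack < self.last_ack:          # stale ACK, ignore
            return
        self.dup_acks += 1
        if self.in_recovery:
            self.cwnd += 1               # each extra dup ACK: a packet left the network
        elif self.dup_acks == self.DUP_ACK_THRESHOLD:
            self.retransmitted.append(ack)           # fast retransmit
            self.ssthresh = max(self.cwnd / 2, 1.0)  # divide cwnd by 2
            self.cwnd = self.ssthresh
            self.in_recovery = True
```

Feeding it the ACK sequence 1, 2, 3, 3, 3, 3, 3, 6 triggers one retransmission of segment 3 on the third duplicate, inflates cwnd by one on the fourth, and returns to the halved window once ACK 6 arrives.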

Fast Retransmit Example

Figure: sender/receiver timeline showing three duplicate ACKs, the fast retransmit, and fast recovery (increase cwnd by 1).

More Sophistication

Slow Start + Congestion Avoidance + Fast Retransmit + Fast Recovery

Figure: cwnd (0 to 18) plotted against round-trip times (0 to 40), annotated with "Fast recovery".

Delayed ACKs

In request/response programs, want to combine an ACK to a request with a response in the same packet

Delayed ACK algorithm:

Wait 200ms before ACKing

Must ACK every other packet (or packet burst)

Impact on slow start?

Must not delay duplicate ACKs

Why? What is the interaction with the congestion control algorithms?
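One way to explore the slow-start question is to count ACKs per round trip. The sketch assumes the sender adds one packet to cwnd per ACK received (no byte counting), that a lone unpaired segment is still acknowledged once the delay timer fires, and that no packets are lost. `slow_start_growth` and `segments_per_ack` are hypothetical names.

```python
import math


def slow_start_growth(rtts, segments_per_ack=1, cwnd=1):
    """Return cwnd at the start of each RTT during loss-free slow start."""
    trace = [cwnd]
    for _ in range(rtts):
        acks = math.ceil(cwnd / segments_per_ack)  # ACKs returned this RTT
        cwnd += acks                               # +1 packet per ACK
        trace.append(cwnd)
    return trace


if __name__ == "__main__":
    print("ACK every packet:     ", slow_start_growth(5, segments_per_ack=1))
    print("ACK every other packet:", slow_start_growth(5, segments_per_ack=2))
```

Under these assumptions, acknowledging every other packet cuts the growth factor from about 2 per round trip to about 1.5.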

Short Connections

Short connection: only contains a handful of pkts

How do short connections and Slow-Start interact?

What happens when a packet is lost during Slow-Start?

Will short connections be able to use full bandwidth?

Do you think most flows are short or long?

Do you think most traffic is in short flows or long flows?
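A rough count of round trips shows why short flows rarely reach full bandwidth. The sketch assumes loss-free slow start that doubles every RTT, no receiver-window limit, and ignores connection setup; `rtts_to_send` is a hypothetical helper.

```python
def rtts_to_send(n_packets, initial_cwnd=1):
    """Round trips needed to send n_packets while cwnd doubles each RTT."""
    sent, cwnd, rtts = 0, initial_cwnd, 0
    while sent < n_packets:
        sent += cwnd     # one full window goes out per RTT
        cwnd *= 2        # slow start doubles the window
        rtts += 1
    return rtts


if __name__ == "__main__":
    for n in (1, 10, 100):
        print(n, "packets:", rtts_to_send(n), "RTTs")
```

A 10-packet transfer takes 4 round trips regardless of link speed, so its completion time is set by the RTT rather than by the available bandwidth.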

Open Issues

TCP is designed around the premise of cooperation

What happens to TCP if it competes with a UDP flow?

What if we divide cwnd by 3 instead of 2 after a loss?

There are a bunch of magic numbers

Decrease by 2x, increase by 1/cwnd, 3 duplicate ACKs, initial timeout = 3 seconds, etc.

But overall it works quite well!

Summary

TCP actively probes the network for bandwidth, assuming that a packet loss signals congestion

The congestion window is managed with an additive increase/multiplicative decrease policy

It took fast retransmit and fast recovery to get there

Slow start is used to avoid lengthy initial delays

Ramp up to near target rate, then switch to AIMD

Fast recovery is used to keep network full while recovering from a loss

For next time

Last class: Resource management in the network (i.e., Quality of Service)

P&D 6.1-6.2, 6.5.1
