Answer 14

Uploaded by Gabriella Goodfield
Chapter 14 - Multiple Regressions and Correlation Analysis


1.
a. Multiple regression equation
b. The Y-intercept
c. $374,748, found by Ŷ = 64,100 + 0.394(796,000) + 9.6(6,940) - 11,600(6.0)
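The substitution in part c can be checked with a few lines of Python. This is a minimal sketch; the helper name `predict` is illustrative and not part of the original answer, while the coefficients and inputs are the ones quoted above.

```python
def predict(intercept, coefficients, values):
    """Evaluate Y-hat = a + b1*x1 + ... + bk*xk for one observation."""
    if len(coefficients) != len(values):
        raise ValueError("need exactly one value per coefficient")
    return intercept + sum(b * x for b, x in zip(coefficients, values))

# Problem 1c: the third coefficient is negative (-11,600).
y_hat = predict(64_100, [0.394, 9.6, -11_600], [796_000, 6_940, 6.0])
print(round(y_hat))  # 374748
```

The same helper reproduces the 105.014 in problem 2e when given that problem's intercept, coefficients, and values.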

2.
a. Multiple regression equation
b. One dependent, four independent
c. A regression coefficient
d. 0.002
e. 105.014, found by Ŷ = 11.6 + 0.4(6) + 0.286(280) + 0.112(97) + 0.002(35)

3.
a. 497.736, found by Ŷ = 16.24 + 0.017(18) + 0.0028(26,500) + 42(3) + 0.0012(156,000) + 0.19(141) + 26.8(2.5)
b. Two more social activities. Income added only 28 to the index; social activities added 53.6.

4.
a. 30.69 cubic feet
b. A decrease of 1.86 cubic feet, down to 28.83 cubic feet.
c. Yes. Logically, as the amount of insulation increases and the outdoor temperature increases, the consumption of natural gas decreases.

5.
a. s_Y.12 = √[SSE / (n - (k + 1))] = √[583.693 / (65 - (2 + 1))] = √9.414 = 3.068
95% of the residuals will be between ±6.136, found by 2(3.068)
b. R² = SSR / SStotal = 77.907 / 661.6 = .118
The independent variables explain 11.8% of the variation.
c. R²adj = 1 - [SSE / (n - (k + 1))] / [SStotal / (n - 1)] = 1 - [583.693 / (65 - (2 + 1))] / [661.6 / (65 - 1)] = 1 - 9.414 / 10.3375 = 1 - .911 = .089

6.
a. s_Y.12345 = √[SSE / (n - (k + 1))] = √[2647.38 / (52 - (5 + 1))] = √57.55 = 7.59
95% of the residuals will be between ±15.17, found by 2(7.59)
b. R² = SSR / SStotal = 3710 / 6357.38 = .584
The independent variables explain 58.4% of the variation.
c. R²adj = 1 - [SSE / (n - (k + 1))] / [SStotal / (n - 1)] = 1 - [2647.38 / 46] / [6357.38 / 51] = 1 - 57.55 / 124.65 = 1 - .462 = .538
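The three summary measures in problems 5 and 6 all come from SSE, SStotal, n, and k. A minimal sketch of that arithmetic follows; the function name `fit_summary` is illustrative, and SSR is taken as SStotal - SSE, which agrees with the figures quoted in the answers.

```python
import math

def fit_summary(sse, ss_total, n, k):
    """Return (standard error of estimate, R-squared, adjusted R-squared)."""
    df_error = n - (k + 1)
    s = math.sqrt(sse / df_error)
    r2 = (ss_total - sse) / ss_total  # SSR / SStotal
    r2_adj = 1 - (sse / df_error) / (ss_total / (n - 1))
    return s, r2, r2_adj

# Problem 5: SSE = 583.693, SStotal = 661.6, n = 65, k = 2
s, r2, r2_adj = fit_summary(583.693, 661.6, 65, 2)
print(round(s, 3), round(r2, 3), round(r2_adj, 3))  # 3.068 0.118 0.089
```

Calling `fit_summary(2647.38, 6357.38, 52, 5)` gives the problem 6 values in the same way.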

7.
a. Ŷ = 84.998 + 2.391X1 - 0.4086X2
b. 90.0674, found by Ŷ = 84.998 + 2.391(4) - 0.4086(11)
c. n = 65 and k = 2
d. H0: β1 = β2 = 0
H1: Not all βs are 0
Reject H0 if F > 3.15
F = 4.14. Reject H0. Not all net regression coefficients equal zero.
e.
For X1          For X2
H0: β1 = 0      H0: β2 = 0
H1: β1 ≠ 0      H1: β2 ≠ 0
t = 1.99        t = -2.38
Reject H0 if t > 2.0 or t < -2.0
Delete variable 1 and keep 2.
f. The regression analysis should be repeated with only X2 as the independent variable.

8.
a. Ŷ = 7.987 + 0.12242X1 + 0.12166X2 - 0.06281X3 + 0.5235X4 - 0.06472X5
b. n = 52 and k = 5
c. H0: β1 = β2 = β3 = β4 = β5 = 0
H1: Not all βs are 0
Reject H0 if F > 2.43
Since the computed value of F is 12.89, reject H0. Not all of the regression coefficients are zero.
d.
For X1        For X2        For X3        For X4        For X5
H0: β1 = 0    H0: β2 = 0    H0: β3 = 0    H0: β4 = 0    H0: β5 = 0
H1: β1 ≠ 0    H1: β2 ≠ 0    H1: β3 ≠ 0    H1: β4 ≠ 0    H1: β5 ≠ 0
t = 3.92      t = 2.27      t = -1.61     t = 3.69      t = -1.62
Reject H0 if t > 2.015 or t < -2.015. Consider eliminating variables 3 and 5.
e. Delete variable 3 then rerun. Perhaps you will delete variable 5 also.
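The computed F values in the global tests of problems 7 and 8 can be reproduced from sums of squares. The sketch below assumes that the SSR and SSE figures given in problems 5 and 6 belong to the same two regressions (they share n and k); that pairing is an inference, and the function name `global_f` is illustrative.

```python
def global_f(ssr, sse, n, k):
    """Global test statistic: F = (SSR / k) / (SSE / (n - (k + 1)))."""
    msr = ssr / k
    mse = sse / (n - (k + 1))
    return msr / mse

# (SSR, SSE, n, k, critical F); the critical values are those quoted in the answers.
cases = {
    "Problem 7": (77.907, 583.693, 65, 2, 3.15),
    "Problem 8": (3710.0, 2647.38, 52, 5, 2.43),
}
for name, (ssr, sse, n, k, f_crit) in cases.items():
    f = global_f(ssr, sse, n, k)
    print(f"{name}: F = {f:.2f}, reject H0: {f > f_crit}")
```

Under that assumption the statistics round to 4.14 and 12.89, matching the values stated in parts 7d and 8c.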

9.
a. The regression equation is: Performance = 29.3 + 5.22 Aptitude + 22.1 Union

Predictor   Coef     SE Coef   T      P
Constant    29.28    12.77     2.29   0.041
Aptitude    5.222    1.702     3.07   0.010
Union       22.135   8.852     2.50   0.028

S = 16.9166   R-Sq = 53.3%   R-Sq(adj) = 45.5%

Analysis of Variance
Source           DF   SS       MS       F      P
Regression       2    3919.3   1959.6   6.85   0.010
Residual Error   12   3434.0   286.2
Total            14   7353.3

b. These variables are effective in predicting performance. Adjusted for the number of independent variables, they explain 45.5 percent of the variation in performance. In particular, union membership increases the typical performance by 22.1.
c. H0: β2 = 0
H1: β2 ≠ 0
Reject H0 if t < -2.1788 or t > 2.1788
Since 2.50 is greater than 2.1788, we reject the null hypothesis and conclude that union membership is significant and should be included.
d. When you consider the interaction variable, the regression equation is
Performance = 38.7 + 3.80 Aptitude - 0.1 Union + 3.61 X1X2

Predictor   Coef    SE Coef   T       P
Constant    38.69   15.62     2.48    0.031
Aptitude    3.802   2.179     1.74    0.109
Union       -0.10   23.14     -0.00   0.997
X1X2        3.610   3.473     1.04    0.321

The t value corresponding to the interaction term is 1.04. This is not significant. So we conclude there is no interaction between aptitude and union membership when predicting job performance.
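Each T entry in the problem 9 output is the coefficient divided by its standard error, and the decision in part c compares its absolute value with the critical value. A small sketch of that check, using the part a figures; the names `t_ratio` and `is_significant` are illustrative.

```python
def t_ratio(coef, se):
    """t statistic for H0: beta = 0."""
    return coef / se

def is_significant(t, critical):
    """Two-tailed decision: reject H0 when |t| exceeds the critical value."""
    return abs(t) > critical

# (Coef, SE Coef) from the problem 9a output; 2.1788 is the critical value in part c.
rows = {"Aptitude": (5.222, 1.702), "Union": (22.135, 8.852)}
critical = 2.1788
for name, (coef, se) in rows.items():
    t = t_ratio(coef, se)
    print(f"{name}: t = {t:.2f}, significant: {is_significant(t, critical)}")
```

The same ratio applied to the interaction row in part d (3.610 / 3.473) gives about 1.04, below any two-tailed 0.05 critical value for this sample size.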

10.
The regression equation is: Commissions = -315 + 2.15 Calls + 0.0941 Miles - 0.000556 X1X2

Predictor   Coef         SE Coef     T       P
Constant    -314.6       162.6       -1.93   0.067
Calls       2.150        1.159       1.86    0.078
Miles       0.09410      0.05944     1.58    0.128
X1X2        -0.0005564   0.0004215   -1.32   0.201

The t value corresponding to the interaction term is -1.32. This is not significant. So we conclude there is no interaction.

11.
a. The regression equation is Price = 3080 - 54.2 Bidders + 16.3 Age

Predictor   Coef     SE Coef   T       P
Constant    3080.1   343.9     8.96    0.000
Bidders     -54.19   12.28     -4.41   0.000
Age         16.289   3.784     4.30    0.000

The price decreases 54.2 as each additional bidder participates. Meanwhile the price increases 16.3 as the painting gets older. While one would expect older paintings to be worth more, it is unexpected that the price goes down as more bidders participate!
b. The regression equation is Price = 3972 - 185 Bidders + 6.35 Age + 1.46 X1X2

Predictor   Coef     SE Coef   T       P
Constant    3971.7   850.2     4.67    0.000
Bidders     -185.0   114.9     -1.61   0.122
Age         6.353    9.455     0.67    0.509
X1X2        1.462    1.277     1.15    0.265

The t value corresponding to the interaction term is 1.15. This is not significant. So we conclude there is no interaction.


c. In the stepwise procedure, the number of bidders enters the equation first. Then the interaction term enters. The variable age would not be included as it is not significant.

Response is Price on 3 predictors, with N = 25

Step        1       2
Constant    4507    4540

Bidders     -57     -256
T-Value     -3.53   -5.59
P-Value     0.002   0.000

X1X2                2.25
T-Value             4.49
P-Value             0.000

S           295     218
R-Sq        35.11   66.14
R-Sq(adj)   32.29   63.06

12.
The number of family members would enter first. Then their income would come in next. None of the other variables is significant.

Response is Size on 4 predictors, with N = 10

Step        1        2
Constant    1271.6   712.7

Family      541      372
T-Value     6.12     3.59
P-Value     0.000    0.009

Income               12.1
T-Value              2.26
P-Value              0.059

S           336      273
R-Sq        82.42    89.82
R-Sq(adj)   80.22    86.92

13.
a. n = 40
b. 4
c. R² = 750/1250 = 0.60
d. s_Y.1234 = √(500/35) = 3.7796
e. H0: β1 = β2 = β3 = β4 = 0
H1: Not all βs equal 0
H0 is rejected if F > 2.65
F = (750/4) / (500/35) = 13.125. H0 is rejected. At least one βi does not equal zero.


14.
H0: β1 = 0      H0: β2 = 0
H1: β1 ≠ 0      H1: β2 ≠ 0
H0 is rejected if t < -2.074 or t > 2.074
t = 2.676/0.56 = 4.779      t = 0.880/0.710 = 1.239
The second variable can be deleted.

15.
a. n = 26
b. R² = 100/140 = 0.7143
c. 1.4142, found by √2
d. H0: β1 = β2 = β3 = β4 = β5 = 0    H1: Not all βs are 0
Reject H0 if F > 2.71
Computed F = 10.0. Reject H0. At least one regression coefficient is not zero.
e. H0 is rejected in each case if t < -2.086 or t > 2.086. X1 and X5 should be dropped.

16.
H0: β1 = β2 = β3 = β4 = β5 = 0
H1: Not all βs equal zero.
df1 = 5
df2 = 20 - (5 + 1) = 14, so H0 is rejected if F > 2.96

Source       SS       df   MS       F
Regression   448.28   5    89.656   17.58
Error        71.40    14   5.10
Total        519.68   19

So H0 is rejected. Not all the regression coefficients equal zero.

17.
a. $28,000
b. 0.5809, found by R² = SSR/SStotal = 3050/5250
c. 9.199, found by √84.62
d. H0 is rejected if F > 2.97 (approximately). Computed F = 1016.67/84.62 = 12.01. H0 is rejected. At least one regression coefficient is not zero.
e. If computed t is to the left of -2.056 or to the right of 2.056, the null hypothesis in each of these cases is rejected. Computed t for X2 and X3 exceed the critical value. Thus, population and advertising expenses should be retained and number of competitors, X1, dropped.

18.
a. The strongest relationship is between sales and income (0.964). A problem could occur if both cars and outlets are part of the final solution. Also, outlets and income are strongly correlated (0.825). This is called multicollinearity.
b. R² = 1593.81/1602.89 = 0.9943
c. H0 is rejected. At least one regression coefficient is not zero. The computed value of F is 140.42.
d. Delete outlets and bosses. Critical values are -2.776 and 2.776.
e. R² = 1593.66/1602.89 = 0.9942. There was little change in the coefficient of determination.
f. The normality assumption appears reasonable.
g. There is nothing unusual about the plots.

19.
a. The strongest correlation is between GPA and legal. No problem with multicollinearity.
b. R² = 4.3595/5.0631 = 0.8610
c. H0 is rejected if F > 5.41
F = 1.4532/0.1407 = 10.328. At least one coefficient is not zero.
d. Any H0 is rejected if t < -2.571 or t > 2.571. It appears that only GPA is significant. Verbal and math could be eliminated.
e. R² = 4.2061/5.0631 = 0.8307. R² has only been reduced 0.0303.
f. The residuals appear slightly skewed (positive), but acceptable.
g. There does not seem to be a problem with the plot.

20.
a. The correlation matrix is:

         Salary   Years   Rating
Years    0.868
Rating   0.547    0.187
Master   0.311    0.208   0.458

Years has the strongest correlation with salary. There does not appear to be a problem with multicollinearity.
b. The regression equation is: Ŷ = 19.92 + 0.899X1 + 0.154X2 - 0.67X3
Ŷ = 33.655 or $33,655
c. H0 is rejected if F > 3.24. Computed F = 301.06/5.71 = 52.72
H0 is rejected. At least one regression coefficient is not zero.
d. A regression coefficient is dropped if computed t is to the left of -2.120 or right of 2.120. Keep years and rating; drop masters.
e. Dropping masters, we have:
Salary = 20.1157 + 0.8926(years) + 0.1464(rating)
f. The stem and leaf display and the histogram revealed no problem with the assumption of normality. Again using MINITAB:

Midpoint   Count
-4         1   *
-3         1   *
-2         3   ***
-1         3   ***
 0         4   ****
 1         4   ****
 2         0
 3         3   ***
 4         0
 5         1   *

g. There does not appear to be a pattern to the residuals according to the following MINITAB plot.
[Plot: residuals (Y - Y') versus fitted values Y']

21.
a. The correlation of Screen and Price is 0.893. So there does appear to be a linear relationship between the two.
b. Price is the dependent variable.
c. The regression equation is Price = -2484 + 101 Screen. For each inch increase in screen size, the price increases $101 on average.
d. Using dummy indicator variables for Sharp and Sony, the regression equation is
Price = -2308 + 94.1 Screen + 15 Manufacturer Sharp + 381 Manufacturer Sony
For the same screen size, Sharp can obtain on average $15 more than Samsung and Sony can collect an additional benefit of $381.

e. Here is some of the output.

Predictor            Coef      SE Coef   T       P
Constant             -2308.2   492.0     -4.69   0.000
Screen               94.12     10.83     8.69    0.000
Manufacturer_Sharp   15.1      171.6     0.09    0.931
Manufacturer_Sony    381.4     168.8     2.26    0.036

The p-value for Sharp is relatively large. The null hypothesis that its coefficient is zero would not be rejected. That means Sharp may not have any real advantage over Samsung. On the other hand, the p-value for the Sony coefficient is quite small. That indicates the difference did not happen by chance and there is some real advantage to Sony over Samsung.
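The dummy-variable model in parts d and e can be read as three parallel lines, one per manufacturer. The sketch below encodes the coefficients from the output table (the constant is -2308.2 there); the function name `predict_price` and the 46-inch screen size are illustrative assumptions, not values from the exercise.

```python
def predict_price(screen, manufacturer):
    """Fitted price from the problem 21e output; Samsung is the baseline."""
    sharp = 1 if manufacturer == "Sharp" else 0
    sony = 1 if manufacturer == "Sony" else 0
    return -2308.2 + 94.12 * screen + 15.1 * sharp + 381.4 * sony

# Hypothetical 46-inch set from each manufacturer.
for maker in ("Samsung", "Sharp", "Sony"):
    print(maker, round(predict_price(46, maker), 2))
```

Whatever screen size is chosen, the Sony and Sharp predictions differ from the Samsung prediction by exactly the two dummy coefficients, which is what the interpretation in part d relies on.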


f. A histogram of the residuals indicates they follow a normal distribution.
[Histogram of the residuals (RESI1) with fitted normal curve]
g. The residual variation may be increasing for larger fitted values.
[Plot: Residuals vs. Fits for LCD's (RESI1 versus FITS1)]

22.
a. The correlation of Income and Median Age = 0.721. So there does appear to be a linear relationship between the two.
b. Income is the dependent variable.
c. The regression equation is Income = 22805 + 362 Median Age. For each year increase in age, the income increases $362 on average.
d. Using an indicator variable for a coastal county, the regression equation is
Income = 29619 + 137 Median Age + 10640 Coastal.
That changes the estimated effect of an additional year of age to $137 and the effect of living in the coastal area as adding $10,640 to income.
e. Notice the p-values are virtually zero on the output following. That indicates both variables are significant influences on income.

Predictor    Coef      SE Coef   T          P
Constant     29619.2   0.3       90269.67   0.000
Median Age   136.887   0.008     18058.14   0.000
Coastal      10639.7   0.2       54546.92   0.000


f. A histogram of the residuals is close to a normal distribution.
[Histogram of the residuals (RESI2) with fitted normal curve: Mean = -3.23376E-12, StDev = 0.2005, N = 9]
g. The variation is about the same across the different fitted values.
[Plot: Residuals vs. Fits (RESI2 versus FITS2)]


23.
a. [Scatterplot of Sales vs Advertising, Accounts, Competitors, Potential]
Sales seem to fall with the number of competitors and rise with the number of accounts and potential.

b. Pearson correlations

              Sales    Advertising   Accounts   Competitors
Advertising   0.159
Accounts      0.783    0.173
Competitors   -0.833   -0.038        -0.324
Potential     0.407    -0.071        0.468      -0.202

The number of accounts and the market potential are moderately correlated.
c. The regression equation is
Sales = 178 + 1.81 Advertising + 3.32 Accounts - 21.2 Competitors + 0.325 Potential

Predictor     Coef       SE Coef   T        P
Constant      178.32     12.96     13.76    0.000
Advertising   1.807      1.081     1.67     0.109
Accounts      3.3178     0.1629    20.37    0.000
Competitors   -21.1850   0.7879    -26.89   0.000
Potential     0.3245     0.4678    0.69     0.495

S = 9.60441   R-Sq = 98.9%   R-Sq(adj) = 98.7%

Analysis of Variance
Source           DF   SS       MS      F        P
Regression       4    176777   44194   479.10   0.000
Residual Error   21   1937     92
Total            25   178714

The computed F value is quite large. So we can reject the null hypothesis that all of the regression coefficients are zero. We conclude that some of the independent variables are effective in explaining sales.
d. Market potential and advertising have large p-values (0.495 and 0.109, respectively). You would probably cut them.


e. If you omit potential, the regression equation is
Sales = 180 + 1.68 Advertising + 3.37 Accounts - 21.2 Competitors

Predictor     Coef       SE Coef   T        P
Constant      179.84     12.62     14.25    0.000
Advertising   1.677      1.052     1.59     0.125
Accounts      3.3694     0.1432    23.52    0.000
Competitors   -21.2165   0.7773    -27.30   0.000

Now advertising is not significant. That would also lead you to cut out the advertising variable and report that the polished regression equation is:
Sales = 187 + 3.41 Accounts - 21.2 Competitors.

Predictor     Coef       SE Coef   T        P
Constant      186.69     12.26     15.23    0.000
Accounts      3.4081     0.1458    23.37    0.000
Competitors   -21.1930   0.8028    -26.40   0.000

f. [Histogram of the Residuals (response is Sales)]
The histogram looks to be normal. There are no problems shown in this plot.
g. The variance inflation factor for both variables is 1.1. They are less than 10. There are no troubles as this value indicates the independent variables are not strongly correlated with each other.
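With only two independent variables left (Accounts and Competitors), each variance inflation factor reduces to 1 / (1 - r²), where r is the correlation between the two, given as -0.324 in the part b matrix. A minimal sketch of that special case; the function name is illustrative.

```python
def vif_two_predictors(r):
    """VIF = 1 / (1 - R_j^2); with two predictors R_j^2 is their squared correlation."""
    return 1 / (1 - r ** 2)

# Correlation between Accounts and Competitors from problem 23b.
print(round(vif_two_predictors(-0.324), 1))  # 1.1
```

With more than two predictors, R_j² would instead come from regressing each independent variable on all the others.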


24.
a. Ŷ = 5.7328 + 0.00754X1 + 0.0509X2 + 1.0974X3
b. H0: β1 = β2 = β3 = 0
H1: Not all βs = 0
Reject H0 if F > 3.07
F = (11.3437/3) / (2.2446/21) = 35.38
Since 35.38 exceeds 3.07, H0 is rejected.
c. All coefficients are significant. Do not delete any.
d. The residuals appear to be random. No problem.
[Plot: Residual versus Fitted]
e. The histogram appears to be normal. No problem.

Histogram of Residual   N = 25
Midpoint   Count
-0.6       1   *
-0.4       3   ***
-0.2       6   ******
 0.0       6   ******
 0.2       6   ******
 0.4       2   **
 0.6       1   *

25.
a. The correlation matrix is:

           Salary   GPA
GPA        0.902
Business   0.911    0.851

The two independent variables are related. There may be multicollinearity.
b. The regression equation is: Salary = 23.447 + 2.775 GPA + 1.307 Business. As GPA increases by one point salary increases by $2775. The average business school graduate makes $1307 more than a corresponding non-business graduate. Estimated salary is $33,079; found by $23,447 + 2775(3.00) + 1307(1).
c. R² = 21.182/23.857 = 0.888
To conduct the global test: H0: β1 = β2 = 0 versus H1: Not all βs = 0
At the 0.05 significance level, H0 is rejected if F > 3.89.

Source       DF   SS       MS       F       p
Regression   2    21.182   10.591   47.50   0.000
Error        12   2.676    0.223
Total        14

The computed value of F is 47.50, so H0 is rejected. Some of the regression coefficients are not zero.
d. Since the p-values are less than 0.05, there is no need to delete variables.

Predictor   Coef     SE Coef   T      P
Constant    23.447   3.490     6.72   0.000
GPA         2.775    1.107     2.51   0.028
Business    1.3071   0.4660    2.80   0.016

e. The residuals appear normally distributed.
[Histogram of the Residuals (response is Salary)]
f. The variance is the same as we move from small values to large. So there is no problem with the homoscedasticity assumption.
[Plot: residuals (RESI1) versus fitted values (FITS2)]

26.
a. Ŷ = 28.2 + 0.0287X1 + 0.650X2 - 0.049X3 - 0.0004X4 + 0.723X5
b. R² = 0.750
c & d.

Predictor   Coef        Stdev      t-ratio   P
Constant    28.242      2.986      9.46      0.000
Value       0.028669    0.004970   5.77      0.000
Years       0.6497      0.2412     2.69      0.014
Age         -0.04895    0.03126    -1.57     0.134
Mortgage    -0.000405   0.001269   -0.32     0.753
Sex         0.7227      0.2491     2.90      0.009

s = 0.5911   R-sq = 75.0%   R-sq(adj) = 68.4%

Analysis of Variance
Source       DF   SS        MS       F       p
Regression   5    19.8914   3.9783   11.39   0.000
Error        19   6.6390    0.3494
Total        24   26.5304

Consider dropping the variables age and mortgage.
e. The new regression equation is: Ŷ = 29.81 + 0.0253X1 + 0.41X2 + 0.708X5
The R-square value is 0.7161


27.
The computer output is:

Predictor   Coef     Stdev   t-ratio   P
Constant    651.9    345.3   1.89      0.071
Service     13.422   5.125   2.62      0.015
Age         -6.710   6.349   -1.06     0.301
Gender      205.65   90.27   2.28      0.032
Job         -33.45   89.55   -0.37     0.712

Analysis of Variance
Source       DF   SS        MS       F      p
Regression   4    1066830   266708   4.77   0.005
Error        25   1398651   55946
Total        29   2465481

a. Ŷ = 651.9 + 13.422X1 - 6.710X2 + 205.65X3 - 33.45X4
b. R² = 0.433, which is somewhat low for this type of study.
c. H0: β1 = β2 = β3 = β4 = 0
H1: Not all βs = 0
Reject H0 if F > 2.76
F = (1066830/4) / (1398651/25) = 4.77
H0 is rejected. Not all the βs equal zero.
d. Using the 0.05 significance level, reject the hypothesis that the regression coefficient is 0 if t < -2.060 or t > 2.060. Service and gender should remain in the analyses; age and job should be dropped.
e. Following is the computer output using the independent variables service and gender.

Predictor   Coef     Stdev   t-ratio   P
Constant    784.2    316.8   2.48      0.020
Service     9.021    3.106   2.90      0.007
Gender      224.41   87.35   2.57      0.016

Analysis of Variance
Source       DF   SS        MS       F      p
Regression   2    998779    499389   9.19   0.001
Error        27   1466703   54322
Total        29   2465481

A man earns $224 more per month than a woman. The difference between technical and clerical jobs is not significant.

28.
a. The correlation matrix is:

         Food    Income
Income   0.156
Size     0.876   0.098

Income and size are not related. There is no multicollinearity.
b. The regression equation is: Food = 2.84 + 0.00613 Income + 0.425 Size. Another dollar of income leads to an increased food bill of 0.6 cents. Another member of the family adds $425 to the food bill.
c. R² = 18.41/22.29 = 0.826
To conduct the global test: H0: β1 = β2 = 0 versus H1: Not all βs = 0
At the 0.05 significance level, H0 is rejected if F > 3.44.

Source       DF   SS        MS       F       p
Regression   2    18.4095   9.2048   52.14   0.000
Error        22   3.8840    0.1765
Total        24   22.2935

The computed value of F is 52.14, so H0 is rejected. Some of the regression coefficients and R² are not zero.
d. Since the p-values are less than 0.05, there is no need to delete variables.

Predictor   Coef       SE Coef    T       P
Constant    2.8436     0.2618     10.86   0.000
Income      0.006129   0.002250   2.72    0.012
Size        0.42476    0.04222    10.06   0.000

e. The residuals appear normally distributed.
[Histogram of the Residuals (response is Food)]
f. The variance is the same as we move from small values to large. So there is no problem with the homoscedasticity assumption.
[Plot: Residuals Versus the Fitted Values (response is Food)]

29.
a. The regression equation is P/E = 29.9 - 5.32 EPS + 1.45 Yield.
b. Here is part of the software output:

Predictor   Coef     SE Coef   T       P
Constant    29.913   5.767     5.19    0.000
EPS         -5.324   1.634     -3.26   0.005
Yield       1.449    1.798     0.81    0.431

Thus EPS has a significant relationship with P/E while Yield does not.
c. As EPS increases by one, P/E decreases by 5.324, and when Yield increases by one, P/E increases by 1.449.
d. The second stock has a residual of -18.43, signifying that its fitted P/E is around 21.
e. [Histogram of the residuals]
The distribution of residuals may be negatively skewed.
f. [Plot: Residuals Versus the Fitted Values (response is P/E)]
There does not appear to be any problem with homoscedasticity.
g. The correlation matrix is

        P/E     EPS
EPS     0.602
Yield   0.054   0.162

The correlation between the independent variables is 0.162. That is not multicollinearity.

30.
a. The regression equation is
Tip = -0.586 + 0.0985 Bill + 0.598 Diners

Predictor   Coef      SE Coef   T       P       VIF
Constant    -0.5860   0.3952    -1.48   0.150
Bill        0.09852   0.02083   4.73    0.000   3.6
Diners      0.5980    0.2322    2.58    0.016   3.6

Another diner on average adds $.60 to the tip.
b. Analysis of Variance
Source           DF   SS        MS       F       P
Regression       2    112.596   56.298   89.96   0.000
Residual Error   27   16.898    0.626
Total            29   129.494

The F value is quite large and the p-value is extremely small. So we can reject a null hypothesis that all the regression coefficients are zero and conclude that at least one of the independent variables is significant.
c. The null hypothesis is that the coefficient is zero in the individual test. It would be rejected if t is less than -2.05183 or more than 2.05183. In this case both t values (4.73 and 2.58) are larger than the critical value of 2.0518. Thus, neither variable should be removed.
d. The coefficient of determination is 0.87, found by 112.596/129.494.
e. [Histogram of the Residuals (response is Tip)]
The residuals are approximately normally distributed.
f. [Plot: Residuals Versus the Fitted Values (response is Tip)]
The residuals look random.


31.
a. The regression equation is
Sales = 1.02 + 0.0829 Infomercials

Predictor      Coef      SE Coef   T      P
Constant       1.0188    0.3105    3.28   0.006
Infomercials   0.08291   0.01680   4.94   0.000

S = 0.308675   R-sq = 65.2%   R-sq(adj) = 62.5%

Analysis of Variance
Source           DF   SS       MS       F       P
Regression       1    2.3214   2.3214   24.36   0.000
Residual Error   13   1.2386   0.0953
Total            14   3.5600

The global test on F demonstrates there is a substantial connection between sales and the number of commercials.
b. [Histogram of the Residuals (response is Sales)]
The residuals are close to normally distributed.

32.
a. The regression equation is: Fall = 1.72 + 0.762 July

Predictor   Coef     SE Coef   T      P
Constant    1.721    1.123     1.53   0.149
July        0.7618   0.1186    6.42   0.000

Analysis of Variance
Source           DF   SS       MS       F       P
Regression       1    36.677   36.677   41.25   0.000
Residual Error   13   11.559   0.889
Total            14   48.236

The global F test demonstrates there is a substantial connection between attendance at the Fall Festival and the fireworks.
b. The Durbin-Watson statistic is 1.613. There is no problem with autocorrelation.
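The Durbin-Watson statistic quoted in part b is the sum of squared differences between successive residuals divided by the sum of squared residuals. The sketch below shows that formula on made-up residuals; the answer key does not list the actual residuals, so the 1.613 itself is not reproduced here.

```python
def durbin_watson(residuals):
    """d = sum((e_t - e_{t-1})^2) / sum(e_t^2), residuals in time order."""
    numerator = sum(
        (residuals[t] - residuals[t - 1]) ** 2 for t in range(1, len(residuals))
    )
    denominator = sum(e ** 2 for e in residuals)
    return numerator / denominator

# Hypothetical residuals, for illustration only.
alternating = [1.0, -1.0, 1.0, -1.0]   # sign flips every period
constant = [1.0, 1.0, 1.0, 1.0]        # identical successive residuals
print(durbin_watson(alternating))  # 3.0
print(durbin_watson(constant))     # 0.0
```

Values near 2 suggest no autocorrelation, values toward 0 suggest positive autocorrelation, and values toward 4 suggest negative autocorrelation; the formal decision still depends on the tabled bounds for the given n and k.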


33.
a. The regression equation is
Auction Price = -118929 + 1.63 Loan + 2.1 Monthly Payment + 50 Payments Made

Analysis of Variance
Source           DF   SS           MS           F       P
Regression       3    5966725061   1988908354   39.83   0.000
Residual Error   16   798944439    49934027
Total            19   6765669500

The computed F is 39.83. It is much larger than the critical value 3.2388. The p-value is also quite small. Thus the null hypothesis that all the regression coefficients are zero can be rejected. At least one of the multiple regression coefficients is different from zero.
b.
Predictor         Coef      SE Coef   T       P
Constant          -118929   19734     -6.03   0.000
Loan              1.6268    0.1809    8.99    0.000
Monthly Payment   2.06      14.95     0.14    0.892
Payments Made     50.3      134.9     0.37    0.714

The null hypothesis is that the coefficient is zero in the individual test. It would be rejected if t is less than -2.1199 or more than 2.1199. In this case the t value for the loan variable is larger than the critical value. Thus, it should not be removed. However, the monthly payment and payments made variables would likely be removed.
c. The revised regression equation is: Auction Price = -119893 + 1.67 Loan.

34.
The regression equation is
Auction Price = -164718 + 2.02 Loan + 9.3 Monthly Payment + 1773 Payments Made - 0.0167 X1X3

Predictor         Coef        SE Coef    T       P
Constant          -164718     30823      -5.34   0.000
Loan              2.0191      0.2708     7.46    0.000
Monthly Payment   9.26        14.46      0.64    0.532
Payments Made     1772.5      938.9      1.89    0.079
X1X3              -0.016725   0.009036   -1.85   0.084

The t value corresponding to the interaction term is -1.85. This is not significant. So we conclude there is no interaction.


35.
The computer output is as follows:

Predictor   Coef      StDev     T       P
Constant    57.03     39.99     1.43    0.157
Bedrooms    7.118     2.551     2.79    0.006
Size        0.03800   0.01468   2.59    0.011
Pool        -18.321   6.999     -2.62   0.010
Distance    -0.9295   0.7279    -1.28   0.205
Garage      35.810    7.638     4.69    0.000
Baths       23.315    9.025     2.58    0.011

s = 33.21   R-sq = 53.2%   R-sq (adj) = 50.3%

Analysis of Variance
Source       DF    SS       MS      F       P
Regression   6     122676   20446   18.54   0.000
Error        98    108092   1103
Total        104   230768

a. The regression equation is: Price = 57.0 + 7.12 Bedrooms + 0.0380 Size - 18.3 Pool - 0.929 Distance + 35.8 Garage + 23.3 Baths. Each additional bedroom adds about $7000 to the selling price, each additional square foot of space in the house adds $38 to the selling price, each additional mile the home is from the center of the city lowers the selling price about $900, a pool lowers the selling price $18,300, an attached garage adds an additional $35,800 to the selling price, and another bathroom adds $23,300.
b. The R-square value is 0.532. The variation in the six independent variables explains somewhat more than half of the variation in the selling price.
c. The correlation matrix is as follows:

           Price    Bedrooms   Size    Pool    Distance   Garage
Bedrooms   0.467
Size       0.371    0.383
Pool       0.294    0.005      0.201
Distance   -0.347   0.153      0.117   0.139
Garage     0.526    0.234      0.083   0.114   0.359
Baths      0.382    0.329      0.024   0.055   0.195      0.221

The independent variable Garage has the strongest correlation with price. Distance and price are inversely related, as expected. There do not seem to be any strong correlations among the independent variables.
d. To conduct the global test: H0: β1 = β2 = β3 = β4 = β5 = β6 = 0, H1: Some of the net regression coefficients do not equal zero. The null hypothesis is rejected if F > 2.25. The computed value of F is 18.54, so the null hypothesis is rejected. We conclude that not all the net regression coefficients are equal to zero.
e. In reviewing the individual values, the only variable that appears not to be useful is distance. The p-value is greater than 0.05. We can drop this variable and rerun the analysis.


f. The output is as follows:

Predictor   Coef      StDev     T       P
Constant    36.12     36.59     0.99    0.326
Bedrooms    7.169     2.559     2.80    0.006
Size        0.03919   0.01470   2.67    0.009
Pool        -19.110   6.994     -2.73   0.007
Garage      38.847    7.281     5.34    0.000
Baths       24.624    8.995     2.74    0.007

s = 33.32   R-sq = 52.4%   R-sq (adj) = 50.0%

Analysis of Variance
Source       DF    SS       MS      F       P
Regression   5     120877   24175   21.78   0.000
Error        99    109890   1110
Total        104   230768

In this solution observe that the p-value for the global test is less than 0.05 and the p-values for each of the independent variables are also all less than 0.05. The R-square value is 0.524, so it did not change much when the independent variable distance was dropped.
g. The following histogram was developed using the residuals from part f. The normality assumption is reasonable.

Histogram of Residuals
Midpoint   Count
-80        1    *
-60        7    *******
-40        13   *************
-20        20   ********************
  0        20   ********************
 20        26   **************************
 40        10   **********
 60        8    ********

h. The following scatter diagram is based on the residuals in part f. There does not seem to be any pattern to the plotted data.
[Scatter diagram: residuals (RESI1) versus fitted values (FITS1)]


36.

a.

The equation is: Wins = 54.05 + 318.5 Batting + 0.0057 SB 0.200 Errors

11.656 ERA + 0.0702 HR 0.478 Surface

For each additional point that the team batting average increases, the number

of wins goes up by 0.318. Each stolen base adds 0.0057 to average wins. Each error

lowers the average number of wins by 0.200. An increase of one on the ERA

decreases the number of wins by 11.7. Each home run adds 0.0702 wins. Playing on

artificial lowers the number of wins by 0.48.

b. $R^2 = \frac{SSR}{SS\ total} = \frac{1640}{2505} = 0.655$. About 65.5 percent of the variation in the number of wins is accounted for by these six variables.

c. The correlation matrix is as follows:

Correlation with Wins:

Batting    0.496
SB         0.147
Errors    -0.342
ERA       -0.542
HR         0.187
Surface   -0.184

Correlations among the independent variables (lower triangle of the matrix): 0.386, -0.224, -0.027, 0.042, -0.126, -0.058, 0.103, 0.062, 0.027, -0.067, 0.093, 0.118, 0.052, 0.161, -0.096.

Batting and ERA have the strongest correlations with winning. Stolen bases and surface have the weakest correlations with winning. The highest correlation among the independent variables is 0.386, so multicollinearity does not appear to be a problem.

d. To conduct the global test: $H_0: \beta_1 = \beta_2 = \cdots = \beta_6 = 0$; $H_1$: not all $\beta_i$ are 0.

At the 0.05 significance level, $H_0$ is rejected if F > 2.528.

Source          DF   SS       MS       F      P
Regression       6   1639.6   273.27   7.26   0.000
Residual Error  23    865.3    37.62
Total           29   2504.9

Since the p-value is virtually zero, you can reject the null hypothesis that all of the net regression coefficients are zero and conclude that at least one of the variables has a significant relationship to winning.

e. Surface would be deleted first because of its large p-value.

Predictor   Coef       SE Coef    T       P
Constant    54.05      34.17       1.58   0.127
Batting     318.5      111.0       2.87   0.009
SB          0.00569    0.03687     0.15   0.879
Errors      -0.19986   0.08147    -2.45   0.022
ERA         -11.656    2.578      -4.52   0.000
HR          0.07024    0.03815     1.84   0.079
Surface     0.478      3.866       0.12   0.903

The p-values for stolen bases and surface are large. Think about deleting these two variables.

f. The reduced regression equation is:

Wins = 57.88 + 3336.6 Avg - 0.182 Errors - 11.264 ERA
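The R-square in part b and the F statistic in part d both follow from the sums of squares in the ANOVA table. The sketch below recomputes them in Python as a check. The variable names are illustrative, and the critical value is the one quoted in the solution rather than one computed here.

```python
# Sums of squares and degrees of freedom from the ANOVA table in part d.
ss_regression, df_regression = 1639.6, 6
ss_error, df_error = 865.3, 23
ss_total = 2504.9

# Coefficient of determination: share of total variation explained.
r_squared = ss_regression / ss_total

# Mean squares and the global F statistic.
ms_regression = ss_regression / df_regression
ms_error = ss_error / df_error
f_statistic = ms_regression / ms_error

# Critical value quoted in the solution for alpha = 0.05 with (6, 23) df.
F_CRITICAL = 2.528
reject_h0 = f_statistic > F_CRITICAL

print(f"R-square = {r_squared:.3f}")
print(f"F = {f_statistic:.2f}, reject H0: {reject_h0}")
```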


g. The residuals are normally distributed.

[Histogram of RESI1: frequency (0 to 9) versus residual, with residuals ranging from about -10 to 10.]

h. This plot appears to be random and to have a constant variance.

[Scatter diagram of residuals (RESI1, about -10 to 15) versus fitted values (FITS1, 65 to 95).]

37.

a. The regression equation is:

Wage = 14174 + 3325 Ed - 11675 Fe + 448 Ex - 5355 Union

Each year of education adds $3325 to wage. Females make an average of $11675 less than males. Each year of experience adds $448, and union membership lowers wages by $5355.

b. R-Sq = 0.366, meaning about 37% of the variation in wages is accounted for by these variables.


c.

         Wage    Ed      Fe      Ex      Age
Ed       0.408
Fe       0.356   0.082
Ex       0.071   0.440   0.051
Age      0.167   0.253   0.037   0.980
Union    0.052   0.083   0.128   0.236   0.273

Education has the strongest correlation (0.408) with wage. Age and experience are very highly correlated (0.980)! Age is removed to avoid multicollinearity.
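The screening for multicollinearity in part c amounts to scanning the correlations among the independent variables for large values. The sketch below does this in Python. The 0.7 cutoff is an assumed rule-of-thumb threshold, and the correlations are entered as magnitudes exactly as printed above.

```python
# Correlations among the independent variables from part c (as printed).
pair_correlations = {
    ("Ed", "Fe"): 0.082,
    ("Ed", "Ex"): 0.440,
    ("Ed", "Age"): 0.253,
    ("Ed", "Union"): 0.083,
    ("Fe", "Ex"): 0.051,
    ("Fe", "Age"): 0.037,
    ("Fe", "Union"): 0.128,
    ("Ex", "Age"): 0.980,
    ("Ex", "Union"): 0.236,
    ("Age", "Union"): 0.273,
}

THRESHOLD = 0.7  # assumed rule-of-thumb cutoff for "too highly correlated"

# Keep every pair whose absolute correlation exceeds the cutoff.
flagged = [
    (first, second, r)
    for (first, second), r in pair_correlations.items()
    if abs(r) > THRESHOLD
]

for first, second, r in flagged:
    print(f"{first} and {second}: r = {r:.3f}")
```

Only the experience and age pair is flagged, which matches the decision to remove Age.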

d. Analysis of Variance

Source          DF   SS            MS           F       P
Regression       4   10395077453   2598769363   13.69   0.000
Residual Error  95   18038129030    189875042
Total           99   28433206483

The p-value is so small that we reject the null hypothesis that all of the regression coefficients are zero and conclude that at least one of the variables is a predictor of annual wage.

e.

Predictor   Coef      SE Coef   T       P
Constant    14174     8720       1.63   0.107
Ed          3325.1    566.0      5.87   0.000
Fe          -11675    2796      -4.18   0.000
Ex          448.0     119.7      3.74   0.000
Union       -5355     3813      -1.40   0.163

Union membership does not appear to be significant (p-value 0.163).
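Each T value in part e is the coefficient divided by its standard error, and a variable is judged by comparing its p-value with the significance level. The sketch below reproduces the T column in Python. The 0.05 significance level is an assumption, and the minus signs on Fe and Union are taken from the regression equation in part a.

```python
# (coefficient, standard error, reported p-value) from the table in part e.
# The minus signs on Fe and Union are assumed from the regression equation.
table = {
    "Ed": (3325.1, 566.0, 0.000),
    "Fe": (-11675, 2796, 0.000),
    "Ex": (448.0, 119.7, 0.000),
    "Union": (-5355, 3813, 0.163),
}

ALPHA = 0.05  # assumed significance level

# t ratio = coefficient / standard error of the coefficient.
t_ratios = {name: coef / se for name, (coef, se, _) in table.items()}

# Variables whose reported p-value exceeds the significance level.
not_significant = [name for name, (_, _, p) in table.items() if p > ALPHA]

for name, t in t_ratios.items():
    print(f"{name}: t = {t:.2f}")
print("Candidates for removal:", not_significant)
```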

f. The reduced regression equation is: Wage = 12228 + 3160 Ed - 11149 Fe + 396 Ex.
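As an illustration of how the reduced equation is used, the sketch below evaluates it in Python for a hypothetical worker. The inputs are invented for the example, the coding of Fe as 1 for a woman and 0 for a man is an assumption, and the minus sign on Fe is taken from the interpretation that females earn less on average.

```python
def predict_wage(education_years, female, experience_years):
    """Reduced model from part f; female is assumed to be 1 for a woman, 0 for a man."""
    return 12228 + 3160 * education_years - 11149 * female + 396 * experience_years


# Hypothetical worker: 16 years of education and 10 years of experience.
wage_male = predict_wage(16, 0, 10)
wage_female = predict_wage(16, 1, 10)

print(f"Predicted wage, male:   {wage_male}")
print(f"Predicted wage, female: {wage_female}")
print(f"Difference:             {wage_female - wage_male}")
```

Because Fe is a dummy variable, the difference between the two predictions equals its coefficient whatever the values of the other variables.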

g. The residuals are normally distributed.

[Histogram of residuals (RESI1): frequency (0 to 20) versus residual, with residuals ranging from about -40000 to 60000.]

h.

[Scatter diagram of residuals (RESI1, about -40000 to 60000) versus fitted values (FITS1, 10000 to 60000).]

38.

a. The regression equation is: Unemployment = 79.0 + 0.439 (65 & over) - 0.423 (Life Expectancy) - 0.465 (Literacy %).

b. R-Sq = 0.555

c.

             Unemploy   65 & over   Life Exp
65 & over    0.467
Life Exp     0.637      0.640
Literacy     0.684      0.794       0.681

The correlation between the percent of the population over 65 and the literacy rate is 0.794, which is above the 0.7 rule-of-thumb level, indicating multicollinearity. One of those two variables should be dropped.

d. Analysis of Variance

Source          DF   SS        MS       F       P
Regression       3   1316.92   438.97   15.38   0.000
Residual Error  37   1056.16    28.54
Total           40   2373.08

The p-value is so small that we reject the null hypothesis that all of the regression coefficients are zero and conclude that at least one of the variables is a predictor of unemployment.

e.

Predictor    Coef      SE Coef   T       P
Constant     78.98     11.93      6.62   0.000
65 & over    0.4390    0.2785     1.58   0.123
Life Exp     -0.4228   0.1698    -2.49   0.017
Literacy     -0.4647   0.1345    -3.46   0.001

The variable percent of the population over 65 appears to be insignificant because its p-value (0.123) is above 0.10. Hence it should be dropped from the analysis.

f. The regression equation is:

Unemployment = 67.0 - 0.357 Life Expectancy - 0.334 Literacy %
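The ANOVA table in part d for the three-variable model also gives the standard error of estimate, $\sqrt{SSE / (n - (k + 1))}$, and the adjusted R-square, using the same formulas as the earlier exercises in this chapter. The sketch below applies them in Python. It is offered as a check rather than as part of the original solution, and it takes n = 41 from the 40 total degrees of freedom.

```python
import math

# From the ANOVA table in part d: total df = n - 1 = 40, k = 3 predictors.
sse = 1056.16
ss_total = 2373.08
n, k = 41, 3

# Mean square error and the standard error of estimate.
mse = sse / (n - (k + 1))
standard_error = math.sqrt(mse)

# Adjusted R-square penalizes R-square for the number of predictors.
adjusted_r_squared = 1 - mse / (ss_total / (n - 1))

print(f"MSE = {mse:.2f}")
print(f"Standard error of estimate = {standard_error:.3f}")
print(f"Adjusted R-square = {adjusted_r_squared:.3f}")
```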


g. The residuals appear normal.

[Histogram of residuals (RESI1): frequency (0 to 9) versus residual, with residuals ranging from about -10 to 20.]

h. There may be some heteroscedasticity, as the variance of the residuals seems larger for the bigger fitted values.

[Scatter diagram of residuals (RESI1, about -10 to 10) versus fitted values (FITS2, 10 to 30).]

14-28

You might also like

- Effect of persuasive communicationDocument9 pagesEffect of persuasive communicationelvira yuliaNo ratings yet

- Abubakar Muhammad - 1701339011 - Compensation - Case Google Evolving Pay StrategyDocument2 pagesAbubakar Muhammad - 1701339011 - Compensation - Case Google Evolving Pay StrategyAbu AssegafNo ratings yet

- Baron & Kenny PDFDocument10 pagesBaron & Kenny PDFBitter MoonNo ratings yet

- Pengaruh E-Service Quality Terhadap Perceived Value Dan E - (Survei Pada Pelanggan Go-Ride Yang Menggunakan Mobile Application Go-Jek Di Kota Malang)Document10 pagesPengaruh E-Service Quality Terhadap Perceived Value Dan E - (Survei Pada Pelanggan Go-Ride Yang Menggunakan Mobile Application Go-Jek Di Kota Malang)m.knoeri habibNo ratings yet

- Assignment 1Document2 pagesAssignment 1nazareth621No ratings yet

- DSInnovate MSME Report 2022Document82 pagesDSInnovate MSME Report 2022Syaiful Bahri MbgiNo ratings yet

- The Future of Resource-Based TheoryDocument19 pagesThe Future of Resource-Based TheoryKaori BecerraNo ratings yet

- ABBA - Annual Report - 2015 - Revisi PDFDocument271 pagesABBA - Annual Report - 2015 - Revisi PDFmei-257402No ratings yet

- Proposal TQM RahmatikaDocument34 pagesProposal TQM RahmatikaNur Anif Purnamasari0% (1)

- Case Study 3 - Janssen-Cilag Wiki Solution: MBA FT-7601 Managing E-BusinessDocument5 pagesCase Study 3 - Janssen-Cilag Wiki Solution: MBA FT-7601 Managing E-BusinessAayush GoyalNo ratings yet

- City BankDocument57 pagesCity BankRanjan Kumar100% (1)

- Ekonomi Manajerial Dalam Ekonomi Global, Edisi 5 Oleh Dominick SalvatoreDocument24 pagesEkonomi Manajerial Dalam Ekonomi Global, Edisi 5 Oleh Dominick SalvatoreDhani SardonoNo ratings yet

- Capital BudgetingDocument15 pagesCapital BudgetingKiki Sidharta TahaNo ratings yet

- STIKES Widya Dharma Husada TangerangDocument3 pagesSTIKES Widya Dharma Husada TangerangnelfriNo ratings yet

- The Data of Macroeconomics: AcroeconomicsDocument54 pagesThe Data of Macroeconomics: AcroeconomicsAnkur GoelNo ratings yet

- Performance Management GuideDocument306 pagesPerformance Management GuiderakeshrakeshNo ratings yet

- Deskriptif dan Inferensi Statistika serta Teknik SamplingDocument11 pagesDeskriptif dan Inferensi Statistika serta Teknik SamplingFaJar ALmaaNo ratings yet

- Andrew Hayes (Process Macro)Document9 pagesAndrew Hayes (Process Macro)Bilal JavedNo ratings yet

- C09 +chap+16 +Capital+Structure+-+Basic+ConceptsDocument24 pagesC09 +chap+16 +Capital+Structure+-+Basic+Conceptsstanley tsangNo ratings yet

- PENGARUH CSR, CITRA MEREK, DAN REPUTASI TERHADAP KEPUTUSAN PEMBELIAN SABUN LIFEBUOYDocument19 pagesPENGARUH CSR, CITRA MEREK, DAN REPUTASI TERHADAP KEPUTUSAN PEMBELIAN SABUN LIFEBUOYPerdana Ardhi NugrohoNo ratings yet

- Winning Markets Through Strategic PlanningDocument58 pagesWinning Markets Through Strategic PlanningWalid Ben HuseinNo ratings yet

- Chap 8 E-CommerceDocument17 pagesChap 8 E-Commercesinghashish54440% (1)

- 1,425 277 273 100 915 120 1,687 259 234 40 142 25 57 258 31 894 141 Coefficient of Correlat 0.95684Document3 pages1,425 277 273 100 915 120 1,687 259 234 40 142 25 57 258 31 894 141 Coefficient of Correlat 0.95684Aliza IshraNo ratings yet

- Franchise Opportunity: A Presentation byDocument30 pagesFranchise Opportunity: A Presentation bySweta Singh100% (1)

- Le Beau Romantic Event Organizer - Business Canvas ModelDocument3 pagesLe Beau Romantic Event Organizer - Business Canvas ModelMiraNo ratings yet

- Estimate Venture Value Using VC MethodsDocument5 pagesEstimate Venture Value Using VC Methodsultra oilyNo ratings yet

- Mcdonald's PDFDocument18 pagesMcdonald's PDFHemantNo ratings yet

- Ekonomi Informasi - Pengantar 1Document89 pagesEkonomi Informasi - Pengantar 1ekowidodopwkNo ratings yet

- Consumer Behavior StylesDocument14 pagesConsumer Behavior StylesMichelle LimNo ratings yet

- General Business Environment Bibliography Dwitya MM uGMDocument2 pagesGeneral Business Environment Bibliography Dwitya MM uGMDwitya Aribawa100% (1)

- EcoSense Capstone Project Plan - Bangkit 2022Document7 pagesEcoSense Capstone Project Plan - Bangkit 2022443 646RIZKY AKSA KATRESNA100% (1)

- Strategi Bisnis Umkm Selama Pandemi Covid-19Document9 pagesStrategi Bisnis Umkm Selama Pandemi Covid-19nabila zhafirah100% (1)

- Analisa Tarif Trans JogjaDocument7 pagesAnalisa Tarif Trans JogjaRahman Ari MulyajiNo ratings yet

- I. Comment On The Effect of Profmarg On CEO Salary. Ii. Does Market Value Have A Significant Effect? ExplainDocument5 pagesI. Comment On The Effect of Profmarg On CEO Salary. Ii. Does Market Value Have A Significant Effect? ExplainTrang TrầnNo ratings yet

- Statistics & ProbabilityDocument11 pagesStatistics & ProbabilityAnuj Kumar Srivastava100% (1)

- Individual Capital CanvasDocument12 pagesIndividual Capital CanvasAngeline VedyNo ratings yet

- Tutorial CashflowDocument2 pagesTutorial CashflowArman ShahNo ratings yet

- 2a-Konsep Dan Ruang Lingkup Biobisnis (Meet 2) - 2021 07.39.13Document29 pages2a-Konsep Dan Ruang Lingkup Biobisnis (Meet 2) - 2021 07.39.13Luthfia ZulfaNo ratings yet

- Time Series Forecasting Chapter 16Document43 pagesTime Series Forecasting Chapter 16Jeff Ray SanchezNo ratings yet

- Pengaruh Kualitas Produk Dan Kualitas Pelayanan Terhadap Kepuasan Konsumen Handpone SamsungDocument14 pagesPengaruh Kualitas Produk Dan Kualitas Pelayanan Terhadap Kepuasan Konsumen Handpone SamsungDiana LidiaNo ratings yet

- Quiz Pertemuan 2 - OperasiDocument9 pagesQuiz Pertemuan 2 - OperasiCICI SITI BARKAHNo ratings yet

- Communication of Innovations PDFDocument2 pagesCommunication of Innovations PDFJoshNo ratings yet

- The Influence of Product, Price, Place and Promotion On Marketing Performance With Innovation As A Mediating Variable (Case Study On Madura Grocery Store)Document8 pagesThe Influence of Product, Price, Place and Promotion On Marketing Performance With Innovation As A Mediating Variable (Case Study On Madura Grocery Store)International Journal of Innovative Science and Research TechnologyNo ratings yet

- Oligopoly Strategic Decision MakingDocument30 pagesOligopoly Strategic Decision Makingmittalnipun2009No ratings yet

- Contoh Soal Statistik DeskriptifDocument3 pagesContoh Soal Statistik DeskriptifAora NabilaNo ratings yet

- Ratios PrblmsDocument13 pagesRatios PrblmsAbraz KhanNo ratings yet

- Soal Akuntansi ManajemenDocument7 pagesSoal Akuntansi ManajemenInten RosmalinaNo ratings yet

- Product Life Cycle of SAMSUNG & INFINIX MOBILE PHONESDocument7 pagesProduct Life Cycle of SAMSUNG & INFINIX MOBILE PHONESKESS DEAMETANo ratings yet

- Republic of Indonesia Maintaining Stability and Supporting Growth, Mitigating Covid-19 RiskDocument138 pagesRepublic of Indonesia Maintaining Stability and Supporting Growth, Mitigating Covid-19 RiskAryttNo ratings yet

- Multiple Sourcing (Samsung) : Samsung Has Approximately 2,200 Suppliers All Over The World (Samsung, 2020)Document5 pagesMultiple Sourcing (Samsung) : Samsung Has Approximately 2,200 Suppliers All Over The World (Samsung, 2020)Hương LýNo ratings yet

- Beximco Pharma :MKISDocument10 pagesBeximco Pharma :MKISshahin317No ratings yet

- Ventura, Marymickaellar Chapter5 (17,19,20)Document1 pageVentura, Marymickaellar Chapter5 (17,19,20)Mary VenturaNo ratings yet

- Group 5 - Ebook Marketing Theories and ConceptDocument167 pagesGroup 5 - Ebook Marketing Theories and ConceptVcore MusicNo ratings yet

- PT XYZ's Capital Structure and Working Capital Policy Impact on Company PerformanceDocument12 pagesPT XYZ's Capital Structure and Working Capital Policy Impact on Company PerformanceTopalSangPelajarNo ratings yet

- Princ-Ch24-02 Measuring Cost OflivingDocument29 pagesPrinc-Ch24-02 Measuring Cost OflivingnianiaNo ratings yet

- Part 2 PPT Etika Lingkungan (Chapter 5 Velasquez, Manuel G)Document11 pagesPart 2 PPT Etika Lingkungan (Chapter 5 Velasquez, Manuel G)destya windaNo ratings yet

- 11 Quantifying The Impact of CEO Social Media Celebrity Status On Firm Value Novel Measures From Digital Gatekeeping TheoryDocument10 pages11 Quantifying The Impact of CEO Social Media Celebrity Status On Firm Value Novel Measures From Digital Gatekeeping TheoryAhmada FebriyantoNo ratings yet

- Quantitative and Qualitative Analysis Review QuestionsDocument4 pagesQuantitative and Qualitative Analysis Review QuestionsJohnNo ratings yet

- Conjoint AnalysisDocument12 pagesConjoint AnalysisIseng IsengNo ratings yet

- Student Name: Zainab Kashani Student ID: 63075: Date of Submission: Sep 16, 2020Document10 pagesStudent Name: Zainab Kashani Student ID: 63075: Date of Submission: Sep 16, 2020Zainab KashaniNo ratings yet

- PM Project SelectionDocument6 pagesPM Project SelectionGabriella GoodfieldNo ratings yet

- Inferential Statistics and FindingsDocument6 pagesInferential Statistics and FindingsGabriella GoodfieldNo ratings yet

- American Corporation AnalysisDocument6 pagesAmerican Corporation AnalysisGabriella GoodfieldNo ratings yet

- Sarbanes-Oxley Act 2002Document6 pagesSarbanes-Oxley Act 2002Gabriella Goodfield100% (1)

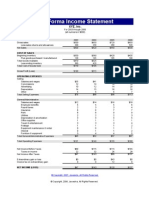

- Pro Forma Write-UpDocument4 pagesPro Forma Write-UpGabriella GoodfieldNo ratings yet

- Week 5 Individual Assignment Excerise AnswersDocument3 pagesWeek 5 Individual Assignment Excerise AnswersGabriella GoodfieldNo ratings yet

- Week 5 Individual Assignment Excerise AnswersDocument3 pagesWeek 5 Individual Assignment Excerise AnswersGabriella GoodfieldNo ratings yet

- Comparative and Ratio AnalysisDocument5 pagesComparative and Ratio AnalysisGabriella GoodfieldNo ratings yet

- BYP17 2 AssignmentDocument5 pagesBYP17 2 AssignmentGabriella GoodfieldNo ratings yet

- Acc561 r5 Financial Statement ReviewDocument4 pagesAcc561 r5 Financial Statement ReviewGabriella GoodfieldNo ratings yet

- Chapter 2 ActivityDocument1 pageChapter 2 ActivityGabriella GoodfieldNo ratings yet

- Solutions To Exercises: External UsersDocument17 pagesSolutions To Exercises: External UsersGabriella GoodfieldNo ratings yet

- 2014 Ford Annual ReportDocument200 pages2014 Ford Annual ReportborokawNo ratings yet

- Bottlenecks ForDocument1 pageBottlenecks ForGabriella GoodfieldNo ratings yet

- Business Structures AssignmentDocument5 pagesBusiness Structures AssignmentGabriella GoodfieldNo ratings yet

- Amway's Process Design and Supply Chain AnalysisDocument7 pagesAmway's Process Design and Supply Chain AnalysisGabriella GoodfieldNo ratings yet

- OPS/571 Week Six Quiz AnswersDocument2 pagesOPS/571 Week Six Quiz AnswersGabriella GoodfieldNo ratings yet

- PM Project SelectionDocument6 pagesPM Project SelectionGabriella GoodfieldNo ratings yet

- OPS/571 Week Six Quiz AnswersDocument2 pagesOPS/571 Week Six Quiz AnswersGabriella GoodfieldNo ratings yet

- Pro-Forma Income StatementDocument3 pagesPro-Forma Income StatementoxfordentrepreneurNo ratings yet

- 2011 Financial Statement For RadianDocument282 pages2011 Financial Statement For RadianGabriella GoodfieldNo ratings yet

- Analyzing Pro Forma Statements FIN/571Document5 pagesAnalyzing Pro Forma Statements FIN/571AriEsor100% (1)

- Business Structures AssignmentDocument5 pagesBusiness Structures AssignmentGabriella GoodfieldNo ratings yet

- PM Project SelectionDocument6 pagesPM Project SelectionGabriella GoodfieldNo ratings yet

- Arthur Andersen Case StudyDocument5 pagesArthur Andersen Case StudyGabriella GoodfieldNo ratings yet

- Gabriella Goodfied Disc Assessment SelfReportDocument28 pagesGabriella Goodfied Disc Assessment SelfReportGabriella GoodfieldNo ratings yet

- Process Improvement Final - Week 6Document8 pagesProcess Improvement Final - Week 6Gabriella GoodfieldNo ratings yet

- Elauf vs Abercrombie Religious Headscarf Hiring CaseDocument4 pagesElauf vs Abercrombie Religious Headscarf Hiring CaseGabriella GoodfieldNo ratings yet

- Week Three Quiz AnswersDocument3 pagesWeek Three Quiz AnswersGabriella GoodfieldNo ratings yet

- CH 9-12Document58 pagesCH 9-12coincopyNo ratings yet

- Demographic Analysis: Mortality: The Life Table Its Construction and ApplicationsDocument40 pagesDemographic Analysis: Mortality: The Life Table Its Construction and Applicationsswatisin93No ratings yet

- Quasi Newton MethodsDocument15 pagesQuasi Newton MethodsPoornima NarayananNo ratings yet

- Linear Non Linear RegressionDocument2 pagesLinear Non Linear Regressionledmabaya23No ratings yet

- Samples and InstructionsDocument479 pagesSamples and InstructionsWunmi OlaNo ratings yet

- Round Tree ManorDocument3 pagesRound Tree ManorMahidhar SurapaneniNo ratings yet

- Edu Spring Ltam QuesDocument245 pagesEdu Spring Ltam QuesEricNo ratings yet

- Forecasting 1Document69 pagesForecasting 1Shreya KanjariyaNo ratings yet

- Regression Analysis-Statistics NotesDocument8 pagesRegression Analysis-Statistics NotesRyan MarvinNo ratings yet

- Chapter 05Document50 pagesChapter 05Putri IndrianaNo ratings yet

- Tabel Time Value of Money PDFDocument10 pagesTabel Time Value of Money PDFTriyuniarti Padji KanaNo ratings yet

- Elementary Statistics - Picturing The World (gnv64) - Part2Document5 pagesElementary Statistics - Picturing The World (gnv64) - Part2Martin Rido0% (1)

- 6 Chapter 18 Queuing SystemDocument40 pages6 Chapter 18 Queuing SystemMir Md Mofachel HossainNo ratings yet

- BITSPILANI QUANTITATIVE METHODSDocument3 pagesBITSPILANI QUANTITATIVE METHODSWilson SainiNo ratings yet

- Zheng 2012Document4 pagesZheng 2012adarsh_arya_1No ratings yet

- ESTIMATION OF PARAMETERSDocument32 pagesESTIMATION OF PARAMETERSJade Berlyn AgcaoiliNo ratings yet

- Tic Tac Toe AI with MinimaxDocument2 pagesTic Tac Toe AI with MinimaxTAKASHI BOOKSNo ratings yet

- The Assumptions of The Classical Linear Regression ModelDocument4 pagesThe Assumptions of The Classical Linear Regression ModelNaeem Ahmed HattarNo ratings yet

- How To Use Minitab 1 BasicsDocument28 pagesHow To Use Minitab 1 Basicsserkan_apayNo ratings yet

- JOSI - Vol. 13 No. 2 Oktober 2014 - Hal 618-640 Integrasi QFD Dan Conjoint Analysis ...Document29 pagesJOSI - Vol. 13 No. 2 Oktober 2014 - Hal 618-640 Integrasi QFD Dan Conjoint Analysis ...samuelNo ratings yet

- Trends in Analytical Chemistry Experimental DesignDocument6 pagesTrends in Analytical Chemistry Experimental DesignkimaripNo ratings yet

- Bilinear Regression Rosen PDFDocument473 pagesBilinear Regression Rosen PDFMayuri ChatterjeeNo ratings yet

- Quantile Regression: 40 Years On: Annual Review of EconomicsDocument24 pagesQuantile Regression: 40 Years On: Annual Review of EconomicsSrinivasaNo ratings yet

- Operations Management: William J. StevensonDocument26 pagesOperations Management: William J. Stevensonaprian100% (4)

- Linear Regression Sample Size CalculationsDocument4 pagesLinear Regression Sample Size CalculationsMusliyana Salma Mohd RadziNo ratings yet

- AE211 Tutorial 11 - SolutionsDocument3 pagesAE211 Tutorial 11 - SolutionsJoyce PamendaNo ratings yet

- A Session 18 2021Document36 pagesA Session 18 2021Hardik PatelNo ratings yet

- Introduction To Game Theory: Peter MorrisDocument4 pagesIntroduction To Game Theory: Peter MorrisPerepePereNo ratings yet

- An Introduction To Objective Bayesian Statistics PDFDocument69 pagesAn Introduction To Objective Bayesian Statistics PDFWaterloo Ferreira da SilvaNo ratings yet

- Behavioral Economics Final Exam InsightsDocument10 pagesBehavioral Economics Final Exam InsightsSvetlana PetrosyanNo ratings yet