
International Journal of Engineering Research & Technology (IJERT)

ISSN: 2278-0181

Vol. 4 Issue 08, August-2015

Analysis of Voice Recognition Algorithms using MATLAB

Atheer Tahseen Hussein

Department of Electrical, Electronic and Systems Engineering

Universiti Kebangsaan Malaysia

Malaysia, 43600 Bangi, Selangor, Malaysia.

Abstract: Voice recognition has become one of the most important tools of the modern generation and is widely used in various fields for various purposes. The past decade has seen dramatic progress in voice recognition technology, to the extent that high-performance systems and algorithms have become accessible. Voice recognition system performance is commonly specified in terms of speed and accuracy; recognition accuracy is the most important and straightforward measure of voice recognition performance. This research reviews several voice recognition algorithms in terms of detection accuracy and processing overhead, identifies the algorithm that gives the best trade-off between processing cost (speed, power) and accuracy, and implements and verifies the chosen algorithm using MATLAB. Ten words were spoken in an isolated way by male and female speakers (four speakers), with MATLAB as the simulation environment. These words were used as reference signals to train the algorithms, and in the evaluation phase all algorithms were subjected to the same test criteria. From the simulation results, the Wiener filter algorithm outperformed the other four algorithms across the performance measures taken as a whole, including power requirement, with moderate algorithmic complexity and good prospects for hardware implementation. The Wiener filter algorithm scored accuracies of 100%, 5%, and 50% for test cases I, II, and III, respectively, with a recognition speed range of 695-867 msec and an estimated power range of 750-885 µW.

Keywords: Voice recognition, spectrum normalization, cross-correlation, autocorrelation, Wiener filter, hidden Markov model, Matlab.

I. INTRODUCTION

Voice recognition is a popular theme today, and the voice recognition programs now available make our lives far better. Voice recognition is a technology that lets people control a system with their spoken language. Rather than typing on a computer keyboard or operating buttons, using language to control a system is more convenient. It may also reduce the cost of industrial production at the same time. It enhances the efficiency of daily life and makes people's lives more diversified [1]. Voice recognition technology is the process of identifying, understanding, and converting voice signals into text or commands. Different types of authentication mechanisms are available today, such as alphanumeric passwords and graphical passwords. Along with these, biometric authentication mechanisms such as fingerprint recognition, voice recognition, and iris recognition systems add more security for data. One of the important areas of research is voice recognition technology. Research in voice recognition involves studies in physiology, psychology, linguistics, computer science, signal processing, and many other fields. Voice recognition technology consists of two different technologies: speaker recognition and speech recognition [2]. Speech recognition is a technique that enables a device to recognize and understand spoken words by digitizing the sound and matching its pattern against stored patterns. Speech is the most natural form of human communication, and speech processing has been one of the most exciting areas of signal processing. Speech recognition technology has made it possible for computers to follow human voice commands and understand human languages [1]. Speech recognition algorithms can in general be divided into speaker dependent and speaker independent. A speaker-dependent system focuses on recognizing the unique voiceprint of individuals. A speaker-independent system involves identifying the word uttered by the speaker [3].

A. Types of speech recognition

Speaker, vocabulary size, and bandwidth are among the different bases on which researchers have classified automatic speech recognition (ASR) systems [4]. By type of utterance, the systems are classified as follows:

Isolated word.

Connected word.

Continuous word.

Spontaneous word.

This research concentrates on isolated word speech recognition. An isolated word speech recognition system requires the user to pause after each utterance. The system is composed of two phases: a training phase and a recognition phase. During the training phase, a training vector is generated for each word spoken by the user. The training vectors extract the spectral features that separate different classes of words. Each training vector can serve as a template for a single word or a word class. These training vectors (patterns) are stored in a database for subsequent use in the recognition phase. During the recognition phase, the user speaks any word for which the system was trained. A test pattern is generated for that word, and the corresponding text string is displayed as the output using a pattern comparison technique [5].
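The two-phase scheme described above can be sketched as follows. This is a minimal illustration, not the paper's MATLAB implementation: it is written in Python, the feature is assumed to be a magnitude spectrum scaled to [0, 1], the comparison is assumed to be a mean squared difference, and the names `magnitude_spectrum`, `train`, and `recognize` are hypothetical. Two synthetic tones stand in for recorded words.

```python
import cmath
import math


def magnitude_spectrum(samples):
    """Return the DFT magnitudes of `samples`, scaled linearly to [0, 1]."""
    n = len(samples)
    mags = []
    for k in range(n // 2 + 1):  # non-negative frequencies only
        acc = sum(samples[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        mags.append(abs(acc))
    peak = max(mags)
    return [m / peak for m in mags] if peak > 0 else mags


def mean_squared_difference(a, b):
    """Mean squared difference between two equal-length feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def train(recordings):
    """Training phase: store one template (feature vector) per word."""
    return {word: magnitude_spectrum(samples) for word, samples in recordings.items()}


def recognize(templates, samples):
    """Recognition phase: return the word whose template is closest to the test pattern."""
    test_pattern = magnitude_spectrum(samples)
    return min(templates, key=lambda w: mean_squared_difference(templates[w], test_pattern))


# Synthetic stand-ins for two recorded words (assumption: pure tones, 32 samples).
n = 32
low_tone = [math.sin(2 * math.pi * 2 * t / n) for t in range(n)]
high_tone = [math.sin(2 * math.pi * 6 * t / n) for t in range(n)]
templates = train({"low": low_tone, "high": high_tone})

# A quieter repetition of the second "word" is still matched to its template,
# because scaling the signal does not change the normalized spectrum.
quiet_high = [0.5 * x for x in high_tone]
result = recognize(templates, quiet_high)
```

Keeping the templates in a dictionary keyed by word mirrors the database of training patterns; a real system would replace the synthetic tones with recorded utterances and would likely need a more robust feature.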

IJERTV4IS080082 www.ijert.org 273

(This work is licensed under a Creative Commons Attribution 4.0 International License.)


II. LITERATURE REVIEW

Many algorithms have been proposed to implement speech recognition, among them autocorrelation, cross-correlation, spectrum normalization, the Wiener filter, and the hidden Markov model. This research investigates several approaches for implementing a speech recognition system for isolated words “Digit”, using the built-in functionality in Matlab and related products to develop the algorithms associated with each approach. These algorithms are as follows: spectrum normalization, cross-correlation, autocorrelation, FIR Wiener filter, and hidden Markov model. This section focuses on the theoretical framework for these algorithms.

A. Spectrum normalization (SN)

Comparing spectra that are on different measurement scales makes it difficult to assess the differences between speech signals. Normalization brings the spectra onto a common scale; in other words, it reduces the error when comparing them. Before analyzing the spectrum differences for different words, the first step is to normalize the spectrum by linear normalization, after which the spectrum values lie in the interval [0, 1]. Normalization changes the value range of the spectrum but does not change its shape or the information it carries. After normalizing the absolute values of the FFT, the next step in programming the speech recognition is to observe the spectra of the recorded speech signals. The algorithms are then compared based on the differences between the test (target) signal and the training (reference) signals [6].

B. Cross-correlation (CC)

Assuming that the recorded speech signals for the same word are the same, the spectra of two recorded speech signals are also similar. When the cross-correlation of two similar spectra is computed and plotted, it should be symmetrical, according to the definition of the cross-correlation. After calculating the cross-correlation of two recorded frequency spectra, find the position of the maximum value of the cross-correlation and subtract the values to the left of that position from the values to the right of it. Then take the absolute value of this difference and find its mean square error. The symmetry of the cross-correlation of two signals indicates how well they match: the more symmetric the cross-correlation, the smaller the mean square error. By comparing the mean square errors obtained for the trained words against the target word, the system selects the training word that best matches the test signal, based on the minimum mean square error of the cross-correlation differences at different lags [7].

C. Autocorrelation (AC)

Autocorrelation can be treated as computing the cross-correlation of a signal with itself instead of with a different signal. The autocorrelation algorithm aims to measure how strongly the signal is self-correlated. Thus, the autocorrelations of the training signals are compared to find the minimum difference between autocorrelations [8].

D. Wiener filter (WF)

An FIR Wiener filter is used to estimate the desired signal from the observation process. The desired (test) and training signals are assumed to be correlated and jointly wide-sense stationary. The purpose of the Wiener filter is to choose a suitable filter order and to find the filter coefficients with which the system obtains the best estimate; in other words, with proper coefficients the system minimizes the mean-square error. The recorded signals are treated as wide-sense stationary processes. The reference (training) signals can then be used as input signals, and the recorded test signals as desired signals [9].

E. Hidden Markov model (HMM)

A hidden Markov model (HMM) is a triple (π, A, B), where each probability in the state transition matrix and the emission matrix is time independent; that is, the matrices do not change in time as the system evolves. In practice, this is one of the most unrealistic assumptions that Markov models make about real processes. Nevertheless, HMMs, described by a vector (π) and two matrices (A, B), are of great value in describing real systems: although the model is usually an approximation, the results are amenable to analysis. The commonly solved problems are [10]:

Evaluation: matching the most likely system to a sequence of observations, solved using the forward algorithm.

Decoding: determining the hidden sequence most likely to have generated a sequence of observations, solved using the Viterbi algorithm.

Learning: determining the model parameters that are most likely to have generated a sequence of observations, solved using the forward-backward algorithm.

F. Related works

Isolated speech recognition by mel-scale frequency cepstral coefficients (MFCCs) and dynamic time warping (DTW) has been proposed. In that study, several features were extracted from the speech signal of spoken words. DTW is used to measure the similarity between two sequences that may vary in time or speed. The experimental results were analyzed using Matlab and were reported to be efficient. The authors describe DTW as the best nonlinear feature matching technique in speech identification, with minimal error rates and fast computing speed, and expect it to be of utmost importance for speech recognition in voice-based automatic teller machines [11].

The data of one successful system was based on an HMM pattern recognition procedure. The successful system used three states and one Gaussian mixture. This system had a success rate of 87.5% with an average running time of 7.9 s, compared with DTW, which had an average running time of 22.1 s and a success rate of 25%; thus, the hidden Markov system is evidently the better system [12].

A new approach was developed for real-time isolated-word speech recognition systems for human-computer interaction. The system was speaker dependent. The main motive behind its development was to recognize a list of words uttered by a speaker through a microphone. The features used were MFCCs, which allowed good discrimination of the speech signal. The dynamic programming algorithm used in the system measured the similarity between the stored templates and the test templates and specified the optimal distance. The recognition accuracy obtained was 90.1%. A simple list of four words, comprising four spelled-out numbers (one, two, three, and four), was made and stored under certain command names. When a particular word was spoken into the microphone, the system recognized the word and displayed the command name under which it was stored. With a few modifications, this system could be used in many areas for specific functions (e.g., to control a robot using simple commands) and in many applications [13].

Speech recognition is the process of converting an acoustic waveform into text that is similar to the information being conveyed by the speaker. One reported study discussed the implementation of an automatic speech recognition (ASR) system for isolated and connected words of the Hindi language. The hidden Markov model toolkit (HTK), a statistical approach based on HMMs, was used to develop the system. Initially, the system was trained for 100 distinct Hindi words. The study presents the detailed architecture of the ASR system, which was developed using various HTK library modules and tools, and describes how the HTK tool works in the various phases of the ASR system. The reported recognition results show overall accuracies of 95% and 90% for isolated and connected words, respectively [14].

A speech recognition system can be easily disturbed by the way of speaking and by noise. When the reference signal is a word that is identical to the target signal, the speech recognition algorithm based on an FIR Wiener filter may be applied, in which case the reference signal for modeling the target will have fewer errors. When reference and target signals are recorded by one person, in the same accent, both systems would work well for distinguishing different words, regardless of the identity of the person. Meanwhile, when the target and reference signals are recorded by different persons, both systems would perform unsatisfactorily. Therefore, to improve recognition performance, the noise immunity of the system must be improved and the distinctive features of speech uttered by various people must be characterized [1].

Speech recognition is a technology that enables people to interact with machines. A speech recognition system design capable of 100% accuracy has not been achieved. One speaker-dependent speech recognition system capable of recognizing isolated spoken words with high accuracy has been reported; the system was verified using Matlab, achieving an overall accuracy above 90%. This work emphasized the memory efficiency offered by speech detection for separating words from silence. As supported by experimental results, improved system performance was achieved using DTW while maintaining consideration for the overall design process [15].

The performance of the system was highly dependent on the speech detection block. Separating the speech signals from the silence periods improved the templates generated by the MFCC processors for the uttered words, and hence the results. In addition, system performance was improved using multiple templates per word. Endpoint detection, framing, normalization, MFCCs, and the DTW algorithm have been used to process speech samples. The speech samples were spoken English numbers (zero to nine), spoken in an isolated way by different male and female speakers. In a speech recognition algorithm for English numbers, MFCC vectors provided an estimate of the vocal tract filter, while DTW was used to detect the nearest recorded voice under an appropriate constraint. The system was then applied to recognize the isolated spoken English numbers (i.e., “one,” “two,” “three,” “four,” “five,” “six,” “seven,” “eight,” and “nine”). The algorithm was tested on recorded speech samples. The results indicated that the algorithm achieved an accuracy of almost 90.5% in recognizing the English numbers among the recorded words [16].

Speech recognition using HMM, which works well for n users, is widely used in pattern recognition applications. A recorded signal (test data) was compared with its corresponding original signal (trained data) using HMM algorithms simulated in Matlab. Examining improved techniques for modeling audio signals can add depth and help realize improved speech recognition. For the training set, 100% recognition was achieved. The recognizer performed well overall and worked efficiently in a noisy environment. However, the performance of the system could be improved if additional training samples were available; the system could then be compared with neural network algorithms. Disturbances exist in a real environment and might influence the performance of the speech recognizer. The speech recognizer was realized only in Matlab. It achieved good results (75-100%) under a simulation in a car with the engine running: the recognition rate varied from 100% in a noise-free environment to 75% in a noisy environment. A comparison of the recognition rates under white Gaussian noise and under noise recorded in a car indicates that the recognizer is more robust against the car noise, because the noise in the car mainly affects low frequencies (0-2000 Hz), whereas white Gaussian noise affects all frequencies [17].

The Wiener filter is an adaptive approach in speech enhancement that depends on adapting the filter transfer function from sample to sample based on the speech signal statistics (mean and variance). To accommodate the varying nature of the speech signal, the adaptive Wiener filter is implemented in the time domain rather than in the frequency domain. Results show that this approach provides better SNR improvement than the conventional Wiener filter approach or spectral subtraction in the frequency domain. Furthermore, this approach is superior to the spectral subtraction approach in treating musical noise and can avoid the drawbacks of the Wiener filter in the frequency domain (Abd El-Fattah et al. [18]).

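The evaluation problem listed in Section II-E can be illustrated with the forward algorithm for a discrete HMM (π, A, B). The sketch below is in Python rather than MATLAB, and the two-state model and its probabilities are made-up values chosen only for the demonstration; in a word recognizer, one such model per word would be scored and the most likely one selected.

```python
from itertools import product


def forward_probability(pi, A, B, observations):
    """Probability of `observations` under the discrete HMM (pi, A, B).

    pi[s]   : probability of starting in state s
    A[p][s] : probability of moving from state p to state s
    B[s][o] : probability of emitting symbol o in state s
    """
    n_states = len(pi)
    # Initialization: start in each state and emit the first symbol.
    alpha = [pi[s] * B[s][observations[0]] for s in range(n_states)]
    # Induction: propagate through the transitions, then emit the next symbol.
    for obs in observations[1:]:
        alpha = [
            sum(alpha[p] * A[p][s] for p in range(n_states)) * B[s][obs]
            for s in range(n_states)
        ]
    # Termination: sum over the possible final states.
    return sum(alpha)


# Hypothetical two-state model with two observation symbols (0 and 1).
pi = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.9, 0.1], [0.2, 0.8]]

likelihood = forward_probability(pi, A, B, [0, 1, 0])

# Sanity check: the probabilities of all length-3 sequences sum to one.
total = sum(forward_probability(pi, A, B, list(seq)) for seq in product([0, 1], repeat=3))
```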

III. METHODOLOGY

In principle, a speech recognition system performs two operations: modeling the signal under investigation, and matching patterns using the features extracted in the first step. Signal modeling aims to find a set of parameters from the conversion process of the speech signal. Pattern matching is the task of finding, in the memory of previously processed parameter sets, the set whose properties match, to a certain extent, those of the parameter set obtained from the target speech signal.

The five speech recognition algorithms were trained using pre-recorded voice signals of words in ‘.wav’ format. In this research, 10 words were selected as reference signals and recorded by male and female voices, giving 20 reference signals. Table 1 lists these signals. Evaluating speech recognition algorithms requires subjecting them to the same test criteria, so a performance criterion was set to obtain the recognition results. The performance index consists of three components. The speed of recognition was calculated in milliseconds. The accuracy rate was calculated as a percentage (%). Finally, the consumed power was estimated hypothetically, as if the algorithm were implemented in hardware, and calculated as “OC/µWatt”.

Table 1. Pre-recorded words

No.   Word        File name (‘.wav’ format)
                  Female voice   Male voice
1     Mariam      1_1            1_11
2     Sunday      1_2            1_12
3     Monday      1_3            1_13
4     Tuesday     1_4            1_14
5     School      1_5            1_15
6     Holiday     1_6            1_16
7     Football    1_7            1_17
8     Happy       1_8            1_18
9     Beautiful   1_9            1_19
10    Crazy       1_10           1_20

IV. RESULT AND DISCUSSION

The performance evaluation starts by running the algorithms in Matlab. Running the algorithm code displays a graphical user interface (GUI) panel used to train, test, and display the tested voice signal and its short-time Fourier transform (STFT) plots. From this panel, the operator can choose one test signal for all five algorithms and press the performance comparison button to display the results (power in µW, speed in msec, and accuracy as a percentage) together with the plots, as shown in Figure 1.

Fig 1. GUI panel

The simulation results on the testing data sets were used to evaluate the performance of the developed speech recognition algorithms. All five algorithms were subjected to the same training and testing data sets in each case, and were tested with three different case scenarios:

The first test uses a data set that consists of isolated words spoken by male and female voices and recorded; this first set of words is listed in Table 2.

Table 2. Isolated words used in the evaluation of the speech recognition algorithms

No.   Isolated word
1     Mariam
2     Sunday
3     Monday
4     Tuesday
5     School
6     Holiday
7     Football
8     Happy
9     Beautiful
10    Crazy

The second test uses the same 10 words as in the previous case; this set of 20 “.wav” files is used for training. The same 10 words were uttered again and recorded by different speakers (female and male). The set recorded by the different speakers was used as a test set to check the ability of the five algorithms to recognize the correct uttered word.

The third test uses the same 10 words as in the first test. The 20 recorded files are used as training data. Two words, namely “beautiful” and “mariam”, were selected for the test data. Each of these two words was recorded 10 times by the same speaker (male voice), which resulted in 20 recorded files that were used as test data to determine the recognition rate, as a percentage, of all five algorithms for these two specific words.
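The recognition rate used in the three test cases can be computed as the share of test files recognized correctly. The Python sketch below follows the layout of the third test (two words, ten recordings each); the recognizer outputs in it are invented purely to show the arithmetic and are not results from the paper, and the function name is hypothetical.

```python
def recognition_rate(expected_words, recognized_words):
    """Percentage of test files whose recognized word equals the expected word."""
    if not expected_words or len(expected_words) != len(recognized_words):
        raise ValueError("need two non-empty lists of equal length")
    hits = sum(1 for e, r in zip(expected_words, recognized_words) if e == r)
    return 100.0 * hits / len(expected_words)


# Third-test layout: 10 recordings of each of two words, 20 test files in total.
expected = ["beautiful"] * 10 + ["mariam"] * 10

# Invented recognizer outputs: 7 of 10 and 5 of 10 recognized correctly.
recognized = (
    ["beautiful"] * 7 + ["crazy"] * 3
    + ["mariam"] * 5 + ["monday"] * 5
)

rate = recognition_rate(expected, recognized)  # 12 correct out of 20 files
```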


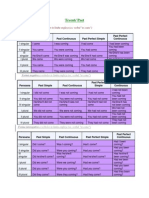

The results of the tests are presented in Table 3, Table 4, Figure 2, and Figure 3. The measures of performance for the five algorithms were determined from the first test (case I). Table 3 shows that the estimated required power was minimum for the SN algorithm and maximum for the HMM algorithm, which can be attributed to the computational requirements for pre-processing, feature extraction, and classification. HMM is highly power demanding, whereas SN usually requires fewer computer operations. The basic method used in DSP and FPGA applications to estimate processing power, and to make a rough estimate of the required operational power, is based on the operation count, that is, the count of floating point operations required to perform the task, usually in the form of vector and matrix multiplications and inversions.

Fig 2. Performance comparison (power, speed, and accuracy) for test case I.

Table 4. Recognition rate results of the five algorithms based on three test cases (I, II, and III).

No.   Algorithm   Recognition rate (%)
                  Case I   Case II   Case III
1     SN          100      5         20
2     CC          100      5         5
3     AC          100      10        0
4     WF          100      5         50
5     HMM         100      0         10

During simulations, the same approach, based on floating point operations, was adopted for the calculation of power. Floating point operation counting used to be a function in older versions of Matlab, but it was removed when the Matlab data handling structure was changed. Therefore, three different parameters were recorded while each algorithm ran to form an estimate of the required processing and operational power: the number of arrays created by each algorithm, the amount of CPU usage by each algorithm, and the amount of memory access by each algorithm. Based on these three measurements, a relative measure of required power for each algorithm was formulated. Thus, the power measurements should be considered as relative power requirements for each algorithm.

The speed measurements are based on Matlab runtime and CPU time measurements. They represent the time required for each algorithm to execute, from pressing the start button until the elapsed time value is shown in the command window. Accuracy is calculated by a simple comparison of the initial test file and the file recognized. Thus, the result is either 100 for correct recognition or 0 for unsuccessful or wrong recognition of the initial test file.
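The speed and accuracy measurements described above can be sketched as follows. The paper relies on Matlab runtime and CPU time; this Python illustration assumes wall-clock timing with `time.perf_counter` instead, and `timed_recognition` and the dummy recognizer are hypothetical names introduced only for the example.

```python
import time


def timed_recognition(recognizer, test_signal, expected_word):
    """Run one recognition and return (word, elapsed milliseconds, accuracy).

    Accuracy follows the all-or-nothing rule in the text: 100 when the
    recognized word equals the expected word, otherwise 0.
    """
    start = time.perf_counter()
    word = recognizer(test_signal)
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    accuracy = 100 if word == expected_word else 0
    return word, elapsed_ms, accuracy


def dummy_recognizer(signal):
    """Stand-in recognizer: labels a signal by the sign of its mean."""
    return "mariam" if sum(signal) / len(signal) >= 0 else "beautiful"


word, elapsed_ms, accuracy = timed_recognition(dummy_recognizer, [0.2, 0.4, -0.1], "mariam")
```

Timing only the recognizer call keeps file loading and plotting out of the measurement; whether the paper's figures include such overheads is not stated.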

Fig 3. Recognition rate results of the five algorithms based on three test cases (I, II, and III).

Table 3. Performance comparison (power, speed, and accuracy) for test case I.

No.   Algorithm   Speed (msec)    Power (µW)      Accuracy (%)
1     SN          386-516         387-662         100
2     CC          765-985         776-1103        100
3     AC          492-556         552-740         100
4     WF          695-867         750-885         100
5     HMM         10255-11085     10425-11244     100

V. CONCLUSION

This research study presents the challenges in implementing a speech recognition system using various techniques, namely spectrum normalization, cross-correlation, autocorrelation, Wiener filter, and hidden Markov model, to develop algorithms for isolated word recognition only, in a relatively quiet environment. From the simulation results, the Wiener filter algorithm produced the best trade-off over the other four techniques in terms of all measures of performance taken as a whole, that is, speed, accuracy, and power. The Wiener filter algorithm scored accuracies of 100%, 5%, and 50% for test cases I, II, and III, respectively, with a recognition speed range of 695-867 msec and an estimated power range of 750-885 µW. The performance of a speech recognition algorithm can be easily disturbed by noise and by the pronunciation of the word. Improving the developed algorithms, particularly the hidden Markov model algorithm, requires solutions to many factors, for example noise immunity, robustness, the ability to handle varying speaking accents and styles, and human-computer interaction (HCI) design issues that are suitable for speech-based interaction.

ACKNOWLEDGMENT

The author would like to thank the Ministry of Electricity of Iraq (MOE) for their continued support and encouragement for the completion of this research, and the Ministry of Higher Education and Scientific Research (MOHER) for their support and for easing many of the difficulties faced during the study period.

REFERENCES

[1] Deepak, M. Vikas, "Speech Recognition using FIR Wiener Filter," International Journal of Application or Innovation in Engineering & Management (IJAIEM), pp. 204-20, 2013.

[2] Paul, Teenu Therese, and Shiju George, "Voice recognition based secure android model for inputting smear test result," International Journal of Engineering Sciences & Emerging Technologies, ISSN: 2231-6604, 2013.

[3] Mohan, Bhadragiri Jagan, and N. R. Babu, "Speech recognition using MFCC and DTW," Advances in Electrical Engineering (ICAEE), 2014 International Conference on, IEEE, 2014.

[4] Ghai, Wiqas, and Navdeep Singh, "Literature review on automatic speech recognition," International Journal of Computer Applications 41.8, pp. 42-50, 2012.

[5] Amin, Talal Bin, and Iftekhar Mahmood, "Speech recognition using dynamic time warping," Advances in Space Technologies, 2008. ICAST 2008. 2nd International Conference on, IEEE, 2008.

[6] Buera, Luis, et al., "Cepstral vector normalization based on stereo data for robust speech recognition," Audio, Speech, and Language Processing, IEEE Transactions on 15.3, pp. 1098-1113, 2007.

[7] Chen, Jingdong, Jacob Benesty, and Yiteng Huang, "Robust time delay estimation exploiting redundancy among multiple microphones," Speech and Audio Processing, IEEE Transactions on 11.6, pp. 549-557, 2003.

[8] Varshney, N., and Singh, S., "Embedded Speech Recognition System," International Journal of Advanced Research in Electrical, Electronics and Instrumentation Engineering, pp. 9218-9227, 2014.

[9] Patel, V. D., "Voice recognition system in noisy environment," California State University, Sacramento, 2011.

[10] Ye, J., "Speech recognition using time domain features from phase space reconstructions," Marquette University, Milwaukee, Wisconsin, 2004.

[11] Dhingra, Shivanker Dev, Geeta Nijhawan, and Poonam Pandit, "Isolated speech recognition using MFCC and DTW," International Journal of Advanced Research in Electrical, Electronics and Instrumentation Engineering 2.8, pp. 4085-4092, 2013.

[12] Price, Jamel, and Sophomore Student, "Design of an Automatic Speech Recognition System Using MATLAB," Dept. of Engineering and Aviation Sciences, University of Maryland Eastern Shore, Princess Anne, 2005.

[13] Makhijani, Rajesh, and Ravindra Gupta, "Isolated word speech recognition system using dynamic time warping," International Journal of Engineering Sciences & Emerging Technologies (IJESET) 6.3, pp. 352-367, 2013.

[14] Choudhary, Annu, Mr RS Chauhan, and Mr Gautam Gupta, "Automatic Speech Recognition System for Isolated & Connected Words of Hindi Language By Using Hidden Markov Model Toolkit (HTK)," 2013.

[15] Amin, Talal Bin, and Iftekhar Mahmood, "Speech recognition using dynamic time warping," Advances in Space Technologies, 2008. ICAST 2008. 2nd International Conference on, IEEE, 2008.

[16] Limkar, Maruti, Rama Rao, and Vidya Sagvekar, "Isolated Digit Recognition Using MFCC and DTW," International Journal on Advanced Electrical and Electronics Engineering (IJAEEE), ISSN (Print) 2278-8948, 2012.

[17] Srinivasan, A., "Speech recognition using Hidden Markov model," Applied Mathematical Sciences 5.79, pp. 3943-3948, 2011.

[18] Abd El-Fattah, M. A., et al., "Speech enhancement using an adaptive Wiener filtering approach," Progress in Electromagnetics Research M 4, pp. 167-184, 2008.


- Hindi Speech Important Recognition System Using HTKDocument12 pagesHindi Speech Important Recognition System Using HTKhhakim32No ratings yet

- Is 2016 7737405Document6 pagesIs 2016 7737405Nicolas Ricardo Mercado MaldonadoNo ratings yet

- Tecnology On VRDocument2 pagesTecnology On VRNikhil GuptaNo ratings yet

- A Review On Feature Extraction and Noise Reduction TechniqueDocument5 pagesA Review On Feature Extraction and Noise Reduction TechniqueVikas KumarNo ratings yet

- 2004.04099Document23 pages2004.04099202002025.jayeshsvmNo ratings yet

- Speech Recognition Using Matrix Comparison: Vishnupriya GuptaDocument3 pagesSpeech Recognition Using Matrix Comparison: Vishnupriya GuptaInternational Organization of Scientific Research (IOSR)No ratings yet

- Progress - Report - of - Intership MD Shams AlamDocument4 pagesProgress - Report - of - Intership MD Shams Alamm s AlamNo ratings yet

- Ai SpeechDocument17 pagesAi SpeechJishnu RajendranNo ratings yet

- 25 The Comprehensive Analysis Speech Recognition SystemDocument5 pages25 The Comprehensive Analysis Speech Recognition SystemIbrahim LukmanNo ratings yet

- Speaker Recognition Using MATLABDocument75 pagesSpeaker Recognition Using MATLABPravin Gareta95% (64)

- Speech Recognition ReportDocument20 pagesSpeech Recognition ReportRamesh k100% (1)

- A Review On Speech Recognition Methods: Ram Paul Rajender Kr. Beniwal Rinku Kumar Rohit SainiDocument7 pagesA Review On Speech Recognition Methods: Ram Paul Rajender Kr. Beniwal Rinku Kumar Rohit SainiRahul SharmaNo ratings yet

- Development of A Novel Voice Verification System Using WaveletsDocument4 pagesDevelopment of A Novel Voice Verification System Using WaveletsKushNo ratings yet

- Speech Recognition System - A Review: April 2016Document10 pagesSpeech Recognition System - A Review: April 2016Joyce JoyceNo ratings yet

- MATLAB Speech Recognition Using Cross CorrelationDocument13 pagesMATLAB Speech Recognition Using Cross CorrelationCharitha ReddyNo ratings yet

- AJSAT Vol.5 No.2 July Dece 2016 pp.23 30Document8 pagesAJSAT Vol.5 No.2 July Dece 2016 pp.23 30Shahriyar Chowdhury ShawonNo ratings yet

- Design of Voice Command Access Using MatlabDocument5 pagesDesign of Voice Command Access Using MatlabIJRASETPublicationsNo ratings yet

- Automatic Speech Recognition For Resource-Constrained Embedded SystemsDocument2 pagesAutomatic Speech Recognition For Resource-Constrained Embedded SystemsmstevanNo ratings yet

- Speech Recognition Using Deep Learning TechniquesDocument5 pagesSpeech Recognition Using Deep Learning TechniquesIJRASETPublicationsNo ratings yet

- JAWS (Screen Reader)Document18 pagesJAWS (Screen Reader)yihoNo ratings yet

- Ibrahim 2020Document5 pagesIbrahim 202020261A3232 LAKKIREDDY RUTHWIK REDDYNo ratings yet

- ++++tutorial Text Independent Speaker VerificationDocument22 pages++++tutorial Text Independent Speaker VerificationCharles Di LeoNo ratings yet

- Design of A Voice Recognition System Using Artificial IntelligenceDocument7 pagesDesign of A Voice Recognition System Using Artificial IntelligenceADEMOLA KINGSLEYNo ratings yet

- Performance Analysis of Combined Wavelet Transform and Artificial Neural Network For Isolated Marathi Digit RecognitionDocument7 pagesPerformance Analysis of Combined Wavelet Transform and Artificial Neural Network For Isolated Marathi Digit Recognitionatul narkhedeNo ratings yet

- Speech To TextDocument6 pagesSpeech To TextTint Swe OoNo ratings yet

- Gender Recognition Using Voice Signal AnalysisDocument5 pagesGender Recognition Using Voice Signal AnalysisInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Speech Recognition: A Guide to How It Works & Its FutureDocument20 pagesSpeech Recognition: A Guide to How It Works & Its FutureRamesh kNo ratings yet

- Este Es 1 Make 01 00031 PDFDocument17 pagesEste Es 1 Make 01 00031 PDFlgaleanocNo ratings yet

- Vol7no3 331-336 PDFDocument6 pagesVol7no3 331-336 PDFNghị Hoàng MaiNo ratings yet

- Audio Signal Processing Audio Signal ProcessingDocument31 pagesAudio Signal Processing Audio Signal ProcessingFah RukhNo ratings yet

- Efficient speech recognition using correlation methodDocument9 pagesEfficient speech recognition using correlation methodNavbruce LeeNo ratings yet

- Ijcet: International Journal of Computer Engineering & Technology (Ijcet)Document6 pagesIjcet: International Journal of Computer Engineering & Technology (Ijcet)IAEME PublicationNo ratings yet

- Speech Recognition Full ReportDocument11 pagesSpeech Recognition Full ReportpallavtyagiNo ratings yet

- Voice Recognition System Speech To TextDocument5 pagesVoice Recognition System Speech To TextIbrahim LukmanNo ratings yet

- Text-dependent Speaker Recognition System Based on Speaking Frequency CharacteristicsDocument15 pagesText-dependent Speaker Recognition System Based on Speaking Frequency CharacteristicsCalvin ElijahNo ratings yet

- Spoken Language Understanding: Systems for Extracting Semantic Information from SpeechFrom EverandSpoken Language Understanding: Systems for Extracting Semantic Information from SpeechNo ratings yet

- Robust Automatic Speech Recognition: A Bridge to Practical ApplicationsFrom EverandRobust Automatic Speech Recognition: A Bridge to Practical ApplicationsNo ratings yet

- Author ContributionsDocument6 pagesAuthor ContributionsBipasha MalakarNo ratings yet

- Energies 11 02120 PDFDocument24 pagesEnergies 11 02120 PDFHouda BOUCHAREBNo ratings yet

- Energies 11 01820Document36 pagesEnergies 11 01820Houda BOUCHAREBNo ratings yet

- Hindawi Pso PDFDocument14 pagesHindawi Pso PDFHouda BOUCHAREBNo ratings yet

- Battery Management System BMS For Lithium Ion Batterie PDFDocument98 pagesBattery Management System BMS For Lithium Ion Batterie PDFHouda BOUCHAREBNo ratings yet

- What Are Some of The Best Websites To Learn Competitive Coding - QuoraDocument4 pagesWhat Are Some of The Best Websites To Learn Competitive Coding - QuoragokulNo ratings yet

- Marker VerbsDocument1 pageMarker VerbsManal SalamehNo ratings yet

- Curriculum Vitae: Personal Information Ridwan Romadoni, STDocument3 pagesCurriculum Vitae: Personal Information Ridwan Romadoni, STridwan romadoniNo ratings yet

- Scripting For The Radio Documentary: Why Do We Script?Document12 pagesScripting For The Radio Documentary: Why Do We Script?hai longNo ratings yet

- Multimedia Making It Work Chapter2 - TextDocument33 pagesMultimedia Making It Work Chapter2 - TextalitahirnsnNo ratings yet

- Nouns Ending inDocument4 pagesNouns Ending inZtrick 1234No ratings yet

- Regular and irregular English verbsDocument13 pagesRegular and irregular English verbsHenry Patricio PinguilNo ratings yet

- GCC ManualDocument662 pagesGCC ManualpaschapurNo ratings yet

- EFL Teacher Trainees Guide to Testing Writing SkillsDocument26 pagesEFL Teacher Trainees Guide to Testing Writing SkillsGabriel HernandezNo ratings yet

- Chapter 8 Eng01 Purposive CommunicationDocument5 pagesChapter 8 Eng01 Purposive CommunicationAngeline SilaganNo ratings yet

- English Verb Tenses GuideDocument4 pagesEnglish Verb Tenses GuideComuna IaraNo ratings yet

- Own It 3 - Student's Book - Starter-Unit 2Document30 pagesOwn It 3 - Student's Book - Starter-Unit 2Simona SanchezNo ratings yet

- The Eight Parts of Speech RevisedDocument11 pagesThe Eight Parts of Speech RevisedAlhaNo ratings yet

- Poetry Packet ELA IIDocument34 pagesPoetry Packet ELA IIEna BorlatNo ratings yet

- Unit 14 Exercise 3 - Future PlanDocument2 pagesUnit 14 Exercise 3 - Future PlanBailey NguyenNo ratings yet

- The Problem and Its BackgroundDocument36 pagesThe Problem and Its BackgroundBhea Irish Joy BuenaflorNo ratings yet

- Group A - SUMMER CAMP 2008 - Lesson PlansDocument4 pagesGroup A - SUMMER CAMP 2008 - Lesson Plansapi-19635461No ratings yet

- English For Social Work ProfessionDocument1,176 pagesEnglish For Social Work ProfessionValy de VargasNo ratings yet

- New Microsoft Word DocumentDocument14 pagesNew Microsoft Word DocumentdilasifNo ratings yet

- English SlangDocument9 pagesEnglish SlangJen EnglishNo ratings yet

- 121 Lec DelimitedDocument96 pages121 Lec DelimitedJonathanA.RamirezNo ratings yet

- MATH Grade 4 Quarter 1 Module 1 FINALDocument32 pagesMATH Grade 4 Quarter 1 Module 1 FINALLalaine Eladia EscovidalNo ratings yet

- Skype Conversations August - DecemberDocument318 pagesSkype Conversations August - DecemberCraven BirdNo ratings yet

- Mathematics: Year 6 Achievement TestDocument20 pagesMathematics: Year 6 Achievement TestIfrat Khankishiyeva100% (4)

- BS 9 Grade Will VS Be Going To FutureDocument3 pagesBS 9 Grade Will VS Be Going To FutureLEIDY VIVIANA RENDON VANEGASNo ratings yet

- University People 5 Contexts CommunicationDocument4 pagesUniversity People 5 Contexts CommunicationHoneyDewNo ratings yet

- Developing Academic Writing Skills Booklet - 1920 - Sem1Document44 pagesDeveloping Academic Writing Skills Booklet - 1920 - Sem1WinnieNo ratings yet

- Dialnet LegalTanslationExplained 2010054Document7 pagesDialnet LegalTanslationExplained 2010054ene_alexandra92No ratings yet

- Learn about Simple Present and Present Continuous TensesDocument9 pagesLearn about Simple Present and Present Continuous TensesFasilliaNo ratings yet

- Delhi Public School Firozabad Class-III Subject-EnglishDocument3 pagesDelhi Public School Firozabad Class-III Subject-EnglishPRNo ratings yet