Neural Networks

Neural Networks for Web Content Filtering

Pui Y. Lee and Siu C. Hui, Nanyang Technological University

Alvis Cheuk M. Fong, Massey University, Albany

The Intelligent Classification Engine uses neural networks’ learning capabilities to provide fast, accurate differentiation between pornographic and nonpornographic Web pages. The basic framework can also serve to distinguish other types of Web content.

With the proliferation of harmful Internet content such as pornography, violence, and hate messages, effective content-filtering systems are essential. Many Web-filtering systems are commercially available, and potential users can download trial versions from the Internet. However, the techniques these systems use are insufficiently accurate and do not adapt well to the ever-changing Web. (For more on current approaches and systems, see the “Web Content-Filtering Approaches” and “Current Web Content-Filtering Systems” sidebars.)

To solve this problem, we propose using artificial neural networks (ANNs) [1,2] to classify Web pages during content filtering. We focus on blocking pornography because it is among the most prolific and harmful Web content. According to CyberAtlas, pornography-related terms such as “sex” and “porn” are among the top 20 search terms queried at the 10 leading Internet portals and search engines [3]. Furthermore, research suggests that pornography is addictive and causes harmful side effects [4]. However, our general framework is adaptable for filtering other objectionable Web material.

Know the enemy

How do pornographic Web pages differ from others? We attempt to answer this question by studying these pages’ characteristics and analyzing data.

Characteristics

Understanding pornographic Web pages’ characteristics can help us develop effective content analysis techniques. Although it is well known that pornographic Web sites contain many sexually oriented images, text and other information can also help us distinguish these sites. We focus on three characteristics: page layout format, use of PICS (Platform for Internet Content Selection) ratings, and indicative terms.

Page layout format. We treat all the HTML documents used to construct a multiframe Web page as a single entity. This is because statistics obtained from any aspects of a Web page should be derived as a whole from the aggregated data collected from every HTML document that is part of that page.

PICS use. Web publishers can use PICS labels to limit access to Web content (see the “Web Content-Filtering Approaches” sidebar). A PICS label is usually distributed with the associated Web page by one of these methods:

• The Web publisher (or Web content owner) embeds the label in the HTML code’s header section.
• The Web server inserts the label in the HTTP packet’s header section before sending the page to a requesting client.

In both cases, we can’t determine whether a Web page has a PICS label simply by inspecting the Web page contents displayed in a browser. The first method requires viewing the Web page’s HTML code, which you can easily do with any major Web browser that supports such functionality. In contrast, the second method requires checking the HTTP packet’s header section, which you can’t do with any known browser. Consequently, to analyze pornographic Web sites’ use of PICS systems, we collect statistics from the samples’ HTML code.

Indicative terms. Terms (words or phrases) that indicate pornographic Web pages fall into two major groups according to their meanings and use. Most

48 1094-7167/02/$17.00 © 2002 IEEE IEEE INTELLIGENT SYSTEMS

Web Content-Filtering Approaches

The four major content-filtering approaches are Platform for Internet Content Selection, URL blocking, keyword filtering, and intelligent content analysis.

Platform for Internet Content Selection

PICS is a set of specifications for content-rating systems (for URLs for these rating systems, see the “Useful URLs” sidebar). It lets Web publishers associate labels or metadata with Web pages to limit certain Web content to target audiences. The two most popular PICS content-rating systems are RSACi and SafeSurf. Created by the Recreational Software Advisory Council, RSACi uses four categories: harsh language, nudity, sex, and violence. For each category, it assigns a number indicating the degree of potentially offensive content, ranging from 0 (none) to 4. SafeSurf is a much more detailed content-rating system. Besides identifying a Web site’s appropriateness for specific age groups, it uses 11 categories to describe Web content’s potential offensiveness. Each category has nine levels, from 1 (none) to 9.

Currently, Microsoft Internet Explorer, Netscape Navigator, and several Web-filtering systems offer PICS support and can filter Web pages according to the embedded PICS rating labels. However, PICS is a voluntary self-labeling system, and each Web content publisher is totally responsible for rating the content. Consequently, Web-filtering systems should use PICS only as a supplementary filtering approach.

URL blocking

This technique restricts or allows access by comparing the requested Web page’s URL (and equivalent IP address) with URLs in a stored list. Two types of lists can be maintained. A black list contains URLs of objectionable Web sites to block; a white list contains URLs of permissible Web sites. Most Web-filtering systems that employ URL blocking use black lists.

This approach’s chief advantages are speed and efficiency. A system can make a filtering decision by matching the requested Web page’s URL with one in the list even before a network connection to the remote Web server is made. However, this approach requires implementing a URL list, and it can identify only the sites on the list. Also, unless the list is updated constantly, the system’s accuracy will decrease over time owing to the explosive growth of new Web sites. Most Web-filtering systems that use URL blocking employ a large team of human reviewers to actively search for objectionable Web sites to add to the black list. They then make this list available for downloading as an update to the list’s local copy. This is both time consuming and resource intensive.

Despite this approach’s drawback, its fast and efficient operation is desirable in a Web-filtering system. Using sophisticated content analysis techniques during classification, the system can first identify the nature of a Web page’s content. If the system determines that the content is objectionable, it can add the page’s URL to the black list. Later, if a user tries to access the Web page, the system can immediately make a filtering decision by matching the URL. Dynamically updating the black list achieves speed and efficiency, and accuracy is maintained provided that content analysis is accurate.

Keyword filtering

This intuitively simple approach blocks access to Web sites on the basis of the occurrence of offensive words and phrases on those sites. It compares every word or phrase on a retrieved Web page against those in a keyword dictionary of prohibited words and phrases. Blocking occurs if the number of matches reaches a predefined threshold.

This fast content analysis method can quickly determine if a Web page contains potentially harmful material. However, it is well known for overblocking, that is, blocking many Web sites that do not contain objectionable content. Because it filters content by matching keywords (or phrases) such as “sex” and “breast,” it could accidentally block Web sites about sexual harassment or breast cancer, or even the home page of someone named Sexton. Although the dictionary of objectionable words and phrases does not require frequent updates, the high overblocking rate greatly jeopardizes a Web-filtering system’s capability and is often unacceptable. However, a Web-filtering system can use this approach to decide whether to further process a Web page using a more precise content analysis method, which usually requires more processing time.

Intelligent content analysis

A Web-filtering system can use intelligent content analysis to automatically classify Web content. One interesting method for implementing this capability is artificial neural networks, which can learn and adapt according to training cases fed to them [1]. (Our Intelligent Classification Engine uses this method; see the main article.) Such a learning and adaptation process can give semantic meaning to context-dependent words, such as “sex,” which can occur frequently in both pornographic and nonpornographic Web pages. To achieve high classification accuracy, ANN training should involve a sufficiently large number of training exemplars, including both positive and negative cases. ANN inputs can be characterized from the Web pages, such as the occurrence of keywords and key phrases, and hyperlinks to other similar Web sites.

Reference

1. G. Salton, Automatic Text Processing, Addison-Wesley, Boston, 1989.
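The keyword-filtering approach described in this sidebar can be sketched in a few lines. This is a minimal illustration, not any commercial product's algorithm: the function names, the two-word dictionary, and the use of naive substring matching are all assumptions made here to show why overblocking occurs.

```python
def keyword_matches(text, dictionary):
    """Count dictionary keywords found anywhere in the text (naive substring match)."""
    lowered = text.lower()
    return sum(lowered.count(keyword) for keyword in dictionary)


def should_block(text, dictionary, threshold):
    """Block when the number of matches reaches the predefined threshold."""
    return keyword_matches(text, dictionary) >= threshold


# Hypothetical two-entry dictionary, using the keywords quoted in the sidebar.
DICTIONARY = ["sex", "breast"]

for page in ("The Sexton family home page",
             "Breast cancer screening guide",
             "Local weather report"):
    print(page, "->", "blocked" if should_block(page, DICTIONARY, 1) else "allowed")
```

With a threshold of 1, the first two harmless pages are blocked, which is the overblocking behavior the sidebar describes. Raising the threshold reduces false positives at the cost of missing short objectionable pages.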

are sexually explicit terms; the rest consist primarily of legal terms used to establish the legal conditions of use of the material. Legal terms often appear because many pornographic Web sites’ entry pages contain a warning message block.

Most indicative terms occur in the pornographic Web page’s text. We can extract them from different locations of the corresponding HTML document that might contain information useful for distinguishing pornographic Web sites. These locations are

• The Web page title
• The warning message block
• Other viewable text in the Web browser window
• The “description” and “keywords” metadata
• The Web page’s URL and other URLs embedded in the Web page
• The image tooltip (the text string displayed when a user points to an object using a mouse; it usually occurs in the <IMG> tag)
• Graphical text (sometimes graphics or images contain text that we can extract)

Indicative terms might be displayed or nondisplayed in the Web browser window. Displayed terms appear in the Web page title, warning message block, other viewable text, and graphical text. Nondisplayed terms are stored in the URL, the “description” and “keywords” metadata, and the image tooltip.
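Several of the locations listed above can be read directly from the HTML source. The sketch below uses Python's standard `html.parser` module to pull out the title, the viewable text, the “description” and “keywords” metadata, and image tooltips. It is an illustration only: the class and function names are hypothetical, the tooltip is assumed to be the `alt` attribute of `<img>`, and the warning message block is not separated from other viewable text.

```python
from html.parser import HTMLParser


class LocationExtractor(HTMLParser):
    """Collects text from a few of the HTML locations where indicative terms occur."""

    def __init__(self):
        super().__init__()
        self.title, self.displayed, self.meta, self.tooltips = [], [], [], []
        self._in_title = False
        self._skip_depth = 0  # inside <script> or <style>, whose text is never displayed

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "meta" and (attrs.get("name") or "").lower() in ("description", "keywords"):
            self.meta.append(attrs.get("content") or "")
        elif tag == "img" and attrs.get("alt"):
            self.tooltips.append(attrs["alt"])  # assumption: tooltip text is the alt attribute

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False
        elif tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if not text or self._skip_depth:
            return
        (self.title if self._in_title else self.displayed).append(text)


def extract_locations(html):
    """Return the raw text found in each location as a dict of strings."""
    parser = LocationExtractor()
    parser.feed(html)
    parser.close()
    return {
        "title": " ".join(parser.title),
        "displayed": " ".join(parser.displayed),
        "metacontents": " ".join(parser.meta),
        "tooltip": " ".join(parser.tooltips),
    }
```

URLs and graphical text are left out of this sketch; extracting text from images would need an OCR step that is beyond a short example.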

SEPTEMBER/OCTOBER 2002 computer.org/intelligent 49


Current Web Content-Filtering Systems

Web-filtering systems are either client- or server-based. A client-based system performs Web content filtering solely on the computer where it is installed, without consulting remote servers about the nature of the Web content that a user tries to access. A server-based system provides filtering to computers on the local area network where it is installed. It screens outgoing Web requests, analyzes incoming Web pages to determine their content type, and blocks inappropriate material from reaching the client’s Web browser.

Table A summarizes the features of 10 popular Web-filtering systems (for URLs for these systems, see the “Useful URLs” sidebar). Only two systems specifically filter pornographic Web sites. Bold text indicates each system’s main approach. (For a description of the approaches, see the “Web Content-Filtering Approaches” sidebar.) No system relies on PICS (Platform for Internet Content Selection) as its main approach. Six systems rely mainly on URL blocking, and only two systems mainly use keyword filtering. I-Gear incorporates both URL blocking and Dynamic Document Review, a proprietary technique that dynamically looks for matches with keywords. Only WebChaperone (which is no longer sold or supported) employs content analysis as its main approach. It uses Internet Content Recognition Technology (iCRT), a unique mechanism that dynamically evaluates each Web page before the system passes the page to a Web browser. iCRT analyzes word count ratios, page length, page structure, and contextual phrases. The system then aggregates the results according to the attributes’ weighting. On the basis of the overall results, WebChaperone identifies whether the Web page contains pornographic material.

To gauge the filtering approaches’ accuracy, we evaluated five representative systems: Cyber Patrol, Cyber Snoop, CYBERsitter, SurfWatch, and WebChaperone. We installed each system on an individual computer and visited different Web sites while running the system. We collected the URLs of 200 pornographic and 300 nonpornographic Web pages and used them for evaluation. To more easily measure each approach’s accuracy, we limited each system to only its major approach. So, we configured Cyber Patrol and SurfWatch to use URL blocking, Cyber Snoop used keyword filtering, CYBERsitter used context-based key phrase filtering, and WebChaperone used iCRT.

Table B summarizes our results. We obtained the overall accuracy by averaging the percentage of correctly classified Web pages for both pornographic and nonpornographic pages. For the two systems that employ URL blocking, the number of incorrectly classified nonpornographic Web pages is small compared to those using keyword filtering. This shows that the black list (a list of URLs to block; see the “Web Content-Filtering Approaches” sidebar) the specialists compiled accurately excludes URLs of most nonpornographic Web sites, even if the Web sites contain sexually explicit terms in a nonpornographic context. However, both systems have fairly high occurrences of incorrectly classified pornographic Web pages. This highlights the problem of keeping the black list up to date.

Systems that rely on keyword filtering tend to perform well on pornographic Web pages, but the percentage of incorrectly classified nonpornographic Web pages can be high. This highlights the keyword approach’s major shortcoming. On the other hand, WebChaperone, which uses iCRT, achieves the highest overall accuracy: 91.6 percent. This underlines the effectiveness

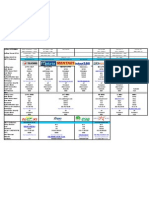

Table A. A comparison of 10 popular Web-filtering systems. Bold lettering indicates each system’s main approach.

| System | Location | PICS support | URL blocking | Keyword filtering | Content analysis | Filtering domain |
|---|---|---|---|---|---|---|
| Cyber Patrol | Client | Yes | Yes | Yes | No | General |
| Cyber Snoop | Client | Yes | Yes | Yes | No | General |
| CYBERsitter | Client | Yes | Yes | Yes (A) | No | General |
| I-Gear | Server | Yes | Yes | Yes (B) | No | General |
| Net Nanny | Client | No | Yes | Yes | No | General |
| SmartFilter | Server | No | Yes | No | No | General |
| SurfWatch | Client | Yes | Yes | Yes | No | General |
| WebChaperone | Client | Yes | Yes | Yes | Yes (C) | Pornographic |
| Websense | Server | No | Yes | Yes (D) | No | General |
| X-Stop | Client | Yes | Yes | Yes (E) | No | Pornographic |

The PICS support, URL blocking, keyword filtering, and content analysis columns together make up the content-filtering approach.

A. Context-based key-phrase filtering technique
B. Employed in Dynamic Document Review and software robots
C. Content analysis carried out using iCRT (Internet Content Recognition Technology)
D. Employed only in Web crawlers
E. Employed in MudCrawler

Table B. The performance of five Web-filtering systems.

| System | Major approach | Pornographic (200 total): correctly classified | Pornographic (200 total): incorrectly classified | Nonpornographic (300 total): correctly classified | Nonpornographic (300 total): incorrectly classified | Overall accuracy |
|---|---|---|---|---|---|---|
| Cyber Patrol | URL blocking | 163 (81.5%) | 37 (18.5%) | 282 (94.0%) | 18 (6.0%) | 87.75% |
| SurfWatch | URL blocking | 171 (85.5%) | 29 (14.5%) | 287 (95.7%) | 13 (4.3%) | 90.60% |
| Cyber Snoop | Keyword filtering | 187 (93.5%) | 13 (6.5%) | 247 (82.3%) | 53 (17.7%) | 87.90% |
| CYBERsitter | Context-based key phrase filtering | 183 (91.5%) | 17 (8.5%) | 255 (85.0%) | 45 (15.0%) | 88.25% |
| WebChaperone | iCRT | 177 (88.5%) | 23 (11.5%) | 284 (94.7%) | 16 (5.3%) | 91.60% |
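The overall accuracy column in Table B can be checked with a short calculation. The sketch below assumes, based on the printed figures, that the two per-class percentages are rounded to one decimal place before being averaged; the function name and the data layout are illustrative only.

```python
def overall_accuracy(porn_correct, nonporn_correct, porn_total=200, nonporn_total=300):
    """Average the per-class percentages of correctly classified pages."""
    porn_pct = round(100 * porn_correct / porn_total, 1)
    nonporn_pct = round(100 * nonporn_correct / nonporn_total, 1)
    return (porn_pct + nonporn_pct) / 2


# Correctly classified counts copied from Table B.
RESULTS = {
    "Cyber Patrol": (163, 282),
    "SurfWatch": (171, 287),
    "Cyber Snoop": (187, 247),
    "CYBERsitter": (183, 255),
    "WebChaperone": (177, 284),
}

for system, (porn, nonporn) in RESULTS.items():
    print(f"{system}: {overall_accuracy(porn, nonporn):.2f}%")
```

Because the measure averages the two class percentages rather than pooling all 500 pages, each class counts equally even though the nonpornographic sample is larger.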


Useful URLs

Cyber Patrol, www.cyberpatrol.com
Cyber Snoop, www.cyber-snoop.com/index.html
CYBERsitter, www.cybersitter.com
I-Gear, www.symantec.com/sabu/igear
Net Nanny, www.netnanny.com/home/home.asp
Platform for Internet Content Selection, www.w3.org/PICS
SafeSurf, www.safesurf.com
SmartFilter, www.smartfilter.com
SurfWatch, www.surfcontrol.com
Websense, www.Websense.com
X-Stop, www.xstop.com

Nondisplayed text is contained in HTML tags (blocks that begin with a “<” and end with a “>”); displayed text is not.

Statistics

For sample collection, we consider that a pornographic Web site fulfills any of these conditions:

• It contains sexually oriented content.
• It contains erotic stories and text descriptions of sexual acts.
• It shows images of sexual acts, including inanimate objects used sexually.
• It shows erotic full or partial nudity.
• It contains sexually violent text or graphics.

To generally represent such Web sites, we captured 100 different pornographic Web sites and defined their entry pages as samples for data collection.

Page layout format. We found that 86 percent of the entry pages used a single-frame layout. Among the 14 multiframe pages, 13 had a two-frame layout. Only one Web page had a three-frame layout. Although a single-frame layout appears prevalent among pornographic Web sites, our techniques must still be able to process and analyze multiframe Web pages.

PICS use. We also found that 89 percent did not use any PICS support. Of the 11 pages that did, nine used the RSACi content-rating system; the rest used SafeSurf (see the “Web Content-Filtering Approaches” sidebar). We suspect the low use of PICS among pornographic Web sites is because these sites’ publishers might not want their sites filtered out.

The results might not be statistically representative of overall PICS use among pornographic Web sites. However, they do indicate that implementing PICS support in a Web-filtering system is useful; some pornographic sites do have PICS labels embedded in the HTML code’s header section. So, checking PICS labels works best as a supplementary approach for positive identification.

Indicative terms. We compiled a list of 55 indicative terms comprising 42 sexually explicit terms and 13 legal terms. Tables 1 and 2 summarize the distribution of these terms in eight locations. As Table 1 shows, more than 90 percent of the terms appear in the “keywords” metadata, while approximately 88 percent appear in “Other viewable text.” These two locations together contribute more than 59 percent of the total sexually explicit term occurrences, while other locations have occurrences of approximately six to 10 percent, except graphical text, which contributes only 1.92 percent.

Table 2 shows that all 13 legal terms appear in the warning message block; this location contributes more than 50 percent of the total occurrences of legal terms. Also, more than 72 percent of the legal term occurrences appear in the Web pages’ displayed text.

Table 1. Use of sexually explicit terms in eight locations of 100 samples.

| Location | Number of unique terms (42 total) | Frequency of occurrence (5,673 total) |
|---|---|---|
| Web page title | 28 (66.67%) | 399 (7.03%) |
| Warning message block | 18 (42.86%) | 344 (6.06%) |
| Other viewable text | 37 (88.10%) | 1,919 (33.83%) |
| “description” metadata | 27 (64.29%) | 464 (8.18%) |
| “keywords” metadata | 38 (90.48%) | 1,438 (25.35%) |
| URL | 29 (69.05%) | 581 (10.24%) |
| Image tooltip | 31 (73.81%) | 419 (7.39%) |
| Graphical text | 22 (52.38%) | 109 (1.92%) |

Table 2. Use of legal terms in eight locations of 100 samples.

| Location | Number of unique terms (13 total) | Frequency of occurrence (708 total) |
|---|---|---|
| Web page title | 2 (15.38%) | 23 (3.25%) |
| Warning message block | 13 (100%) | 355 (50.14%) |
| Other viewable text | 8 (61.54%) | 135 (19.07%) |
| “description” metadata | 1 (7.69%) | 31 (4.38%) |
| “keywords” metadata | 1 (7.69%) | 72 (10.17%) |
| URL | 1 (7.69%) | 51 (7.20%) |
| Image tooltip | 2 (15.38%) | 39 (5.51%) |
| Graphical text | 1 (7.69%) | 2 (0.28%) |

Our ANN approach

We could use statistical methods to classify Web pages according to the statistical occurrence of certain features. Such methods include k-nearest neighbor classification [5], linear least-squares fit [6], linear discriminant analysis [7], and naïve Bayes probabilistic classification [8]. However, because real-world data such as we’re using tend to be noisy and are not clearly defined, linear or low-order statistical models cannot always describe them. So, we use ANNs because they are robust enough to fit a wide range of distributions accurately and can model any high-degree exponential models.

On the basis of our knowledge of pornographic-Web-site characteristics, we developed the Intelligent Classification Engine. The engine comprises two major processes: training and classification (see Figure 1). During training, the system learns from sample pornographic and nonpornographic Web pages to form a knowledge base of the ANN models. The system then classifies incoming Web pages according to their content.

Training

The Intelligent Classification Engine extracts the Web page’s representative features as inputs for training the ANN. Training’s objective is to let the ANN configure


itself and adjust its weight parameters according to the training exemplars, to facilitate generalization beyond the training samples. This requires a large set of training exemplars to obtain an ANN that produces statistically accurate results.

In our case, the training exemplar is a vector representing the sample Web page. Because our objective is to distinguish pornographic Web pages from nonpornographic Web pages, we have collected a total of 3,777 nonpornographic Web pages and 1,009 pornographic Web pages for training. To extensively cover a variety of Web pages with different kinds of content nature, we gathered the nonpornographic Web pages from 13 categories of the Yahoo! search engine. These include Arts & Humanities, Business & Economy, Computers & Internet, Education, Entertainment, Government, Health, News & Media, Recreation & Sports, Reference, Science, Social Science, and Society & Culture. The result was a training set of 4,786 exemplars.

Training has five steps: feature extraction, preprocessing, transformation, ANN model generation, and category assignment.

Figure 1. The Intelligent Classification Engine. [Diagram: the training path runs from training Web pages through feature extraction, preprocessing, transformation, the neural network, NN model generation, and category assignment; the classification path runs from online Web pages through feature extraction, preprocessing, transformation, the neural network, categorization, and metacontent checking to the classification results. Both paths share an engine database holding the training exemplar set, indicative term dictionary, transformation weights, neural network models, and cluster-to-category mapping.]

Feature extraction. We use frequencies of occurrence of indicative terms in a Web page to judge its relevance to pornography. Our analysis of pornographic Web pages indicates that indicative terms most likely appear in

• Displayed contents consisting of the Web page title, the warning message block, and other viewable text
• Nondisplayed contents including the “description” and “keywords” metadata, image tooltips, and URLs

However, we excluded URLs because of the difficulties of identifying indicative terms in a URL address. Because words in a URL are concatenated and are not separated by white spaces, extracting words or terms from it will be difficult. Furthermore, occurrences of indicative terms in the URLs in pornographic Web pages contribute only a small percentage to the total occurrences of indicative terms. So, excluding the URLs will not compromise the Web page’s relevance to pornography and will help minimize the system’s computational cost.

Feature extraction, then, parses a Web page to identify the relevant information that indicates the page’s nature or characteristics. The engine separates the extracted information into four types:

• Web page title
• Displayed contents, including the warning message and other viewable contents
• “description” and “keywords” metacontents (the information contained in the metadata fields)
• Image tooltip

Preprocessing. This step converts the raw text extracted from a Web page into numeric data representing the frequencies of occurrence of indicative terms. Figure 2 shows this step, which has two phases: tokenization and indicative-term identification and counting.

The extracted text must first be parsed into a list of single words. Because we initially focus on English-language Web sites, the tokenization algorithm treats white spaces and punctuation marks as separators between words. It converts each extracted word into lower case before inserting it into a list. To facilitate identifying phrasal terms, this phase preserves the word sequence in the list as it was in the Web page’s raw text. Because feature extraction produced four types of contents for each Web page, tokenization produces four word lists: one each for the Web page title, the displayed contents, the “description” and “keywords” metacontents, and the image tooltip. Because each list has a different degree of relation to the nature of the Web page, the lists will carry different weights when training the ANN.

The engine then feeds the four lists into indicative-term identification and counting. This phase uses an indicative-term dictionary to identify the indicative terms in the four lists. (We manually compiled this dictionary according to the analysis we described in the section “Know the enemy.”) It collects the occurrences for each set of indicative terms in the dictionary. Because there are 55 distinctive sets of indicative terms, the system obtains 55 frequencies of occurrence. Also, the system counts the total number of indicative-term occurrences and the total number of words in each list.
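The two preprocessing phases can be sketched as follows. This is a minimal illustration under stated assumptions rather than the engine's actual code: the function names and location keys are hypothetical, and `TERM_SETS` holds only the four term sets that are legible in Figure 3, whereas the real dictionary has 55 sets.

```python
import re

# Illustrative subset of the indicative-term dictionary. Each inner list is one
# set of variants that are counted as a single indicative term.
TERM_SETS = [
    ["18 or older", "18 or over"],
    ["of age", "of legal age"],
    ["adult links", "adult link"],
    ["xxx"],
]
LOCATIONS = ("title", "displayed", "metacontents", "tooltip")


def tokenize(text):
    """Lower-case the text and split on white space and punctuation, keeping word order."""
    return re.findall(r"[a-z0-9]+", text.lower())


def count_phrase(tokens, phrase):
    """Count contiguous occurrences of a (possibly multiword) phrase in a token list."""
    words = tokenize(phrase)
    n = len(words)
    return sum(1 for i in range(len(tokens) - n + 1) if tokens[i:i + n] == words)


def preprocess(contents):
    """Return (per-term frequencies, indicative-term total per list, word total per list).

    `contents` maps each location name to the raw text extracted from it.
    """
    term_freqs = [0] * len(TERM_SETS)
    indicative_per_list, words_per_list = [], []
    for location in LOCATIONS:
        tokens = tokenize(contents.get(location, ""))
        hits = [sum(count_phrase(tokens, variant) for variant in variants)
                for variants in TERM_SETS]
        term_freqs = [total + hit for total, hit in zip(term_freqs, hits)]
        indicative_per_list.append(sum(hits))
        words_per_list.append(len(tokens))
    return term_freqs, indicative_per_list, words_per_list
```

Keeping the tokens in their original order is what makes phrase matching possible: a phrasal term such as “of legal age” is found by comparing consecutive tokens, not by looking up single words.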


Figure 2. Preprocessing consists of tokenization, which locates individual words, and indicative-term identification and counting. [Diagram: the Web page title, displayed contents, metacontents, and image tooltip are tokenized into four word lists; indicative term identification and counting then uses the indicative term dictionary to produce the frequency of occurrence of the 55 indicative terms, the total indicative term occurrences in each of the four lists, and the total words in each of the four lists.]

Transformation. This step converts the group of numbers resulting from preprocessing into the Web page vector to feed into the ANN. Transformation involves three phases: vector encoding, weight multiplication, and normalization.

Vector encoding uses the data from preprocessing to form the basic vector. It has a dimension of 61 and consists of

• The 55 frequencies of occurrence of indicative terms (55 elements)
• The total number of indicative term occurrences in each of the four lists (four elements), which represents the relative importance of the four locations in the Web page
• The total number of an indicative term’s occurrences in the four lists (one element), which represents the term’s degree of relation to the nature of the Web page
• The total number of words in the four lists, which indicates the total number of words in the Web page (one element) and represents the amount of Web page contents

These elements form the vector representing a Web page owing to their significance in aiding Web page content identification. The system uses the last two elements to train the ANN on the relation between a Web page’s total number of indicative terms and total number of words.

Weight multiplication weights the basic vector according to the 61 elements’ relative indicativeness. An element’s indicativeness is the degree of indication it contributes toward a specific type of content (in this case, pornography). For example, “xxx” has a higher relative indicativeness than “babe” does, while indicative terms in the displayed contents have a lower relative indicativeness than those in the metacontents.

Set initially according to the relative indicativeness, the weights are adjusted before each ANN training session. So, the system also treats them as parameters for fine-tuning the network’s performance to improve accuracy. It stores the 61 elements’ weights in a weight database with the weight entry’s position corresponding to the index of the respective elements.

The engine then normalizes the weighted vector according to a common Euclidean length, giving the final Web page vector to feed to the ANN. This normalization improves the numerical condition of the problem the ANN will tackle and makes the training process better behaved.

Figure 3 illustrates an example of transformation.

ANN model generation. This step trains the ANN for online classification. Two ANNs that have proven effective for document classification are Kohonen’s Self-Organizing Maps [9] (KSOM) and the Fuzzy Adaptive Resonance Theory [10] (Fuzzy ART) [11,12]. So, we evaluated them for Web content classification.

Because each vector contains 61 elements, the KSOM network requires 61 inputs. We determined the output neuron array’s dimension after conducting a series of training experiments on the ANN with several different dimensions. We found that if the dimension is smaller than 7 × 7, some generated clusters contain a large mixture of pornographic and nonpornographic Web pages. However, output neuron arrays that are larger than 7 × 7 do not significantly improve accuracy. Also, training an ANN with a larger number of output neurons will take much more time. So, we consider a 7 × 7 output neuron array the best compromise between maximizing the classification distinctiveness and minimizing training complexity.

Figure 4 shows the KSOM training algorithm. Before training, we must set several parameters: the number of training iterations, the initial and final learning rates, and the initial neighborhood size and its decrement interval. For good statistical accuracy, the number of iterations should be at least 500 times larger than the number of output neurons [9]. Because we have 49 neurons, we train the network for 24,500 iterations. In our implementation of the KSOM network, the learning rate linearly decreases in each iteration from an initial value of 0.6 toward the final learning rate of 0.01. The initial neighborhood size is set to five and decreases once every 4,000 iterations.

Before a training session begins, we initialize the network weights with random real numbers between 0 and 1. Each training iteration feeds the whole set of training exemplars to the network. The training session determines the winning neuron by finding the response that gives the smallest Euclidean distance between the exemplar and the weight vector. Only the weights associated with the winning neuron and its neighborhood are adjusted to adapt to the input pattern. When the training session successfully completes, the engine saves the network’s weights into the ANN model.

When a training session ends, clusters of


Web page preprocessing

Index  Web page data                       Frequency
Indicative term
1      18 or older | 18 or over | ...      1
2      18 years old | 18 year old | ...    1
3      of age | of legal age               0
4      adult links | adult link            0
...    ...                                 ...
55     xxx                                 3
Total indicative terms
56     In image tooltip word list          1
57     In displayed content word list      14
58     In metacontent word list            8
59     In title word list                  1
60     Sum of all indicative terms         24
61     Sum of all words                    236

Vector encoding, weight multiplication, and normalization

Index              1       2       3  4  ...  55      56      57      58      59      60      61
Basic vector       1       1       0  0  ...  3       1       14      8       1       24      236
Weighted vector    1       1       0  0  ...  12      3.5     14      32      3       24      236
Normalized vector  0.0042  0.0042  0  0  ...  0.0499  0.0146  0.0582  0.1331  0.0125  0.0998  0.9775

Figure 3. An example of transformation. Vector encoding is the result of preprocessing that forms the 61-element basic vector. Weight multiplication then weighs the elements according to their relative indicativeness. The weighted vector is then normalized before being fed to the ANN.
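The two transformation steps shown in Figure 3 (weight multiplication, then normalization) can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the engine's actual code: the function name `transform`, the three-element toy vector, and its weights are hypothetical (the weights 1, 4, and 4 mirror the ratios visible for elements 1, 55, and 58 in Figure 3), and the common Euclidean length is assumed to be 1.

```python
import math


def transform(basic_vector, weights):
    """Weight each element, then scale the result to unit Euclidean length."""
    if len(basic_vector) != len(weights):
        raise ValueError("vector and weights must have the same length")
    # Weight multiplication: emphasize the more indicative elements.
    weighted = [x * w for x, w in zip(basic_vector, weights)]
    # Normalization: divide by the Euclidean length of the weighted vector.
    length = math.sqrt(sum(v * v for v in weighted))
    if length == 0.0:
        return weighted  # an all-zero vector cannot be normalized
    return [v / length for v in weighted]


# Toy three-element example; the engine itself uses 61 elements.
basic = [1, 3, 8]
weights = [1.0, 4.0, 4.0]  # hypothetical weights, for illustration only
page_vector = transform(basic, weights)
```

Because every page vector ends up with the same length, pages with very different word counts become comparable by direction rather than by magnitude.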

Web pages are formed at the array of output neurons. These clusters might contain different proportions of pornographic and nonpornographic Web pages. Category assignment will classify each cluster.

Step 1: Initialize the weight vector of all output neurons.
Step 2: Determine the output winning neuron m by searching for the shortest normalized Euclidean distance between the input vector and each output neuron’s weight vector:

$$\|X - W_m\| = \min_{j = 1, \ldots, M} \|X - W_j\|,$$

where X is the input vector, W_j is the weight vector of output neuron j, and M is the total number of output neurons.
Step 3: Let N_m(t) denote a set of indices corresponding to a neighborhood size of the current winner neuron m. Slowly decrease the neighborhood size during the training session. Update the weights of the weight vector associated with the winner neuron m and its neighboring neurons:

$$\Delta W_j(t) = \alpha(t)\,[X(t) - W_j(t)] \quad \text{for } j \in N_m(t),$$

where α is a positive-valued learning factor, α ∈ [0, 1]. Slowly decrease α with each training iteration. So, the new weight vector is given by

$$W_j(t + 1) = W_j(t) + \alpha(t)\,[X(t) - W_j(t)] \quad \text{for } j \in N_m(t).$$

Repeat steps 2 and 3 for every exemplar in the training set for a user-defined number of iterations.

Figure 4. The KSOM (Kohonen’s Self-Organizing Maps) training algorithm.

The Fuzzy ART network will have 61 nodes at its F0 layer. Owing to complement coding, each F0 node will feed data to two F1 nodes. So, the F1 layer has 122 (61 × 2) nodes. Because we wish to compare Fuzzy ART to the KSOM network, the network should generate as close to 49 (7 × 7) clusters as possible. To meet this requirement, the engine constructs 60 F2 nodes.

Next, we need to select values for three Fuzzy ART network parameters: the choice parameter α, the vigilance parameter ρ, and the learning parameter β. α affects the inputs
produced at each node of the F2 layer according to all the nodes of the F1 layer. To minimize the network training time, α must have a small value [10]. We chose α = 0.1.

ρ indicates the threshold of proximity to a cluster that an input vector must fulfill before a desirable match occurs. The choice of ρ affects the number of clusters that the Fuzzy ART network generates. In this case, ρ should have a value that lets the network generate approximately 49 clusters. After experimenting with the network several times, we chose ρ = 0.66.

β manipulates the adjustment of weight vector W_J, where node J is the chosen cluster that fulfills the vigilance match. We use the fast-commit, slow-recode updating scheme. More specifically, β = 1 when the chosen cluster is an uncommitted F2 node, while β = 0.5 for a committed F2 node. Unlike the case of KSOM, we do not need to decrease the learning parameter as training progresses.

Figure 5 shows the training algorithm for the Fuzzy ART network. Before the training session begins, the engine initializes the weights in all weight vectors to 1 and marks all the F2 layer nodes as uncommitted. Once the training session commences, the engine feeds the training exemplars to the network. This process will carry on until all weight vectors become static. The engine collects the network parameters, weights of all weight vectors, and committed status of all F2 nodes and stores them as the ANN model for our Fuzzy ART implementation.

Similarly to KSOM training, the Fuzzy ART training session forms Web page clusters that category assignment will classify.

Step 1: Initialize the weights of all weight vectors to 1, and set all F2 layer nodes to “uncommitted.”
Step 2: Apply complement coding to the M-dimensional input vector. The resultant complement-coded vector I is of 2M dimensions:

$$I = (a, a^C) = (a_1, \ldots, a_M, a_1^C, \ldots, a_M^C),$$

where $a_i^C = 1 - a_i$ for i ∈ [1, M].
Step 3: Compute the choice function value for every node of the F2 layer. For the complement-coded vector I and node j of the F2 layer, the choice function T_j is

$$T_j(I) = \frac{|I \wedge W_j|}{\alpha + |W_j|},$$

where W_j is the weight vector of node j, and α is the choice parameter. The fuzzy AND operator ∧ is defined by $(P \wedge Q)_i = \min(p_i, q_i)$, and the norm |·| is defined as

$$|P| \equiv \sum_{i=1}^{M} |p_i|.$$

Step 4: Find the node J of the F2 layer that gives the largest choice function value:

$$T_J = \max\{T_j : j = 1, \ldots, N\}.$$

The output vector Y of layer F2 is thus given by y_J = 1 and y_j = 0 for j ≠ J.
Step 5: Determine if resonance occurs by checking if the chosen node J meets the vigilance threshold:

$$\frac{|I \wedge W_J|}{|I|} \geq \rho,$$

where ρ is the vigilance parameter. If resonance occurs, update the weight vector W_J; the new W_J is given by

$$W_J^{new} = \beta\,(I \wedge W_J^{old}) + (1 - \beta)\,W_J^{old}.$$

Otherwise, set the value of the choice function T_J to 0.
Repeat steps 4 and 5 until a chosen node meets the vigilance threshold.
For every input pattern in the training set, perform steps 2 to 5.
Repeat the whole process until the weights in all weight vectors become unchanged.

Figure 5. The Fuzzy ART (Fuzzy Adaptive Resonance Theory) training algorithm.
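The KSOM training steps in Figure 4 can be sketched as follows. This is a minimal illustration rather than the engine's implementation: the names `train_ksom` and `winner`, the linear decay of both the learning factor and the neighborhood size, the square (Chebyshev) neighborhood on the output grid, and the default parameter values are all assumptions, since the article only says that both quantities decrease slowly.

```python
import math
import random


def winner(weights, x):
    """Step 2: index of the neuron whose weight vector is closest to x."""
    return min(range(len(weights)), key=lambda j: math.dist(x, weights[j]))


def train_ksom(exemplars, rows=7, cols=7, iterations=20, alpha0=0.5, seed=0):
    rng = random.Random(seed)
    dim = len(exemplars[0])
    # Step 1: random initial weights between 0 and 1 for every output neuron.
    weights = [[rng.random() for _ in range(dim)] for _ in range(rows * cols)]
    radius0 = max(rows, cols) / 2.0
    for t in range(iterations):
        frac = 1.0 - t / iterations   # assumed linear decay schedule
        alpha = alpha0 * frac         # learning factor alpha(t)
        radius = radius0 * frac       # neighborhood size for N_m(t)
        for x in exemplars:           # each iteration feeds the whole set
            m = winner(weights, x)
            m_row, m_col = divmod(m, cols)
            # Step 3: update the winner and its grid neighbors.
            for j, w in enumerate(weights):
                j_row, j_col = divmod(j, cols)
                if max(abs(j_row - m_row), abs(j_col - m_col)) <= radius:
                    for i in range(dim):
                        w[i] += alpha * (x[i] - w[i])
    return weights
```

After training, `winner(weights, page_vector)` gives the index of the cluster that a page vector activates.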
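The Fuzzy ART steps in Figure 5 can be sketched in a similar way. Again this is a hedged illustration, not the authors' code: the function names, the `max_epochs` cap, and the tie-breaking rule (lowest node index first) are assumptions. Instead of resetting a rejected node's choice value, the sketch removes the node from a list of candidates, which has the same effect of excluding it from the search for the current input.

```python
def complement_code(a):
    """Step 2: append the complement 1 - a_i of every element."""
    return list(a) + [1.0 - v for v in a]


def fuzzy_and(p, q):
    return [min(pi, qi) for pi, qi in zip(p, q)]


def norm(p):
    return sum(abs(v) for v in p)


def train_fuzzy_art(inputs, n_nodes, alpha=0.1, rho=0.66,
                    beta_committed=0.5, max_epochs=100):
    dim = 2 * len(inputs[0])
    # Step 1: all weights start at 1 and all F2 nodes are uncommitted.
    weights = [[1.0] * dim for _ in range(n_nodes)]
    committed = [False] * n_nodes
    for _ in range(max_epochs):
        changed = False
        for a in inputs:
            i_vec = complement_code(a)
            # Step 3: choice function value for every F2 node.
            t = [norm(fuzzy_and(i_vec, w)) / (alpha + norm(w)) for w in weights]
            candidates = list(range(n_nodes))
            while candidates:
                j = max(candidates, key=lambda k: t[k])   # Step 4
                match = fuzzy_and(i_vec, weights[j])
                if norm(match) / norm(i_vec) >= rho:      # Step 5: resonance
                    # Fast commit (beta = 1), slow recode (beta = 0.5).
                    beta = beta_committed if committed[j] else 1.0
                    new_w = [beta * m + (1.0 - beta) * w
                             for m, w in zip(match, weights[j])]
                    changed = changed or new_w != weights[j]
                    weights[j] = new_w
                    committed[j] = True
                    break
                candidates.remove(j)  # exclude this node and search again
        if not changed:               # weights are static: training is done
            break
    return weights, committed
```

With two well-separated inputs such as `[0.1, 0.1]` and `[0.9, 0.9]`, each input commits its own F2 node, and the remaining nodes stay uncommitted.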

Category assignment. After the ANN generates the clusters, we still need to determine each cluster’s nature. This is because a cluster defines a group of Web pages with similar features and characteristics but does not classify those similarities.

The engine assigns each cluster to one of three categories: pornographic, nonpornographic, or unascertained. Unascertained clusters contain a fair mixture of pornographic and nonpornographic Web pages. Table 3 summarizes the thresholds for category assignment. For example, if a cluster contains 70 percent of Web pages labeled as “pornographic,” we map the cluster to the “pornographic” category.

Table 3. Thresholds for category assignment.

                   Proportion of Web pages in clusters
Cluster category   Pornographic   Nonpornographic
Pornographic       [70%, 100%]    [0%, 30%)
Nonpornographic    [0%, 30%)      [70%, 100%]
Unascertained      [30%, 70%)     [30%, 70%]

Table 4. Training efficiency for the Kohonen’s Self-Organizing Maps and the Fuzzy Adaptive Resonance Theory networks.

                                Results
Attribute                       KSOM                       Fuzzy ART
Number of inputs                61                         61
Number of output neurons        49                         47
Number of iterations            24,500                     93
Number of training exemplars    4,786                      4,786
Total processing time           37 hrs., 43 min., 23 sec.  47 sec.

Table 5. Training accuracy.

ANN type    Web page          Correctly classified   Incorrectly classified   Unascertained   Total
KSOM        Pornographic      911 (90.3%)            27 (2.7%)                71 (7.0%)       1,009
            Nonpornographic   3,529 (93.4%)          56 (1.5%)                192 (5.1%)      3,777
            Total             4,440 (92.8%)          83 (1.7%)                263 (5.5%)      4,786
Fuzzy ART   Pornographic      780 (77.3%)            100 (9.9%)               129 (12.8%)     1,009
            Nonpornographic   3,429 (90.8%)          88 (2.3%)                260 (6.9%)      3,777
            Total             4,209 (87.9%)          188 (3.9%)               389 (8.1%)      4,786

Table 6. Classification accuracy. The “Meta” column indicates the number of Web pages that metacontent checking classified.

                              Correctly classified   Incorrectly classified
ANN type    Web page          ANN      Meta          ANN      Meta            Unascertained   Total
KSOM        Pornographic      499      9             23       0               4               535
            Nonpornographic   496      1             5        2               19              523
            Total             1,005 (95.0%)          30 (2.8%)                23 (2.2%)       1,058
Fuzzy ART   Pornographic      428      32            47       0               28              535
            Nonpornographic   475      8             7        9               24              523
            Total             943 (89.1%)            63 (6.0%)                52 (4.9%)       1,058

The system records the results in a cluster-to-category mapping database. Each database
entry has a unique ID identifying one of the generated clusters and its category.

Classification

This process uses the trained ANN to classify incoming Web pages; it outputs one of the three predefined categories. Similar to the training process, it also performs feature extraction, preprocessing, and transformation for each incoming Web page. After these steps have generated the Web page vector, the system feeds the vector into the ANN to produce an activated cluster. The categorization step uses the cluster-to-category mapping database to determine the activated cluster’s category, which it uses to classify the corresponding Web page.

To further reduce the number of unascertained Web pages, we introduce a postprocessing step called metacontent checking. This step applies keyword filtering to the “description” and “keywords” metacontents in the HTML header of the unascertained Web pages. This mechanism is effective because the metacontents include terms directly related to the associated Web pages’ subject. For keywords, we use the terms in the indicative terms dictionary. If the filtering finds at least one indicative term in the metacontents, the engine classifies the associated Web page as pornographic. Otherwise, it identifies the page as nonpornographic. If the engine cannot find the metacontents or they do not exist, the page remains unascertained.

Performance evaluation

For training, we used the 4,786 Web pages (93,578,232 bytes) mentioned in the “Training” section. First, we measured the processing time required for the three pre-ANN steps (feature extraction, preprocessing, and transformation) for both training and classification. The three steps took 167 seconds to process all Web pages (an average of 35 ms per page).

Next, we measured the training efficiency and accuracy for KSOM and Fuzzy ART. Tables 4 and 5 summarize the results. Although KSOM requires much longer training time, it produces better training accuracy and gives a smaller set of unascertained Web pages.

Finally, we measured both networks’ classification accuracy. We compiled a testing exemplar set with 535 pornographic Web pages and 523 nonpornographic Web pages. Table 6 summarizes the results. The “Meta” column indicates the number of Web pages that metacontent checking classified.

Figure 6. The engine misclassified this page as pornographic because it contains sexually explicit terms in its displayed contents and the “description” and “keywords” metacontents.

From these results, we conclude that the KSOM network performed much better than
Fuzzy ART. Overall, the ANNs effectively generalized information for solving new cases. Furthermore, a simple technique such as metacontent checking was also beneficial.

To help us improve the engine, we also analyzed cases of misclassifications. For example, the engine misclassified the Web page in Figure 6 as pornographic. The main reason for the misclassification is that the Web page contains many sexually explicit terms in its displayed contents and the “description” and “keywords” metacontents.

Another common reason for misclassification is the insufficient text information in some Web pages that contain mainly image information. If a page’s metacontents do not contain any information, and the page does not display any warning messages as text, the system will misclassify it.

Compared to Web-filtering systems we have surveyed (see the “Current Web Content-Filtering Systems” sidebar), our Intelligent Classification Engine based on the KSOM network model is clearly more accurate. Our results indicate that the engine can automate the maintenance of a URL black list (a list of URLs to block; see the “Web Content-Filtering Approaches” sidebar) for fast, effective online filtering. Also, automated content classification minimizes the need to manually examine Web pages.

Our research is advancing in two directions. First, we are developing heuristics to complement machine intelligence for better classification accuracy. A particular focus is Web pages that do not contain much text. We are applying pattern recognition techniques for understanding graphical information. Second, we are extending the work to cover multilingual, XML, and nonpornographic but objectionable (for example, violence- and drug-related) Web pages. Because we have established the framework, the changes require only the extraction of exemplars that characterize such Web content.

Acknowledgments

The list of products surveyed in this article is not exhaustive. We are not related to any of the vendors, and we do not endorse any of these products.

References

1. G. Salton, Automatic Text Processing, Addison-Wesley, Boston, 1989.
2. R.P. Lippmann, “An Introduction to Computing with Neural Networks,” IEEE ASSP Magazine, vol. 4, no. 2, Apr. 1987, pp. 4–22.
3. M. Pastore, “Search Engines, Browsers Still Confusing Many Web Users,” CyberAtlas, INT Media Group, Darien, Conn., 14 Feb. 2001, http://cyberatlas.internet.com/big_picture/applications/article/0,,1301_588851,00.html.
4. The Effects of Pornography and Sexual Messages, National Coalition for the Protection of Children & Families, Cincinnati, Ohio, www.nationalcoalition.org/pornharm.phtml?ID=102.
5. Y. Yang, “An Evaluation of Statistical Approaches to Text Categorization,” Information Retrieval, vol. 1, nos. 1–2, Apr. 1999, pp. 69–90.
6. Y. Yang and C.G. Chute, “An Application of Least Squares Fit Mapping to Text Information Retrieval,” Proc. 16th Ann. Int’l ACM SIGIR Conf. Research and Development in Information Retrieval (SIGIR 93), ACM Press, New York, 1993, pp. 281–290.
7. G.J. Koehler and S.S. Erenguc, “Minimizing Misclassifications in Linear Discriminant Analysis,” Decision Sciences, vol. 21, no. 1, 1990, pp. 63–85.
8. A. McCallum and K. Nigam, “A Comparison of Event Models for Naïve Bayes Text Classification,” AAAI-98 Workshop Learning for Text Categorization, AAAI Press, Menlo Park, Calif., 1998, pp. 41–48.
9. T. Kohonen, Self-Organizing Maps, Springer-Verlag, Berlin, 1995.
10. G.A. Carpenter, S. Grossberg, and D.B. Rosen, “Fuzzy ART: Fast Stable Learning and Categorization of Analog Patterns by an Adaptive Resonance System,” Neural Networks, vol. 4, no. 6, July 1991, pp. 759–771.
11. G. Troina and N. Walker, “Document Classification and Searching: A Neural Network Approach,” ESA Bull., no. 87, Aug. 1996; http://esapub.esrin.esa.it/bulletin/bullet87/troina87.htm.
12. D. Roussinov and H. Chen, “A Scalable Self-Organizing Map Algorithm for Textual Classification: A Neural Network Approach to Thesaurus Generation,” Communication and Cognition - Artificial Intelligence, vol. 15, nos. 1–2, Spring 1998, pp. 81–111.

The Authors

Pui Y. Lee is a graduate student in Nanyang Technological University’s School of Computer Engineering. His research interests are neural networks, Web page classification, intelligent filtering, and Internet technology. He received his BASc (Hons.) in computer engineering from NTU. Contact him at the School of Computer Eng., Nanyang Technological Univ., Blk N4, #02A-32, Nanyang Ave, Singapore 639798; a3397245@ntu.edu.sg.

Siu C. Hui is an associate professor in Nanyang Technological University’s School of Computer Engineering. His research interests include data mining, Internet technology, and multimedia systems. He received his BSc in mathematics and his DPhil in computer science, both from the University of Sussex. He is a member of the IEEE and ACM. Contact him at the School of Computer Eng., Nanyang Technological Univ., Blk N4, #02A-32, Nanyang Ave., Singapore 639798, Republic of Singapore; asschui@ntu.edu.sg; www.ntu.edu.sg/sce.

Alvis Cheuk M. Fong is a faculty member in Massey University’s Institute of Information and Mathematical Sciences. His research interests include various aspects of Internet technology, information theory, and video and image signal processing. He received his BEng and MSc from Imperial College, London, and his PhD from the University of Auckland. He is a member of the IEEE and IEE, and is a Chartered Engineer. Contact him at the Inst. of Information and Mathematical Sciences, Massey Univ., Albany Campus, Private Bag 102-904, North Shore Mail Centre, Auckland, New Zealand; a.c.fong@massey.ac.nz; www.massey.ac.nz/~acfong.
