Uploaded by Selenzo Solomon

I. Joint Distribution Example

$p(1, 1) = 0.3, \quad p(2, 1) = 0.1$

$p(1, 2) = 0.1, \quad p(2, 2) = 0.5$

Expectation of $g(x, y) = xy$:

$$E[XY] = 1 \cdot 0.3 + 2 \cdot 0.1 + 2 \cdot 0.1 + 4 \cdot 0.5 = 2.7$$

Marginal probability mass functions:

$p_X(1) = p(1, 1) + p(1, 2) = 0.4$

$p_X(2) = p(2, 1) + p(2, 2) = 0.6$

$p_Y(1) = p(1, 1) + p(2, 1) = 0.4$

$p_Y(2) = p(1, 2) + p(2, 2) = 0.6$
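The arithmetic above can be reproduced with a few lines of Python. This is only a sketch: the dictionary layout and the helper names `expectation` and `marginal` are illustrative choices, not part of the notes.

```python
# Joint pmf from the example, keyed by (x, y).
joint = {(1, 1): 0.3, (2, 1): 0.1, (1, 2): 0.1, (2, 2): 0.5}

def expectation(g, pmf):
    """Return E[g(X, Y)] as the pmf-weighted sum of g over all (x, y)."""
    return sum(g(x, y) * p for (x, y), p in pmf.items())

def marginal(pmf, axis):
    """Sum the joint pmf over the other variable (axis 0 gives p_X, axis 1 gives p_Y)."""
    out = {}
    for point, p in pmf.items():
        out[point[axis]] = out.get(point[axis], 0.0) + p
    return out

e_xy = expectation(lambda x, y: x * y, joint)
p_x = marginal(joint, 0)
p_y = marginal(joint, 1)
print("E[XY] =", round(e_xy, 10))
print("p_X =", p_x)
print("p_Y =", p_y)
```

Up to floating-point rounding, the printed values should agree with the hand computation above.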

II. Conditional distributions

A. Definition: The conditional probability mass function for discrete r.v.s X given Y = y is

$$p_{X|Y}(x|y) = P(X = x \mid Y = y) = \frac{P(X = x, Y = y)}{P(Y = y)} = \frac{p(x, y)}{p_Y(y)},$$

for all y such that P(Y = y) > 0.

B. Example: Using the same joint pmf given above, compute $p_{X|Y}(x|y)$.

$$p_{X|Y}(1|1) = \frac{p(1, 1)}{p_Y(1)} = \frac{0.3}{0.4} = 0.75$$

$$p_{X|Y}(1|2) = \frac{p(1, 2)}{p_Y(2)} = \frac{0.1}{0.6} \approx 0.167$$

$$p_{X|Y}(2|1) = \frac{p(2, 1)}{p_Y(1)} = \frac{0.1}{0.4} = 0.25$$

$$p_{X|Y}(2|2) = \frac{p(2, 2)}{p_Y(2)} = \frac{0.5}{0.6} \approx 0.833.$$
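As a quick check of the four conditional probabilities, the definition can be applied mechanically to the joint pmf. The function name `conditional_x_given_y` is a hypothetical helper introduced for this sketch.

```python
# Joint pmf from the example, keyed by (x, y).
joint = {(1, 1): 0.3, (2, 1): 0.1, (1, 2): 0.1, (2, 2): 0.5}

def conditional_x_given_y(pmf):
    """Return {(x, y): p(x, y) / p_Y(y)} for every y with p_Y(y) > 0."""
    p_y = {}
    for (_, y), p in pmf.items():
        p_y[y] = p_y.get(y, 0.0) + p
    return {(x, y): p / p_y[y] for (x, y), p in pmf.items() if p_y[y] > 0}

cond = conditional_x_given_y(joint)
for (x, y), p in sorted(cond.items()):
    print(f"p_X|Y({x}|{y}) = {p:.3f}")
```

For each fixed y the conditional probabilities sum to 1, which is a useful sanity check on any conditional pmf.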

C. Example: Suppose X ~ Bin($n_1$, p) and Y ~ Bin($n_2$, p) are independent, and let Z = X + Y and q = 1 − p. Calculate $p_{X|Z}(x|z)$.

$$
\begin{aligned}
p_{X|Z}(x|z) &= \frac{p_{X,Z}(x, z)}{p_Z(z)} = \frac{p_{X,Y}(x, z - x)}{p_Z(z)} = \frac{p_X(x)\, p_Y(z - x)}{p_Z(z)} \\
&= \frac{\binom{n_1}{x} p^{x} q^{n_1 - x} \binom{n_2}{z - x} p^{z - x} q^{n_2 - z + x}}{\binom{n_1 + n_2}{z} p^{z} q^{n_1 + n_2 - z}} \\
&= \frac{\binom{n_1}{x} \binom{n_2}{z - x}}{\binom{n_1 + n_2}{z}}.
\end{aligned}
$$

The third equality uses the independence of X and Y, and the denominator uses the fact that Z ~ Bin($n_1 + n_2$, p).

The last formula is the pmf of the Hypergeometric distribution, the distribution that is canonically associated with the following experiment. Suppose you draw m balls (here m = z) without replacement from an urn containing $n_1$ black balls and $n_2$ red balls. The Hypergeometric is the distribution of the number of black balls you selected.
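The identity can be checked numerically. The sketch below assumes X ~ Bin(n1, p) and Y ~ Bin(n2, p) are independent; the parameter values and the helper names are hypothetical and chosen only for illustration.

```python
from math import comb

def binom_pmf(k, n, p):
    """Binomial(n, p) pmf at k; zero outside 0..n."""
    if k < 0 or k > n:
        return 0.0
    return comb(n, k) * p**k * (1 - p) ** (n - k)

def cond_x_given_z(x, z, n1, n2, p):
    """P(X = x | X + Y = z) for independent X ~ Bin(n1, p), Y ~ Bin(n2, p)."""
    joint = binom_pmf(x, n1, p) * binom_pmf(z - x, n2, p)
    # p_Z(z) by summing the joint pmf over all ways to split z between X and Y.
    p_z = sum(binom_pmf(k, n1, p) * binom_pmf(z - k, n2, p) for k in range(z + 1))
    return joint / p_z

def hypergeom_pmf(x, z, n1, n2):
    """Probability of x black balls in z draws from n1 black and n2 red balls."""
    if x < 0 or x > n1 or z - x < 0 or z - x > n2:
        return 0.0
    return comb(n1, x) * comb(n2, z - x) / comb(n1 + n2, z)

n1, n2, p, z = 5, 7, 0.3, 4  # hypothetical values
max_gap = max(
    abs(cond_x_given_z(x, z, n1, n2, p) - hypergeom_pmf(x, z, n1, n2))
    for x in range(z + 1)
)
print("largest difference between the two pmfs:", max_gap)
```

Changing p leaves the conditional pmf unchanged, which matches the fact that p cancels in the derivation.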

D. Definition: The conditional probability density function for continuous r.v.s X given Y = y is

$$f_{X|Y}(x|y) = \frac{f(x, y)}{f_Y(y)},$$

for all y such that $f_Y(y) > 0$.

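E. Example:

$$f(x, y) = \begin{cases} 6xy(2 - x - y) & 0 < x < 1,\ 0 < y < 1 \\ 0 & \text{otherwise.} \end{cases}$$

For 0 < y < 1, the marginal density is $f_Y(y) = \int_0^1 6xy(2 - x - y)\,dx = y(4 - 3y)$, so

$$f_{X|Y}(x|y) = \frac{f(x, y)}{f_Y(y)} = \frac{6xy(2 - x - y)}{\int_0^1 6xy(2 - x - y)\,dx} = \frac{6x(2 - x - y)}{4 - 3y}.$$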
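A numerical sanity check of this conditional density is sketched below. It uses a plain midpoint rule rather than any particular library; the helper names and the test point `y0` are hypothetical.

```python
def f(x, y):
    """Joint pdf from the example: 6xy(2 - x - y) on the open unit square."""
    return 6 * x * y * (2 - x - y) if 0 < x < 1 and 0 < y < 1 else 0.0

def integrate(g, a, b, steps=2000):
    """Midpoint-rule approximation of the integral of g over [a, b]."""
    h = (b - a) / steps
    return h * sum(g(a + (i + 0.5) * h) for i in range(steps))

def f_y(y):
    """Marginal density of Y, obtained by integrating x out numerically."""
    return integrate(lambda x: f(x, y), 0.0, 1.0)

def f_x_given_y(x, y):
    """Conditional density f(x, y) / f_Y(y), computed numerically."""
    return f(x, y) / f_y(y)

y0 = 0.4  # hypothetical value of y in (0, 1)
print("f_Y(y0): numeric", f_y(y0), "closed form", y0 * (4 - 3 * y0))
print("f_X|Y(0.5|y0): numeric", f_x_given_y(0.5, y0),
      "closed form", 6 * 0.5 * (2 - 0.5 - y0) / (4 - 3 * y0))
print("integral of f_X|Y over x:", integrate(lambda x: f_x_given_y(x, y0), 0.0, 1.0))
```

The last line should be close to 1, since a conditional density must integrate to 1 in x for each fixed y.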

III. Conditional expectation

A. Definition: The conditional expectation of a discrete r.v. X given Y = y is

$$E[X \mid Y = y] = \sum_x x\, P(X = x \mid Y = y) = \sum_x x\, p_{X|Y}(x|y).$$

B. Example: Using the same joint pmf given above, compute E[X|Y = 1].

$$E[X \mid Y = 1] = 1 \cdot p_{X|Y}(1|1) + 2 \cdot p_{X|Y}(2|1) = 1 \cdot 0.75 + 2 \cdot 0.25 = 1.25.$$
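The same computation, written as a small function so that it can be evaluated for any y with positive probability. The name `cond_expectation_x` is a hypothetical helper for this sketch.

```python
# Joint pmf from the example, keyed by (x, y).
joint = {(1, 1): 0.3, (2, 1): 0.1, (1, 2): 0.1, (2, 2): 0.5}

def cond_expectation_x(pmf, y):
    """E[X | Y = y] = sum_x x * p(x, y) / p_Y(y), assuming p_Y(y) > 0."""
    p_y = sum(p for (_, yy), p in pmf.items() if yy == y)
    return sum(x * p for (x, yy), p in pmf.items() if yy == y) / p_y

print("E[X | Y = 1] =", cond_expectation_x(joint, 1))
print("E[X | Y = 2] =", cond_expectation_x(joint, 2))
```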

C. Definition: The conditional expectation of a continuous r.v. X given Y = y is

$$E[X \mid Y = y] = \int x\, f_{X|Y}(x|y)\, dx.$$

D. Example: Using the same joint pdf as in the previous section, find E[X|Y = y].

$$E[X \mid Y = y] = \int_0^1 x\, f_{X|Y}(x|y)\, dx = \int_0^1 \frac{6x^2(2 - x - y)}{4 - 3y}\, dx = \frac{5 - 4y}{8 - 6y}.$$
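The closed form can be compared against a numerical integral. This is a sketch using a simple midpoint rule; the helper names and the sample values of y are assumptions made for illustration.

```python
def integrate(g, a, b, steps=2000):
    """Midpoint-rule approximation of the integral of g over [a, b]."""
    h = (b - a) / steps
    return h * sum(g(a + (i + 0.5) * h) for i in range(steps))

def f_x_given_y(x, y):
    """Conditional density from the example, valid for 0 < x < 1 and 0 < y < 1."""
    return 6 * x * (2 - x - y) / (4 - 3 * y)

def cond_mean(y):
    """Numerical E[X | Y = y]: the integral of x * f_{X|Y}(x|y) over 0 < x < 1."""
    return integrate(lambda x: x * f_x_given_y(x, y), 0.0, 1.0)

for y in (0.1, 0.5, 0.9):  # hypothetical sample points in (0, 1)
    closed_form = (5 - 4 * y) / (8 - 6 * y)
    print(f"y={y}: numeric={cond_mean(y):.6f}, closed form={closed_form:.6f}")
```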


E. Note: We can think of E[X|Y], where a value for the r.v. Y is not specified, as a random variable that is a function of the r.v. Y.

F. Proposition:

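$$E[X] = E[E[X \mid Y]]$$

Proof:

$$
\begin{aligned}
E[E[X \mid Y]] &= \int E[X \mid Y = y]\, f_Y(y)\, dy \\
&= \int \int x\, f_{X|Y}(x|y)\, dx\, f_Y(y)\, dy \\
&= \int \int x\, \frac{f(x, y)}{f_Y(y)}\, f_Y(y)\, dx\, dy \\
&= \int \int x\, f(x, y)\, dx\, dy \\
&= \int x \int f(x, y)\, dy\, dx \\
&= \int x\, f_X(x)\, dx \\
&= E[X].
\end{aligned}
$$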
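The proposition can be illustrated on the discrete joint pmf from Section I, where the integrals become sums. The sketch below computes E[X] directly and by averaging the conditional means; the variable names are illustrative.

```python
# Joint pmf from Section I, keyed by (x, y).
joint = {(1, 1): 0.3, (2, 1): 0.1, (1, 2): 0.1, (2, 2): 0.5}

# Marginal pmf of Y.
p_y = {}
for (_, y), p in joint.items():
    p_y[y] = p_y.get(y, 0.0) + p

def cond_mean_x(y):
    """E[X | Y = y] for the joint pmf above."""
    return sum(x * p for (x, yy), p in joint.items() if yy == y) / p_y[y]

# Direct expectation of X versus the average of the conditional means.
e_x_direct = sum(x * p for (x, _), p in joint.items())
e_x_tower = sum(cond_mean_x(y) * p_y[y] for y in p_y)
print("E[X] directly:      ", e_x_direct)
print("E[E[X|Y]] by tower: ", e_x_tower)
```

Both numbers should agree up to floating-point rounding.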

G. Example: Suppose that the expected number of accidents per week is 4 and suppose

that the number of workers injured per accident has mean 2. Also assume that the

number of people injured is independently determined for each accident. What is the

expected number of injuries per week?

HOMEWORK

H. Definition: A compound random variable is a sum of a random number N of independent and identically distributed random variables, where N is independent of the summands.

I. Property: If $X = \sum_{i=1}^{N} X_i$ is a compound random variable and $E[X_i] = \mu$, then

$$E[X] = \mu E[N].$$
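A small Monte Carlo experiment can make the property concrete. The distributions below are assumptions chosen purely for illustration (N uniform on {0, ..., 4}, each X_i a fair die roll, N independent of the X_i); they are not taken from the notes.

```python
import random

def sample_compound(rng):
    """Draw one value of X = X_1 + ... + X_N under the assumed distributions."""
    n = rng.randint(0, 4)                            # N uniform on {0, ..., 4}, so E[N] = 2
    return sum(rng.randint(1, 6) for _ in range(n))  # X_i fair die rolls, so mu = 3.5

rng = random.Random(0)  # fixed seed so the run is repeatable
trials = 100_000
estimate = sum(sample_compound(rng) for _ in range(trials)) / trials

mu, mean_n = 3.5, 2.0
print(f"simulated E[X] = {estimate:.3f}, mu * E[N] = {mu * mean_n:.3f}")
```

The simulated mean should land close to the product of the two means, with the gap shrinking as the number of trials grows.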

J. Example: Suppose you are in a room in a cave. There are three exits. The first takes you on a path that takes 3 hours but eventually deposits you back in the same room. The second takes you on a path that takes 5 hours but also deposits you back in the room. The third takes you to the exit, which is 2 hours away. On average, how long do you expect to stay in the cave if you randomly select an exit each time you enter the room in the cave?


HOMEWORK

Hint: It is helpful to condition on the random variable Y indicating which exit you choose on your first attempt to exit the room.
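One way to check a hand-derived answer is to simulate the cave. The sketch below assumes that "randomly select" means each of the three exits is chosen with probability 1/3, independently on every attempt; that reading and the function name `time_in_cave` are assumptions.

```python
import random

def time_in_cave(rng):
    """Simulate one escape; each of the three exits is assumed equally likely."""
    hours = 0
    while True:
        exit_choice = rng.choice((1, 2, 3))
        if exit_choice == 1:
            hours += 3          # back in the room after 3 hours
        elif exit_choice == 2:
            hours += 5          # back in the room after 5 hours
        else:
            return hours + 2    # out of the cave after 2 more hours

rng = random.Random(1)  # fixed seed so the run is repeatable
trials = 100_000
average = sum(time_in_cave(rng) for _ in range(trials)) / trials
print(f"estimated mean time in cave: {average:.2f} hours")
```

The estimate can then be compared with the value obtained by conditioning on the first choice.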

K. Proposition: There is an analogous result for variances.

$$\mathrm{Var}(X) = E[\mathrm{Var}(X \mid Y)] + \mathrm{Var}(E[X \mid Y]).$$

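L. Result: We can use the above result to find the variance of a compound r.v. Suppose that $E[X_i] = \mu$ and $\mathrm{Var}(X_i) = \sigma^2$. Then

$$\mathrm{Var}(X \mid N) = \mathrm{Var}\left(\sum_{i=1}^{N} X_i \,\Big|\, N\right) = \sum_{i=1}^{N} \mathrm{Var}(X_i) = N\sigma^2,$$

and, since $E[X \mid N] = N\mu$,

$$\mathrm{Var}(X) = E[N\sigma^2] + \mathrm{Var}(N\mu) = \sigma^2 E[N] + \mu^2 \mathrm{Var}(N),$$

so you obtain the variance of the compound random variable X by knowing the mean and variance of $X_i$ and N.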
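The variance formula can be verified exactly on a small example by building the pmf of X through repeated convolution. The distributions here are hypothetical (N uniform on {0, ..., 4}, each X_i a fair die roll, N independent of the X_i), and exact rational arithmetic is used so that the two sides can be compared without rounding.

```python
from fractions import Fraction

# Hypothetical distributions, chosen only for illustration.
pmf_n = {n: Fraction(1, 5) for n in range(5)}      # N uniform on {0, ..., 4}
pmf_xi = {v: Fraction(1, 6) for v in range(1, 7)}  # X_i a fair die roll

def mean(pmf):
    return sum(v * p for v, p in pmf.items())

def variance(pmf):
    m = mean(pmf)
    return sum((v - m) ** 2 * p for v, p in pmf.items())

def convolve(a, b):
    """pmf of the sum of two independent variables with pmfs a and b."""
    out = {}
    for va, pa in a.items():
        for vb, pb in b.items():
            out[va + vb] = out.get(va + vb, Fraction(0)) + pa * pb
    return out

# Mix the n-fold convolutions of pmf_xi with weights P(N = n) to get the pmf of X.
pmf_x = {}
n_fold = {0: Fraction(1)}  # a sum of zero terms equals 0 with probability 1
for n in range(max(pmf_n) + 1):
    for v, p in n_fold.items():
        pmf_x[v] = pmf_x.get(v, Fraction(0)) + pmf_n.get(n, Fraction(0)) * p
    n_fold = convolve(n_fold, pmf_xi)

mu, sigma2 = mean(pmf_xi), variance(pmf_xi)
formula = sigma2 * mean(pmf_n) + mu**2 * variance(pmf_n)
print("Var(X) from the pmf of X:        ", variance(pmf_x))
print("sigma^2 E[N] + mu^2 Var(N):      ", formula)
```

Because the arithmetic is exact, the two printed fractions should be identical rather than merely close.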

IV. Comprehensive Example:

You are presented n prizes in random sequence. When presented with a prize, you are told its rank relative to all the prizes you have seen (or you can observe this, for example if the prizes are money). If you accept the prize, the game is over. If you reject the prize, you are presented with the next prize. How can you best improve your chance of getting the best prize of all n?

Let's attempt a strategy where you reject the first k prizes. Thereafter, you accept the first prize that is better than the first k. We will find the k that maximizes your chance of getting the best prize and compute your probability of getting the best prize for that k.

Let $P_k(\text{best})$ be the probability that the best prize is selected using the above strategy.

HOMEWORK

Hint: Think LTP (law of total probability). It is easiest to compute $P_k(\text{best})$ by conditioning on the position i of the best prize X. Also note that you choose the best prize only if the best of the first i − 1 prizes is among the first k. Finally,

$$\sum_{i=k}^{n} \frac{1}{i} \approx \int_k^n \frac{1}{x}\, dx.$$

The answer without elaboration: Using the proposed strategy you can achieve nearly a 40% chance (about 1/e ≈ 0.37 for large n) of getting the best prize out of all n prizes.
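The strategy is easy to simulate, which gives a way to check a derived formula for the success probability. In the sketch below the prizes are represented by distinct integers (larger is better); the value n = 20, the trial count, and the function names are hypothetical choices.

```python
import random

def wins(n, k, rng):
    """Play once: skip the first k prizes, then take the first one that beats them all."""
    prizes = list(range(n))  # larger number = better prize; n - 1 is the best
    rng.shuffle(prizes)
    threshold = max(prizes[:k], default=-1)  # best of the rejected prizes
    for value in prizes[k:]:
        if value > threshold:
            return value == n - 1  # accepted this prize; did we get the best?
    return False  # the best prize was among the first k, so nothing was accepted

def estimate_p_best(n, k, trials, rng):
    """Fraction of simulated games in which the strategy ends with the best prize."""
    return sum(wins(n, k, rng) for _ in range(trials)) / trials

rng = random.Random(2)  # fixed seed so the run is repeatable
n = 20                  # hypothetical number of prizes
for k in range(0, n, 2):
    print(k, round(estimate_p_best(n, k, 10_000, rng), 3))
```

Scanning the printed table for the largest estimate suggests which k to compare against the analytic answer.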

4

You might also like

- Understanding Bivariate Distributions and Correlated Random VariablesDocument9 pagesUnderstanding Bivariate Distributions and Correlated Random Variablesjacksim1991No ratings yet

- Review MidtermII Summer09Document51 pagesReview MidtermII Summer09Deepak RanaNo ratings yet

- Basic Probability Exam PacketDocument44 pagesBasic Probability Exam PacketPerficmsfitNo ratings yet

- Statistic CE Assignment & AnsDocument6 pagesStatistic CE Assignment & AnsTeaMeeNo ratings yet

- TD1 SolutionDocument5 pagesTD1 SolutionaaaaaaaaaaaNo ratings yet

- E 04 Ot 6 SDocument7 pagesE 04 Ot 6 SÂn TrầnNo ratings yet

- 5 BMDocument20 pages5 BMNaailah نائلة MaudarunNo ratings yet

- Financial Engineering & Risk Management: Review of Basic ProbabilityDocument46 pagesFinancial Engineering & Risk Management: Review of Basic Probabilityshanky1124No ratings yet

- Chapter 5Document19 pagesChapter 5chilledkarthikNo ratings yet

- Unit 1 - 03Document10 pagesUnit 1 - 03Raja RamachandranNo ratings yet

- Chapter 2 2Document8 pagesChapter 2 2Uni StuffNo ratings yet

- Conditional Probability ExplainedDocument8 pagesConditional Probability ExplainedPedro KoobNo ratings yet

- Lecture Notes Week 1Document10 pagesLecture Notes Week 1tarik BenseddikNo ratings yet

- Master's Written Examination and SolutionDocument14 pagesMaster's Written Examination and SolutionRobinson Ortega MezaNo ratings yet

- Class6 Prep ADocument7 pagesClass6 Prep AMariaTintashNo ratings yet

- Instructor: DR - Saleem AL Ashhab Al Ba'At University Mathmatical Class Second Year Master DgreeDocument13 pagesInstructor: DR - Saleem AL Ashhab Al Ba'At University Mathmatical Class Second Year Master DgreeNazmi O. Abu JoudahNo ratings yet

- HW1 SolDocument2 pagesHW1 SolAlexander LópezNo ratings yet

- MA2216/ST2131 Probability Notes 5 Distribution of A Function of A Random Variable and Miscellaneous RemarksDocument13 pagesMA2216/ST2131 Probability Notes 5 Distribution of A Function of A Random Variable and Miscellaneous RemarksSarahNo ratings yet

- Conditional ProbabilityDocument8 pagesConditional ProbabilitySindhutiwariNo ratings yet

- Stochastic ProcessesDocument46 pagesStochastic ProcessesforasepNo ratings yet

- Indicator functions and expected values explainedDocument10 pagesIndicator functions and expected values explainedPatricio Antonio VegaNo ratings yet

- Regression Analysis: Properties of Least Squares EstimatorsDocument7 pagesRegression Analysis: Properties of Least Squares EstimatorsFreddie YuanNo ratings yet

- Metric Spaces1Document33 pagesMetric Spaces1zongdaNo ratings yet

- Math 3215 Practice Exam 3 SolutionsDocument4 pagesMath 3215 Practice Exam 3 SolutionsPei JingNo ratings yet

- BartleDocument11 pagesBartleLucius Adonai67% (3)

- International Competition in Mathematics For Universtiy Students in Plovdiv, Bulgaria 1995Document11 pagesInternational Competition in Mathematics For Universtiy Students in Plovdiv, Bulgaria 1995Phúc Hảo ĐỗNo ratings yet

- MA 2213 Numerical Analysis I: Additional TopicsDocument22 pagesMA 2213 Numerical Analysis I: Additional Topicsyewjun91No ratings yet

- Lecture 6. Order Statistics: 6.1 The Multinomial FormulaDocument19 pagesLecture 6. Order Statistics: 6.1 The Multinomial FormulaLya Ayu PramestiNo ratings yet

- נוסחאות ואי שיוויוניםDocument12 pagesנוסחאות ואי שיוויוניםShahar MizrahiNo ratings yet

- KorovkinDocument10 pagesKorovkinMurali KNo ratings yet

- LTE: Lifting The Exponent Lemma ExplainedDocument14 pagesLTE: Lifting The Exponent Lemma ExplainedSohail FarhangiNo ratings yet

- Ma635-Hw04 SolDocument3 pagesMa635-Hw04 SolGerardo SixtosNo ratings yet

- Solutions To Sample Midterm QuestionsDocument14 pagesSolutions To Sample Midterm QuestionsJung Yoon SongNo ratings yet

- Engineering Mathematics II - RemovedDocument90 pagesEngineering Mathematics II - RemovedMd TareqNo ratings yet

- Conditional Expectations E (X - Y) As Random Variables: Sums of Random Number of Random Variables (Random Sums)Document2 pagesConditional Expectations E (X - Y) As Random Variables: Sums of Random Number of Random Variables (Random Sums)sayed Tamir janNo ratings yet

- Set4 Func of Random Variable ExpectationDocument43 pagesSet4 Func of Random Variable ExpectationRavi SinghNo ratings yet

- Informationtheory-Lecture Slides-A1 Solutions 5037558613Document0 pagesInformationtheory-Lecture Slides-A1 Solutions 5037558613gowthamkurriNo ratings yet

- Real Analysis Homework SolutionsDocument41 pagesReal Analysis Homework Solutionsamrdif_20066729No ratings yet

- Actsc 432 Review Part 1Document7 pagesActsc 432 Review Part 1osiccorNo ratings yet

- Mathematical Expectation ExplainedDocument49 pagesMathematical Expectation ExplainednofiarozaNo ratings yet

- HW4 SolutionDocument6 pagesHW4 SolutionDi WuNo ratings yet

- Tutsheet 5Document2 pagesTutsheet 5qwertyslajdhjsNo ratings yet

- Book by Dr. LutfiDocument107 pagesBook by Dr. Lutfitkchv684xpNo ratings yet

- Measure Theory - SEODocument62 pagesMeasure Theory - SEOMayank MalhotraNo ratings yet

- Metric Spaces: 4.1 CompletenessDocument7 pagesMetric Spaces: 4.1 CompletenessJuan Carlos Sanchez FloresNo ratings yet

- Manipulation of Ideals: Theorem 1.1 (Radical Ideal Membership) Let I FDocument6 pagesManipulation of Ideals: Theorem 1.1 (Radical Ideal Membership) Let I FRania SadekNo ratings yet

- MTH102 EXACT DIFFERENTIAL EQUATIONDocument13 pagesMTH102 EXACT DIFFERENTIAL EQUATIONKool KaishNo ratings yet

- Elementary Properties of Cyclotomic Polynomials by Yimin GeDocument8 pagesElementary Properties of Cyclotomic Polynomials by Yimin GeGary BlakeNo ratings yet

- Measurable Functions and Random VariablesDocument10 pagesMeasurable Functions and Random VariablesMarjo KaciNo ratings yet

- Exercise 5: Probability and Random Processes For Signals and SystemsDocument4 pagesExercise 5: Probability and Random Processes For Signals and SystemsGpNo ratings yet

- 2-ENSIA 2023-2024 Worksheet 1Document4 pages2-ENSIA 2023-2024 Worksheet 1Bekhedda AsmaNo ratings yet

- Eli Maor, e The Story of A Number, Among ReferencesDocument10 pagesEli Maor, e The Story of A Number, Among ReferencesbdfbdfbfgbfNo ratings yet

- MSexam Stat 2019S SolutionsDocument11 pagesMSexam Stat 2019S SolutionsRobinson Ortega MezaNo ratings yet

- LteDocument12 pagesLteMarcos PertierraNo ratings yet

- 151 Midterm 2 SolDocument2 pages151 Midterm 2 SolChristian RamosNo ratings yet

- Stats3 Topic NotesDocument4 pagesStats3 Topic NotesSneha KhandelwalNo ratings yet

- Stanford University CS 229, Autumn 2014 Midterm ExaminationDocument23 pagesStanford University CS 229, Autumn 2014 Midterm ExaminationErico ArchetiNo ratings yet

- Introductory Differential Equations: with Boundary Value Problems, Student Solutions Manual (e-only)From EverandIntroductory Differential Equations: with Boundary Value Problems, Student Solutions Manual (e-only)No ratings yet

- Mathematics 1St First Order Linear Differential Equations 2Nd Second Order Linear Differential Equations Laplace Fourier Bessel MathematicsFrom EverandMathematics 1St First Order Linear Differential Equations 2Nd Second Order Linear Differential Equations Laplace Fourier Bessel MathematicsNo ratings yet

- Differentiation (Calculus) Mathematics Question BankFrom EverandDifferentiation (Calculus) Mathematics Question BankRating: 4 out of 5 stars4/5 (1)

- Csma CNDocument6 pagesCsma CNpramod_bogarNo ratings yet

- Path Loss ModelsDocument36 pagesPath Loss ModelsHugo ValvassoriNo ratings yet

- CD MaDocument37 pagesCD MaNikita GuptaNo ratings yet

- Error Correction TutorialDocument16 pagesError Correction TutorialAlexandros SourNo ratings yet

- Hydraulic Power Steering System Design PDFDocument16 pagesHydraulic Power Steering System Design PDFAdrianBirsan100% (1)

- Great Gatsby Study NotesDocument69 pagesGreat Gatsby Study NotesLara Westwood100% (2)

- Dr. Blyden: Chronic Obstructive Pulmonary Disease (Copd)Document63 pagesDr. Blyden: Chronic Obstructive Pulmonary Disease (Copd)Blyden NoahNo ratings yet

- Carte Automatic TransmissionsDocument20 pagesCarte Automatic TransmissionsGigelNo ratings yet

- Pd3c CV Swa (22kv)Document2 pagesPd3c CV Swa (22kv)เต่า วีไอNo ratings yet

- 3 Valvula Modular Serie 01Document42 pages3 Valvula Modular Serie 01Leandro AguiarNo ratings yet

- Inakyd 3623-X-70Document2 pagesInakyd 3623-X-70roybombomNo ratings yet

- Niryana Shoola DasaDocument7 pagesNiryana Shoola DasaSuryasukraNo ratings yet

- Porter's Diamond Model Explains Nations' Success in IT CompetitionDocument30 pagesPorter's Diamond Model Explains Nations' Success in IT CompetitionKuthubudeen T MNo ratings yet

- The Karnataka Maternity Benefit (Amendment) Rules 2019Document30 pagesThe Karnataka Maternity Benefit (Amendment) Rules 2019Manisha SNo ratings yet

- Outline 1. Background of Revision: JEITA CP-1104BDocument4 pagesOutline 1. Background of Revision: JEITA CP-1104BkksdnjdaNo ratings yet

- Monsterology Activity KitDocument2 pagesMonsterology Activity KitCandlewick PressNo ratings yet

- Nest Installation GuideDocument8 pagesNest Installation GuideOzzyNo ratings yet

- Ficha Tecnica Cat. Bard 36kbtu Act.Document15 pagesFicha Tecnica Cat. Bard 36kbtu Act.Jehison M Patiño TenorioNo ratings yet

- 3.1-T.C.Dies PDFDocument6 pages3.1-T.C.Dies PDFYahyaMoummouNo ratings yet

- MACRO XII Subhash Dey All Chapters PPTs (Teaching Made Easier)Document2,231 pagesMACRO XII Subhash Dey All Chapters PPTs (Teaching Made Easier)Vatsal HarkarNo ratings yet

- 3 Edition February 2013: Ec2 Guide For Reinforced Concrete Design For Test and Final ExaminationDocument41 pages3 Edition February 2013: Ec2 Guide For Reinforced Concrete Design For Test and Final ExaminationDark StingyNo ratings yet

- Applied Acoustics: André M.N. Spillere, Augusto A. Medeiros, Julio A. CordioliDocument13 pagesApplied Acoustics: André M.N. Spillere, Augusto A. Medeiros, Julio A. CordioliAbdelali MoumenNo ratings yet

- Matrix Algebra by A.S.HadiDocument4 pagesMatrix Algebra by A.S.HadiHevantBhojaram0% (1)

- Hyundai Elevator Manual Helmon 2000 InstructionDocument27 pagesHyundai Elevator Manual Helmon 2000 InstructionReynold Suarez100% (1)

- Instrument To Be CalibratedDocument3 pagesInstrument To Be Calibratedsumit chauhanNo ratings yet

- Operation & Maintenance Manual For Bolted Steel Tanks: Complete InstallationDocument6 pagesOperation & Maintenance Manual For Bolted Steel Tanks: Complete InstallationIrvansyah RazadinNo ratings yet

- ASIAN LIVESTOCK PERSPECTIVESDocument18 pagesASIAN LIVESTOCK PERSPECTIVESMuadz AbdurrahmanNo ratings yet

- Kaustubh Laturkar Fuel Cell ReportDocument3 pagesKaustubh Laturkar Fuel Cell Reportkos19188No ratings yet

- S10 Electric Power PackDocument12 pagesS10 Electric Power PackrolandNo ratings yet

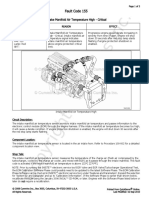

- Fault Code 155: Intake Manifold Air Temperature High - CriticalDocument3 pagesFault Code 155: Intake Manifold Air Temperature High - Criticalhamilton miranda100% (1)

- General Psychology - Unit 2Document23 pagesGeneral Psychology - Unit 2shivapriya ananthanarayananNo ratings yet

- Introducing Inspira's: Managed Noc & Itoc ServicesDocument2 pagesIntroducing Inspira's: Managed Noc & Itoc ServicesmahimaNo ratings yet

- Unit 3 Assignment - CompletedDocument7 pagesUnit 3 Assignment - CompletedSu GarrawayNo ratings yet

- Mycotoxin Test ProcedureDocument3 pagesMycotoxin Test ProcedureKishenthi KerisnanNo ratings yet