
Infor Basics

Uploaded by Sree Vas


A data warehouse is a repository of integrated information, available for queries and analysis.

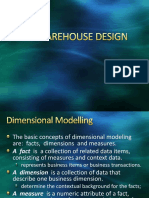

Data and information are extracted from heterogeneous sources as they are generated. This makes it much easier and more efficient to run queries over data that originally came from different sources.

Another definition of a data warehouse is: "A data warehouse is a logical collection of information gathered from many different operational databases, used to create business intelligence that supports business analysis activities and decision-making tasks. It is primarily a record of an enterprise's past transactional and operational information, stored in a database designed to favour efficient data analysis and reporting (especially OLAP)."

Generally, data warehousing is not meant for current "live" data, although 'virtual' or 'point-to-point' data warehouses can access operational data. A 'real' data warehouse is generally preferred to a virtual DW because stored …

What is Star Schema?

A star schema is a relational database schema for representing multidimensional data. It is the simplest form of data warehouse schema and contains one or more dimension tables and fact tables. It is called a star schema because the entity-relationship diagram between the dimension and fact tables resembles a star, with one fact table connected to multiple dimensions. The center of the star schema is a large fact table that points towards the dimension tables. The advantages of a star schema are easy slicing of data, better query performance, and data that is easy to understand.

Steps in designing Star Schema

1. Identify a business process for analysis (like sales).
2. Identify measures or facts (sales dollar).
3. Identify dimensions for facts (product dimension, location dimension, time dimension, organization dimension).
4. List the columns that describe each dimension (region name, branch name).
5. Determine the lowest level of summary in a fact table (sales dollar).

Important aspects of Star Schema & Snow Flake Schema

In a star schema every dimension will have a primary key.
In a star schema, a dimension table will not have any parent table, whereas in a snowflake schema a dimension table will have one or more parent tables.

In a star schema, the hierarchies for the dimensions are stored in the dimension table itself, whereas in a snowflake schema they are broken out into separate tables. These hierarchies help to drill down the data from the topmost hierarchy level to the lowermost.

Hierarchy

A logical structure that uses ordered levels as a means of organizing data. A hierarchy can be used to define data aggregation. For example, in a time dimension, a hierarchy might be used to aggregate data from the Month level to the Quarter level, and from the Quarter level to the Year level. A hierarchy can also be used to define a navigational drill path, regardless of whether the levels in the hierarchy represent aggregated totals.

Level

A position in a hierarchy. For example, a time dimension might have a hierarchy that represents data at the Month, Quarter, and Year levels.

Fact Table

A table in a star schema that contains facts and is connected to dimensions. A fact table typically has two types of columns: those that contain facts and those that are foreign keys to dimension tables. The primary key of a fact table is usually a composite key made up of all of its foreign keys.

A fact table might contain either detail-level facts or facts that have been aggregated (fact tables that contain aggregated facts are often called summary tables instead). A fact table usually contains facts with the same level of aggregation.

Example of Star Schema: Figure 1.6

In the example in Figure 1.6, the sales fact table is connected to the location, product, time, and organization dimensions. Data can be sliced across all dimensions, and it can also be aggregated across multiple dimensions. "Sales Dollar" in the sales fact table can be calculated across all dimensions, either independently or in combination, as shown below:

Sales Dollar value for a particular product
Sales Dollar value for a product in a location
Sales Dollar value for a product in a year within a location
Sales Dollar value for a product in a year within a location sold or serviced by an employee

Snowflake Schema

A snowflake schema is a star schema structure normalized through the use of outrigger tables, i.e. the dimension table hierarchies are broken into simpler tables. The star schema example has 4 dimensions (location, product, time, organization) and a fact table (sales).

The snowflake schema example diagram below has 4 dimension tables, 4 lookup tables, and 1 fact table. This is because the hierarchies (category, branch, state, and month) are broken out of the dimension tables (PRODUCT, ORGANIZATION, LOCATION, and TIME, respectively) and shown separately. In OLAP, the snowflake schema approach increases the number of joins, which makes data retrieval slower. Some organizations normalize the dimension tables to save space. However, dimension tables take up relatively little space, so the snowflake schema approach can usually be avoided.

Example of Snowflake Schema: Figure 1.7

Fact Table

The centralized table in a star schema is called the FACT table. A fact table typically has two types of columns: those that contain facts and those that are foreign keys to dimension tables. The primary key of a fact table is usually a composite key made up of all of its foreign keys.
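The composite fact-table key and the "Sales Dollar" slices described above can be sketched with Python's built-in `sqlite3` module. This is a minimal illustration, not the schema behind Figure 1.6. The table and column names (`sales_fact`, `location_dim`, and so on), the trimmed column lists, and the helper `sales_by` are all hypothetical. The sample keys are borrowed from the example rows elsewhere in this document.

```python
import sqlite3

# Hypothetical, trimmed-down version of the Figure 1.6 star schema.
DDL = """
CREATE TABLE location_dim (location_id INTEGER PRIMARY KEY, state_name TEXT, city_name TEXT);
CREATE TABLE product_dim  (product_id  INTEGER PRIMARY KEY, category TEXT, product_name TEXT);
CREATE TABLE time_dim     (time_id     INTEGER PRIMARY KEY, year_no INTEGER, quarter_no TEXT);
CREATE TABLE org_dim      (org_id      INTEGER PRIMARY KEY, branch_name TEXT, employee_name TEXT);
CREATE TABLE sales_fact (
    time_id      INTEGER REFERENCES time_dim(time_id),
    location_id  INTEGER REFERENCES location_dim(location_id),
    product_id   INTEGER REFERENCES product_dim(product_id),
    org_id       INTEGER REFERENCES org_dim(org_id),
    sales_dollar REAL NOT NULL,                              -- the additive measure
    PRIMARY KEY (time_id, location_id, product_id, org_id)   -- composite of all FKs
);
"""

def build_demo() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when asked
    conn.executescript(DDL)
    conn.executemany("INSERT INTO location_dim VALUES (?,?,?)",
                     [(1, "New York", "Manhattan"), (2, "Florida", "Panama City")])
    conn.executemany("INSERT INTO product_dim VALUES (?,?,?)",
                     [(100001, "Apparel", "Van Heusen"), (100005, "Shoe", "Nike")])
    conn.executemany("INSERT INTO time_dim VALUES (?,?,?)",
                     [(1, 2004, "Q1"), (3, 2005, "Q1")])
    conn.executemany("INSERT INTO org_dim VALUES (?,?,?)",
                     [(1, "Florida-Tampa", "Paul Young"), (2, "Illinois-Chicago", "Chris Davis")])
    conn.executemany("INSERT INTO sales_fact VALUES (?,?,?,?,?)", [
        (1, 1, 100001, 1, 1000), (3, 1, 100001, 1, 750),
        (1, 1, 100001, 2, 1000), (3, 1, 100001, 2, 750),
        (3, 2, 100005, 1, 400),
    ])
    return conn

def sales_by(conn: sqlite3.Connection, *dims: str) -> list:
    """Sum Sales Dollar grouped by the given dimension columns (one 'slice').

    dims are trusted column names such as "p.product_name"; they are
    interpolated into the SQL, so never pass user input here.
    """
    cols = ", ".join(dims)
    sql = f"""
        SELECT {cols}, SUM(f.sales_dollar)
        FROM sales_fact f
        JOIN location_dim l ON l.location_id = f.location_id
        JOIN product_dim  p ON p.product_id  = f.product_id
        JOIN time_dim     t ON t.time_id     = f.time_id
        JOIN org_dim      o ON o.org_id      = f.org_id
        GROUP BY {cols} ORDER BY {cols}"""
    return conn.execute(sql).fetchall()
```

For example, `sales_by(conn, "p.product_name")` gives Sales Dollar per product. Adding `"l.city_name", "t.year_no"` narrows that to a product in a year within a location, and adding `"o.employee_name"` narrows it further to a single employee. Every slice is one join per dimension, which is the property the star layout is designed for.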
In the example in Figure 1.6, "Sales Dollar" is a fact (measure), and it can be added across several dimensions. Fact tables store different types of measures: additive, non-additive, and semi-additive.

Measure Types

Additive - Measures that can be added across all dimensions.
Non Additive - Measures that cannot be added across any dimension.
Semi Additive - Measures that can be added across some dimensions but not across others.

A fact table might contain either detail-level facts or facts that have been aggregated (fact tables that contain aggregated facts are often called summary tables instead).

In the real world, it is possible to have a fact table that contains no measures or facts. Such tables are called factless fact tables.

Steps in designing Fact Table

1. Identify a business process for analysis (like sales).
2. Identify measures or facts (sales dollar).
3. Identify dimensions for facts (product dimension, location dimension, time dimension, organization dimension).
4. List the columns that describe each dimension (region name, branch name).
5. Determine the lowest level of summary in a fact table (sales dollar).

Example of a Fact Table with an Additive Measure in Star Schema: Figure 1.6

In the example in Figure 1.6, the sales fact table is connected to the location, product, time, and organization dimensions. The measure "Sales Dollar" in the sales fact table can be added across all dimensions, either independently or in combination, as shown below:

Sales Dollar value for a particular product
Sales Dollar value for a product in a location
Sales Dollar value for a product in a year within a location
Sales Dollar value for a product in a year within a location sold or serviced by an employee

Dimension Table

A dimension table describes the business entities of an enterprise, represented as hierarchical, categorical information such as time, departments, locations, and products. Dimension tables are sometimes called lookup or reference tables.

Location Dimension

In relational data modeling, for normalization purposes, the country, state, county, and city lookups are not merged into a single table. In dimensional data modeling (star schema), these tables are merged into a single table called LOCATION DIMENSION, for performance and data-slicing requirements.

The location dimension helps to compare the sales in one region with another. We may see good sales profit in one region and a loss in another. A loss may be caused by a new competitor in that area, a failed marketing strategy, and so on.
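The merge of normalized lookups into one LOCATION DIMENSION can be sketched as a single join in SQLite. In this minimal illustration, the function `build_location_dimension` and all table and column names are hypothetical. The parent-code columns (`state_code`, `county_code`, and so on) are also an assumption. The Figure 1.8 lookups below only imply the hierarchy through their code prefixes, for example NY, then NYSH, then NYSHMA.

```python
import sqlite3

def build_location_dimension() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        -- Normalized lookups (relational model), two cities from Figure 1.8.
        -- Parent-code columns are hypothetical; the figure implies them via code prefixes.
        CREATE TABLE country_lkp (country_code TEXT PRIMARY KEY, country_name TEXT);
        CREATE TABLE state_lkp   (state_code TEXT PRIMARY KEY, state_name TEXT, country_code TEXT);
        CREATE TABLE county_lkp  (county_code TEXT PRIMARY KEY, county_name TEXT, state_code TEXT);
        CREATE TABLE city_lkp    (city_code TEXT PRIMARY KEY, city_name TEXT, county_code TEXT);
        INSERT INTO country_lkp VALUES ('USA', 'United States Of America');
        INSERT INTO state_lkp   VALUES ('NY', 'New York', 'USA'), ('FL', 'Florida', 'USA');
        INSERT INTO county_lkp  VALUES ('NYSH', 'Shelby', 'NY'), ('FLJE', 'Jefferson', 'FL');
        INSERT INTO city_lkp    VALUES ('NYSHMA', 'Manhattan', 'NYSH'),
                                       ('FLJEPC', 'Panama City', 'FLJE');

        -- Dimensional model: one flat row per city with every hierarchy level inline,
        -- keyed by a surrogate id that a fact table would reference.
        CREATE TABLE location_dim (
            location_dim_id INTEGER PRIMARY KEY,
            country_name TEXT, state_name TEXT, county_name TEXT, city_name TEXT);
        INSERT INTO location_dim (country_name, state_name, county_name, city_name)
        SELECT co.country_name, st.state_name, cn.county_name, ci.city_name
        FROM city_lkp ci
        JOIN county_lkp  cn ON cn.county_code  = ci.county_code
        JOIN state_lkp   st ON st.state_code   = cn.state_code
        JOIN country_lkp co ON co.country_code = st.country_code
        ORDER BY ci.rowid;
    """)
    return conn
```

Once the table is flattened, slicing sales by state or city needs only one join from the fact table to `location_dim`, instead of a chain of four lookup joins. That trade of storage for fewer joins is the one the text describes.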
Example of Location Dimension: Figure 1.8

Country Lookup

| Country Code | Country Name | DateTimeStamp |
|---|---|---|
| USA | United States Of America | 1/1/2005 11:23:31 AM |

State Lookup

| State Code | State Name | DateTimeStamp |
|---|---|---|
| NY | New York | 1/1/2005 11:23:31 AM |
| FL | Florida | 1/1/2005 11:23:31 AM |
| CA | California | 1/1/2005 11:23:31 AM |
| NJ | New Jersey | 1/1/2005 11:23:31 AM |

County Lookup

| County Code | County Name | DateTimeStamp |
|---|---|---|
| NYSH | Shelby | 1/1/2005 11:23:31 AM |
| FLJE | Jefferson | 1/1/2005 11:23:31 AM |
| CAMO | Montgomery | 1/1/2005 11:23:31 AM |
| NJHU | Hudson | 1/1/2005 11:23:31 AM |

City Lookup

| City Code | City Name | DateTimeStamp |
|---|---|---|
| NYSHMA | Manhattan | 1/1/2005 11:23:31 AM |
| FLJEPC | Panama City | 1/1/2005 11:23:31 AM |
| CAMOSH | San Jose | 1/1/2005 11:23:31 AM |
| NJHUJC | Jersey City | 1/1/2005 11:23:31 AM |

Location Dimension

| Location Dimension Id | Country Name | State Name | County Name | City Name | DateTime Stamp |
|---|---|---|---|---|---|
| 1 | USA | New York | Shelby | Manhattan | 1/1/2005 11:23:31 AM |
| 2 | USA | Florida | Jefferson | Panama City | 1/1/2005 11:23:31 AM |
| 3 | USA | California | Montgomery | San Jose | 1/1/2005 11:23:31 AM |
| 4 | USA | New Jersey | Hudson | Jersey City | 1/1/2005 11:23:31 AM |

Product Dimension

In a relational data model, for normalization purposes, the product category, product sub-category, product, and product feature lookups are not merged into a single table. In dimensional data modeling (star schema), these tables are merged into a single table called PRODUCT DIMENSION, for performance and data-slicing requirements.

Example of Product Dimension: Figure 1.9

Product Category Lookup

| Product Category Code | Product Category Name | DateTimeStamp |
|---|---|---|
| 1 | Apparel | 1/1/2005 11:23:31 AM |
| 2 | Shoe | 1/1/2005 11:23:31 AM |

Product Sub-Category Lookup

| Product Sub-Category Code | Product Sub-Category Name | DateTime Stamp |
|---|---|---|
| 11 | Shirt | 1/1/2005 11:23:31 AM |
| 12 | Trouser | 1/1/2005 11:23:31 AM |
| 13 | Casual | 1/1/2005 11:23:31 AM |
| 14 | Formal | 1/1/2005 11:23:31 AM |

Product Lookup

| Product Code | Product Name | DateTimeStamp |
|---|---|---|
| 1001 | Van Heusen | 1/1/2005 11:23:31 AM |
| 1002 | Arrow | 1/1/2005 11:23:31 AM |
| 1003 | Nike | 1/1/2005 11:23:31 AM |
| 1004 | Adidas | 1/1/2005 11:23:31 AM |

Product Feature Lookup

| Product Feature Code | Product Feature Description | DateTimeStamp |
|---|---|---|
| 10001 | Van-M | 1/1/2005 11:23:31 AM |
| 10002 | Van-L | 1/1/2005 11:23:31 AM |
| 10003 | Arr-XL | 1/1/2005 11:23:31 AM |
| 10004 | Arr-XXL | 1/1/2005 11:23:31 AM |
| 10005 | Nike-8 | 1/1/2005 11:23:31 AM |
| 10006 | Nike-9 | 1/1/2005 11:23:31 AM |
| 10007 | Adidas-10 | 1/1/2005 11:23:31 AM |
| 10008 | Adidas-11 | 1/1/2005 11:23:31 AM |

Product Dimension

| Product Dimension Id | Product Category Name | Product Sub-Category Name | Product Name | Product Feature Desc | DateTime Stamp |
|---|---|---|---|---|---|
| 100001 | Apparel | Shirt | Van Heusen | Van-M | 1/1/2005 11:23:31 AM |
| 100002 | Apparel | Shirt | Van Heusen | Van-L | 1/1/2005 11:23:31 AM |
| 100003 | Apparel | Shirt | Arrow | Arr-XL | 1/1/2005 11:23:31 AM |
| 100004 | Apparel | Shirt | Arrow | Arr-XXL | 1/1/2005 11:23:31 AM |
| 100005 | Shoe | Casual | Nike | Nike-8 | 1/1/2005 11:23:31 AM |
| 100006 | Shoe | Casual | Nike | Nike-9 | 1/1/2005 11:23:31 AM |
| 100007 | Shoe | Casual | Adidas | Adidas-10 | 1/1/2005 11:23:31 AM |
| 100008 | Shoe | Casual | Adidas | Adidas-11 | 1/1/2005 11:23:31 AM |

Organization Dimension

In a relational data model, for normalization purposes, the corporate office, region, branch, and employee lookups are not merged into a single table. In dimensional data modeling (star schema), these tables are merged into a single table called ORGANIZATION DIMENSION, for performance and data-slicing requirements.
This dimension helps us find the products sold or serviced within the organization by its employees. In any industry, we can calculate sales on a region, branch, and employee basis. Based on this performance, an organization can provide incentives to employees and subsidies to branches to increase sales further.

Example of Organization Dimension: Figure 1.10

Corporate Lookup

| Corporate Code | Corporate Name | DateTimeStamp |
|---|---|---|
| CO | American Bank | 1/1/2005 11:23:31 AM |

Region Lookup

| Region Code | Region Name | DateTimeStamp |
|---|---|---|
| SE | South East | 1/1/2005 11:23:31 AM |
| MW | Mid West | 1/1/2005 11:23:31 AM |

Branch Lookup

| Branch Code | Branch Name | DateTimeStamp |
|---|---|---|
| FLTM | Florida-Tampa | 1/1/2005 11:23:31 AM |
| ILCH | Illinois-Chicago | 1/1/2005 11:23:31 AM |

Employee Lookup

| Employee Code | Employee Name | DateTimeStamp |
|---|---|---|
| E1 | Paul Young | 1/1/2005 11:23:31 AM |
| E2 | Chris Davis | 1/1/2005 11:23:31 AM |

Organization Dimension

| Organization Dimension Id | Corporate Name | Region Name | Branch Name | Employee Name | DateTime Stamp |
|---|---|---|---|---|---|
| 1 | American Bank | South East | Florida-Tampa | Paul Young | 1/1/2005 11:23:31 AM |
| 2 | American Bank | Mid West | Illinois-Chicago | Chris Davis | 1/1/2005 11:23:31 AM |

Time Dimension

In a relational data model, for normalization purposes, the year, quarter, month, and week lookups are not merged into a single table. In dimensional data modeling (star schema), these tables are merged into a single table called TIME DIMENSION, for performance and data-slicing requirements.

This dimension helps to find the sales made on a daily, weekly, monthly, and yearly basis. We can perform trend analysis by comparing this year's sales with the previous year's, or this week's sales with the previous week's.
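The derived columns of a time dimension row can be computed from a single calendar date. This is a minimal Python sketch, and the function name `time_dim_row` is hypothetical. The Week No and Day of Week conventions are assumptions inferred from the Figure 1.11 example rows, not rules stated in the text:

- Day of Week counts Sunday as 1 through Saturday as 7.
- Week No counts consecutive 7-day blocks from 1 January.

```python
import calendar
from datetime import date

def time_dim_row(time_dim_id: int, cal_date: date) -> dict:
    """Derive Figure 1.11-style time-dimension columns from one calendar date.

    Assumed conventions (inferred from the example rows):
    - day_of_week: Sunday = 1 ... Saturday = 7
    - week_no: consecutive 7-day blocks counted from January 1
    """
    day_of_year = cal_date.timetuple().tm_yday
    return {
        "time_dim_id": time_dim_id,
        "year_no": cal_date.year,
        "day_of_year": day_of_year,
        "quarter_no": f"Q{(cal_date.month - 1) // 3 + 1}",
        "month_no": cal_date.month,
        "month_name": calendar.month_name[cal_date.month],
        "month_day_no": cal_date.day,
        "week_no": (day_of_year - 1) // 7 + 1,
        # isoweekday() is Monday = 1 ... Sunday = 7; shift so Sunday = 1.
        "day_of_week": cal_date.isoweekday() % 7 + 1,
        "cal_date": cal_date,
    }
```

Generating one such row per calendar day, once, is a common way to populate a time dimension. Trend queries such as "this year compared with last year" then group on `year_no` or `week_no` instead of doing date arithmetic at query time.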
Example of Time Dimension: Figure 1.11

Year Lookup

| Year Id | Year Number | DateTimeStamp |
|---|---|---|
| 1 | 2004 | 1/1/2005 11:23:31 AM |
| 2 | 2005 | 1/1/2005 11:23:31 AM |

Quarter Lookup

| Quarter Number | Quarter Name | DateTimeStamp |
|---|---|---|
| 1 | Q1 | 1/1/2005 11:23:31 AM |
| 2 | Q2 | 1/1/2005 11:23:31 AM |
| 3 | Q3 | 1/1/2005 11:23:31 AM |
| 4 | Q4 | 1/1/2005 11:23:31 AM |

Month Lookup

| Month Number | Month Name | DateTimeStamp |
|---|---|---|
| 1 | January | 1/1/2005 11:23:31 AM |
| 2 | February | 1/1/2005 11:23:31 AM |
| 3 | March | 1/1/2005 11:23:31 AM |
| 4 | April | 1/1/2005 11:23:31 AM |
| 5 | May | 1/1/2005 11:23:31 AM |
| 6 | June | 1/1/2005 11:23:31 AM |
| 7 | July | 1/1/2005 11:23:31 AM |
| 8 | August | 1/1/2005 11:23:31 AM |
| 9 | September | 1/1/2005 11:23:31 AM |
| 10 | October | 1/1/2005 11:23:31 AM |
| 11 | November | 1/1/2005 11:23:31 AM |
| 12 | December | 1/1/2005 11:23:31 AM |

Week Lookup

| Week Number | Day of Week | DateTimeStamp |
|---|---|---|
| 1 | Sunday | 1/1/2005 11:23:31 AM |
| 1 | Monday | 1/1/2005 11:23:31 AM |
| 1 | Tuesday | 1/1/2005 11:23:31 AM |
| 1 | Wednesday | 1/1/2005 11:23:31 AM |
| 1 | Thursday | 1/1/2005 11:23:31 AM |
| 1 | Friday | 1/1/2005 11:23:31 AM |
| 1 | Saturday | 1/1/2005 11:23:31 AM |
| 2 | Sunday | 1/1/2005 11:23:31 AM |
| 2 | Monday | 1/1/2005 11:23:31 AM |
| 2 | Tuesday | 1/1/2005 11:23:31 AM |
| 2 | Wednesday | 1/1/2005 11:23:31 AM |
| 2 | Thursday | 1/1/2005 11:23:31 AM |
| 2 | Friday | 1/1/2005 11:23:31 AM |
| 2 | Saturday | 1/1/2005 11:23:31 AM |

Time Dimension

| Time Dim Id | Year No | Day Of Year | Quarter No | Month No | Month Name | Month Day No | Week No | Day of Week | Cal Date | DateTime Stamp |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 2004 | 1 | Q1 | 1 | January | 1 | 1 | 5 | 1/1/2004 | 1/1/2005 11:23:31 AM |
| 2 | 2004 | 32 | Q1 | 2 | February | 1 | 5 | 1 | 2/1/2004 | 1/1/2005 11:23:31 AM |
| 3 | 2005 | 1 | Q1 | 1 | January | 1 | 1 | 7 | 1/1/2005 | 1/1/2005 11:23:31 AM |
| 4 | 2005 | 32 | Q1 | 2 | February | 1 | 5 | 3 | 2/1/2005 | 1/1/2005 11:23:31 AM |

Slowly Changing Dimensions

Dimensions that change over time are called Slowly Changing Dimensions. For instance, a product's price changes over time, people change their names for various reasons, and country and state names may change over time. These are a few examples of Slowly Changing Dimensions, since changes happen to them over a period of time.

Slowly Changing Dimensions are often categorized into three types: Type 1, Type 2, and Type 3. The following section deals with how to capture and handle these changes over time.

The "Product" table below contains a product named Product1, with Product ID as the primary key. In the year 2004, the price of Product1 was $150. Over time, its price changes from $150 to $350. With this information, let us explain the three types of Slowly Changing Dimensions.

Product Price in 2004:

| Product ID(PK) | Year | Product Name | Product Price |
|---|---|---|---|
| 1 | 2004 | Product1 | $150 |

Type 1: Overwriting the old values.

In the year 2005, if the price of the product changes to $250, then the old values of the columns "Year" and "Product Price" are updated and replaced with the new values.
In Type 1, there is no way to find the old value of the product "Product1" in year 2004, since the table now contains only the new price and year information.

Product

| Product ID(PK) | Year | Product Name | Product Price |
|---|---|---|---|
| 1 | 2005 | Product1 | $250 |

Type 2: Creating an additional record.

In Type 2, the old values are not replaced. Instead, a new row containing the new values is added to the product table. At any point in time, the old and new values can be retrieved and easily compared, which is very useful for reporting purposes.

Product

| Product ID(PK) | Year | Product Name | Product Price |
|---|---|---|---|
| 1 | 2004 | Product1 | $150 |
| 1 | 2005 | Product1 | $250 |

The problem with the above data structure is that "Product ID" cannot store duplicate values for "Product1", since "Product ID" is the primary key. Also, the current data structure does not clearly specify the effective date and expiry date of Product1, i.e. when the change to its price happened. So it is better to change the data structure to overcome the primary key violation.

Product

| Product ID(PK) | Effective DateTime(PK) | Year | Product Name | Product Price | Expiry DateTime |
|---|---|---|---|---|---|
| 1 | 01-01-2004 12.00AM | 2004 | Product1 | $150 | 12-31-2004 11.59PM |
| 1 | 01-01-2005 12.00AM | 2005 | Product1 | $250 | |

In the changed Product table, "Product ID" and "Effective DateTime" together form a composite primary key, so the primary key constraint is no longer violated. The new columns "Effective DateTime" and "Expiry DateTime" record when each version of the product took effect and when it expired, which adds clarity and enhances the scope of the table. The Type 2 approach may need additional space in the database, since an additional row has to be stored for every changed record. Dimensions are usually not very large in the real world, so the additional space is usually negligible.

Type 3: Creating new fields.

In Type 3, only the latest update to the changed values can be seen. The example below illustrates how new columns are added to keep track of the changes. From them, we can see the current price and the previous price of the product Product1.

Product

| Product ID(PK) | Current Year | Product Name | Current Product Price | Old Product Price | Old Year |
|---|---|---|---|---|---|
| 1 | 2005 | Product1 | $250 | $150 | 2004 |

The problem with the Type 3 approach is that, if the product price keeps changing over the years, the complete history is not stored. Only the latest change is kept.
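The three strategies can be sketched in Python using in-memory records instead of database tables. This is a minimal illustration under stated assumptions:

- The function names (`scd_type1`, `scd_type2`, `scd_type3`), the `ProductVersion` record and the dictionary keys are hypothetical.
- Prices are whole dollars.
- The one-minute gap between an expiry and the next effective time mirrors the 11.59PM / 12.00AM pair in the Type 2 table.

```python
from dataclasses import dataclass, replace
from datetime import datetime, timedelta
from typing import List, Optional

def scd_type1(row: dict, new_price: int, new_year: int) -> dict:
    """Type 1: overwrite in place; the previous year and price are lost."""
    return {**row, "year": new_year, "price": new_price}

@dataclass(frozen=True)
class ProductVersion:
    """Type 2 row; (product_id, effective_from) acts as the composite key."""
    product_id: int
    name: str
    price: int
    effective_from: datetime
    expiry: Optional[datetime] = None  # None marks the current version

def scd_type2(history: List[ProductVersion], new_price: int,
              changed_at: datetime) -> List[ProductVersion]:
    """Type 2: expire the current version and append a new one.

    Assumes history is ordered oldest-first, so the last entry is current.
    """
    current = history[-1]
    closed = replace(current, expiry=changed_at - timedelta(minutes=1))
    new = ProductVersion(current.product_id, current.name, new_price, changed_at)
    return history[:-1] + [closed, new]

def scd_type3(row: dict, new_price: int, new_year: int) -> dict:
    """Type 3: shift current values into the 'old' columns (one level of history)."""
    return {**row,
            "old_price": row["current_price"], "old_year": row["current_year"],
            "current_price": new_price, "current_year": new_year}
```

Applying `scd_type3` to the 2005 row with the 2006 price of $350 moves $250 and 2005 into the "old" columns and discards the 2004 values entirely. `scd_type2` keeps every version, at the cost of one extra row per change.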
For example, if Product1's price changes to $350 in 2006, we can no longer see the 2004 price, since the old values are overwritten with the 2005 product information.

Product

| Product ID(PK) | Year | Product Name | Product Price | Old Product Price | Old Year |
|---|---|---|---|---|---|
| 1 | 2006 | Product1 | $350 | $250 | 2005 |

Example: Over the years, application designers in each branch have made their own decisions about how an application and its database should be built. As a result, source systems differ in naming conventions, variable measurements, encoding structures, and physical attributes of data. Consider a bank that has several branches in several countries and millions of customers, and whose lines of business are savings and loans. The following example explains how data is integrated from the source systems into the target system.

Example of Source Data

| System Name | Attribute Name | Column Name | Datatype | Values |
|---|---|---|---|---|
| Source System 1 | Customer Application Date | CUSTOMER_APPLICATION_DATE | NUMERIC(8,0) | 11012005 |
| Source System 2 | Customer Application Date | CUST_APPLICATION_DATE | DATE | 11012005 |
| Source System 3 | Application Date | APPLICATION_DATE | DATE | 01NOV2005 |
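Integrating these three representations means parsing each source's layout into one date type, then writing it out in the target's single format. In this minimal Python sketch, the mapping `SOURCE_DATE_FORMATS` and both function names are hypothetical. The formats are assumptions read off the example values: 11012005 is taken as month-day-year (1 November 2005), and the target's 01112005 is taken as day-month-year.

```python
from datetime import date, datetime

# Hypothetical per-source date layouts, inferred from the example values.
SOURCE_DATE_FORMATS = {
    "Source System 1": "%m%d%Y",  # NUMERIC(8,0), e.g. 11012005
    "Source System 2": "%m%d%Y",  # DATE, e.g. 11012005
    "Source System 3": "%d%b%Y",  # DATE, e.g. 01NOV2005
}

def to_application_date(system: str, raw) -> date:
    """Parse one source value into a single, consistent date type."""
    # A NUMERIC(8,0) column drops leading zeros (01052005 -> 1052005), so re-pad.
    text = f"{raw:08d}" if isinstance(raw, int) else str(raw)
    return datetime.strptime(text, SOURCE_DATE_FORMATS[system]).date()

def to_target_value(d: date) -> str:
    """Render the unified date the way the target example shows it (DDMMYYYY)."""
    return d.strftime("%d%m%Y")

sources = [("Source System 1", 11012005),
           ("Source System 2", "11012005"),
           ("Source System 3", "01NOV2005")]
print({to_target_value(to_application_date(s, v)) for s, v in sources})  # {'01112005'}
```

Keeping the per-source formats in one table-driven mapping, rather than in scattered conditionals, makes it easier to add a fourth source system later.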

In the source data example above, the attribute name, column name, datatype, and values are entirely different from one source system to another. This inconsistency can be avoided by integrating the data into a data warehouse with good standards.

Example of Target Data (Data Warehouse)

| Target System | Attribute Name | Column Name | Datatype | Values |
|---|---|---|---|---|
| Record #1 | Customer Application Date | CUSTOMER_APPLICATION_DATE | DATE | 01112005 |
| Record #2 | Customer Application Date | CUSTOMER_APPLICATION_DATE | DATE | 01112005 |
| Record #3 | Customer Application Date | CUSTOMER_APPLICATION_DATE | DATE | 01112005 |

In the target data example above, attribute names, column names, and datatypes are consistent throughout the target system. This is how data from various source systems is integrated and accurately stored in the data warehouse.

Data Warehouse & Data Mart

A data warehouse is a relational/multidimensional database designed for query and analysis rather than transaction processing. It usually contains historical data derived from transaction data. It separates the analysis workload from the transaction workload and enables a business to consolidate data from several sources.

In addition to a relational/multidimensional database, a data warehouse environment often includes an ETL solution, an OLAP engine, client analysis tools, and other applications that manage the process of gathering data and delivering it to business users.

There are three types of data warehouses:

1. Enterprise Data Warehouse - An enterprise data warehouse provides a central database for decision support throughout the enterprise.
2. ODS (Operational Data Store) - This has a broad, enterprise-wide scope, but unlike a real enterprise data warehouse, its data is refreshed in near real time and used for routine business activity.
3. Data Mart - A data mart is a subset of a data warehouse that supports a particular region, business unit, or business function.
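A data mart built as a subset of the warehouse for one region can be sketched as a filtered copy of a warehouse table. In this minimal SQLite illustration, `build_region_mart`, the `dw_sales` and `region_mart` tables, and their columns are hypothetical. The region and branch names are borrowed from the organization dimension example. Here the mart is derived from the warehouse table itself rather than loaded directly from source systems.

```python
import sqlite3

def build_region_mart(region: str) -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        -- Hypothetical warehouse table holding already-integrated data.
        CREATE TABLE dw_sales (region_name TEXT, branch_name TEXT,
                               year_no INTEGER, sales_dollar REAL);
        INSERT INTO dw_sales VALUES
            ('South East', 'Florida-Tampa',    2004, 1000),
            ('South East', 'Florida-Tampa',    2005,  750),
            ('Mid West',   'Illinois-Chicago', 2004, 1000),
            ('Mid West',   'Illinois-Chicago', 2005,  750);
        CREATE TABLE region_mart (branch_name TEXT, year_no INTEGER, sales_dollar REAL);
    """)
    # The mart keeps only the rows (and columns) one region's users need.
    conn.execute("""INSERT INTO region_mart
                    SELECT branch_name, year_no, sales_dollar
                    FROM dw_sales WHERE region_name = ?""", (region,))
    return conn
```

In practice a mart is usually a separate database or schema refreshed by the ETL process. The single connection here only illustrates the subset relationship.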
Data warehouses and data marts are built on dimensional data modeling, in which fact tables are connected to dimension tables. This makes the data easy for users to access, since the database can be visualized as a cube of several dimensions. A data warehouse provides an opportunity to slice and dice that cube along each of its dimensions.

Data Mart: A data mart is a subset of a data warehouse designed for a particular line of business, such as sales, marketing, or finance. In a dependent data mart, data is derived from an enterprise-wide data warehouse. In an independent data mart, data is collected directly from the sources.

Figure 1.12: Data Warehouse and Datamarts

Business Intelligence Tools

Business Intelligence Tools help to gather, store, access, and analyze corporate data to aid decision-making. These systems typically support business intelligence in areas such as customer profiling, customer support, market research, market segmentation, product profitability, statistical analysis, and inventory and distribution analysis.

With Business Intelligence Tools, data of many kinds (customer, product, sales, time, location, employee, and so on) is gathered and analysed. Based on this analysis, important strategies or rules are formed and targets are set. Decisions made this way can be very efficient and effective in promoting an organization's growth.

Since the collected data can be sliced across almost all dimensions, such as time, location, product, and promotion, valuable statistics can be calculated. For example, the sales profit in one region for the current year can be compared with the previous year's figure.

Popular Business Intelligence Tools

| Tool Name | Company Name |
|---|---|
| Business Objects | Business Objects |
| Cognos | Cognos |
| Hyperion | Hyperion |
| Microstrategy | Microstrategy |
| Microsoft Reporting Services | Microsoft |
| Crystal | Business Objects |

OLAP & its Hybrids

OLAP, an acronym for Online Analytical Processing, is an approach that helps organizations take advantage of their DATA. Popular OLAP tools include Cognos, Business Objects, and MicroStrategy. OLAP cubes provide insight into data and help the topmost executives of an organization make decisions efficiently.

Technically, an OLAP cube allows one to analyze data across multiple dimensions by providing a multidimensional view of aggregated, grouped data. With OLAP reports, major categories like fiscal periods, sales regions, products, employees, and product promotions can be ANALYZED efficiently, effectively, and responsively. OLAP applications include sales and customer analysis, budgeting, marketing analysis, production analysis, profitability analysis, and forecasting.

ROLAP

ROLAP stands for Relational Online Analytical Processing, which provides multidimensional analysis of data stored in a relational database (RDBMS).

MOLAP

MOLAP (Multidimensional OLAP) provides analysis of data stored in a multidimensional data cube.

HOLAP

HOLAP (Hybrid OLAP), a combination of ROLAP and MOLAP, can provide multidimensional analysis of data stored in both a multidimensional database and a relational database (RDBMS) simultaneously.

DOLAP

DOLAP (Desktop OLAP or Database OLAP) provides multidimensional analysis locally on the client machine, on data collected from relational or multidimensional database servers.

OLAP Analysis

Imagine an organization that manufactures and sells goods in several states of the USA and employs hundreds of people in its manufacturing, sales, and marketing divisions.
In order to manufacture and sell its products profitably, the executives need to analyse the product data (OLAP analysis). They need to think about possible causes of a particular event, like a loss in sales, lower productivity, or an increase in sales over a particular period of the year.

During the OLAP analysis, the top executives may seek answers to the following:

1. Number of products manufactured.
2. Number of products manufactured in a location.
3. Number of products manufactured on a time basis within a location.
4. Number of products manufactured in the current year compared to the previous year.
5. Sales Dollar value for a particular product.
6. Sales Dollar value for a product in a location.
7. Sales Dollar value for a product in a year within a location.
8. Sales Dollar value for a product in a year within a location sold or serviced by an employee.

OLAP tools help executives find answers not only to the measures listed above but also to very complex queries. They do this by letting executives slice and dice, drill down from higher-level to lower-level summarized data, rank, sort, and so on.

Example of OLAP Analysis Report

| Time Dimension Id | Location Dimension Id | Product Dimension Id | Organization Dimension Id | Sales Dollar | DateTimeStamp |
|---|---|---|---|---|---|
| 1 | 1 | 100001 | 1 | 1000 | 1/1/2005 11:23:31 AM |
| 3 | 1 | 100001 | 1 | 750 | 1/1/2005 11:23:31 AM |
| 1 | 1 | 100001 | 2 | 1000 | 1/1/2005 11:23:31 AM |
| 3 | 1 | 100001 | 2 | 750 | 1/1/2005 11:23:31 AM |

In the OLAP analysis example above, data can be sliced and diced, and drilled up and down, along the hierarchies of the time, location, product, and organization dimensions. This helps the topmost executives make decisions about product performance in a given location, time period, or part of the organization. In OLAP reports, trend analysis can also be done by comparing the sales value of a particular product over several years or quarters.

Erwin

Erwin Tutorial

All Fusion Erwin Data Modeler, commonly known as Erwin, is a powerful and leading data modeling tool from Computer Associates. Computer Associates delivers several software products for enterprise management, storage management, security, application life cycle management, data management, and business intelligence.

Erwin makes database creation very simple. It can generate DDL (SQL) scripts from a data model using its Forward Engineering technique, or create data models from an existing database using its Reverse Engineering technique.

The Erwin workplace consists of the following main areas:

Logical: In this view, the data model represents business requirements like entities, attributes, etc.
Physical: In this view, the data model represents physical structures like tables, columns, datatypes, etc.
ModelMart: Many users can work with the same data model concurrently.

What can be done with Erwin?

Logical, physical, and dimensional data models can be created.
Data models can be created from existing systems (RDBMS, DBMS, files, etc.).
Different versions of a data model can be compared.
A data model and a database can be compared.
SQL scripts can be generated to create databases from a data model.
Reports can be generated in different file formats like .html, .rtf, and .txt.
Data models can be opened and saved in several different file types like .er1, .ert, .bpx, .xml, .ers, .sql, .cmt, .df, .dbf, and .mdb.
By using ModelMart, concurrent users can work on the same data model.

In order to create data models in Erwin, you need to have All Fusion Erwin Data Modeler installed on your system. If you have installed ModelMart, more than one user can work on the same model.

How to create a Logical Data Model:

The following section explains, step by step, how to create a simple logical data model with two entities and their relationship.

1: Open the All Fusion Erwin Data Modeler software.
2: Select the view "Logical" from the drop-down list. By default, logical will be your workplace.
3: Click New from the File menu. Select the option "Logical/Physical" from the displayed wizard. Click Ok.
4: To create an entity, click the "Entity" icon and drop it on the workplace. By default, E/1 will be displayed as the entity name. Change it to "Country".
5: To create an attribute, place the cursor on the entity "Country" and right-click it. From the displayed menu, click Attributes, which will take you to the attribute wizard. Click the "New" button on the wizard and type the attribute name "Country Code". Select the data type "String" and click OK. Select the Primary Key option to identify the attribute "Country Code" as the primary key. Follow the same approach to create another attribute, "Country Name", without selecting the primary key option. Click Ok. You will now have 2 attributes, Country Code and Country Name, under the entity "Country" in the current logical workplace.
6: Create another entity "Bank" with two attributes, Bank Code and Bank Name, by following steps 4 and 5.
7: In order to relate the two tables Country and Bank, a foreign key relationship must be created. To create it, follow these steps:
(a) Click the "Non Identifying Relationship" symbol.
(b) Place the cursor on the entity "Country".
(c) Place the cursor on the entity "Bank". Now you can see the relationship (a line drawn from Bank to Country) between "Country" and "Bank". Double-click the relationship line to open the "Relationships wizard" and change the option from "Nulls Allowed" to "No Nulls", since a bank should have a country code.

The logical data model created by following the above steps looks similar to the following diagram.

How to create a Physical Data Model:

1: Change the view from "Logical" to "Physical" using the drop-down list.
2: Click "Database" in the main menu and then click "Choose Database" in the submenu. Select the target database server where the database has to be created. Click Ok.
3: Place the cursor on the table "Country" and right-click it. From the displayed menu, click Columns, which will take you to the column wizard. Click the "Database" tab, next to the "General" tab, and assign the datatypes VARCHAR2(10) and VARCHAR2(50) to the columns COUNTRY_CODE and COUNTRY_NAME, respectively. Change the default NULL to NOT NULL for the column COUNTRY_NAME. Repeat this step for the BANK table. Once you have done all of this, you can see the physical version of the logical data model in the current workplace.
The physical data model created by following the above steps looks similar to the following diagram.

How to generate DDL (SQL) scripts to create a database:
1: Select "Physical" as the view from the drop-down list.
2: Click "Tools" in the main menu and then "Forward Engineer/Schema Generation" in the submenu, which opens the "Schema Generation Wizard". Select the properties that satisfy your database requirements, such as schema, table, and primary key options. Click Preview to see your scripts. You can either generate the tables in a database directly or save the scripts and run them against the database later.
The DDL (SQL) script generated by Erwin by following the above steps looks similar to the following script.

```sql
CREATE TABLE Country (
    Country_Code VARCHAR2(10) NOT NULL,
    Country_Name VARCHAR2(50) NOT NULL,
    CONSTRAINT PK_Country PRIMARY KEY (Country_Code)
);

CREATE TABLE Bank (
    Bank_Code    VARCHAR2(10) NOT NULL,
    Bank_Name    VARCHAR2(50) NOT NULL,
    Country_Code VARCHAR2(10) NOT NULL,
    CONSTRAINT PK_Bank PRIMARY KEY (Bank_Code)
);

ALTER TABLE Bank ADD (
    CONSTRAINT FK_Bank FOREIGN KEY (Country_Code) REFERENCES Country
);
```

Data Modeling Development Cycle

Gathering Business Requirements - First Phase: Data modelers interact with business analysts to get the functional requirements and with end users to find out the reporting needs.

Conceptual Data Modeling (CDM) - Second Phase: See Figure 1.1.
The conceptual data model is created from business requirements gathered from various sources, such as business documents, discussions with functional teams, business analysts, subject matter experts, and end users who do the reporting on the database. Data modelers create the conceptual data model and forward it to the functional team for review. This data model includes all major entities and relationships but does not contain much detail about attributes, and it is often used in the initial planning phase.

Conceptual Data Model - Highlights
CDM is the first step in constructing a data model in the top-down approach and is a clear and accurate visual representation of an organization's business.
CDM visualizes the overall structure of the database and provides high-level information about the subject areas or data structures of an organization.
A CDM discussion starts with the main subject areas of an organization, and then all the major entities of each subject area are discussed in detail.
A CDM comprises entity types and relationships. The relationships between subject areas, and between the entities within each subject area, are drawn using a symbolic notation (IDEF1X or IE). In a data model, cardinality describes how many instances of one entity can be related to an instance of another: one-to-one, one-to-many, or many-to-many.
A CDM contains data structures that have not yet been implemented in the database.
In CDM discussions, both technical and non-technical teams contribute their ideas toward building a sound logical data model.
See Figure 1.1 below. Consider an example of a bank that has different lines of business, such as savings, credit cards, investments, and loans. In the example (Figure 1.1), the conceptual data model contains the major entities from savings, credit card, investment, and loans.
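As an illustration of why cardinality matters beyond the diagram, the Python sketch below shows one common way each cardinality is later carried into a physical model: one-to-one and one-to-many become a foreign key on the child entity (unique for one-to-one), while many-to-many needs a separate cross-reference table. The entity pairs Country/Bank and Credit Card/Member come from this document's examples; the `Cardinality`, `Relationship`, `to_table_name`, and `resolve` names are hypothetical, and the upper-case-with-underscores naming rule is an assumption.

```python
from dataclasses import dataclass
from enum import Enum


class Cardinality(Enum):
    ONE_TO_ONE = "1:1"
    ONE_TO_MANY = "1:N"
    MANY_TO_MANY = "M:N"


@dataclass(frozen=True)
class Relationship:
    parent: str  # entity on the "one" side (either side for M:N)
    child: str   # entity on the "many" side (either side for M:N)
    cardinality: Cardinality


def to_table_name(entity: str) -> str:
    """Hypothetical naming rule: 'Credit Card' -> 'CREDIT_CARD'."""
    return "_".join(entity.upper().split())


def resolve(rel: Relationship) -> str:
    """Describe how a relationship could be implemented physically."""
    parent = to_table_name(rel.parent)
    child = to_table_name(rel.child)
    if rel.cardinality is Cardinality.MANY_TO_MANY:
        # A single foreign key cannot hold many parents per child, so an
        # associative (cross-reference) table carries one FK to each side.
        return f"new table {parent}_{child}_XREF with FKs to {parent} and {child}"
    # 1:1 and 1:N: the child carries the parent's key as a foreign key;
    # for 1:1 that column is additionally made unique.
    unique = " (unique)" if rel.cardinality is Cardinality.ONE_TO_ONE else ""
    return f"FK on {child} referencing {parent}{unique}"


if __name__ == "__main__":
    print(resolve(Relationship("Country", "Bank", Cardinality.ONE_TO_MANY)))
    # FK on BANK referencing COUNTRY
    print(resolve(Relationship("Credit Card", "Member", Cardinality.MANY_TO_MANY)))
    # new table CREDIT_CARD_MEMBER_XREF with FKs to CREDIT_CARD and MEMBER
```

The many-to-many case yields the same CREDIT_CARD_MEMBER_XREF name that the table naming guidelines use for cross-reference tables.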
Conceptual data modeling gives the functional and technical teams an idea of how business requirements will be projected into the logical data model.

Logical Data Modeling (LDM) - Third Phase: See Figure 1.2.
This is the actual implementation of a conceptual model in a logical data model. A logical data model is the version of the model that represents all of the business requirements of an organization.
As soon as the conceptual data model is accepted by the functional team, development of the logical data model begins. Once the logical data model is completed, it is forwarded to the functional teams for review. A sound logical design should streamline the physical design process by clearly defining data structures and the relationships between them. A good data model is created by thinking clearly about current and future business requirements. A logical data model includes all required entities, attributes, key groups, and relationships that represent business information and define business rules.
Example of Logical Data Model: Figure 1.2
In the example, we have identified the entity names, attribute names, and relationship. For a detailed explanation, refer to relational data modeling.

Physical Data Modeling - Fourth Phase:
A physical data model includes all required tables, columns, relationships, and database properties for the physical implementation of databases. Database performance, indexing strategy, physical storage, and denormalization are important parameters of a physical model.
After the logical data model is approved by the functional team, development of the physical data model begins. Once the physical data model is completed, it is forwarded to the technical teams (developers, group lead, DBA) for review. The transformations from the logical model to the physical model include imposing database rules, implementing referential integrity, and resolving supertypes and subtypes.
Example of Physical Data Model: Figure 1.3
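The step from a physical model to SQL can be pictured with a toy forward-engineering routine. This is not how Erwin's Schema Generation works internally; it is a minimal Python sketch, assuming a physical model held as plain dataclasses (`Column` and `Table` are hypothetical names), that emits Oracle-style DDL for the Country/Bank example. Constraint names follow the `_PK01`/`_FK01` suffix convention from this document's standardization guidelines rather than the `PK_Country` style of the Erwin output.

```python
from dataclasses import dataclass, field


@dataclass
class Column:
    name: str
    datatype: str
    nullable: bool = True


@dataclass
class Table:
    name: str
    columns: list[Column]
    primary_key: list[str]
    # (local column, referenced table) pairs; each references the parent's PK
    foreign_keys: list[tuple[str, str]] = field(default_factory=list)


def create_table_ddl(table: Table) -> str:
    """CREATE TABLE with NULL/NOT NULL per column and a named primary key."""
    parts = [
        f"    {c.name} {c.datatype}" + ("" if c.nullable else " NOT NULL")
        for c in table.columns
    ]
    parts.append(
        f"    CONSTRAINT {table.name}_PK01 PRIMARY KEY ({', '.join(table.primary_key)})"
    )
    return f"CREATE TABLE {table.name} (\n" + ",\n".join(parts) + "\n);"


def foreign_key_ddl(table: Table) -> list[str]:
    """One ALTER TABLE per foreign key, numbered FK01, FK02, ..."""
    return [
        f"ALTER TABLE {table.name} ADD CONSTRAINT {table.name}_FK{i:02d} "
        f"FOREIGN KEY ({col}) REFERENCES {ref};"
        for i, (col, ref) in enumerate(table.foreign_keys, start=1)
    ]


def forward_engineer(tables: list[Table]) -> str:
    # Create every table first, then add foreign keys, so that each
    # referenced table already exists when its constraint is added.
    statements = [create_table_ddl(t) for t in tables]
    for t in tables:
        statements.extend(foreign_key_ddl(t))
    return "\n\n".join(statements)


if __name__ == "__main__":
    country = Table(
        "COUNTRY",
        [Column("COUNTRY_CODE", "VARCHAR2(10)", False),
         Column("COUNTRY_NAME", "VARCHAR2(50)", False)],
        ["COUNTRY_CODE"],
    )
    bank = Table(
        "BANK",
        [Column("BANK_CODE", "VARCHAR2(10)", False),
         Column("BANK_NAME", "VARCHAR2(50)", False),
         Column("COUNTRY_CODE", "VARCHAR2(10)", False)],
        ["BANK_CODE"],
        [("COUNTRY_CODE", "COUNTRY")],
    )
    print(forward_engineer([country, bank]))
```

Emitting foreign keys as separate ALTER TABLE statements after all CREATE TABLE statements mirrors the shape of the Erwin script shown earlier and avoids ordering problems between parent and child tables.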

In the example, the entity names have been changed to table names and the attribute names to column names, and each column has been assigned NULL or NOT NULL and a data type.

Database - Fifth Phase: DBAs instruct the data modeling tool to create SQL code from the physical data model. The SQL code is then executed on the server to create the databases.

Standardization Needs Modeling Data:
Several data modelers may work on different subject areas of a data model, and all of them should use the same naming conventions and the same style for writing definitions and business rules.
Nowadays, business-to-business (B2B) transactions are quite common, and standardization helps everyone understand the business better. Inconsistent column names and definitions would create chaos across the business.
For example, when a data warehouse is designed, it may get data from several source systems, and each source may have its own names, data types, etc. These anomalies can be eliminated if proper standardization is maintained across the organization.

Table Names Standardization:
Giving a table its full name conveys what data it holds. Generally, do not abbreviate table names; however, this may differ according to an organization's standards. If a table name's length exceeds the database's limit, then abbreviate it. Some general guidelines that may be used as a prefix or suffix for tables are listed below.
Examples:
Lookup - LKP: used for code and type tables by which a fact table can be directly accessed. e.g. Credit Card Type Lookup - CREDIT_CARD_TYPE_LKP
Fact - FCT: used for transaction tables. e.g. Credit Card Fact - CREDIT_CARD_FCT
Cross Reference - XREF: tables that resolve many-to-many relationships. e.g. Credit Card Member XREF - CREDIT_CARD_MEMBER_XREF
History - HIST: tables that store history. e.g. Credit Card Retired History - CREDIT_CARD_RETIRED_HIST
Statistics - STAT: tables that store statistical information. e.g. Credit Card Web Statistics - CREDIT_CARD_WEB_STAT

Column Names Standardization:
Some general guidelines that may be used as a prefix or suffix for columns are listed below.
Examples:
Key - KEY: system-generated surrogate key. e.g. Credit Card Key - CRDT_CARD_KEY
Identifier - ID: character column that is used as an identifier. e.g. Credit Card Identifier - CRDT_CARD_ID
Code - CD: numeric or alphanumeric column that is used as an identifying attribute. e.g. State Code - ST_CD
Description - DESC: description for a code, identifier, or key. e.g. State Description - ST_DESC
Indicator - IND: denotes indicator columns. e.g. Gender Indicator - GNDR_IND

Database Parameters Standardization:
Some general guidelines that may be used for other physical parameters are listed below.
Examples:
Index - IDX: for index names. e.g. Credit Card Fact IDX01 - CRDT_CARD_FCT_IDX01
Primary Key - PK: for primary key constraint names. e.g. Credit Card Fact PK01 - CRDT_CARD_FCT_PK01

Alternate Key - AK: for alternate key names. e.g. Credit Card Fact AK01 - CRDT_CARD_FCT_AK01
Foreign Key - FK: for foreign key constraint names. e.g. Credit Card Fact FK01 - CRDT_CARD_FCT_FK01

Steps to create a Data Model
These are general guidelines for creating a standard data model; in practice, a data model may not be created in the same sequence shown below. Based on the enterprise's requirements, some steps may be excluded, or others included in addition to these.
Sometimes a data modeler is asked to develop a data model based on an existing database. In that situation, the data modeler has to reverse engineer the database to create the data model.
1 Get business requirements.
2 Create a high-level conceptual data model.
3 Create the logical data model.
4 Select the target DBMS for which the data modeling tool will create the physical schema.
5 Create a standard abbreviation document according to business standards.
6 Create domains.
7 Create entities and add definitions.
8 Create attributes and add definitions.
9 Based on the analysis, create surrogate keys, supertypes, and subtypes where appropriate.
10 Assign data types to attributes. If a domain is already present, attach the attribute to the domain.
11 Create primary or unique keys on attributes.
12 Create check constraints or defaults on attributes.
13 Create unique indexes or bitmap indexes on attributes.
14 Create foreign key relationships between entities.
15 Create the physical data model.
16 Add database properties to the physical data model.
17 Create SQL scripts from the physical data model and forward them to the DBA.
18 Maintain the logical and physical data models.
19 For each release (version of the data model), compare the present version with the previous version. Similarly, compare the data model with the database to find the differences.
20 Create a change log document for the differences between the current and previous versions of the data model.
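Comparing two versions of a model and recording the differences, as the last steps above describe, becomes mechanical once each version is reduced to tables, columns, and data types. The Python sketch below assumes that simplified shape (a dict of table name to a dict of column name to data type) and a hypothetical `diff_models` function; a modeling tool's own compare feature would also cover keys, indexes, and other properties. The second model version in the usage block is invented for illustration.

```python
Model = dict[str, dict[str, str]]  # table -> {column: data type}


def diff_models(old: Model, new: Model) -> list[str]:
    """Return change-log lines describing how `new` differs from `old`."""
    changes: list[str] = []
    for table in sorted(new.keys() - old.keys()):
        changes.append(f"ADDED TABLE {table}")
    for table in sorted(old.keys() - new.keys()):
        changes.append(f"DROPPED TABLE {table}")
    for table in sorted(old.keys() & new.keys()):
        old_cols, new_cols = old[table], new[table]
        for col in sorted(new_cols.keys() - old_cols.keys()):
            changes.append(f"ADDED COLUMN {table}.{col} {new_cols[col]}")
        for col in sorted(old_cols.keys() - new_cols.keys()):
            changes.append(f"DROPPED COLUMN {table}.{col}")
        for col in sorted(old_cols.keys() & new_cols.keys()):
            if old_cols[col] != new_cols[col]:
                changes.append(
                    f"CHANGED COLUMN {table}.{col}: {old_cols[col]} -> {new_cols[col]}"
                )
    return changes


if __name__ == "__main__":
    v1: Model = {
        "COUNTRY": {"COUNTRY_CODE": "VARCHAR2(10)", "COUNTRY_NAME": "VARCHAR2(50)"},
        "BANK": {"BANK_CODE": "VARCHAR2(10)", "BANK_NAME": "VARCHAR2(50)"},
    }
    # Hypothetical next release: BANK gains its FK column, COUNTRY_NAME widens.
    v2: Model = {
        "COUNTRY": {"COUNTRY_CODE": "VARCHAR2(10)", "COUNTRY_NAME": "VARCHAR2(100)"},
        "BANK": {"BANK_CODE": "VARCHAR2(10)", "BANK_NAME": "VARCHAR2(50)",
                 "COUNTRY_CODE": "VARCHAR2(10)"},
    }
    for line in diff_models(v1, v2):
        print(line)
    # ADDED COLUMN BANK.COUNTRY_CODE VARCHAR2(10)
    # CHANGED COLUMN COUNTRY.COUNTRY_NAME: VARCHAR2(50) -> VARCHAR2(100)
```

The same function could compare the model with the database if the database's catalog were first read into the same dictionary shape; that extraction step is not shown here.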

Informatica FAQs (posted by vasubonam, 03-27-2007, 06:21 PM)
