The Poor Man's Super Computer

Uploaded by

kudupudinagesh

www.1000projects.com

Contents

Abstract
Introduction
How It Works
Distributed Computing Management Server
Comparisons and Other Trends
Distributed vs. Grid Computing
Other Trends at a Glance
Application Characteristics
Types of Distributed Computing Applications
Security and Standards Challenges
Conclusion
Bibliography

www.chetanasprojects.com

Abstract

Increasing desktop CPU power and communications bandwidth have led to a new computing environment and helped make distributed computing a more practical idea. The advantages of this type of architecture for the right kinds of applications are impressive. The most obvious is the ability to provide access to supercomputer-level processing power or better for a fraction of the cost of a typical supercomputer. For this reason the technology can be termed the Poor Man's Super Computer. In this paper we discuss how distributed computing works and the application characteristics that set it apart from other networking technologies. Before adopting a new technology, it is essential to understand the competing technologies and compare them with the new trends, so we do that as well. We also discuss the areas where this computational technology can be applied, the level of security it offers, and the standards behind it. We conclude that, for now, the specific promise of distributed computing lies mostly in harnessing the system resources that lie within the firewall. It will take years before the systems on the Net share compute resources as effortlessly as they share information.

Introduction

The number of real applications is still somewhat limited, and the challenges, particularly standardization, are still significant. But there's a new energy in the market, as well as some actual paying customers, so it's about time to take a look at where distributed processing fits and how it works. Increasing desktop CPU power and communications bandwidth have also helped to make distributed computing a more practical idea. Various vendors have developed numerous initiatives and architectures to permit distributed processing of data and objects across a network of connected systems. One area of distributed computing has received a lot of attention, and it will be the primary focus of this paper: an environment where you can harness the idle CPU cycles and storage space of hundreds or thousands of networked systems to work together on a processing-intensive problem. The growth of such processing models has been limited, however, by a lack of compelling applications and by bandwidth bottlenecks, combined with significant security, management, and standardization challenges.

How It Works

A distributed computing architecture consists of very lightweight software agents installed on a number of client systems, and one or more dedicated distributed computing management servers. There may also be requesting clients with software that allows them to submit jobs along with lists of their required resources. An agent running on a processing client detects when the system is idle, notifies the management server that the system is available for processing, and usually requests an application package. The client then receives an application package from the server and runs the software when it has spare CPU cycles, and sends the results back to the server. If the user of the client system needs to run his own applications at any time, control is immediately returned, and processing of the distributed application package ends.
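The agent lifecycle described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `Package`, `ManagementServer`, `run_agent`, and the `is_idle` callback are hypothetical names invented here, the server is a toy in-memory object rather than a networked service, and the "application" is simply a sum of squares.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Package:
    """Hypothetical stand-in for an application package sent to a client."""
    task_id: int
    numbers: List[int]


@dataclass
class ManagementServer:
    """Toy in-memory server that hands out packages and collects results."""
    pending: List[Package]
    results: Dict[int, int] = field(default_factory=dict)

    def request_package(self) -> Optional[Package]:
        return self.pending.pop(0) if self.pending else None

    def return_package(self, package: Package) -> None:
        # Interrupted work goes back on the queue for another client.
        self.pending.append(package)

    def submit_result(self, task_id: int, value: int) -> None:
        self.results[task_id] = value


def run_agent(server: ManagementServer, is_idle: Callable[[], bool]) -> int:
    """Process packages while the machine is idle; return how many finished."""
    completed = 0
    while is_idle():
        package = server.request_package()
        if package is None:
            break  # the server has no more work
        total = 0
        interrupted = False
        for n in package.numbers:
            if not is_idle():
                # The user needs the machine: stop processing immediately.
                interrupted = True
                break
            total += n * n
        if interrupted:
            server.return_package(package)
            break
        server.submit_result(package.task_id, total)
        completed += 1
    return completed
```

In a real deployment `is_idle` would query the operating system for user activity or CPU load; here it is left as a callback so the control flow can be followed without any platform-specific code.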

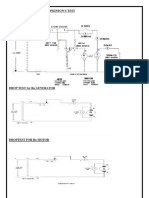

A Typical Distributed System

Distributed Computing Management Server

The servers have several roles. They take distributed computing requests and divide their large processing tasks into smaller tasks that can run on individual desktop systems (though sometimes this is done by a requesting system). They send application packages and some client management software to the idle client machines that request them. They monitor the status of the jobs being run by the clients. After the client machines run those packages, they assemble the results sent back by the clients and structure them for presentation, usually with the help of a database. The server is also likely to manage any security, policy, or other management functions as necessary, including handling dialup users whose connections and IP addresses are inconsistent.

Obviously the complexity of a distributed computing architecture increases with the size and type of environment. A larger environment that includes multiple departments, partners, or participants across the Web requires complex resource identification, policy management, authentication, encryption, and secure sandboxing functionality.
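As a rough illustration of the divide-and-assemble role, the sketch below splits one large job into numbered tasks and combines the partial results once every task has reported back. The function names (`split_job`, `run_task`, `assemble`) and the sum-of-squares workload are assumptions made for this example; a real server would also track job status and store results in a database.

```python
from typing import Dict, List


def split_job(data: List[int], chunk_size: int) -> Dict[int, List[int]]:
    """Divide one large job into numbered tasks of at most chunk_size items."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return {
        task_id: data[start:start + chunk_size]
        for task_id, start in enumerate(range(0, len(data), chunk_size))
    }


def run_task(chunk: List[int]) -> int:
    """Work a client would perform on one task (here: a sum of squares)."""
    return sum(x * x for x in chunk)


def assemble(partials: Dict[int, int], expected_tasks: int) -> int:
    """Combine per-task results, refusing to finish while any task is missing."""
    missing = set(range(expected_tasks)) - set(partials)
    if missing:
        raise RuntimeError(f"still waiting on tasks: {sorted(missing)}")
    return sum(partials.values())
```

A typical use would be to call `split_job`, hand each chunk to a different client, collect the values returned by `run_task` keyed by task id, and pass them to `assemble`.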

Administrators or others with rights can define which jobs and users get access to which systems, and who gets priority in various situations based on rank, deadlines, and the perceived importance of each project. Obviously, robust authentication, encryption, and sandboxing are necessary to prevent unauthorized access to systems and data within distributed systems that are meant to be inaccessible. If you take the ideal of a distributed worldwide grid to the extreme, it requires standards and protocols for dynamic discovery and interaction of resources in diverse network environments and among different distributed computing architectures. Most distributed computing solutions also include toolkits, libraries, and APIs for porting third-party applications to work with their platform, or for creating distributed computing applications from scratch.
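One way to picture such a policy is as an access table plus a sort key. The sketch below is hypothetical throughout: the `Job` fields, the ordering (user rank first, then deadline, then importance), and the `can_run` access table are assumptions chosen for illustration, not the policy model of any particular product.

```python
from typing import Dict, List, NamedTuple, Set, Tuple


class Job(NamedTuple):
    name: str
    user_rank: int         # assumed: lower number means higher rank
    deadline_hours: float  # assumed: hours until the result is due
    importance: int        # assumed: higher number means more important


def priority_key(job: Job) -> Tuple[int, float, int]:
    """Order by rank, then the nearest deadline, then the highest importance."""
    return (job.user_rank, job.deadline_hours, -job.importance)


def schedule(jobs: List[Job]) -> List[Job]:
    """Return jobs in the order the server would dispatch them."""
    return sorted(jobs, key=priority_key)


def can_run(user: str, system: str, access: Dict[str, Set[str]]) -> bool:
    """Check an administrator-defined table of which users may use which systems."""
    return system in access.get(user, set())
```

Changing the order of the fields in `priority_key` changes which criterion wins, which is the kind of decision the administrators described above would make.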

Comparisons and Other Trends

Distributed vs. Grid Computing

There are actually two similar trends moving in tandem: distributed computing and grid computing. Depending on how you look at the market, the two either overlap, or distributed computing is a subset of grid computing. Grid computing got its name because it strives for an ideal scenario in which the CPU cycles and storage of millions of systems across a worldwide network function as a flexible, readily accessible pool that could be harnessed by anyone who needs it. Sun defines a computational grid as "a hardware and software infrastructure that provides dependable, consistent, pervasive, and inexpensive access to computational capabilities." Grid computing can encompass desktop PCs, but more often than not its focus is on more powerful workstations, servers, and even mainframes and supercomputers working on problems involving huge datasets that can run for days. Grid computing also leans more toward dedicated systems than toward systems primarily used for other tasks.

Large-scale distributed computing of the variety we are covering usually refers to a similar concept, but is more geared to pooling the resources of hundreds or thousands of networked end-user PCs, which individually are more limited in their memory and processing power, and whose primary purpose is not distributed computing, but rather serving their user.

Distributed vs. Other Trends

Application Characteristics

Obviously not all applications are suitable for distributed computing. The closer an application gets to running in real time, the less appropriate it is. Even processing tasks that normally take an hour or two may not derive much benefit if the communications among distributed systems and the constantly changing availability of processing clients become a bottleneck. Instead you should think in terms of tasks that take hours, days, weeks, and months. Generally the most appropriate applications consist of "loosely coupled, non-sequential tasks in batch processes with a high compute-to-data ratio." The high compute-to-data ratio goes hand-in-hand with a high compute-to-communications ratio, as you don't want to bog down the network by sending large amounts of data to each client, though in some cases you can do so during off hours. Programs with large databases that can be easily parsed for distribution are very appropriate.

Clearly, any application with individual tasks that need access to huge data sets will be more appropriate for larger systems than individual PCs. If terabytes of data are involved, a supercomputer makes sense, as communications can take place across the system's very high speed backplane without bogging down the network. Server and other dedicated system clusters will be more appropriate for other, slightly less data-intensive applications. For a distributed application using numerous PCs, the required data should fit very comfortably in the PC's memory, with lots of room to spare.

Taking this further, it is recommended that the application be able to fully exploit "coarse-grained parallelism," meaning it should be possible to partition the application into independent tasks or processes that can be computed concurrently. For most solutions there should not be any need for communication between the tasks except at task boundaries, though Data Synapse allows some interprocess communications. The tasks and small blocks of data should be such that they can be processed effectively on a modern PC and report results that, when combined with other PCs' results, produce coherent output. And the individual tasks should be small enough to produce a result on these systems within a few hours to a few days.
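A back-of-the-envelope check of the compute-to-communications ratio can make this guidance concrete. The sketch below is an assumption-laden illustration: the function names are invented here, and the default threshold of 100 is an arbitrary placeholder rather than a figure from any vendor.

```python
def transfer_seconds(payload_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Time needed to ship a task's data to or from a client."""
    if bandwidth_bytes_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return payload_bytes / bandwidth_bytes_per_s


def compute_to_communication_ratio(
    compute_seconds: float, payload_bytes: float, bandwidth_bytes_per_s: float
) -> float:
    """Ratio of time spent computing to time spent moving data."""
    comm = transfer_seconds(payload_bytes, bandwidth_bytes_per_s)
    if comm == 0:
        return float("inf")  # no data to move at all
    return compute_seconds / comm


def looks_suitable(ratio: float, threshold: float = 100.0) -> bool:
    """Crude screen; the threshold is an assumed, adjustable cutoff."""
    return ratio >= threshold
```

For example, a task that computes for two hours (7,200 seconds) on 10 MB of data sent over a 1 MB/s link spends 10 seconds on transfer, giving a ratio of 720. A one-minute task on 600 MB over the same link gives a ratio of 0.1, so the network rather than the CPU would dominate.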

Types of Distributed Computing Applications

The following scenarios are examples of types of application tasks that can be set up to take advantage of distributed computing.

A query search against a huge database that can be split across lots of desktops, with the submitted query running concurrently against each fragment on each desktop.

Complex modeling and simulation techniques that increase the accuracy of results by increasing the number of random trials would also be appropriate, as trials could be run concurrently on many desktops, and combined to achieve greater statistical significance (this is a common method used in various types of financial risk analysis).

Exhaustive search techniques that require searching through a huge number of results to find solutions to a problem also make sense. Drug screening is a prime example.

Complex financial modeling, weather forecasting, and geophysical exploration are on the radar screens of the vendors, as well as car crash and other complex simulations.

Many of today's vendors are aiming squarely at the life sciences market, which has a sudden need for massive computing power. Pharmaceutical firms have repositories of millions of different molecules and compounds, some of which may have characteristics that make them appropriate for inhibiting newly found proteins. The process of matching all these ligands to their appropriate targets is an ideal task for distributed computing, and the quicker it's done, the quicker and greater the benefits will be.
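The random-trial scenario in the list above lends itself to a compact sketch. Each desktop runs an independent batch of trials with its own seed, and the server merges the counts. Estimating pi is used here purely as a stand-in for a financial risk model, and the names `run_trials` and `combine` are assumptions made for this example.

```python
import random
from typing import List, Tuple


def run_trials(seed: int, trials: int) -> Tuple[int, int]:
    """One desktop's share: count random points that land in the unit quarter circle."""
    rng = random.Random(seed)  # a separate seed per client keeps batches independent
    hits = 0
    for _ in range(trials):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            hits += 1
    return hits, trials


def combine(partials: List[Tuple[int, int]]) -> float:
    """Server side: pool every client's counts into a single estimate of pi."""
    total_hits = sum(hits for hits, _ in partials)
    total_trials = sum(trials for _, trials in partials)
    if total_trials == 0:
        raise ValueError("no trials were run")
    return 4.0 * total_hits / total_trials
```

Because the batches are independent, adding more desktops simply adds more trials to the pool, which narrows the statistical error of the combined estimate.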

Security and Standards Challenges

The major challenges come with increasing scale. As soon as you move outside of a corporate firewall, security and standardization challenges become quite significant. Most of today's vendors currently specialize in applications that stop at the corporate firewall, though Avaki, in particular, is staking out the global grid territory. Beyond spanning firewalls with a single platform lies the challenge of spanning multiple firewalls and platforms, which means standards.

Most of the current platforms offer high-level encryption such as Triple DES. The application packages that are sent to PCs are digitally signed, to make sure a rogue application does not infiltrate a system. Avaki comes with its own PKI (public key infrastructure). Identical application packages are typically sent to multiple PCs and the results of each are compared. Any set of results that differs from the rest becomes security suspect. Even with encryption, data can still be snooped when the process is running in the client's memory, so most platforms create application data chunks that are so small that it is unlikely snooping them will provide useful information. Avaki claims that it integrates easily with different existing security infrastructures and can facilitate the communications among them, but this is obviously a challenge for global distributed computing.

Working out standards for communications among platforms is part of the typical chaos that occurs early in any relatively new technology. In the generalized peer-to-peer realm lies the Peer-to-Peer Working Group, started by Intel, which is looking to devise standards for communications among many different types of peer-to-peer platforms, including those that are used for edge services and collaboration. The Global Grid Forum is a collection of about 200 companies looking to devise grid computing standards. Then you have vendor-specific efforts such as Sun's open source JXTA platform, which provides a collection of protocols and services that allows peers to advertise themselves to and communicate with each other securely. JXTA has a lot in common with JINI, but is not Java specific (though the first version is Java based). Intel recently released its own peer-to-peer middleware, the Intel Peer-to-Peer Accelerator Kit for Microsoft .NET, also designed for discovery, and based on the Microsoft .NET platform.
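The redundancy check mentioned above, in which identical packages go to several PCs and any odd result becomes suspect, can be sketched as a simple majority vote. The function name `cross_check` and the strict-majority rule are assumptions for this illustration; real platforms may compare results in other ways.

```python
from collections import Counter
from typing import Dict, Hashable, List, Tuple


def cross_check(results: Dict[str, Hashable]) -> Tuple[Hashable, List[str]]:
    """Return the majority result and the clients whose answers differ from it."""
    if not results:
        raise ValueError("no results to compare")
    counts = Counter(results.values())
    consensus, votes = counts.most_common(1)[0]
    if votes <= len(results) / 2:
        # Without a strict majority nothing can be trusted; rerun the package.
        raise RuntimeError("no majority result")
    suspects = sorted(client for client, value in results.items() if value != consensus)
    return consensus, suspects
```

For instance, if three PCs report 42, 42, and 41 for the same package, the server would accept 42 and flag the third machine for follow-up.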

Conclusion

The advantages of this type of architecture for the right kinds of applications are impressive. The most obvious is the ability to provide access to supercomputer-level processing power or better for a fraction of the cost of a typical supercomputer.

Scalability is also a great advantage of distributed computing. Though they provide massive processing power, supercomputers are typically not very scalable once they're installed. A distributed computing installation scales far more easily: in principle, you simply add more systems to the environment. In a corporate distributed computing setting, systems might be added within or beyond the corporate firewall. For today, however, the specific promise of distributed computing lies mostly in harnessing the system resources that lie within the firewall. It will take years before the systems on the Net share compute resources as effortlessly as they share information.

Bibliography

www.google.com
http://www.extremetech.com/
Distributed Systems: Architecture and Implementation, B. W. Lampson, M. Paul
Distributed Systems: Methods and Tools for Specification, An Advanced Course, Springer-Verlag
Operating Systems, Tanenbaum
