Problem E: Huffman Codes

Uploaded by

vivekoberoi

Problem E

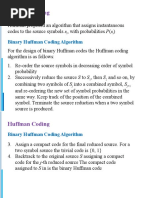

Huffman Codes

Input file: huffman.in

Dan McAmbi is a member of a crack counter-espionage team and has recently obtained the partial contents of a file containing information vital to his nation's interests. The file had been compressed using Huffman encoding. Unfortunately, the part of the file that Dan has shows only the Huffman codes themselves, not the compressed information. Since Huffman codes are based on the frequencies of the characters in the original message, Dan's boss thinks that some information might be obtained if Dan can reverse the Huffman encoding process and obtain the character frequencies from the Huffman codes. Dan's gut reaction to this is that any given set of codes could be obtained from a wide variety of frequency distributions, but his boss is not impressed with this reasoned analysis. So Dan has come to you to get more definitive proof to take back to his boss.

Huffman encoding is an optimal data compression method if you know in advance the relative frequencies of letters in the text to be compressed. The method works by first constructing a Huffman tree as follows. Start with a forest of trees, each tree a single node containing a character from the text and its frequency (the character value is used only in the leaves of the resulting tree). Each step of the construction algorithm takes the two trees with the lowest frequency values (choosing arbitrarily if there are ties), and replaces them with a new tree formed by joining the two trees as the left and right subtrees of a new root node. The frequency value of the new root is the sum of the frequencies of the two subtrees. This procedure repeats until only one tree is left. An example of this is shown below, assuming we have a file with only 5 characters A, B, C, D and E with frequencies 10%, 14%, 31%, 25% and 20%, respectively.
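The construction just described can be sketched in Python with a priority queue. This is a minimal illustration, not a reference solution: the function names `build_huffman_tree` and `merge_sums` and the nested-tuple tree representation are assumptions made for this sketch, and ties are broken by insertion order, which is only one of the arbitrary choices the description allows.

```python
import heapq
from itertools import count


def build_huffman_tree(freqs):
    """Build a Huffman tree from a {character: frequency} mapping.

    A leaf is the tuple (frequency, character); an internal node is
    (frequency, left_subtree, right_subtree). Hypothetical helper.
    """
    tiebreak = count()  # unique counter so tied frequencies never compare trees
    heap = [(f, next(tiebreak), (f, ch)) for ch, f in freqs.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        # Take the two trees with the lowest frequency values.
        f1, _, t1 = heapq.heappop(heap)
        f2, _, t2 = heapq.heappop(heap)
        # Join them under a new root whose frequency is their sum.
        # Placing the first-popped tree on the left is a choice of this sketch.
        merged = (f1 + f2, t1, t2)
        heapq.heappush(heap, (f1 + f2, next(tiebreak), merged))
    return heap[0][2]


def merge_sums(tree):
    """Return the internal-node frequencies, children before parents."""
    if len(tree) == 2:  # leaf: (frequency, character)
        return []
    _, left, right = tree
    return merge_sums(left) + merge_sums(right) + [tree[0]]


example = {"A": 10, "B": 14, "C": 31, "D": 25, "E": 20}
root = build_huffman_tree(example)
print(root[0])           # frequency stored at the root
print(merge_sums(root))  # frequencies of the internal nodes
```

For the five-character example, the merges are A+B = 24, then E+(A,B) = 44, then D+C = 56, and finally 44+56 = 100, so the root carries the full 100%.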

After you have constructed a Huffman tree, assign the Huffman codes to the characters as follows. Label each left branch of the tree with a 0 and each right branch with a 1. Reading down from the root to each character gives the Huffman code for that character. The tree above results in the following Huffman codes: A - 010, B - 011, C - 11, D - 10 and E - 00.

For the purpose of this problem, the tree with the lower frequency always becomes the left subtree of the new tree. If both trees have the same frequency, either of the two trees can be chosen as the left subtree. Note that this means that for some frequency distributions, there are several valid Huffman encodings. Conversely, the same Huffman encoding can be obtained from several different frequency distributions: change 14% to 13% and 31% to 32%, and you still get the same tree and thus the same codes.

Dan wants you to write a program to determine the total number of distinct ways you could get a given Huffman encoding, assuming that all percentages are positive integers. Note that two frequency distributions that differ only in the ordering of their percentages (for example 30% 70% for one distribution and 70% 30% for another) are not distinct.

Input

The input consists of several test cases. Each test case consists of a single line starting with a positive integer n (2 ≤ n ≤ 20), which is the number of different characters in the compressed document, followed by n binary strings giving the Huffman encoding of each character. You may assume that these strings are indeed a Huffman encoding of some frequency distribution (though under our additional assumptions, it may still be the case that the answer is 0; see the last sample case below). The last test case is followed by a line containing a single zero.

Output

For each test case, print a line containing the test case number (beginning with 1) followed by the number of distinct frequency distributions that could result in the given Huffman codes.

Sample Input

5 010 011 11 10 00
8 00 010 011 10 1100 11010 11011 111
8 1 01 001 0001 00001 000001 0000001 0000000
0

Output for the Sample Input

Case 1: 3035
Case 2: 11914
Case 3: 0
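As a sanity check on the code-assignment rule, the following Python sketch derives the codes for one frequency distribution, putting the lower-frequency tree on the left (branch 0) and the other on the right (branch 1). It is not a solution to the counting problem. The function name `huffman_codes` is hypothetical, and ties are resolved by insertion order, which is only one of the valid choices the statement permits.

```python
import heapq
from itertools import count


def huffman_codes(freqs):
    """Return {character: code} for a {character: frequency} mapping.

    Each heap entry carries the codes built so far for the characters in
    that subtree; merging prepends one more bit. Hypothetical helper.
    """
    tiebreak = count()  # keeps entries comparable when frequencies tie
    heap = [(f, next(tiebreak), {ch: ""}) for ch, f in freqs.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        f_low, _, low = heapq.heappop(heap)     # lower frequency: left, bit 0
        f_high, _, high = heapq.heappop(heap)   # higher frequency: right, bit 1
        merged = {ch: "0" + code for ch, code in low.items()}
        merged.update({ch: "1" + code for ch, code in high.items()})
        heapq.heappush(heap, (f_low + f_high, next(tiebreak), merged))
    return heap[0][2]


original = {"A": 10, "B": 14, "C": 31, "D": 25, "E": 20}
shifted = {"A": 10, "B": 13, "C": 32, "D": 25, "E": 20}  # 14% -> 13%, 31% -> 32%

codes_original = huffman_codes(original)
codes_shifted = huffman_codes(shifted)
print(codes_original)
print(codes_original == codes_shifted)
```

Both distributions produce the codes from the first sample case (A - 010, B - 011, C - 11, D - 10, E - 00), which illustrates why one encoding can correspond to many frequency distributions.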
