JOURNAL OF THE EXPERIMENTAL ANALYSIS OF BEHAVIOR

1982, 37, 149-155

NUMBER 1 (JANUARY)

DISTINGUISHING BETWEEN DISCRIMINATIVE AND MOTIVATIONAL FUNCTIONS OF STIMULI

JACK MICHAEL

WESTERN MICHIGAN UNIVERSITY

A discriminative stimulus is a stimulus condition which, (1) given the momentary effectiveness of some particular type of reinforcement (2) increases the frequency of a particular type of response (3) because that stimulus condition has been correlated with an increase in the frequency with which that type of response has been followed by that type of reinforcement. Operations such as deprivation have two different effects on behavior. One is to increase the effectiveness of some object or event as reinforcement, and the other is to evoke the behavior that has in the past been followed by that object or event. "Establishing operation" is suggested as a general term for operations having these two effects. A number of situations involve what is generally assumed to be a discriminative stimulus relation, but with the third defining characteristic of the discriminative stimulus absent. Here the stimulus change functions more like an establishing operation than a discriminative stimulus, and the new term, "establishing stimulus," is suggested. There are three other possible approaches to this terminological problem, but none are entirely satisfactory.

Key words: stimulus control, establishing operation, establishing stimulus, deprivation, reinforcement

BACKGROUND CONCEPTS

Technical Terminology

Skinner (1957, p. 430) described a three-stage process in the development of scientific verbal behavior: first, more effective forms of verbal behavior are discovered; these are then explicitly adopted and encouraged by the relevant technical and scientific community; finally, these verbal practices are themselves critically examined and altered to overcome what seem to be inadequacies or limitations. This paper fits into the third stage of this process. Our way of talking about operant stimulus control seems to include but fails to distinguish between two quite different forms of control. We might improve our verbal practices by adopting a new technical term for one of these forms of control or at least by explicitly recognizing the problem. But before dealing with this issue it is necessary to refine the concept of the discriminative stimulus or SD and to review the way deprivation affects behavior.

The Discriminative Stimulus

It seems in keeping with current usage to describe the presentation of a discriminative stimulus, or the change from SΔ to SD, in terms of three defining features. It is a stimulus change which, (1) given the momentary effectiveness of some particular type of reinforcement¹ (2) increases the frequency of a particular type of response (3) because that stimulus change has been correlated with an increase in the frequency with which that type of response has been followed by that type of reinforcement. (Frequency of reinforcement is the most common variable used to develop the discriminative stimulus relation. However, this relation can also be developed on the basis of reinforcement quantity or quality, the delay to reinforcement, the response requirement or effort, and other variables.)

The analysis in this paper developed as a result of discussions with a number of colleagues and students but especially G. Dennehy, C. Cherpas, B. Fulton, B. Hesse, M. Minervini, L. Parrott, M. Peterson, M. Sundberg, P. Whitley, and K. Wright, who are also responsible for many improvements in the present manuscript. Reprints may be obtained from Jack Michael, Department of Psychology, Western Michigan University, Kalamazoo, Michigan 49008.

¹It would be more in keeping with current practice to make frequent use of the term "reinforcer," rather than "type of reinforcement" or "form of reinforcement," etc. However, I have argued in another paper (Michael, 1975) that "reinforcer" implies a static event, which cannot have the behavioral implications that "reinforcer" appears to have. "Reinforcement" properly implies stimulus change, and only change can function as a consequence for behavior. Therefore throughout this paper "reinforcer" is deliberately avoided.

The first feature is sometimes taken for granted but for the present purposes it is better to be explicit: SDs do not generally alter response frequency when the organism is satiated with respect to the type of reinforcement relevant to that SD. The second feature is important in distinguishing behavioral from cognitive accounts of stimulus control, where the stimulus supposedly "signals" the availability of reinforcement, without any direct implication for any particular type of behavior. Whereas the first two features describe the controlling relation once it has been developed, the third identifies the relevant history and thus makes possible a distinction between the operant discriminative stimulus and the unconditioned and conditioned stimuli of the respondent relation.

There are a number of situations involving what is generally taken to be an SD because the relation seems so obviously operant rather than respondent, but where the third defining feature is clearly absent. An attempt will be made to show that in some of these situations the stimulus change is functioning more like a motivational operation such as deprivation or aversive stimulation.

The Behavioral Effects of Deprivation

How does deprivation, of water for example, affect behavior? It is necessary to distinguish two quite different effects which cannot be easily derived from one another. One is an increase in the effectiveness of water as reinforcement for any new behavior which should happen to be followed by access to water. The other is an increase in the frequency of all behavior that has been reinforced with water and in this respect is like the evocative effect of an SD.

Operant behavior can thus be increased in frequency (evoked) in two different ways. Consider, for example, an organism that is at least somewhat water deprived and for which some class of responses has a history of water reinforcement. Assume further that the current stimulus conditions have been associated with a low, but nonzero, frequency of water reinforcement for those responses. Such responses can be made momentarily more frequent (1) by further depriving the organism of water, or (2) by changing to a situation where they have been more frequently followed by water reinforcement (the SD effect). The distinction between these two ways to evoke operant behavior is the basis for the suggestion of a new term which is the main point of the present paper.

The Need for a More General Term: the "Establishing Operation"

The term "deprivation" has been generally used for relations such as the one discussed above but does not adequately characterize many of them. Salt ingestion, perspiration, and blood loss have similar effects but cannot be accurately referred to as water deprivation. Aversive stimulation also establishes its absence as reinforcement and evokes the behavior that has in the past removed it. Likewise temperature changes away from the organism's normal thermal condition increase the effectiveness of changes in the opposite direction as reinforcement and also evoke behavior that has resulted in such changes.

Skinner explicitly identifies deprivation-satiation operations and aversive stimulation as motivational variables (1957, pp. 31-33 and also 212), and with the term "predisposition" (1953, p. 162) includes the so-called emotional operations in this collection. Again, the two different effects of such operations seem clear. For example, to be angry is, in part, to have one's behavior susceptible to reinforcement by signs of discomfort on the part of the person one is "angry at," and also to be engaging in behavior that has produced such effects. Likewise, "fear," from an operant point of view at least, seems to consist of an increased capacity for one's responses to be reinforced by the removal of certain stimuli plus the high frequency of behavior that has accomplished such removal.

A general term is needed for operations having these two effects on behavior. There is, of course, the traditional "motive" and "drive," but these terms have a number of disadvantages, not the least of which is the strong implication of a determining inner state. I have found "establishing operation" appropriate in its commitment to the environment, and by abbreviating it to EO one may achieve the convenience of a small word without losing the implications of the longer term. An establishing operation, then, is any change in the environment which alters the effectiveness of some object or event as reinforcement and simultaneously alters the momentary frequency of the behavior that has been followed by that reinforcement. (Students of J. R. Kantor may see some similarity to his term "setting factor," but I believe that his term includes operations that have a broader or less specific effect on behavior than the establishing operation as defined above.)

There is still a problem with this usage in that "establishing" implies only "increasing," but changes obviously occur in both directions. "Deprivation" has the same limitation and is usually accompanied by its opposite "satiation." It does not seem useful at this time to introduce the term "abolishing" to serve a similar function, so perhaps in the present context "establishing" should be taken to be short for "establishing or abolishing."

The value of a general term is more than just terminological convenience, however. The absence of such a term may have been responsible for some tendency to disregard such effects or to subsume them under other headings. For example, it is common to describe the basic operant procedure as a three-term relation involving stimulus, response, and consequence. Yet it is clear that such a relation is not in effect unless the relevant establishing operation is at an appropriate level. A stimulus that is correlated with increased frequency of water reinforcement for some class of responses will not evoke those responses if water is not currently effective as reinforcement.

Furthermore, aversive stimuli and other events like temperature changes that quickly evoke behavior may appear to be discriminative stimuli but should not be so considered, although the argument is somewhat complex. As mentioned earlier, in order for a stimulus to be considered a discriminative stimulus the differential frequency of responding in its presence as compared with its absence must be due to a history of differential reinforcement in its presence as compared with its absence.

Although it is not usually mentioned, there is in this requirement the further implication that the event or object which is functioning as reinforcement must have been equally effective as reinforcement in the absence as in the presence of the stimulus. It would not, for example, be considered appropriate discrimination training if during the presence of the SD, the organism was food deprived and received food as reinforcement for responding, but in the absence of the SD it was food satiated, and responding was not followed by food. Such training would be considered of dubious value in developing stimulus control because during the absence of the SD, the satiated organism's failure to receive food after the relevant response (if that response were to occur) could not easily be considered "unreinforced" responding, since at that time food would not be functioning as reinforcement. The type of differential reinforcement relevant to stimulus control ordinarily implies that in the presence of the stimulus, the organism receives the reinforcement with greater frequency than it receives the reinforcement in the absence of the stimulus. If in the absence of the stimulus, the critical event no longer functions as reinforcement, then receiving it at a lower frequency is not behaviorally equivalent to a "lower frequency of reinforcement."

This supplement to the definition of the SD relation is not usually mentioned simply because the SD-SΔ concepts were developed in a laboratory setting with food and water as reinforcement and with the SD and SΔ alternating during the same session. If food was reinforcing during the presence of the SD, it would generally be equally reinforcing during its absence. With establishing operations that affect behavior more quickly, however, this aspect of the definition becomes more critical.

Aversive stimulation is just such an establishing operation. Consider a typical shock-escape procedure. The organism is in a situation where the shock can be administered until some response, say a lever press, occurs. This escape response removes the shock for a period, then the shock comes on again, and so on. With a well-trained organism the shock onset evokes an immediate lever pressing response, and since the relation is obviously an operant one it might seem reasonable to refer to the shock as an SD for the lever press.
For the shock to be an SD, it must have been a stimulus in the presence of which the animal received more frequent reinforcement (in this case shock termination) than it received in its absence. But in the absence of the shock, failure to receive shock termination for the lever press is not properly considered a lower frequency of reinforcement. Unless the shock is on, shock termination is not behaviorally functional as a form of reinforcement, and the fact that the lever does not produce this effect is irrelevant. The shock, in this situation, is functioning more like an establishing operation, such as food deprivation, than like an SD. It evokes the escape response because it changes what functions as reinforcement rather than because it is correlated with a higher frequency of reinforcement.

It would be quite possible, of course, to contrive a proper discriminative stimulus in the escape situation. Let the escape response terminate shock only when a tone is sounding; the shock remains on irrespective of the animal's behavior when the tone is not sounding. Lever pressing is clearly unreinforced when the tone is off, and the tone is thus clearly an SD for lever pressing.

In summary, we could improve our verbal behavior about behavior if we could identify all environmental operations which alter the effectiveness of events as reinforcement with the same term, and especially if this term has also been explicitly linked to the evocative effects of such operations. "Establishing operation" might very well accomplish these purposes.

THE ESTABLISHING STIMULUS OR SE

Establishing Conditioned Reinforcement

Most of the establishing operations discussed so far have been the kind that alter the effectiveness of stimulus changes that can be classified as unconditioned reinforcement. Stimulus changes identified as conditioned reinforcement are also established as such by various operations. The most obvious are the same operations that establish the effectiveness of the relevant unconditioned reinforcement. A light correlated with food becomes effective conditioned reinforcement as a function of food deprivation. Information about the location of a restaurant becomes reinforcing when food becomes reinforcing.

There is, however, a common situation in which a stimulus change establishes another stimulus change as conditioned reinforcement without altering the effectiveness of the relevant unconditioned reinforcement. If the behavior which has previously obtained such conditioned reinforcement now becomes strong we have an evocative relation like that produced by an establishing operation but where the effect depends upon an organism's individual history rather than the history of the species. I would like to suggest the term "establishing stimulus" and SE for this relation.

General Conditions for the Establishing Stimulus

The circumstances for an establishing stimulus (Figure 1) involve a stimulus change, S1, which functions as a discriminative stimulus for a response, R1, but under circumstances where that response cannot be executed or cannot be reinforced until another stimulus change, S2, takes place. This second stimulus change, then, becomes effective as conditioned reinforcement, and the behavior that has in the past achieved this second stimulus change, R2, is evoked. S1, then, is an SD for R1, but an SE for R2.

[Figure 1: diagram. S1 (SD) evokes R1, but R1 cannot occur successfully without S2; S1 (SE) establishes S2 as Sr and evokes R2.]

Fig. 1. General condition for an establishing stimulus. S1, functioning as an SD, evokes R1, but this response cannot occur or cannot be reinforced without the presence of S2. Thus S1 also functions as an SE, establishing S2 as a form of conditioned reinforcement and at the same time evoking R2, which has previously produced S2.

A Human Example

Suppose that an electrician is prying a face plate off a piece of equipment which must be removed from the wall. The electrician's assistant is nearby with the tool box. The removal of the face plate reveals that the equipment is fastened to the wall with a slotted screw. We can consider the slotted screw under the present circumstances to be a discriminative stimulus (S1) for the use of a screw driver in removing the screw (R1). (The reinforcement of this behavior is, of course, related to the electrician's job. When the wall fixture is removed and a new one applied, payment for the job may become available, etc.) But removing the screw is not possible without an appropriate screw driver (S2). So the electrician turns to the assistant and says "screw driver" (R2). It is the occurrence of this response which illustrates a new type of evocative effect.

It is reasonable to consider the offered screw driver as the reinforcement of the request, and the history of such reinforcement to be the basis for the occurrence of this request under the present circumstances. It might also seem reasonable to consider the sight of the slotted screw as an SD for this response, but here we should be cautious. If it is proper to restrict the definition of the SD to a stimulus in the presence of which the relevant behavior has been more frequently reinforced, then the present example does not qualify. A slotted screw is not a stimulus that is correlated with an increased frequency of obtaining screw drivers. Electricians' assistants generally provide requested tools irrespective of the use to which they will be put. The presence and attention of the assistant are SDs correlated with successful asking, but the slotted screw is not. The slotted screw is, however, an SD for unscrewing responses (a stimulus in the presence of which unscrewing responses, with the proper tool, are correlated with more frequent screw removal), but not for asking for screw drivers. The evocative effect of the slotted screw on asking behavior is more like the evocative effect of an establishing operation than that of an SD, except for its dependence on the organism's individual history. The slotted screw is better considered an establishing stimulus for asking, not a discriminative stimulus.
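The distinctions drawn so far can be summarized as a small decision procedure. The following is only an illustrative sketch in Python of how the three evocative relations discussed above might be told apart. The class `EvocativeRelation`, its field names, and the function `classify` are hypothetical names introduced for this illustration; they are not part of the terminology proposed in the paper, and the three yes/no questions are a simplification of the argument.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EvocativeRelation:
    """One stimulus change evoking one response (hypothetical, illustrative)."""

    # Has the response been reinforced more frequently in the presence of
    # the stimulus than in its absence (third defining feature of the SD)?
    correlated_with_more_frequent_reinforcement: bool
    # Does the stimulus change alter how effective the consequence is
    # as reinforcement?
    alters_reinforcing_effectiveness: bool
    # Does that alteration depend on the individual's own history
    # (conditioned reinforcement) rather than the history of the species?
    depends_on_individual_history: bool


def classify(relation: EvocativeRelation) -> str:
    """Return 'SD', 'EO', or 'SE' under the simplifying assumptions above."""
    if relation.correlated_with_more_frequent_reinforcement:
        return "SD"
    if relation.alters_reinforcing_effectiveness:
        return "SE" if relation.depends_on_individual_history else "EO"
    return "unclassified"


# Examples taken from the text.
tone_for_lever_press = EvocativeRelation(True, False, False)
shock_onset_for_lever_press = EvocativeRelation(False, True, False)
slotted_screw_for_asking = EvocativeRelation(False, True, True)

print(classify(tone_for_lever_press))         # SD
print(classify(shock_onset_for_lever_press))  # EO
print(classify(slotted_screw_for_asking))     # SE
```

Note that the classification applies to a stimulus-response pair rather than to a stimulus alone: the same slotted screw would be described as an SD for unscrewing and as an SE for asking.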

An Animal Analogue

It is not difficult to describe an SE situation in the context of an animal experiment, but first it is necessary to describe two secondary features of the human situation giving rise to this concept. First, it must be possible to produce the second stimulus change (S2) at any time and not just after the first stimulus change (S1). Otherwise we are dealing with simple chaining. Furthermore, the second stimulus change should not be one which once achieved remains effective indefinitely with no further effect or cost to the organism, or it will become a standard form of preparatory behavior. In the electrician's situation, if there were some tool that was used on a high proportion of activities it would be kept always available. On the other hand, to ask for a tool when it is not needed would result in the work area being cluttered with unneeded tools (assume a small or crowded work area, etc.). This second feature would seem usually to involve some form of punishment for R2 that prevents it until S1 has made it necessary.

Now, for the animal analogue consider a food-deprived monkey in a chamber with a chain hanging from the ceiling and a retractable lever. Pulling the chain moves the lever into the chamber. Pressing the lever has no effect unless a light on the wall is on, in which case a lever press dispenses a food pellet. To prevent the chain pull from functioning as a standard preparatory component of the behavioral sequence, we could require that the chain be held in a pulled condition, or we could arrange that each chain pull makes the lever available for only a limited period, say five seconds. In either case we would expect a well-trained monkey ultimately to display the following repertoire: while the wall light is off (before the electrician has seen the slotted screw), the chain pull does not occur (a screw driver is not requested), even though it would produce the lever (even though the assistant would provide one). When the light comes on (when the slotted screw is observed), the monkey pulls the chain (the electrician requests the screw driver) and then presses the lever (and then unscrews the screw) and eats the food pellet that is delivered (and removes the piece of equipment, finishes the job, etc.).

Returning to Figure 1, S1 is the onset of the wall light, which evokes lever pressing (R1). Lever pressing, however, cannot occur without the lever (S2), and thus the availability of the lever becomes an effective form of reinforcement once the wall light is on. The chain pull is R2, evoked by the light functioning as an SE rather than as an SD because the light is not correlated with more frequent lever availability (the reinforcement of the chain pull) but rather with greater effectiveness of the lever as a form of reinforcement.
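The contingencies of the animal analogue can be written out as a toy program. The sketch below is an assumption-laden illustration, not a description of any actual apparatus: the class `Chamber`, its method names, and the discrete one-second clock are all hypothetical, and only the five-second lever window comes from the text. It shows the point of the example, namely that the chain pull produces the lever just as reliably with the light off as with it on, while the lever is worth having only when the light is on.

```python
class Chamber:
    """Toy model of the chain-pull and lever-press contingencies (hypothetical)."""

    LEVER_WINDOW = 5  # seconds of lever availability per chain pull (from the text)

    def __init__(self) -> None:
        self.light_on = False     # S1: the wall light
        self.lever_time_left = 0  # S2: seconds of lever availability remaining
        self.pellets = 0          # food pellets delivered so far

    def pull_chain(self) -> None:
        """R2: produces the lever whether or not the light is on."""
        self.lever_time_left = self.LEVER_WINDOW

    def press_lever(self) -> bool:
        """R1: needs the lever in the chamber; pays off only with the light on."""
        if self.lever_time_left <= 0:
            return False  # no lever present, so the press cannot occur
        if self.light_on:
            self.pellets += 1
            return True
        return False

    def tick(self, seconds: int = 1) -> None:
        """Advance the clock; the lever retracts when its window runs out."""
        self.lever_time_left = max(0, self.lever_time_left - seconds)


chamber = Chamber()

# Light off: the chain pull still produces the lever, but pressing earns nothing.
chamber.pull_chain()
lever_available_light_off = chamber.lever_time_left > 0
pellet_light_off = chamber.press_lever()
chamber.tick(5)

# Light on: pressing is impossible until the chain pull brings the lever in.
chamber.light_on = True
press_without_lever = chamber.press_lever()
chamber.pull_chain()
pellet_light_on = chamber.press_lever()

print(lever_available_light_off, pellet_light_off)  # True False
print(press_without_lever, pellet_light_on)         # False True
```

Because `pull_chain` behaves identically in both light conditions, the light cannot be said to be correlated with more frequent reinforcement of the chain pull; what changes with the light is whether the lever that the chain pull produces has any value.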

ALTERNATIVE SOLUTIONS

Larger Units of Behavior

It may seem reasonable to consider the response evoked by the SE to be simply an element in a chain of responses evoked by an SD. Thus, with the electrician, asking for the screw driver might be interpreted as a part of a larger unit of behavior evoked by the slotted screw and reinforced by successful removal of the screw. From this point of view, the SE concept might seem unnecessary. That is, the slotted screw could be considered a discriminative stimulus for the larger unit, which is more likely to achieve the removal of the screw in the presence of a slotted screw than in the presence of a wing nut, a hex nut, etc. This analysis is not too plausible, however, since it would imply that requests are not sensitive to their own immediate consequences but only to the more remote events related to the use of the requested item. But even if this were true, we would still have to account for the formation or acquisition of the large unit of behavior. This has usually involved reference to repeated occurrence of chains of smaller units (for example, Skinner, 1938, 52ff and 102ff; Keller & Schoenfeld, 1950, Chapter 7; Millenson, 1967, Chapter 12), and the initial element of this particular chain would require the SE concept. That is, we might be able to do without the SE in the analysis of the current function of a large unit but would need it to account for the first element of the chain of smaller units out of which the large unit was formed.

Conditional Conditioned Reinforcement

The notion that a form of conditioned reinforcement may be conditional upon the presence of another stimulus condition is quite reasonable and requires no new terminology. This could be referred to as conditional conditioned reinforcement, and the SE of the previous sections can be seen to be the type of stimulus upon which such conditioned reinforcement is conditional. This general approach, however, fails to implicate the evocative effect which is the main topic of the present paper and thus seems less satisfactory than the new terminology.

Retaining the SD by Complicating the Reinforcement

If we consider the chain pull to be reinforced, not by the lever insertion into the chamber but by the more complex stimulus change from light on with lever out to light on with lever in, we may be able to retain the notion of the light onset as an SD for the chain pull, because the more complex stimulus change can only be produced when the light is on. This involves adding a static component to our reinforcing stimulus change, which may be theoretically sound, but is certainly not the usual way we talk about reinforcement. In the human example this would mean that the screw driver does not reinforce the request, but rather the change from looking at a slotted screw without a screw driver in hand to looking at one with a screw driver in hand. As with conditional conditioned reinforcement this is not an issue regarding the facts of behavior, but rather our verbal behavior concerning these facts. Will we be more effective in intellectual and practical ways by introducing a new stimulus function and retaining a simple form of verbal behavior about reinforcement, or will we be better off retaining the SD interpretation for both types of evocation but complicating our interpretation of reinforcement for one of them? I clearly favor the former.

SUMMARY

In everyday language we can and often do distinguish between changing people's behavior by changing what they want and changing their behavior by changing their chances of getting something that they already want. Our technical terminology also makes such a distinction, but only in the case of establishing operations such as deprivation and those kinds of reinforcing events called "unconditioned." Much more common are those stimulus changes which alter the reinforcing effectiveness of events ordinarily referred to as conditioned reinforcement, and which evoke the behavior that has previously produced this reinforcement. We do not have a convenient way of referring to such stimulus changes, and because of this they may be subsumed under the heading of discriminative stimuli. I have suggested the term "establishing stimulus" for such events, thus linking them with establishing operations such as deprivation, and, I hope, suggesting the relation to the individual's history by the replacement of "operation" with "stimulus."

REFERENCES

Keller, F. S., & Schoenfeld, W. N. Principles of psychology. New York: Appleton-Century-Crofts, 1950.

Michael, J. L. Positive and negative reinforcement, a distinction that is no longer necessary; or a better way to talk about bad things. Behaviorism, 1975, 3, 33-44.

Millenson, J. R. Principles of behavioral analysis. New York: Macmillan, 1967.

Skinner, B. F. The behavior of organisms. New York: Appleton-Century-Crofts, 1938.

Skinner, B. F. Science and human behavior. New York: Macmillan, 1953.

Skinner, B. F. Verbal behavior. New York: Appleton-Century-Crofts, 1957.

Received September 22, 1980

Final acceptance August 31, 1981


- The Female Athlete Triad.26Document16 pagesThe Female Athlete Triad.26JohanNo ratings yet

- Animal Behaviour & Ethology Avneesh BishnoiDocument20 pagesAnimal Behaviour & Ethology Avneesh BishnoiAishwary SinghNo ratings yet

- Skinner BehaviorismDocument20 pagesSkinner BehaviorismAcklinda LiuNo ratings yet

- Dinsmoor 1995aDocument18 pagesDinsmoor 1995ajsaccuzzoNo ratings yet

- Textbook Survey Results 201102Document102 pagesTextbook Survey Results 201102tokionas100% (1)

- Rts Notes PDFDocument2 pagesRts Notes PDFalphaprogNo ratings yet

- Descartes - Meditations II and VI PDFDocument13 pagesDescartes - Meditations II and VI PDFnmoverley100% (1)

- Biomechanics: Dr. Jewelson M. SantosDocument29 pagesBiomechanics: Dr. Jewelson M. SantosJewelson SantosNo ratings yet

- The Clinical Interview and AssessmentDocument6 pagesThe Clinical Interview and AssessmentIoana Antonesi100% (1)

- Obsessive Compulsive DisorderDocument3 pagesObsessive Compulsive DisorderFlora Angeli PastoresNo ratings yet

- Musculoskeletal Insight EngineDocument11 pagesMusculoskeletal Insight EnginePatricia Ruiz ZamudioNo ratings yet

- Self Regulation-Brain - Cognition - and - Development PDFDocument149 pagesSelf Regulation-Brain - Cognition - and - Development PDFn rike100% (1)

- Evidence Base For DIR 2020Document13 pagesEvidence Base For DIR 2020MP MartinsNo ratings yet

- Goldiamon - Toward A Constructional Approach To Social Problems Ethical and Constitutional Issues Raised by Applied Behavior Analysis PDFDocument90 pagesGoldiamon - Toward A Constructional Approach To Social Problems Ethical and Constitutional Issues Raised by Applied Behavior Analysis PDFPamelaLiraNo ratings yet

- (Eisenberger) (2014) (ARP) (T) Social Pain Brain PDFDocument32 pages(Eisenberger) (2014) (ARP) (T) Social Pain Brain PDFbyominNo ratings yet

- Mental Skills Training For Sports - A Brief Review - Luke BehnckeDocument19 pagesMental Skills Training For Sports - A Brief Review - Luke BehnckedoublepazzNo ratings yet

- Psychology Study Guide, 8th EditionDocument476 pagesPsychology Study Guide, 8th EditionJoseph Jones67% (3)

- Behaviorism and Mind: B. F. SkinnerDocument15 pagesBehaviorism and Mind: B. F. SkinnerDavid DennenNo ratings yet

- Operant Conditioning (Staddon & Cerutti, 2003)Document33 pagesOperant Conditioning (Staddon & Cerutti, 2003)caelus72No ratings yet

- Wilson Jessica ResumeDocument2 pagesWilson Jessica Resumeapi-540125872No ratings yet

- My Five Year PlanDocument2 pagesMy Five Year Planapi-408271616No ratings yet

- B.F. Skinner BiographyDocument11 pagesB.F. Skinner BiographyDurgaA'dTdnNo ratings yet

- TI Activity 3 Managing The ClassroomDocument9 pagesTI Activity 3 Managing The ClassroomMargarita LovidadNo ratings yet

- The Cognitive Perspective On LearningDocument11 pagesThe Cognitive Perspective On Learningazmal100% (1)

- FS100: Observation of Teaching-Learning in Actual School EnvironmentDocument7 pagesFS100: Observation of Teaching-Learning in Actual School Environmentnikko candaNo ratings yet

- DLL Fbs Jul 16 - 20Document2 pagesDLL Fbs Jul 16 - 20Lhey Vilar100% (21)

- Kra 2 Objective 5 For QualityefficiencyDocument2 pagesKra 2 Objective 5 For QualityefficiencyJR BalleNo ratings yet

- Training Proposal For Effective Classroom Management and Discipline FinalDocument11 pagesTraining Proposal For Effective Classroom Management and Discipline FinalTrisha Mae Bocabo100% (1)

- Superstition in The Pigeon by B.F. SkinnerDocument10 pagesSuperstition in The Pigeon by B.F. SkinnerDavid100% (1)

- KrumboltzDocument2 pagesKrumboltzFaizura RohaizadNo ratings yet

- SPPP1042 Unit 3 Instructional DesignDocument48 pagesSPPP1042 Unit 3 Instructional DesignAziz JuhanNo ratings yet

- Reflection PaperDocument3 pagesReflection Papermaysam gharibNo ratings yet

- FIELD STUDY 1 Ep. 7Document9 pagesFIELD STUDY 1 Ep. 7Jamille Nympha C. BalasiNo ratings yet

- Behaviorism Vs Constructivism A Paradigm Shift From Traditional To Alternative Assessment TechniquesDocument15 pagesBehaviorism Vs Constructivism A Paradigm Shift From Traditional To Alternative Assessment TechniquesDimas PutraNo ratings yet

- BPT1501 Assignment 3Document5 pagesBPT1501 Assignment 3Anime LoverNo ratings yet

- Kolehiyo NG Lungsod NG Lipa: College of Teacher EducationDocument37 pagesKolehiyo NG Lungsod NG Lipa: College of Teacher EducationJonas Landicho MagsinoNo ratings yet

- Chapter 15 - Managing EmployeesDocument7 pagesChapter 15 - Managing EmployeesUsman NadeemNo ratings yet

- Dog Behaviour and Training Module 1 Research NotesDocument10 pagesDog Behaviour and Training Module 1 Research NotesKarin MihaljevicNo ratings yet

- Sivaraman (2017) Empathy SkillsDocument10 pagesSivaraman (2017) Empathy SkillsJohnNo ratings yet

- Grade 1 Paragraph FeaturesDocument4 pagesGrade 1 Paragraph FeaturesJanetteMaribbayPasicolanNo ratings yet

- Chapter 7-8Document3 pagesChapter 7-8Maria TMLNo ratings yet

- Social Learning Theory With Cover Page v2Document7 pagesSocial Learning Theory With Cover Page v2TARUN kumarNo ratings yet

- Organizational Behavior Summary Chapter 7 Motivation ConceptDocument6 pagesOrganizational Behavior Summary Chapter 7 Motivation Concept34. Tiansy Annisa BurhansyahNo ratings yet

- Important Note: - The Information Shared Is For Educational Purposes - Any Further Share/Forward/Copy/Edit Is StrictlyDocument73 pagesImportant Note: - The Information Shared Is For Educational Purposes - Any Further Share/Forward/Copy/Edit Is Strictlymy driveNo ratings yet

- Learning theories overviewDocument6 pagesLearning theories overviewBEA CASSANDRA BELCOURTNo ratings yet

- Schrag, Peter - Mind Control (1978) PDFDocument332 pagesSchrag, Peter - Mind Control (1978) PDFRupomir Sajberski0% (1)

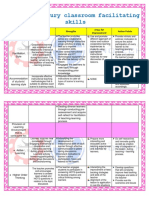
