Time Series Models

DJS 23/08/2012

Topics

Stochastic processes

Stationarity

White noise

Random walk

Moving average processes

Autoregressive processes

More general processes

Stochastic Processes 1

Stochastic processes

Time series are an example of a stochastic or random process

A stochastic process is 'a statistical phenomenon that evolves in time according to probabilistic laws'

Mathematically, a stochastic process is an indexed collection of random variables

$$\{X_t : t \in T\}$$

Stochastic processes

We are concerned only with processes indexed by time, either discrete time or continuous time processes such as

$$\{X_t : t \in (-\infty, \infty)\} = \{X_t : -\infty < t < \infty\}$$

or

$$\{X_t : t \in \{0, 1, 2, \ldots\}\} = \{X_0, X_1, X_2, \ldots\}$$

Inference

We usually base our inference on a single observation or realization of the process over some period of time, say [0, T] (a continuous interval of time) or at a sequence of time points {0, 1, 2, ..., T}

Specification of a process

To describe a stochastic process fully, we must specify all the finite-dimensional distributions, i.e. the joint distribution of the random variables for any finite set of times

$$\{X_{t_1}, X_{t_2}, X_{t_3}, \ldots, X_{t_n}\}$$

Specification of a process

A simpler approach is to specify only the moments; this is sufficient if all the joint distributions are normal

The mean and variance functions are given by

$$\mu_t = E(X_t), \quad \sigma_t^2 = \mathrm{Var}(X_t)$$

Autocovariance

Because the random variables comprising the process are not independent, we must also specify their covariance

$$\gamma_{t_1,t_2} = \mathrm{Cov}(X_{t_1}, X_{t_2})$$

Stationarity 2

Stationarity

Inference is easiest when a process is stationary, i.e. its distribution does not change over time

This is strict stationarity

A process is weakly stationary if its mean and autocovariance functions do not change over time

Weak stationarity

The autocovariance depends only on the time difference or lag between the two time points involved

$$\mu_t = \mu, \quad \sigma_t^2 = \sigma^2$$

and

$$\gamma_{t_1,t_2} = \mathrm{Cov}(X_{t_1}, X_{t_2}) = \mathrm{Cov}(X_{t_1+\tau}, X_{t_2+\tau}) = \gamma_{t_1+\tau,\,t_2+\tau} = \gamma_{t_1-t_2}$$

Autocorrelation

It is useful to standardize the autocovariance function (acvf)

Consider stationary case only

Use the autocorrelation function (acf)

$$\rho_\tau = \gamma_\tau / \gamma_0$$

Autocorrelation

More than one process can have the same acf

Properties are:

$$\rho_0 = 1, \quad \rho_{-\tau} = \rho_\tau, \quad |\rho_\tau| \le 1$$

White Noise 3

White noise

This is a purely random process, a sequence of independent and identically distributed random variables

Has constant mean and variance

Also

$$\gamma_k = \mathrm{Cov}(Z_t, Z_{t+k}) = 0, \quad k \ne 0$$

$$\rho_k = \begin{cases} 1 & k = 0 \\ 0 & k \ne 0 \end{cases}$$

Random Walk 3

Random walk

Start with {Zt} being white noise or purely random

{Xt} is a random walk if

$$X_0 = 0, \quad X_t = X_{t-1} + Z_t = \sum_{k=1}^{t} Z_k$$

Random walk

The random walk is not stationary: with $\mu$ and $\sigma^2$ the mean and variance of Zt,

$$E(X_t) = t\mu, \quad \mathrm{Var}(X_t) = t\sigma^2$$

First differences are stationary

$$\nabla X_t = X_t - X_{t-1} = Z_t$$
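
The definition and the differencing step can be checked numerically. The following is a minimal sketch using only the Python standard library; the function names, the seed and the choice of Gaussian white noise are illustrative assumptions, not part of the slides.

```python
import random

def random_walk(z):
    """Cumulative sums X_t = X_{t-1} + Z_t with X_0 = 0; returns [X_0, ..., X_n]."""
    x = [0.0]
    for step in z:
        x.append(x[-1] + step)
    return x

def first_difference(x):
    """Return the differenced series X_t - X_{t-1}."""
    return [b - a for a, b in zip(x, x[1:])]

rng = random.Random(0)  # fixed seed, an arbitrary choice for repeatability
z = [rng.gauss(0.0, 1.0) for _ in range(500)]  # Gaussian white noise (assumed)
x = random_walk(z)
dx = first_difference(x)  # recovers the white noise, up to rounding
```

Differencing the simulated walk returns the original noise sequence, which is the sense in which the first differences are stationary.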

Moving Average Processes 4

Moving average processes

Start with {Zt} being white noise or purely random, mean zero, s.d. $\sigma_Z$

{Xt} is a moving average process of order q (written MA(q)) if for some constants $\beta_0, \beta_1, \ldots, \beta_q$ we have

$$X_t = \beta_0 Z_t + \beta_1 Z_{t-1} + \ldots + \beta_q Z_{t-q}$$

Usually $\beta_0 = 1$

Moving average processes

The mean and variance are given by

$$E(X_t) = 0, \quad \mathrm{Var}(X_t) = \sigma_Z^2 \sum_{k=0}^{q} \beta_k^2$$

The process is weakly stationary because the mean is constant and the covariance does not depend on t

Moving average processes

If the Zt's are normal then so is the process, and it is then strictly stationary

The autocorrelation is

$$\rho_k = \begin{cases} 1 & k = 0 \\ \sum_{i=0}^{q-k} \beta_i \beta_{i+k} \Big/ \sum_{i=0}^{q} \beta_i^2 & k = 1, \ldots, q \\ 0 & k > q \\ \rho_{-k} & k < 0 \end{cases}$$

Moving average processes

Note the autocorrelation cuts off at lag q

For the MA(1) process with $\beta_0 = 1$

$$\rho_k = \begin{cases} 1 & k = 0 \\ \beta_1 / (1 + \beta_1^2) & k = \pm 1 \\ 0 & \text{otherwise} \end{cases}$$
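
The MA(q) autocorrelation formula translates directly into code. This is a small sketch under the slide's notation, with `beta` holding the constants from lag 0 to lag q; the function name `ma_acf` is a hypothetical helper introduced here for illustration.

```python
def ma_acf(beta, max_lag):
    """Theoretical autocorrelations rho_0, ..., rho_max_lag of an MA(q) process.

    beta = [beta_0, beta_1, ..., beta_q]; the noise variance cancels in the ratio.
    """
    q = len(beta) - 1
    denom = sum(b * b for b in beta)
    rho = []
    for k in range(max_lag + 1):
        if k > q:
            rho.append(0.0)  # the acf cuts off after lag q
        else:
            num = sum(beta[i] * beta[i + k] for i in range(q - k + 1))
            rho.append(num / denom)
    return rho

# MA(1) with beta_0 = 1 and beta_1 = 0.5: rho_1 = 0.5 / (1 + 0.25)
rho = ma_acf([1.0, 0.5], max_lag=3)
```

For the MA(1) case this reproduces the special formula above, and every lag beyond q comes out as zero.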

Moving average processes

In order to ensure there is a unique MA process for a given acf, we impose the condition of invertibility

This ensures that when the process is written in series form, the series converges

For the MA(1) process $X_t = Z_t + \theta Z_{t-1}$, the condition is $|\theta| < 1$
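
A quick numerical illustration of why invertibility is needed: in the MA(1) case, the coefficients $\theta$ and $1/\theta$ give the same lag-one autocorrelation, so the acf alone cannot distinguish them. The sketch below uses hypothetical helper names and example values chosen only for illustration.

```python
def ma1_rho1(theta):
    """Lag-one autocorrelation of X_t = Z_t + theta * Z_{t-1}."""
    return theta / (1.0 + theta * theta)

def ma1_is_invertible(theta):
    """Invertibility condition for the MA(1) process: |theta| < 1."""
    return abs(theta) < 1.0

rho_a = ma1_rho1(0.5)  # theta = 0.5
rho_b = ma1_rho1(2.0)  # theta = 1 / 0.5, same acf as above
```

Both values equal 0.4, but only the process with coefficient 0.5 satisfies the invertibility condition.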

Moving average processes

For general processes introduce the backward shift operator B

$$B^j X_t = X_{t-j}$$

Then the MA(q) process is given by

$$X_t = (\beta_0 + \beta_1 B + \beta_2 B^2 + \ldots + \beta_q B^q) Z_t = \theta(B) Z_t$$

Moving average processes

The general condition for invertibility is that all the roots of the equation $\theta(B) = 0$ lie outside the unit circle (have modulus greater than one)

Autoregressive Processes 4

Autoregressive processes

Assume {Zt} is purely random with mean zero and s.d. $\sigma_Z$

Then the autoregressive process of order p or AR(p) process is

$$X_t = \alpha_1 X_{t-1} + \alpha_2 X_{t-2} + \ldots + \alpha_p X_{t-p} + Z_t$$

Autoregressive processes

The first order autoregression is $X_t = \alpha X_{t-1} + Z_t$

Provided $|\alpha| < 1$ it may be written as an infinite order MA process

Using the backshift operator we have $(1 - \alpha B) X_t = Z_t$

Autoregressive processes

From the previous equation we have

$$X_t = Z_t / (1 - \alpha B) = (1 + \alpha B + \alpha^2 B^2 + \ldots) Z_t = Z_t + \alpha Z_{t-1} + \alpha^2 Z_{t-2} + \ldots$$

Autoregressive processes

Then E(Xt) = 0, and if $|\alpha| < 1$

$$\mathrm{Var}(X_t) = \sigma_X^2 = \sigma_Z^2 / (1 - \alpha^2)$$

$$\gamma_k = \alpha^k \sigma_Z^2 / (1 - \alpha^2)$$

$$\rho_k = \alpha^k$$
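
These AR(1) moments follow from the infinite MA form, and a truncated sum makes that concrete. The sketch below is illustrative only: the function names, the truncation length and the example values of $\alpha$ and $\sigma_Z^2$ are assumptions.

```python
def ar1_ma_weights(alpha, n_terms):
    """Weights 1, alpha, alpha^2, ... of the infinite MA form of an AR(1) process."""
    return [alpha ** j for j in range(n_terms)]

def ar1_autocovariance(alpha, sigma_z2, k, n_terms=200):
    """Truncated sum sigma_Z^2 * sum_j alpha^j * alpha^(j+k), for lag k >= 0."""
    w = ar1_ma_weights(alpha, n_terms + k)
    return sigma_z2 * sum(w[j] * w[j + k] for j in range(n_terms))

alpha, sigma_z2 = 0.6, 1.0  # example values with |alpha| < 1
gamma0 = ar1_autocovariance(alpha, sigma_z2, 0)
gamma2 = ar1_autocovariance(alpha, sigma_z2, 2)
closed_form_var = sigma_z2 / (1 - alpha ** 2)
```

With these values the truncated variance agrees with the closed form, and the ratio of the lag-two autocovariance to the variance agrees with $\alpha^2$, to within rounding.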

Autoregressive processes

The AR(p) process can be written as

$$(1 - \alpha_1 B - \alpha_2 B^2 - \ldots - \alpha_p B^p) X_t = Z_t$$

or

$$X_t = Z_t / (1 - \alpha_1 B - \alpha_2 B^2 - \ldots - \alpha_p B^p) = f(B) Z_t$$

Autoregressive processes

Here

$$f(B) = (1 - \alpha_1 B - \ldots - \alpha_p B^p)^{-1} = (1 + \beta_1 B + \beta_2 B^2 + \ldots)$$

for some $\beta_1, \beta_2, \ldots$

This gives Xt as an infinite MA process, so it has mean zero
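
One way to obtain the coefficients of the infinite MA form is to match powers of B in $(1 - \alpha_1 B - \ldots - \alpha_p B^p)(1 + \beta_1 B + \beta_2 B^2 + \ldots) = 1$, which gives a simple recursion. The sketch below assumes that approach; the function name is hypothetical and the slides do not prescribe a method.

```python
def ar_to_ma_weights(alpha, n_weights):
    """First n_weights coefficients 1, beta_1, beta_2, ... of the inverse AR operator.

    alpha = [alpha_1, ..., alpha_p]. Matching powers of B gives
    beta_j = alpha_1 * beta_{j-1} + ... + alpha_p * beta_{j-p}, with beta_0 = 1.
    """
    p = len(alpha)
    weights = [1.0]
    for j in range(1, n_weights):
        weights.append(sum(alpha[i] * weights[j - 1 - i] for i in range(min(j, p))))
    return weights

ar1_weights = ar_to_ma_weights([0.5], 4)       # powers of 0.5
ar2_weights = ar_to_ma_weights([0.5, 0.3], 4)  # an AR(2) example
```

For the AR(1) case the weights are the powers of $\alpha$, matching the expansion given earlier for the first order autoregression.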

Autoregressive processes

Conditions are needed to ensure that various series converge, and hence that the variance exists, and the autocovariance can be defined

Essentially these are requirements that the $\beta_i$ become small quickly enough, for large i

Autoregressive processes

However, it may not be possible to find the $\beta_i$

The alternative is to work with the $\alpha_i$

The acf is expressible in terms of the roots $\pi_i$, i = 1, 2, ..., p of the auxiliary equation

$$y^p - \alpha_1 y^{p-1} - \ldots - \alpha_p = 0$$

Autoregressive processes

Then a necessary and sufficient condition for stationarity is that for every i, $|\pi_i| < 1$

An equivalent way of expressing this is that the roots of the equation

$$\phi(B) = 1 - \alpha_1 B - \ldots - \alpha_p B^p = 0$$

must lie outside the unit circle
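
For p = 2 the auxiliary equation is a quadratic, so the stationarity condition can be checked with the quadratic formula. This is a sketch for the AR(2) case only; the helper names and the example coefficients are illustrative assumptions.

```python
import cmath

def ar2_auxiliary_roots(alpha1, alpha2):
    """Roots of the auxiliary equation y^2 - alpha1 * y - alpha2 = 0."""
    disc = cmath.sqrt(alpha1 * alpha1 + 4.0 * alpha2)
    return (alpha1 + disc) / 2.0, (alpha1 - disc) / 2.0

def ar2_is_stationary(alpha1, alpha2):
    """Stationary when every auxiliary root has modulus less than one."""
    return all(abs(root) < 1.0 for root in ar2_auxiliary_roots(alpha1, alpha2))

stationary_real = ar2_is_stationary(0.5, 0.3)      # two real roots inside the unit circle
stationary_complex = ar2_is_stationary(1.0, -0.5)  # complex pair with modulus about 0.71
not_stationary = ar2_is_stationary(0.5, 0.6)       # one root has modulus above one
unit_root = ar2_is_stationary(1.0, 0.0)            # random walk: a root exactly at one
```

Using `cmath.sqrt` keeps the check valid when the roots are complex, which is the case that gives a damped oscillating acf.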

ARMA processes

Combine AR and MA processes

An ARMA process of order (p,q) is given by

$$X_t = \alpha_1 X_{t-1} + \ldots + \alpha_p X_{t-p} + Z_t + \beta_1 Z_{t-1} + \ldots + \beta_q Z_{t-q}$$

ARMA processes

Alternative expressions are possible using the backshift operator

$$\phi(B) X_t = \theta(B) Z_t$$

where

$$\phi(B) = 1 - \alpha_1 B - \ldots - \alpha_p B^p$$

$$\theta(B) = 1 + \beta_1 B + \ldots + \beta_q B^q$$

ARMA processes

An ARMA process can be written in pure MA or pure AR forms, the operators being possibly of infinite order

$$X_t = \psi(B) Z_t$$

$$\pi(B) X_t = Z_t$$

Usually the mixed form requires fewer parameters

ARIMA processes

General autoregressive integrated moving average processes are called ARIMA processes

When differenced say d times, the process is an ARMA process

Call the differenced process Wt. Then Wt is an ARMA process and

$$W_t = \nabla^d X_t = (1 - B)^d X_t$$
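
Applying the difference operator d times is a short loop. The following sketch uses a hypothetical `difference` helper and a made-up quadratic series to show that two differences remove a quadratic trend.

```python
def difference(x, d=1):
    """Apply the difference operator (1 - B) to the series x, d times."""
    w = list(x)
    for _ in range(d):
        w = [b - a for a, b in zip(w, w[1:])]
    return w

squares = [1, 4, 9, 16, 25]       # illustrative data with a quadratic trend
once = difference(squares)        # first differences
twice = difference(squares, d=2)  # second differences are constant
```

Each pass shortens the series by one observation, so a series of length N yields N - d values of Wt.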

ARIMA processes

Alternatively specify the process as

$$\phi(B) W_t = \theta(B) Z_t$$

or

$$\phi(B)(1 - B)^d X_t = \theta(B) Z_t$$

This is an ARIMA process of order (p,d,q)

ARIMA processes

The model for Xt is non-stationary because the AR operator on the left hand side has d roots on the unit circle

d is often 1

Random walk is ARIMA(0,1,0)

Can include seasonal terms (see later)

Non-zero mean

We have assumed that the mean is zero in the ARIMA models

There are two alternatives

mean correct all the Wt terms in the model

incorporate a constant term in the model

The Box-Jenkins Approach

Topics

Outline of the approach

Sample autocorrelation & partial autocorrelation

Fitting ARIMA models

Diagnostic checking

Example

Further ideas

Outline of the Box-Jenkins Approach 1

Box-Jenkins approach

The approach is an iterative one involving

model identification

model fitting

model checking

If the model checking reveals that there are problems, the process is repeated

Models

Models to be fitted are from the ARIMA class of models (or SARIMA class if the data are seasonal)

The major tools in the identification process are the (sample) autocorrelation function and partial autocorrelation function

Autocorrelation

Use the sample autocovariance and sample variance to estimate the autocorrelation

The obvious estimator of the autocovariance is

$$c_k = \sum_{t=1}^{N-k} (X_t - \bar{X})(X_{t+k} - \bar{X}) \Big/ N$$
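
The estimator can be written out directly. This is a minimal sketch with divisor N as in the formula; the function names and the toy data are illustrative, and the sample autocorrelation is taken as the lag-k sample autocovariance divided by the lag-0 value.

```python
def sample_autocovariance(x, k):
    """c_k with divisor N: sum over t of (x_t - mean)(x_{t+k} - mean), divided by N."""
    n = len(x)
    mean = sum(x) / n
    return sum((x[t] - mean) * (x[t + k] - mean) for t in range(n - k)) / n

def sample_acf(x, max_lag):
    """Sample autocorrelations r_0, ..., r_max_lag, where r_k = c_k / c_0."""
    c0 = sample_autocovariance(x, 0)
    return [sample_autocovariance(x, k) / c0 for k in range(max_lag + 1)]

r = sample_acf([1.0, 2.0, 3.0, 4.0, 5.0], max_lag=2)  # toy series
```

Dividing by N rather than by N - k for every lag is what makes the estimate biased but keeps the values well behaved at long lags.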

Autocovariances

The sample autocovariances are not unbiased estimates of the autocovariances; the bias is of order 1/N

Sample autocovariances are correlated, so may display smooth ripples at long lags which are not in the actual autocovariances

Autocovariances

Can use a different divisor (N-k instead of N) to decrease bias, but this may increase mean square error

Can use jackknifing to reduce bias (to order $1/N^2$): divide the sample in half and estimate using the whole and both halves

Autocorrelation

More difficult to obtain properties of sample autocorrelation

Generally still biased

When process is white noise

$E(r_k) \approx -1/N$

$\mathrm{Var}(r_k) \approx 1/N$

Sample autocorrelations are approximately normal for large N

Autocorrelation

Gives a rough test of whether an autocorrelation is non-zero

If $|r_k| > 2/\sqrt{N}$ suspect the autocorrelation at that lag is non-zero

Note that when examining many autocorrelations the chance of falsely identifying a non-zero one increases

Consider physical interpretation
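
The rough test amounts to comparing each sample autocorrelation with a fixed bound. The sketch below assumes the autocorrelations for lags 1, 2, ... have already been computed; the function name and the example values are hypothetical.

```python
import math

def flag_nonzero_lags(r, n):
    """Return the lags k (starting at 1) for which |r_k| exceeds 2 / sqrt(n).

    r[0] is taken to be r_1, r[1] to be r_2, and so on; n is the series length.
    """
    bound = 2.0 / math.sqrt(n)
    return [k for k, rk in enumerate(r, start=1) if abs(rk) > bound]

# With n = 100 the bound is 0.2, so lags 1 and 3 are flagged here
flagged = flag_nonzero_lags([0.5, 0.1, -0.3], n=100)
```

Since roughly one lag in twenty would be flagged by chance for white noise, isolated flags at long lags deserve caution.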

Partial autocorrelation

Broadly speaking the partial autocorrelation is the correlation between Xt and Xt+k with the effect of the intervening variables removed

Sample partial autocorrelations are found from sample autocorrelations by solving a set of equations known as the Yule-Walker equations
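
A standard way to solve the Yule-Walker equations for successive orders is the Durbin-Levinson recursion. The slides do not say which method is used, so the sketch below is one possible approach, with a hypothetical function name.

```python
def pacf_from_acf(rho, max_lag):
    """Partial autocorrelations at lags 1..max_lag from autocorrelations rho.

    rho[k] is the autocorrelation at lag k, with rho[0] = 1. Uses the
    Durbin-Levinson recursion to solve the Yule-Walker equations order by order.
    """
    pacf = []
    phi_prev = []  # coefficients of the fitted AR(k-1) model
    for k in range(1, max_lag + 1):
        num = rho[k] - sum(phi_prev[j] * rho[k - 1 - j] for j in range(k - 1))
        den = 1.0 - sum(phi_prev[j] * rho[j + 1] for j in range(k - 1))
        phi_kk = num / den
        phi_new = [phi_prev[j] - phi_kk * phi_prev[k - 2 - j] for j in range(k - 1)]
        phi_new.append(phi_kk)
        pacf.append(phi_kk)
        phi_prev = phi_new
    return pacf

# Theoretical acf of an AR(1) process with coefficient 0.5
ar1_pacf = pacf_from_acf([1.0, 0.5, 0.25, 0.125], max_lag=3)
# Theoretical acf of an MA(1) process with coefficient 0.5
ma1_pacf = pacf_from_acf([1.0, 0.4, 0.0, 0.0], max_lag=3)
```

Fed the theoretical acf of an AR(1) process, the recursion returns a single non-zero value at lag 1; for the MA(1) acf the partial autocorrelations decay gradually instead of cutting off.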

Model identification

Plot the autocorrelations and partial autocorrelations for the series

Use these to try to identify an appropriate model

Consider stationary series first

Stationary series

For an MA(q) process the autocorrelation is zero at lags greater than q, partial autocorrelations tail off in exponential fashion

For an AR(p) process the partial autocorrelation is zero at lags greater than p, autocorrelations tail off in exponential fashion

Stationary series

For mixed ARMA processes, both the acf and pacf will have large values up to q and p respectively, then tail off in an exponential fashion

See graphs in M&W, pp. 136–137

The approach used is to try fitting a model and examine the residuals

Non-stationary series

The existence of non-stationarity is indicated by an acf which is large at long lags

Induce stationarity by differencing

Differencing once is generally sufficient, twice may be needed

Overdifferencing introduces autocorrelation & should be avoided

Estimation 2

Estimation

We will always fit the model using Minitab

AR models may be fitted by least squares, or by solving the Yule-Walker equations

MA models require an iterative procedure

ARMA models are like MA models

Minitab

Minitab uses an iterative least squares approach to fitting ARMA models

Standard errors can be calculated for the parameter estimates so confidence intervals and tests of significance can be carried out

Diagnostic Checking 3

Diagnostic checking

Based on residuals

Residuals should be Normally distributed, have zero mean, be uncorrelated, and should have minimum variance or dispersion

Procedures

Plot residuals against time

Draw histogram

Obtain normal scores plot

Plot acf and pacf of residuals

Plot residuals against fitted values

Note that residuals are not uncorrelated, but are approximately so at long lags

Procedures

Portmanteau test

Overfitting

Portmanteau test

Box and Pierce proposed a statistic which tests the magnitudes of the residual autocorrelations as a group

Their test was to compare Q below with the Chi-Square with K − p − q d.f. when fitting an ARMA(p, q) model

$$Q = N \sum_{k=1}^{K} r_k^2$$

Portmanteau test

Box & Ljung discovered that the test performed poorly unless N was very large

Instead use modified Box-Pierce or Ljung-Box-Pierce statistic; reject model if Q* is too large

$$Q^* = N(N + 2) \sum_{k=1}^{K} \frac{r_k^2}{N - k}$$
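
Both portmanteau statistics are simple sums over the residual autocorrelations. The sketch below computes them for given values of $r_1, \ldots, r_K$; the function names and the example numbers are hypothetical, and the comparison with a chi-square critical value is left to tables or a statistics package.

```python
def box_pierce(r, n):
    """Q = N * sum of r_k^2 over k = 1..K, where r[0] holds r_1."""
    return n * sum(rk * rk for rk in r)

def ljung_box(r, n):
    """Q* = N (N + 2) * sum of r_k^2 / (N - k) over k = 1..K."""
    return n * (n + 2) * sum(rk * rk / (n - k) for k, rk in enumerate(r, start=1))

residual_acf = [0.1, 0.2]  # illustrative residual autocorrelations at lags 1 and 2
q = box_pierce(residual_acf, n=100)
q_star = ljung_box(residual_acf, n=100)
```

Each term of the modified statistic carries the factor (N + 2)/(N - k), which exceeds one, so the modified value is never smaller than the original; the gap matters most for short series.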

Overfitting

Suppose we think an AR(2) model is appropriate. We fit an AR(3) model.

The estimate of the additional parameter should not be significantly different from zero

The other parameters should not change much

This is an example of overfitting

Further Ideas 4

Other identification tools

Chatfield (1979), JRSS A, among others, has suggested the use of the inverse autocorrelation to assist with identification of a suitable model

Abraham & Ledolter (1984), Biometrika, show that although this cuts off after lag p for the AR(p) model it is less effective than the partial autocorrelation for detecting the AR order

AIC

The Akaike Information Criterion is minus twice the maximized log-likelihood plus twice the number of parameters

The number of parameters in the formula penalizes models with too many parameters
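
In its usual form the criterion is AIC = -2 log L + 2k, where L is the maximized likelihood and k the number of fitted parameters, and the model with the smaller value is preferred. The sketch below uses made-up log-likelihood values purely to show the penalty at work.

```python
def aic(log_likelihood, n_params):
    """Akaike Information Criterion: -2 * log-likelihood + 2 * number of parameters."""
    return -2.0 * log_likelihood + 2.0 * n_params

# Hypothetical fits: the extra parameter raises the log-likelihood only slightly
aic_small = aic(log_likelihood=-100.0, n_params=2)
aic_large = aic(log_likelihood=-99.5, n_params=3)
```

Here the larger model improves the fit by too little to offset its extra parameter, so the smaller model has the lower AIC.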

Parsimony

One principle generally accepted is that models should be parsimonious, having as few parameters as possible

Note that any ARMA model can be represented as a pure AR or pure MA model, but the number of parameters may be infinite

Parsimony

AR models are easier to fit so there is a temptation to fit a less parsimonious AR model when a mixed ARMA model is appropriate

Ledolter & Abraham (1981), Technometrics, show that fitting unnecessary extra parameters, or an AR model when an MA model is appropriate, results in loss of forecast accuracy

Exponential smoothing

Most exponential smoothing techniques are equivalent to fitting an ARIMA model of some sort

Winters' multiplicative seasonal smoothing has no ARIMA equivalent

Winters' additive seasonal smoothing has a very non-parsimonious ARIMA equivalent

Exponential smoothing

For example simple exponential smoothing is the optimal method of fitting the ARIMA (0, 1, 1) process

Optimality is obtained by taking the smoothing parameter $\alpha$ to be $1 - \theta$ when the model is

$$(1 - B) X_t = (1 - \theta B) Z_t$$
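
Simple exponential smoothing updates a single level as each observation arrives. The sketch below is illustrative: the function name, the initialisation at the first observation, and the values of $\theta$ and the data are assumptions made here, not taken from the slides.

```python
def simple_exponential_smoothing(x, alpha):
    """Return the smoothed levels; levels[t] serves as the forecast of x[t + 1].

    The level starts at the first observation (an assumed initialisation) and is
    updated as level = alpha * observation + (1 - alpha) * previous level.
    """
    level = x[0]
    levels = [level]
    for obs in x[1:]:
        level = alpha * obs + (1 - alpha) * level
        levels.append(level)
    return levels

theta = 0.6        # hypothetical MA coefficient of the ARIMA(0, 1, 1) model
alpha = 1 - theta  # smoothing parameter implied by the relation above
levels = simple_exponential_smoothing([10.0, 12.0, 11.0], alpha)
```

A value of $\theta$ near one gives a small $\alpha$ and heavy smoothing, while $\theta$ near zero makes the forecast track the latest observation, as in the random walk.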

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (894)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (587)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (119)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2219)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (265)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- Tornado probe photos quizDocument2 pagesTornado probe photos quizandredasanfonaNo ratings yet

- Stochastic Modelling 2000-2004Document189 pagesStochastic Modelling 2000-2004Brian KufahakutizwiNo ratings yet

- MoES Technology Vision for Ocean ExplorationDocument289 pagesMoES Technology Vision for Ocean ExplorationPrabhat KumarNo ratings yet

- Maths ProjectDocument21 pagesMaths Projectdivya0% (1)

- Test BankDocument4 pagesTest Bankashish_20kNo ratings yet

- Responsible Investment - A Guide For Private Equity Venture CapitalDocument16 pagesResponsible Investment - A Guide For Private Equity Venture Capitalashish_20kNo ratings yet

- Key Financial Ratios for Liquidity, Solvency, Profitability and ActivityDocument2 pagesKey Financial Ratios for Liquidity, Solvency, Profitability and Activityashish_20kNo ratings yet

- AccentureDocument13 pagesAccentureashish_20kNo ratings yet

- River Sand Costing As On 1/2/2006Document1 pageRiver Sand Costing As On 1/2/2006ashish_20kNo ratings yet

- Mission Begin AgainDocument4 pagesMission Begin Againashish_20kNo ratings yet

- Cement Survey - Retail MarketDocument6 pagesCement Survey - Retail Marketashish_20kNo ratings yet

- Oil ReportDocument8 pagesOil Reportashish_20kNo ratings yet

- HSK (Hanyu Shuiping Kaoshi) - DeMODocument8 pagesHSK (Hanyu Shuiping Kaoshi) - DeMOashish_20kNo ratings yet

- Cement Survey 2 - Retail MarketDocument5 pagesCement Survey 2 - Retail Marketashish_20kNo ratings yet

- Damodaran DCFDocument15 pagesDamodaran DCFpraveen_356100% (2)

- Refineries in India: S. No. Name o The Company F Location o The F Refinery Capacity, MmtpaDocument22 pagesRefineries in India: S. No. Name o The Company F Location o The F Refinery Capacity, MmtpaAmrit SarkarNo ratings yet

- Sustainability 1050861Document22 pagesSustainability 1050861AceNo ratings yet

- CSCP Ls 2018 Brochure 8.5x11Document8 pagesCSCP Ls 2018 Brochure 8.5x11GangadharNo ratings yet

- ItIsYrBusiness PDFDocument12 pagesItIsYrBusiness PDFashish_20kNo ratings yet

- Lavasa Investor WoesDocument1 pageLavasa Investor Woesashish_20kNo ratings yet

- Reforms NeededDocument5 pagesReforms Neededashish_20kNo ratings yet

- Municipal BondDocument5 pagesMunicipal Bondashish_20kNo ratings yet

- NMIMS 2012 Holiday ListDocument2 pagesNMIMS 2012 Holiday Listashish_20kNo ratings yet

- BopDocument12 pagesBopashish_20kNo ratings yet

- Futures PrikcesDocument51 pagesFutures PrikcesLuis MiguelNo ratings yet

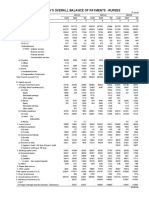

- Table 141: India'S Overall Balance of Payments - RupeesDocument3 pagesTable 141: India'S Overall Balance of Payments - Rupeesashish_20kNo ratings yet

- Working CapitalDocument55 pagesWorking Capitalashish_20kNo ratings yet

- Take Over Code 2011 PDFDocument64 pagesTake Over Code 2011 PDFashish_20kNo ratings yet

- Astm A105 PDFDocument5 pagesAstm A105 PDFrodriguez.gaytan100% (1)

- Chapter 14Document46 pagesChapter 14ashish_20k0% (1)

- Chapter 25Document35 pagesChapter 25Muhammad Zahid FaridNo ratings yet

- Press and To Open Baan Report: Alt-F8 EnterDocument1 pagePress and To Open Baan Report: Alt-F8 Enterashish_20kNo ratings yet

- Wrap Text SampleDocument2 pagesWrap Text Sampleashish_20kNo ratings yet

- 1.check ListDocument2 pages1.check Listashish_20kNo ratings yet

- Lemonade Stall Version 1 - 4Document19 pagesLemonade Stall Version 1 - 4Alan LibertNo ratings yet

- Brochure NCSS 2000 PDFDocument16 pagesBrochure NCSS 2000 PDFgore_11No ratings yet

- S OP Mind Map 1568467991Document1 pageS OP Mind Map 1568467991MehmetNo ratings yet

- Our Iceberg Is Melting PresentationDocument25 pagesOur Iceberg Is Melting PresentationHesham Fadly100% (1)

- Weather: What Is Wind?Document8 pagesWeather: What Is Wind?ruvinNo ratings yet

- Demand Forecasting SlidesDocument62 pagesDemand Forecasting SlidesSiddharth Narayanan ChidambareswaranNo ratings yet

- MMAPreferred Catalog 2010Document12 pagesMMAPreferred Catalog 2010Deep MandaliaNo ratings yet

- DR P Bamforth - Durability Study of A Diaphragm Wall - CONCRETE CRACKING JLDocument27 pagesDR P Bamforth - Durability Study of A Diaphragm Wall - CONCRETE CRACKING JLembaagil100% (1)

- Introduction To Food Freezing - 3Document50 pagesIntroduction To Food Freezing - 3Mahesh KumarNo ratings yet

- Mathematics For OrganizationDocument5 pagesMathematics For OrganizationFrancepogi4ever YTNo ratings yet

- Opinion Column WorksheetDocument3 pagesOpinion Column WorksheetAstridNo ratings yet

- Verbs IrregularDocument7 pagesVerbs IrregularIsabel Ferreira0% (1)

- Statistical MethodsDocument16 pagesStatistical MethodsyasheshgaglaniNo ratings yet

- The Flatmates: Language Point: Weather VocabularyDocument3 pagesThe Flatmates: Language Point: Weather VocabularyCristian SavaNo ratings yet

- SPE 39931 (1998) Production Analysis of Linear Flow Into Fractured Tight Gas WellsDocument12 pagesSPE 39931 (1998) Production Analysis of Linear Flow Into Fractured Tight Gas WellsEric RodriguesNo ratings yet

- Correlation Between The Wind Speed and The Elevation To Evaluate The Wind Potential in The Southern Region of EcuadorDocument17 pagesCorrelation Between The Wind Speed and The Elevation To Evaluate The Wind Potential in The Southern Region of EcuadorHolguer TisalemaNo ratings yet

- Innocent Life HahaDocument243 pagesInnocent Life HahaRyzameil Andrea UyNo ratings yet

- Guidelines For The Education and Training of Personnel in Meteorology and Operational HydrologyDocument61 pagesGuidelines For The Education and Training of Personnel in Meteorology and Operational HydrologyRucelle Chiong GarcianoNo ratings yet

- Futurology in PerspectiveDocument10 pagesFuturology in Perspectivefx5588No ratings yet

- StatPad Installation and User GuideDocument85 pagesStatPad Installation and User GuideBhargav Singirikonda0% (1)

- Future Tense ExercisesDocument3 pagesFuture Tense ExercisesThanhThaoTruongNo ratings yet

- Truth About Range DataDocument13 pagesTruth About Range DataGilley EstesNo ratings yet

- JRC Spaceweather Awareness DialogueDocument27 pagesJRC Spaceweather Awareness Dialoguembarraza727No ratings yet

- c1 - Additional Practice QuestionsDocument15 pagesc1 - Additional Practice Questionsapi-261193362No ratings yet

- Learn About Weather and SeasonsDocument10 pagesLearn About Weather and SeasonsMita Isnaini HarahapNo ratings yet

- ForecastingDocument2 pagesForecastingRaymart FloresNo ratings yet