Introduction to Probability and Statistics

Chapter 12

Topics

Types of Probability

Fundamentals of Probability

Statistical Independence and Dependence

Expected Value

The Normal Distribution

Sample Space and Event

Probability is associated with performing an experiment whose outcomes occur randomly.

The sample space contains all the possible outcomes of an experiment.

An event is a subset of the sample space.

The probability of an event is always greater than or equal to zero and less than or equal to one.

The probabilities of all the outcomes in the sample space must sum to one.

Events in an experiment are mutually exclusive if only one can occur at a time.

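Objective Probability

Classical, or a priori, objective probability can be stated prior to the occurrence of the event; it is based on the logic of the process producing the outcomes.

Relative frequency, which is computed after the outcomes have been observed, is the more widely used definition of objective probability.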

Subjective Probability

Based on personal belief, experience, or knowledge of a situation.

Frequently used in making business decisions.

Different people often arrive at different subjective probabilities.

Fundamentals of Probability Distributions

Frequency distribution: an organization of numerical data about the events in an experiment.

Probability distribution: a list of the corresponding probabilities for each event.

Mutually exclusive events: two or more events that cannot occur at the same time.

For mutually exclusive events, the probability that one or another of them will occur is found by summing the individual probabilities of the events:

P(A or B) = P(A) + P(B)

Fundamentals of Probability: A Frequency Distribution Example

Grades for the past four years:

| Event (Grade) | Number of Students | Relative Frequency | Probability |
|---|---|---|---|
| A | 300 | 300/3,000 | .10 |
| B | 600 | 600/3,000 | .20 |
| C | 1,500 | 1,500/3,000 | .50 |
| D | 450 | 450/3,000 | .15 |
| F | 150 | 150/3,000 | .05 |
| Total | 3,000 | | 1.00 |
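As a small sketch (not part of the original slides), the relative-frequency probabilities in the grade table can be computed directly from the student counts. The dictionary names `grade_counts` and `probabilities` are illustrative only.

```python
from fractions import Fraction

# Illustrative data structure: number of students per grade (from the grade table).
grade_counts = {"A": 300, "B": 600, "C": 1500, "D": 450, "F": 150}

total = sum(grade_counts.values())  # 3,000 students

# Relative frequency of each grade, used as its (objective) probability.
probabilities = {grade: Fraction(count, total) for grade, count in grade_counts.items()}

for grade, p in probabilities.items():
    print(grade, f"{float(p):.2f}")

# A probability distribution over all events must sum to one.
assert sum(probabilities.values()) == 1
```

Exact fractions are used so that the check that the probabilities sum to one is not affected by floating-point rounding.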

Fundamentals of Probability: Non-Mutually Exclusive Events & Joint Probability

The probability that non-mutually exclusive events M or F, or both, will occur is expressed as:

P(M or F) = P(M) + P(F) - P(MF)

A joint (intersection) probability, P(MF), is the probability that two or more events that are not mutually exclusive occur simultaneously. It is subtracted so that outcomes belonging to both M and F are not counted twice.
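To check the addition rule numerically, here is a minimal Python sketch using a hypothetical example that is not in the slides: one roll of a fair six-sided die, with M = "the roll is even" and F = "the roll is greater than 3". The helper `prob` is illustrative.

```python
from fractions import Fraction

# Hypothetical example: one roll of a fair die; all six outcomes equally likely.
sample_space = {1, 2, 3, 4, 5, 6}
M = {2, 4, 6}   # event: roll is even
F = {4, 5, 6}   # event: roll is greater than 3

def prob(event):
    """Illustrative helper: classical probability = favorable outcomes / total outcomes."""
    return Fraction(len(event), len(sample_space))

p_m_or_f_direct = prob(M | F)                     # count the union directly
p_m_or_f_rule = prob(M) + prob(F) - prob(M & F)   # P(M) + P(F) - P(MF)

print(p_m_or_f_direct, p_m_or_f_rule)  # 2/3 2/3
```

Without the subtraction, the outcomes 4 and 6 (which are in both M and F) would be counted twice and the result would be 1 instead of 2/3.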

Fundamentals of Probability: Cumulative Probability Distribution

A cumulative probability is determined by adding the probability of an event to the sum of all previously listed probabilities.

Probability that a student will get a grade of C or higher:

P(A or B or C) = P(A) + P(B) + P(C) = .10 + .20 + .50 = .80

| Event (Grade) | Probability | Cumulative Probability |
|---|---|---|
| A | .10 | .10 |
| B | .20 | .30 |
| C | .50 | .80 |
| D | .15 | .95 |
| F | .05 | 1.00 |
| Total | 1.00 | |
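The running sum in the cumulative column can be sketched with `itertools.accumulate` from the Python standard library. Exact fractions are an implementation choice here, so the last cumulative entry comes out to exactly 1.

```python
from fractions import Fraction
from itertools import accumulate

grades = ["A", "B", "C", "D", "F"]
# Probabilities from the grade table, as exact fractions.
probs = [Fraction(10, 100), Fraction(20, 100), Fraction(50, 100),
         Fraction(15, 100), Fraction(5, 100)]

# Each cumulative value adds the event's probability to all previously listed ones.
cumulative = list(accumulate(probs))

for grade, p, cum in zip(grades, probs, cumulative):
    print(grade, f"{float(p):.2f}", f"{float(cum):.2f}")

# P(C or higher) is the cumulative probability through grade C.
p_c_or_higher = cumulative[grades.index("C")]
print(float(p_c_or_higher))  # 0.8
```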

Statistical Independence and Dependence: Independent Events

Events that do not affect each other are independent.

The joint probability of independent events is computed by multiplying the probabilities of each event:

P(AB) = P(A) P(B)

For a coin tossed three consecutive times, the probability of getting a head on the first toss, a tail on the second, and a tail on the third is:

P(HTT) = P(H) P(T) P(T) = (.5)(.5)(.5) = .125
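A brute-force check, sketched in Python under the assumption of a fair coin: enumerate all equally likely sequences of three tosses, count those matching H, T, T, and compare with the multiplication rule.

```python
from fractions import Fraction
from itertools import product

# All 2**3 = 8 equally likely outcomes of three tosses of a fair coin (assumed fair).
outcomes = list(product("HT", repeat=3))

# Enumeration: fraction of outcomes that are exactly head, tail, tail.
p_enumerated = Fraction(sum(1 for o in outcomes if o == ("H", "T", "T")), len(outcomes))

# Multiplication rule for independent events: P(H) P(T) P(T).
p_h = p_t = Fraction(1, 2)
p_multiplied = p_h * p_t * p_t

print(p_enumerated, float(p_multiplied))  # 1/8 0.125
```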

Statistical Independence and Dependence: Independent Events, Bernoulli Process Definition

Properties of a Bernoulli process:

Two possible outcomes for each trial.

The probability of the outcome remains constant over time.

The outcomes of the trials are independent.

The number of trials is discrete and integer.

Binomial Distribution

The binomial distribution is used to determine the probability of a number of successes in n trials:

$$P(r) = \frac{n!}{r!\,(n-r)!}\, p^{r} q^{\,n-r}$$

where: p = probability of a success

q = 1 - p = probability of a failure

n = number of trials

r = number of successes in n trials

Determine the probability of getting exactly two tails in three tosses of a coin:

$$P(r = 2 \text{ tails}) = \frac{3!}{2!\,(3-2)!}(.5)^{2}(.5)^{1} = \frac{(3)(2)(1)}{(2)(1)(1)}(.25)(.5) = \frac{6}{2}(.125) = .375$$
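The binomial formula translates directly into Python. This sketch uses `math.comb` (Python 3.8 and later) for n!/(r!(n - r)!); the function name `binomial_probability` is our own illustrative choice.

```python
from math import comb

def binomial_probability(r: int, n: int, p: float) -> float:
    """Illustrative helper: P(r successes in n Bernoulli trials) = n!/(r!(n-r)!) * p**r * q**(n-r)."""
    if not 0 <= r <= n:
        return 0.0
    q = 1.0 - p  # probability of a failure
    return comb(n, r) * p**r * q**(n - r)

# Exactly two tails in three tosses of a fair coin (p = .5 for a tail).
print(round(binomial_probability(2, 3, 0.5), 3))  # 0.375
```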

Binomial Distribution: Example

Microchips are inspected at the quality control station.

From every batch, four are selected and tested for defects.

Given a defective rate of 20%, what is the probability that the four chips selected from a batch include exactly two defectives?

$$P(r = 2 \text{ defectives}) = \frac{4!}{2!\,(4-2)!}(.2)^{2}(.8)^{2} = \frac{(4)(3)(2)(1)}{(2)(1)(2)(1)}(.04)(.64) = \frac{24}{4}(.0256) = .1536$$

Binomial Distribution Example: Quality Control

What is the probability that a sample of four contains exactly two defectives? P(r = 2) = .1536.

What is the probability of getting two or more defectives?

$$P(r \ge 2) = \frac{4!}{2!\,(4-2)!}(.2)^{2}(.8)^{2} + \frac{4!}{3!\,(4-3)!}(.2)^{3}(.8)^{1} + \frac{4!}{4!\,(4-4)!}(.2)^{4}(.8)^{0} = .1536 + .0256 + .0016 = .1808$$

Probability of less than two defectives:

P(r < 2) = P(r = 0) + P(r = 1) = 1.0 - [P(r = 2) + P(r = 3) + P(r = 4)] = 1.0 - .1808 = .8192
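A sketch in Python that checks both the direct sum and the complement calculation for the microchip sample (n = 4, p = .2). The helper `binomial_probability` is a hypothetical name, defined here so the block stands alone.

```python
from math import comb

def binomial_probability(r: int, n: int, p: float) -> float:
    """Hypothetical helper: binomial probability of exactly r defectives in n trials."""
    return comb(n, r) * p**r * (1.0 - p)**(n - r)

n, p = 4, 0.20  # four chips per sample, 20% defective rate

exactly_two = binomial_probability(2, n, p)
two_or_more = sum(binomial_probability(r, n, p) for r in range(2, n + 1))
less_than_two_direct = binomial_probability(0, n, p) + binomial_probability(1, n, p)
less_than_two_complement = 1.0 - two_or_more

print(f"{exactly_two:.4f} {two_or_more:.4f} {less_than_two_direct:.4f}")
# 0.1536 0.1808 0.8192
```

Computing P(r < 2) both ways is a quick consistency check: the direct sum of P(r = 0) and P(r = 1) must equal one minus the probability of two or more defectives.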

Statistical Independence and Dependence: Dependent Events

If the occurrence of one event affects the probability of the occurrence of another event, the events are dependent.

Example: a coin is tossed to select a bucket, and then a ball is drawn from that bucket.

If a tail occurs, there is a 1/6 chance of drawing a blue ball from bucket 2; if a head results, there is no possibility of drawing a blue ball from bucket 1.

The probability of the event "drawing a blue ball" is therefore dependent on the event "flipping a coin".

Dependent Events: Conditional Probabilities

Unconditional: P(H) = .5; P(T) = .5; these must sum to one.

Conditional: P(R|H) = .33, P(W|H) = .67, P(R|T) = .83, P(W|T) = .17

Dependent Events: Math Formulation of Conditional Probabilities

Given two dependent events A and B:

P(A|B) = P(AB)/P(B), or equivalently P(AB) = P(A|B) P(B)

With data from the previous example:

P(RH) = P(R|H) P(H) = (.33)(.5) = .165

P(WH) = P(W|H) P(H) = (.67)(.5) = .335

P(RT) = P(R|T) P(T) = (.83)(.5) = .415

P(WT) = P(W|T) P(T) = (.17)(.5) = .085

[Figure: Summary of Example Problem Probabilities]
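The joint probabilities can be reproduced with a short Python sketch; the dictionary layout is our own illustrative choice. Because the four joint events cover every possible (ball, coin) combination, they should sum to one, and summing over the coin outcomes gives the marginal probability of each ball.

```python
from math import isclose

# Unconditional (marginal) probabilities of the coin toss.
p_coin = {"H": 0.5, "T": 0.5}

# Conditional probabilities P(ball | coin) from the example (illustrative layout).
p_ball_given_coin = {
    ("R", "H"): 0.33, ("W", "H"): 0.67,
    ("R", "T"): 0.83, ("W", "T"): 0.17,
}

# Joint probability: P(ball and coin) = P(ball | coin) * P(coin).
joint = {(ball, coin): p * p_coin[coin] for (ball, coin), p in p_ball_given_coin.items()}

for (ball, coin), p in joint.items():
    print(f"P({ball}{coin}) = {p:.3f}")

# The joint probabilities cover all outcomes, so they sum to one.
assert isclose(sum(joint.values()), 1.0)

# Marginal probability of drawing R, summed over both coin outcomes.
p_r = joint[("R", "H")] + joint[("R", "T")]
print(f"P(R) = {p_r:.2f}")
```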

Statistical Independence and Dependence: Bayesian Analysis

In Bayesian analysis, additional information is used to alter (improve) the marginal probability of the occurrence of an event.

The improved probability is called the posterior probability: the altered marginal probability of an event based on additional information.

Bayes' rule for two events, A and B, and a third event, C, conditionally dependent on A and B (A and B are mutually exclusive and collectively exhaustive, so the denominator equals P(C)):

$$P(A \mid C) = \frac{P(C \mid A)\,P(A)}{P(C \mid A)\,P(A) + P(C \mid B)\,P(B)}$$

Bayesian Analysis Example (1 of 2)

Machine setup: if the setup is correct, there is a 10% chance of a defective part; if it is incorrect, a 40% chance.

There is a 50% chance that the setup will be correct and a 50% chance that it will be incorrect.

What is the probability that the machine setup is incorrect if a sample part is defective?

Solution: P(C) = .50, P(IC) = .50, P(D|C) = .10, P(D|IC) = .40

where C = correct, IC = incorrect, D = defective

Bayesian Analysis Example (2 of 2)

$$P(IC \mid D) = \frac{P(D \mid IC)\,P(IC)}{P(D \mid IC)\,P(IC) + P(D \mid C)\,P(C)} = \frac{(.40)(.50)}{(.40)(.50) + (.10)(.50)} = \frac{.20}{.25} = .80$$

Previously, the manager knew that there was a 50% chance that the machine was set up incorrectly.

Now, after testing the part, he knows that if it is defective, there is a 0.8 probability that the machine was set up incorrectly.
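A minimal Python sketch of Bayes' rule for two mutually exclusive, collectively exhaustive states, applied to the machine-setup example. The function and argument names are illustrative, not from the slides.

```python
def posterior(prior_a: float, likelihood_a: float, likelihood_b: float) -> float:
    """Illustrative helper: P(A | evidence) for two exhaustive, mutually exclusive states A and B.

    prior_a:      P(A); P(B) is taken to be 1 - P(A)
    likelihood_a: P(evidence | A)
    likelihood_b: P(evidence | B)
    """
    prior_b = 1.0 - prior_a
    numerator = likelihood_a * prior_a
    evidence = numerator + likelihood_b * prior_b  # total probability P(evidence)
    return numerator / evidence

# A = incorrect setup (IC), B = correct setup (C), evidence = defective part (D).
p_ic_given_d = posterior(prior_a=0.50, likelihood_a=0.40, likelihood_b=0.10)
print(f"P(IC | D) = {p_ic_given_d:.2f}")  # P(IC | D) = 0.80
```

Swapping the roles of the two states gives the complementary posterior, P(C | D) = .20.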

Expected Value: Random Variables

When the values of variables occur in no particular order or sequence, the variables are referred to as random variables.

Random variables are represented by a letter such as x, y, or z.

It is possible to assign a probability to the occurrence of each possible value.

[Table: examples of random variables, such as the possible values of the number of heads and the possible values of demand per week]

Expected Value: Example (1 of 4)

Machines break down 0, 1, 2, 3, or 4 times per month. The relative frequency of breakdowns, or probability distribution, is:

| Random Variable x (Number of Breakdowns) | P(x) |
|---|---|
| 0 | .10 |
| 1 | .20 |
| 2 | .30 |
| 3 | .25 |
| 4 | .15 |
| Total | 1.00 |

Expected Value: Example (2 of 4)

The expected value is computed by multiplying each possible value of the variable by its probability and summing these products:

$$E(x) = \sum_{i} x_i\,P(x_i)$$

It is the weighted average, or mean, of the probability distribution of the random variable.

Expected value of the number of breakdowns per month:

E(x) = (0)(.10) + (1)(.20) + (2)(.30) + (3)(.25) + (4)(.15)

= 0 + .20 + .60 + .75 + .60

= 2.15 breakdowns
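As a sketch, the same weighted average in Python. Exact fractions are our choice, so the sum is not affected by floating-point rounding.

```python
from fractions import Fraction

# Breakdowns per month and their probabilities (from the example table).
values = [0, 1, 2, 3, 4]
probs = [Fraction(10, 100), Fraction(20, 100), Fraction(30, 100),
         Fraction(25, 100), Fraction(15, 100)]

assert sum(probs) == 1  # a valid probability distribution

# E(x): multiply each value by its probability and sum the products.
expected = sum(x * p for x, p in zip(values, probs))
print(float(expected))  # 2.15
```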

Expected Value: Example (3 of 4)

Variance is a measure of the dispersion of random variable values about the mean.

Variance is computed as follows:

1. Square the difference between each value and the expected value.

2. Multiply the resulting amounts by the probability of each value.

3. Sum the values compiled in step 2.

General formula:

$$\sigma^2 = \sum_{i} [x_i - E(x)]^2\, P(x_i)$$

Expected Value: Example (4 of 4)

The standard deviation is computed by taking the square root of the variance.

For the example data:

σ² = 1.4275 (breakdowns per month)²

standard deviation = σ = sqrt(1.4275) = 1.19 breakdowns per month

| x_i | P(x_i) | x_i - E(x) | [x_i - E(x)]² | [x_i - E(x)]² P(x_i) |
|---|---|---|---|---|
| 0 | .10 | -2.15 | 4.6225 | .46225 |
| 1 | .20 | -1.15 | 1.3225 | .26450 |
| 2 | .30 | -0.15 | 0.0225 | .00675 |
| 3 | .25 | 0.85 | 0.7225 | .180625 |
| 4 | .15 | 1.85 | 3.4225 | .513375 |
| Total | 1.00 | | | 1.4275 |
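The three variance steps and the square root can be sketched in Python as follows, using `math.sqrt` from the standard library and the breakdown distribution from the example.

```python
from math import sqrt

values = [0, 1, 2, 3, 4]                 # breakdowns per month
probs = [0.10, 0.20, 0.30, 0.25, 0.15]   # P(x_i)

mean = sum(x * p for x, p in zip(values, probs))  # E(x) = 2.15

# Step 1: squared deviations; step 2: weight by probability; step 3: sum.
squared_devs = [(x - mean) ** 2 for x in values]
weighted = [d * p for d, p in zip(squared_devs, probs)]
variance = sum(weighted)

std_dev = sqrt(variance)
print(f"variance = {variance:.4f}, std dev = {std_dev:.2f}")
# variance = 1.4275, std dev = 1.19
```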

Poisson Distribution

The Poisson distribution is based on the number of outcomes occurring during a given time interval or in a specified region.

Examples:

Number of accidents that occur on a given highway during a 1-week period

Number of customers coming to a bank during a 1-hour interval

Number of TVs sold at a department store during a given week

Number of breakdowns of a washing machine per month

Conditions (consider the number of breakdowns of a washing machine per month):

Each breakdown is called an occurrence.

Occurrences are random in that they do not follow any pattern (they are unpredictable).

An occurrence is always considered with respect to an interval (here, one month).

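Poisson Distribution: The Probability Mass Function

X = number of counts in the interval

X is a Poisson random variable with parameter λ > 0 if its PMF is

$$f(x) = \frac{e^{-\lambda}\lambda^{x}}{x!}, \quad x = 0, 1, 2, \ldots$$

Mean and variance:

$$E[X] = \lambda, \qquad V(X) = \lambda$$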

Poisson Distribution: Example

If a bank gets on average λ = 6 bad checks per day, what are the probabilities that it will receive (a) four bad checks on any given day, and (b) 10 bad checks on any two consecutive days?

Solution

(a) x = 4 and λ = 6, then $f(4) = \frac{e^{-6}\,6^{4}}{4!} = 0.134$

(b) Over two days the mean is λ = 2 × 6 = 12; with x = 10, $f(10) = \frac{e^{-12}\,12^{10}}{10!} = 0.105$
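The PMF can be evaluated with the Python standard library (`math.exp`, `math.factorial`). This is a sketch; the function name `poisson_pmf` is our own illustrative choice.

```python
from math import exp, factorial

def poisson_pmf(x: int, lam: float) -> float:
    """Illustrative helper: P(X = x) for a Poisson variable with mean lam, e^-lam * lam^x / x!."""
    return exp(-lam) * lam**x / factorial(x)

# (a) Four bad checks in one day, average 6 per day.
p_four = poisson_pmf(4, 6)

# (b) Ten bad checks over two consecutive days: the mean scales to 2 * 6 = 12.
p_ten = poisson_pmf(10, 2 * 6)

print(f"{p_four:.4f} {p_ten:.4f}")  # 0.1339 0.1048
```

The key modeling step in (b) is scaling λ to the length of the new interval rather than reusing the one-day mean.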

Poisson Distribution: Example

The number of failures of a testing instrument from contamination particles on the product is a Poisson random variable with a mean of 0.02 failure per hour.

(a) What is the probability that the instrument does not fail in an 8-hour shift?

(b) What is the probability of at least one failure in one 24-hour day?

Solution

(a) Let X denote the number of failures in 8 hours. Then X has a Poisson distribution with λ = 8 × 0.02 = 0.16, and

$P(X = 0) = e^{-0.16} = 0.8521$

(b) Let Y denote the number of failures in 24 hours. Then Y has a Poisson distribution with λ = 24 × 0.02 = 0.48, and

$P(Y \ge 1) = 1 - P(Y = 0) = 1 - e^{-0.48} = 0.3812$
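A short Python sketch of the same calculation. The key step is scaling the hourly rate to the length of the interval before applying P(X = 0) = e^(-λ); the helper name `prob_no_failure` is illustrative.

```python
from math import exp

rate_per_hour = 0.02  # mean failures per hour

def prob_no_failure(hours: float) -> float:
    """Illustrative helper: P(X = 0) for a Poisson count over `hours`, with mean = rate * hours."""
    lam = rate_per_hour * hours
    return exp(-lam)

p_no_fail_shift = prob_no_failure(8)           # (a) 8-hour shift, lambda = 0.16
p_at_least_one_day = 1 - prob_no_failure(24)   # (b) 24-hour day, lambda = 0.48

print(f"{p_no_fail_shift:.4f} {p_at_least_one_day:.4f}")  # 0.8521 0.3812
```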

The Normal Distribution: Continuous Random Variables

A continuous random variable can take on an infinite number of values within some interval.

Continuous random variables have values that are not countable.

A unique probability cannot be assigned to each value; probabilities are assigned to intervals of values instead.

The Normal Distribution: Definition

The normal distribution is a continuous probability distribution that is symmetrical on both sides of the mean.

The center of a normal distribution is its mean, μ.

The area under the normal curve represents probability, and the total area under the curve sums to one.

The Normal Distribution: Example (1 of 5)

Mean weekly carpet sales are 4,200 yards, with a standard deviation of 1,400 yards.

What is the probability of sales exceeding 6,000 yards?

μ = 4,200 yd; σ = 1,400 yd; the probability that the number of yards of carpet will be equal to or greater than 6,000 is expressed as P(x ≥ 6,000).

[Figure: The Normal Distribution, Example (2 of 5)]

The Normal Distribution: Standard Normal Curve (1 of 2)

The area or probability under a normal curve is measured by determining the number of standard deviations from the mean.

The number of standard deviations a value is from the mean is designated as Z:

Z = (x - μ)/σ

[Figure: The Normal Distribution, Standard Normal Curve (2 of 2)]

The Normal Distribution: Example (3 of 5)

Z = (x - μ)/σ = (6,000 - 4,200)/1,400 = 1.29 standard deviations

P(x ≥ 6,000) = .5000 - .4015 = .0985 (.4015 is the table area between the mean and Z = 1.29)

The Normal Distribution: Example (4 of 5)

Determine the probability that demand will be 5,000 yards or less.

Z = (x - μ)/σ = (5,000 - 4,200)/1,400 = .57 standard deviations

P(x ≤ 5,000) = .5000 + .2157 = .7157

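The Normal Distribution: Example (5 of 5)

Determine the probability that demand will be between 3,000 yards and 5,000 yards.

Z = (3,000 - 4,200)/1,400 = -1,200/1,400 = -.86

P(3,000 ≤ x ≤ 5,000) = .2157 + .3051 = .5208

Here .3051 is the table area between the mean and Z = -.86, and .2157 is the area between the mean and Z = .57.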

Different Table

Using a cumulative standard normal table, which lists P(z ≤ z₀):

P(3,000 ≤ x ≤ 5,000)

= P((3,000 - 4,200)/1,400 ≤ z ≤ (5,000 - 4,200)/1,400)

= P(-0.86 ≤ z ≤ 0.57)

= P(z ≤ 0.57) - P(z ≤ -0.86)

= P(z ≤ 0.57) - P(z ≥ 0.86)

= P(z ≤ 0.57) - [1 - P(z ≤ 0.86)]

= 0.7157 - [1 - 0.8051] = 0.5208
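These table lookups can be reproduced with `statistics.NormalDist` from the Python standard library (Python 3.8+). In this sketch Z is rounded to two decimals to mimic a printed table; using the unrounded Z gives slightly different answers (for example, about .099 rather than .0985 for the first case). The helper name `z_score` is illustrative.

```python
from statistics import NormalDist

mu, sigma = 4200, 1400          # mean and standard deviation of weekly carpet sales (yd)
standard_normal = NormalDist()  # mean 0, standard deviation 1

def z_score(x: float) -> float:
    """Illustrative helper: standard deviations from the mean, rounded like a table lookup."""
    return round((x - mu) / sigma, 2)

p_over_6000 = 1 - standard_normal.cdf(z_score(6000))           # P(x >= 6,000)
p_under_5000 = standard_normal.cdf(z_score(5000))              # P(x <= 5,000)
p_between = p_under_5000 - standard_normal.cdf(z_score(3000))  # P(3,000 <= x <= 5,000)

print(f"{p_over_6000:.4f} {p_under_5000:.4f} {p_between:.4f}")  # 0.0985 0.7157 0.5208
```

Subtracting two cumulative probabilities, as in the last line, is the same approach as the "Different Table" calculation.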

The Normal Distribution: Sample Mean and Variance

The population mean and variance are for the entire set of data being analyzed.

The sample mean and variance are derived from a subset of the population data and are used to make inferences about the population.

$$\text{Sample mean: } \bar{x} = \frac{\sum_{i=1}^{n} x_i}{n}$$

$$\text{Sample variance: } s^2 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})^2}{n - 1}$$

$$\text{Sample standard deviation: } s = \sqrt{s^2}$$

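The Normal Distribution: Computing the Sample Mean and Variance

| Week i | Demand x_i (yd) |
|---|---|
| 1 | 2,900 |
| 2 | 5,400 |
| 3 | 3,100 |
| 4 | 4,700 |
| 5 | 3,800 |
| 6 | 4,300 |
| 7 | 6,800 |
| 8 | 2,900 |
| 9 | 3,600 |
| 10 | 4,500 |
| Total | 42,000 |

Sample mean = 42,000/10 = 4,200 yd

Using the equivalent computational form s² = [Σx_i² - (Σx_i)²/n]/(n - 1):

Sample variance = [(190,060,000) - (1,764,000,000/10)]/9 = 1,517,777

Sample std. dev. = sqrt(1,517,777) = 1,232 yd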
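A Python sketch that computes the sample statistics for the weekly demand data two ways (definitional and computational formulas) and cross-checks them against `statistics.variance`, which also divides by n - 1.

```python
import statistics
from math import isclose, sqrt

# Weekly carpet demand (yd) for weeks 1 through 10, from the table above.
demand = [2900, 5400, 3100, 4700, 3800, 4300, 6800, 2900, 3600, 4500]
n = len(demand)

mean = sum(demand) / n  # 42,000 / 10 = 4,200

# Definitional formula: sum of squared deviations divided by n - 1.
var_definition = sum((x - mean) ** 2 for x in demand) / (n - 1)

# Computational form: [sum(x^2) - (sum x)^2 / n] / (n - 1).
var_shortcut = (sum(x * x for x in demand) - sum(demand) ** 2 / n) / (n - 1)

# The standard library's sample variance also divides by n - 1.
assert isclose(var_definition, var_shortcut)
assert isclose(var_definition, statistics.variance(demand))

print(f"mean = {mean:.0f} yd, variance = {var_definition:,.0f}, std dev = {sqrt(var_definition):,.0f} yd")
```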

[Figure: The Normal Distribution, Example Problem Re-Done]

The Normal Distribution: Chi-Square Test for Normality (1 of 2)

It can never simply be assumed that data are normally distributed.

A statistical test should be performed to determine whether the data fit the assumed distribution.

The chi-square test is used to determine whether a set of data fits a particular distribution.

It compares an observed frequency distribution with the theoretical frequency distribution that would be expected to occur if the data followed a particular distribution (testing the goodness of fit).

The Normal Distribution: Chi-Square Test for Normality (2 of 2)

In the test, the observed frequencies in each range of the frequency distribution are compared to the theoretical frequencies that should occur in each range if the data follow a particular distribution.

A chi-square statistic, χ² = Σ (O_i - E_i)²/E_i, where O_i and E_i are the observed and expected frequencies in range i, is then calculated and compared to a number, called a critical value, from a chi-square table.

If the test statistic is greater than the critical value, the data do not follow the distribution being tested; if it is less, the hypothesis that the data follow that distribution cannot be rejected.

The chi-square test is a form of hypothesis testing.
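A minimal sketch of the goodness-of-fit statistic in Python, using hypothetical counts that are not from the slides: 60 rolls of a die tested against a uniform (fair-die) distribution. The critical value 11.07 is the chi-square table entry for 5 degrees of freedom (6 ranges minus 1) at the .05 significance level. A normality test on grouped data would instead take the expected counts from the normal distribution and lose additional degrees of freedom for any estimated parameters.

```python
def chi_square_statistic(observed, expected):
    """Illustrative helper: sum of (O - E)^2 / E over all ranges of the frequency distribution."""
    if len(observed) != len(expected):
        raise ValueError("observed and expected must have the same number of ranges")
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))

# Hypothetical data: 60 die rolls, expected 10 per face if the die is fair.
observed = [8, 12, 9, 11, 10, 10]
expected = [10] * 6

statistic = chi_square_statistic(observed, expected)
critical_value = 11.07  # chi-square table, 5 degrees of freedom, alpha = .05

if statistic > critical_value:
    print(f"chi-square = {statistic:.2f} > {critical_value}: reject the fitted distribution")
else:
    print(f"chi-square = {statistic:.2f} <= {critical_value}: cannot reject the fitted distribution")
```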

[Figure: Statistical Analysis with Excel (1 of 3)]

[Figure: Statistical Analysis with Excel (2 of 3)]

[Figure: Statistical Analysis with Excel (3 of 3)]

You might also like

- RitesDocument11 pagesRitesMadmen quillNo ratings yet

- Basic Concept of TQMDocument2 pagesBasic Concept of TQMChristian lugtuNo ratings yet

- STS Gene TherapyDocument12 pagesSTS Gene Therapyedgar malupengNo ratings yet

- English (Step Ahead)Document33 pagesEnglish (Step Ahead)ry4nek4100% (1)

- Agamata Chapter 5Document10 pagesAgamata Chapter 5Drama SubsNo ratings yet

- Chapter 13 - Significance of Lead TimeDocument10 pagesChapter 13 - Significance of Lead TimeSarahia AmakaNo ratings yet

- SDGsDocument83 pagesSDGsmayasarissuroto100% (1)

- Jarratt Davis: How To Trade A Currency FundDocument5 pagesJarratt Davis: How To Trade A Currency FundRui100% (1)

- 1 Slide © 2005 Thomson/South-WesternDocument70 pages1 Slide © 2005 Thomson/South-WesternNad IraNo ratings yet

- FINANCE MANAGEMENT FIN420chp 1Document10 pagesFINANCE MANAGEMENT FIN420chp 1Yanty IbrahimNo ratings yet

- 1027 02 Swot Analysis PowerpointDocument6 pages1027 02 Swot Analysis PowerpointmohamedbosilaNo ratings yet

- Techno 1Document16 pagesTechno 1IzhamGhaziNo ratings yet

- TR - Reinforcing Steel Works NC IIDocument46 pagesTR - Reinforcing Steel Works NC IIAljon BalanagNo ratings yet

- Finance Interview QuestionDocument41 pagesFinance Interview QuestionshuklashishNo ratings yet

- CITY DEED OF USUFRUCTDocument4 pagesCITY DEED OF USUFRUCTeatshitmanuelNo ratings yet

- PEB4102 Chapter 4 - UpdatedDocument79 pagesPEB4102 Chapter 4 - UpdatedLimNo ratings yet

- International Environment and Management Lecture 1Document32 pagesInternational Environment and Management Lecture 1Anant MauryaNo ratings yet

- AHP-450 Horizontal Wrapping Machine ManualDocument50 pagesAHP-450 Horizontal Wrapping Machine Manualyoyyo7805No ratings yet

- 12 Breakeven AnalysisDocument24 pages12 Breakeven AnalysisIsmail JunaidiNo ratings yet

- Csci3134 Exercises OOPDocument14 pagesCsci3134 Exercises OOPTeodorescu StefanNo ratings yet

- Project TerminationDocument16 pagesProject TerminationNazryinzAJWilderNo ratings yet

- Answer Key 6 - Simple & Compound InterestDocument4 pagesAnswer Key 6 - Simple & Compound InterestCute trinkets by Dani100% (1)

- Harvard Style-webFINALDocument10 pagesHarvard Style-webFINALBgt AltNo ratings yet

- CHE 555 Numerical DifferentiationDocument9 pagesCHE 555 Numerical DifferentiationHidayah Humaira100% (1)

- Discounts, Markup and MarkdownDocument3 pagesDiscounts, Markup and MarkdownYannaNo ratings yet

- Chapter 3 Discrete Probability Distributions - Final 3Document27 pagesChapter 3 Discrete Probability Distributions - Final 3Victor ChanNo ratings yet

- Unesco TvetDocument141 pagesUnesco TvetSeli adalah Saya100% (1)

- UGRD-IT6200 Introduction To Human Computer Interaction / Human Computer Interaction Final Quiz 1 Grade 20.00 Out of 20.00 (100%)Document14 pagesUGRD-IT6200 Introduction To Human Computer Interaction / Human Computer Interaction Final Quiz 1 Grade 20.00 Out of 20.00 (100%)LouennaNo ratings yet

- External Communication: by Sir Haseeb Ur RehmanDocument6 pagesExternal Communication: by Sir Haseeb Ur RehmanKhawaja Haseeb Ur RehmanNo ratings yet

- Unit-6: Classification and PredictionDocument63 pagesUnit-6: Classification and PredictionNayan PatelNo ratings yet

- Discuss One Current Issue in Operations ManagementDocument3 pagesDiscuss One Current Issue in Operations ManagementFariha Shams Butt100% (1)

- CH07 NOTES LectureDocument124 pagesCH07 NOTES LectureAngelicaTagalaNo ratings yet

- Eco 620 Macroeconomics Course OutlineDocument11 pagesEco 620 Macroeconomics Course OutlineJyabuNo ratings yet

- Compile and Runtime Errors in JavaDocument208 pagesCompile and Runtime Errors in JavaOswaldo Canchumani0% (1)

- TR Masonry NC IIIDocument95 pagesTR Masonry NC IIImicah ramos100% (1)

- NPV CalculationDocument7 pagesNPV CalculationnasirulNo ratings yet

- K Mean ClusteringDocument11 pagesK Mean ClusteringCualQuieraNo ratings yet

- Business Proposal AssignmentDocument3 pagesBusiness Proposal Assignmentnirob_x9No ratings yet

- Ethical Issues in Budget PreparationDocument26 pagesEthical Issues in Budget PreparationJoseph SimonNo ratings yet

- 7-Identify Project ActivitiesDocument4 pages7-Identify Project ActivitiesAsjad JamshedNo ratings yet

- Microeconomics Questions Chapter 1Document21 pagesMicroeconomics Questions Chapter 1toms446No ratings yet

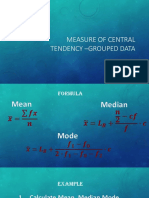

- Measure of Central Tendency - Grouped DataDocument15 pagesMeasure of Central Tendency - Grouped DataEmily Grace OralloNo ratings yet

- Conceptual: Session 1Document101 pagesConceptual: Session 1Advaita NGONo ratings yet

- Summary of Chapter 3 (Understanding Business)Document3 pagesSummary of Chapter 3 (Understanding Business)Dinda LambangNo ratings yet

- CS102 HandbookDocument72 pagesCS102 HandbookNihmathullah Mohamed RizwanNo ratings yet

- 48 EnterpreneurshipDocument274 pages48 Enterpreneurshiptamannaagarwal87No ratings yet

- Linear Programming: Presented by Paul MooreDocument36 pagesLinear Programming: Presented by Paul MooreDen NisNo ratings yet

- Museum Entrance: Welcome To The Museum of Managing E-Learning - Group 3 ToolsDocument31 pagesMuseum Entrance: Welcome To The Museum of Managing E-Learning - Group 3 ToolsRebeccaKylieHallNo ratings yet

- Project Selection Methods: Viability/Profitability of InvestmentDocument11 pagesProject Selection Methods: Viability/Profitability of InvestmentKwame Meshack AhinkorahNo ratings yet

- Strategic Profit Model Examines Logistics DecisionsDocument7 pagesStrategic Profit Model Examines Logistics Decisionsjohn brownNo ratings yet

- Derivative RulesDocument8 pagesDerivative RuleslukeNo ratings yet

- G-Research Sample Questions 2017Document2 pagesG-Research Sample Questions 2017SergeGardienNo ratings yet

- National Vocational Certificate curriculum for Refrigeration and Air ConditioningDocument84 pagesNational Vocational Certificate curriculum for Refrigeration and Air ConditioningPeter Waiguru WanNo ratings yet

- Insurance Employee Phone Call Survey StatisticsDocument3 pagesInsurance Employee Phone Call Survey StatisticsMohamad KhairiNo ratings yet

- Quick Start For Using Powerworld Simulator With Transient StabilityDocument66 pagesQuick Start For Using Powerworld Simulator With Transient StabilityJuan Carlos TNo ratings yet

- 5 Estimating Times and CostsDocument28 pages5 Estimating Times and CostsasdfaNo ratings yet

- International Business PDFDocument19 pagesInternational Business PDFShubham HedauNo ratings yet

- Decision TheoryDocument45 pagesDecision TheoryMary Divine Pelicano100% (1)

- Research Philosophy Spectrum from Positivism to ConstructivismDocument40 pagesResearch Philosophy Spectrum from Positivism to ConstructivismKhalid ElGhazouliNo ratings yet

- Pert and CPM TechniquesDocument67 pagesPert and CPM TechniquesGS IN ONE MINUTENo ratings yet

- Probability Distribution DiscreteDocument16 pagesProbability Distribution Discretescropian 9997No ratings yet

- Presentation 6Document43 pagesPresentation 6Swaroop Ranjan BagharNo ratings yet

- Basics of Probability TheoryDocument42 pagesBasics of Probability TheoryAmogh Sanjeev PradhanNo ratings yet

- Chi Square (KI Square) Test for Independence & Goodness of FitDocument30 pagesChi Square (KI Square) Test for Independence & Goodness of FitnyiisonlineNo ratings yet

- Risk Analysis of Individual and Societal RiskDocument9 pagesRisk Analysis of Individual and Societal RiskALIKNFNo ratings yet

- NCLT Orders Relief To Home BuyersDocument7 pagesNCLT Orders Relief To Home BuyersPGurusNo ratings yet

- Marketing Communications and Corporate Social Responsibility (CSR) - Marriage of Convenience or Shotgun WeddingDocument11 pagesMarketing Communications and Corporate Social Responsibility (CSR) - Marriage of Convenience or Shotgun Weddingmatteoorossi100% (1)

- Data Structures Assignment 2: (Backtracking Using Stack)Document4 pagesData Structures Assignment 2: (Backtracking Using Stack)Sai CharanNo ratings yet

- Gauteng Grade 6 Maths ExamDocument14 pagesGauteng Grade 6 Maths ExamMolemo S MasemulaNo ratings yet

- Paradine V Jane - (1646) 82 ER 897Document2 pagesParadine V Jane - (1646) 82 ER 897TimishaNo ratings yet

- Is.14858.2000 (Compression Testing Machine)Document12 pagesIs.14858.2000 (Compression Testing Machine)kishoredataNo ratings yet

- Fuentes CAED PortfolioDocument32 pagesFuentes CAED PortfoliojsscabatoNo ratings yet

- Making Hand Sanitizer from Carambola FruitDocument5 pagesMaking Hand Sanitizer from Carambola FruitMary grace LlagasNo ratings yet

- Ra 6770Document8 pagesRa 6770Jamiah Obillo HulipasNo ratings yet

- Irish Chapter 6 Causes of DeathDocument8 pagesIrish Chapter 6 Causes of DeathIrish AlonzoNo ratings yet

- Car For Sale: A. Here Are Some More Car Ads. Read Them and Complete The Answers BelowDocument5 pagesCar For Sale: A. Here Are Some More Car Ads. Read Them and Complete The Answers BelowCésar Cordova DíazNo ratings yet

- CPS 101 424424Document3 pagesCPS 101 424424Ayesha RafiqNo ratings yet

- GUINNESS F13 Full Year BriefingDocument27 pagesGUINNESS F13 Full Year BriefingImoUstino ImoNo ratings yet

- Understanding Culture, Society, and Politics - IntroductionDocument55 pagesUnderstanding Culture, Society, and Politics - IntroductionTeacher DennisNo ratings yet

- SAP Training Program Proposal for StudentsDocument2 pagesSAP Training Program Proposal for StudentsAjay KumarNo ratings yet

- Eq 1Document4 pagesEq 1jppblckmnNo ratings yet

- Allosteric EnzymeDocument22 pagesAllosteric EnzymeAhmed ImranNo ratings yet

- SLI ProfileThe title "TITLE SLI Profile" is less than 40 characters and starts with "TITLEDocument3 pagesSLI ProfileThe title "TITLE SLI Profile" is less than 40 characters and starts with "TITLEcringeNo ratings yet

- MERLINDA CIPRIANO MONTAÑES v. LOURDES TAJOLOSA CIPRIANODocument1 pageMERLINDA CIPRIANO MONTAÑES v. LOURDES TAJOLOSA CIPRIANOKaiserNo ratings yet

- Agenda For Regular Board Meeting February 18, 2022 Western Visayas Cacao Agriculture CooperativeDocument2 pagesAgenda For Regular Board Meeting February 18, 2022 Western Visayas Cacao Agriculture CooperativeGem Bhrian IgnacioNo ratings yet

- Gandhi and Indian Economic Planning (Unit III)Document21 pagesGandhi and Indian Economic Planning (Unit III)Aadhitya NarayananNo ratings yet

- An Investigation of The Reporting of Questionable Acts in An International Setting by Schultz 1993Document30 pagesAn Investigation of The Reporting of Questionable Acts in An International Setting by Schultz 1993Aniek RachmaNo ratings yet

- Impact of Technology On Future JobsDocument29 pagesImpact of Technology On Future Jobsmehrunnisa99No ratings yet

- 03.KUNCI KODING 11 IPA-IPS SMT 2 K13 REVISI - TP 23-24 - B.InggrisDocument2 pages03.KUNCI KODING 11 IPA-IPS SMT 2 K13 REVISI - TP 23-24 - B.InggrisfencenbolonNo ratings yet