Big Data Analysis Guide

Big Data Analysis

Big Data Overview (1/2)

Big data is a general term for the voluminous unstructured and semi-structured data a company creates. It is data that would take too much time and cost too much money to load into a relational database for analysis. More precisely, big data refers to datasets whose size is beyond the ability of typical database software tools to capture, store, manage and analyze. A primary goal of looking at big data is to discover repeatable business patterns. It has many additional uses, including real-time fraud detection, web display advertising and competitive analysis, call center optimization, social media and sentiment analysis, intelligent traffic management, and smart power grids. Big data analytics is often associated with cloud computing because analyzing large datasets in real time requires a framework like MapReduce to distribute the work among tens, hundreds or even thousands of computers. As technology advances, the size of datasets that qualify as big data will also increase, and big data is expected to play a significant economic role, benefiting not only private commerce but also national economies and their citizens. Big data involves more than the ability to handle large volumes of data; it represents a wide range of new analytical technologies and business possibilities.
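To make the MapReduce idea concrete, the following is a minimal single-process sketch of the map, shuffle and reduce steps, counting words in a few log lines. It is an illustration only: the function names and the sample data are assumptions, and a real framework would run the map and reduce steps on many machines in parallel.

```python
from collections import defaultdict
from itertools import chain


def map_phase(document):
    """Map step: emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle_phase(mapped_pairs):
    """Shuffle step: group all emitted values by their key."""
    groups = defaultdict(list)
    for key, value in mapped_pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reduce step: combine the values of each key (here, by summing)."""
    return {key: sum(values) for key, values in groups.items()}


def word_count(documents):
    """Run the three steps in sequence over a collection of documents."""
    # In a distributed setting, each document (or block of documents)
    # would be mapped on a different worker.
    mapped = chain.from_iterable(map_phase(doc) for doc in documents)
    return reduce_phase(shuffle_phase(mapped))


if __name__ == "__main__":
    sample_logs = ["error timeout", "error disk full", "ok"]  # hypothetical data
    print(word_count(sample_logs))
```

Because each call to `map_phase` depends only on its own document, the map work can be split across as many computers as there are input blocks, which is the property the paragraph above relies on.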

Big Data Can Generate Significant Financial Value Across Sectors

Source: http://searchcloudcomputing.techtarget.com/definition/big-data-Big-Data, McKinsey Big Data Report, BI Research Using Big Data for Smarter Decision Making

Big Data Overview (2/2)

Data volume is the primary attribute of big data

Big data can also be quantified by counting records, transactions, tables or files. Some organizations find it more useful to quantify big data in terms of time. For example, due to the seven-year statute of limitations in the U.S., many firms prefer to keep seven years of data available for risk, compliance and legal analysis. The scope of big data affects its quantification, too. For example, in many organizations, the data collected for general data warehousing differs from data collected specifically for analytics.

Three Vs of Big Data

Data variety comes from a greater variety of sources

Big data comes from a variety of sources, including logs, click streams, social media, radio-frequency identification (RFID) data from supply chain applications, text data from call center applications, semi-structured data from various business-to-business processes, and geospatial data in logistics. The recent tapping of these sources for analytics means that so-called structured data is now joined by unstructured data (text and human language) and semi-structured data (XML, RSS feeds).

Data feed velocity as a defining attribute of big data

The collection of big data in real time isn't new; many firms have been collecting click stream data from the web for years, using streaming data to make purchase recommendations to web visitors. Even more challenging, the analytics that go with streaming data have to make sense of the data and possibly take action, all in real time.

The three Vs of big data (volume, variety and velocity) constitute a comprehensive definition. Each of the three Vs has its own ramifications for analytics.

Source: TDWI Research report on Big Data Analytics

Big Data Future

International Data Corporation (IDC) released a worldwide big data technology and services forecast report, based on a survey, in March 2012. According to the report:

The big data market is expected to grow from $3.2 billion in 2010 to $16.9 billion in 2015. This represents a compound annual growth rate (CAGR) of nearly 40%, or about seven times that of the overall information and communications technology (ICT) market.

The big data market is expanding rapidly, and technology buyers have opportunities to use big data technology to improve operational efficiency and to drive innovation.

There are also big data opportunities for both large IT vendors and start-ups. Major IT vendors are offering database solutions and configurations that support big data, both by evolving their own products and by acquisition. At the same time, more than half a billion dollars in venture capital has been invested in new big data technology.

While the five-year CAGR for the worldwide market is expected to be nearly 40%, the growth of individual segments varies from 27.3% for servers and 34.2% for software to 61.4% for storage.

The growth in appliances, cloud, and outsourcing deals for big data technology will likely mean that, over time, end users will pay less attention to technology capabilities and will focus instead on the business value arguments. System performance, availability, security and manageability will all matter greatly; however, how they are achieved will be less of a point of differentiation among vendors.

There is a shortage of trained big data technology experts, in addition to a shortage of analytics experts. This labor supply constraint will inhibit the adoption and use of big data technologies, and it will also encourage vendors to deliver big data technologies as cloud-based solutions.

While software and services make up the bulk of the market opportunity through 2015, infrastructure technology for big data deployments is expected to grow slightly faster, at 44% CAGR. Storage, in particular, shows the strongest growth opportunity, growing at 61.4% CAGR through 2015.
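As a quick check of the headline figure, the sketch below applies the standard compound annual growth rate formula to the two market sizes quoted from the IDC report ($3.2 billion in 2010, $16.9 billion in 2015). The function name `cagr` is only a label chosen for this example.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: the constant yearly growth that turns
    start_value into end_value after the given number of years."""
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    # Market size in billions of US dollars, as quoted from the IDC forecast.
    rate = cagr(start_value=3.2, end_value=16.9, years=5)
    print(f"Implied CAGR 2010-2015: {rate:.1%}")
```

The result is a little under 40% per year, which is consistent with the "nearly 40%" stated in the report.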

IDC defines big data technologies as a new generation of technologies and architectures designed to extract value economically from very large volumes of a wide variety of data by enabling high-velocity capture, discovery and/or analysis.

Source: http://www.idc.com/getdoc.jsp?containerId=prUS23355112

Big Data Risks/Challenges

Big data is complex because of the variety of data it encompasses, from structured data, such as transactions one makes or measurements one calculates and stores, to unstructured data, such as text conversations, multimedia presentations and video streams. Big data presents a number of challenges relating to its complexity: One challenge is how to understand and use big data when it comes in an unstructured format, such as text or video. Another challenge is how to capture the most important data as it happens and deliver it to the right people in real time. A third challenge is how to store, analyze and understand the data given its size and the available computational capacity.

Big data also poses security and privacy risks, because large amounts of data are stored in data warehouses and centralized in a single repository. Big data and extreme workloads require optimized hardware and software. The main challenges of big data and extreme workloads are data variety and volume, and analytical workload complexity and agility. Many organizations are struggling to deal with increasing data volumes, and big data simply makes the problem worse. To solve this problem, organizations need to reduce the amount of data being stored and exploit new storage technologies that improve performance and storage utilization. Big data's increasing economic importance also raises a number of legal issues, especially when coupled with the fact that data is fundamentally different from many other assets. For example, one piece of data can be copied perfectly and easily combined with other data. The same piece of data can be used simultaneously by more than one person. Sectors with a relative lack of competitive intensity and performance transparency, along with industries where profit pools are highly concentrated, are likely to be slow to fully leverage the benefits of big data.

Source: BI Research Using Big Data for Smarter Decision Making, http://spotfireblog.tibco.com/?p=6793, https://www.privacyassociation.org/publications/2012_03_23_big_data_it_risks_and_privacy_meet_in_the_boardroom

Big Data Importance

Creating transparency

Making big data more easily accessible to relevant stakeholders in a timely manner can create tremendous value. In the public sector, making relevant data more readily accessible across otherwise separated departments can sharply reduce search and processing time.

Enabling experimentation to discover needs

As more transactional data is created and stored in digital form, organizations can collect more accurate and detailed performance data on everything from product inventories to personnel sick days. They can then use this data to analyze variability in performance, including variability that is generated by controlled experiments.

Segmenting populations to customize actions

Big data allows organizations to create highly specific segmentations and to tailor products and services precisely to meet the needs of each segment. This approach is well known in marketing and risk management but can be revolutionary elsewhere.

Replacing human decision making with automated algorithms

Sophisticated analytics can substantially improve decision making, minimize risks and unearth valuable insights that would otherwise remain hidden. Such analytics have applications for many kinds of organizations; tax agencies, for example, can use automated risk engines to flag candidates for further examination.
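The following is a minimal sketch of what an automated risk engine of this kind might look like. Everything in it is hypothetical: the `TaxReturn` fields, the three rules, their weights and the threshold are invented for illustration and do not describe any real agency's method.

```python
from dataclasses import dataclass


@dataclass
class TaxReturn:
    """Hypothetical record; the fields are assumptions for this example."""
    taxpayer_id: str
    declared_income: float
    deductions: float
    years_since_last_audit: int


def risk_score(ret):
    """Add up points from simple, hand-written rules (illustrative only)."""
    score = 0
    # Rule 1: deductions are more than half of declared income.
    if ret.declared_income > 0 and ret.deductions / ret.declared_income > 0.5:
        score += 2
    # Rule 2: deductions claimed against zero or negative income.
    if ret.declared_income <= 0 and ret.deductions > 0:
        score += 2
    # Rule 3: the return has not been examined for a long time.
    if ret.years_since_last_audit >= 5:
        score += 1
    return score


def flag_for_review(returns, threshold=2):
    """Return the IDs of returns whose score reaches the threshold."""
    return [r.taxpayer_id for r in returns if risk_score(r) >= threshold]


if __name__ == "__main__":
    sample = [
        TaxReturn("A", 100_000, 60_000, 1),
        TaxReturn("B", 80_000, 5_000, 6),
        TaxReturn("C", 50_000, 10_000, 2),
    ]
    print(flag_for_review(sample))
```

The engine only flags candidates; the decision on each flagged case still rests with a human examiner, which matches the "further examination" wording above.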

Innovating new business models, products and services

Big data enables companies to create new products and services, enhance existing ones, and invent entirely new business models. Manufacturers are using data obtained from the use of actual products to improve the development of the next generation of products and to create innovative after-sales service offerings.

Source: McKinsey Big Data Report

Big Data Vendors

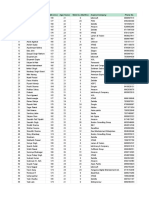

2012 Big Data Pure-Play Vendors, Yearly Big Data Revenue (in $US Million)

In the current market, big data pure-play vendors account for $300 million in big data-related revenue. Despite their relatively small percentage of current overall revenue (approximately 5%), big data pure-play vendors (such as Vertica, Splunk and Cloudera) are responsible for the vast majority of new innovations and modern approaches to data management and analytics that have emerged over the last several years and made big data the hottest sector in IT.

Source: http://www.forbes.com/sites/siliconangle/2012/02/17/big-data-is-big-market-big-business/

Big Data Trends

The McKinsey Global Institute estimated that enterprises globally stored more than seven exabytes of new data on disk drives in 2010, while consumers stored more than six exabytes of new data on devices such as PCs and notebooks. Big data has now reached every sector in the global economy. In total, European organizations have about 70% of the storage capacity of the entire United States at almost 11 exabytes. The possibilities of big data continue to evolve rapidly, driven by innovation in the underlying technologies, platforms and analytic capabilities for handling data, as well as the evolution of behavior among its users as more and more individuals live digital lives. The use of big data is becoming a key way for leading companies to outperform their peers. McKinsey estimated that a retailer embracing big data has the potential to increase its operating margin by more than 60%. The increasing use of multimedia in sectors, including health care and consumer-facing industries, has contributed significantly to the growth of big data and will continue to do so.

The surge in the use of social media is producing its own stream of new data. While social networks dominate the communications portfolios of younger users, older users are adopting them at an even more rapid pace.

Source: McKinsey Big Data Report

Big Data Examples

Big data includes web logs, RFID, sensor networks, social networks, social data, Internet text and documents, Internet search indexing, call detail records, complex and/or interdisciplinary scientific research, military surveillance, medical records, photography archives, video archives, and large-scale e-commerce.

Examples of Companies Using Big Data:

IBM has formed a partnership with the Netherlands Institute for Radio Astronomy (ASTRON) for the DOME Project, which provided support in developing the tools needed to crunch the data for the ambitious international Square Kilometer Array (SKA) radio telescope.

San Francisco-based SeeChange Company offered a better way of designing health insurance plans with what it calls "value-based benefits". The company used a substantial amount of data gleaned from personal health records, claims databases, lab feeds and pharmacy data to identify patients with chronic illnesses who would benefit from a customized compliance program.

Boston-based company Humedica combined its data analytics with a real-time clinical surveillance and decision support system. The company also sells its detailed clinical spending data to life sciences companies, with the idea that customers will use it to quantify patient populations, market share and market opportunities.

Castlight Health aimed to push transparency in healthcare pricing by offering consumers a search engine to find prices of healthcare services. Castlight's technology allowed consumers to run side-by-side comparisons of out-of-pocket medical expenses. The idea is that consumers armed with prices will shop for bargains, limiting the growth of healthcare costs.

Cleveland-based Explorys has started a Google-like service that helps clinicians analyze real-time information culled from troves of electronic medical records (EMRs), financial records and other data. The idea is that medical researchers can mine the vast amounts of data to learn how variations in treatment can affect outcomes, uncovering best practices to enhance patient care and lower costs.

Apixio's technology brings together data from structured sources like EMRs with unstructured data, such as a physician's patient encounter notes. The company's software uses natural language processing technology to interpret clinicians' free-text searches and return the most relevant results.

Source: http://www.forbes.com/sites/alexknapp/2012/04/09/ibm-is-using-big-data-to-crunch-the-big-bang/, http://www.medcitynews.com/2011/11/5-companies-using-big-data-to-solvehealthcare-problems/

Role of Internal Audit in Managing Big Data: Case Study

To manage data holdings effectively, an organization must first be aware of the location, condition and value of its research assets. Conducting a data audit provides this information, raising awareness of collection strengths and identifying weaknesses in data policies and management procedures. The benefits of conducting an audit for managing big data effectively are:

Check the extent of data assets and take a deep dive into everything that is available. Data that is redundant or unimportant may be identified and reduced.

Monitor holdings and avoid big data leaks. Data hacking, social engineering and data leaks all plague companies; an audit can help a company identify areas where leakage is possible.

Manage risks associated with big data loss and irretrievability. Data that is not structured and is lying untouched may never be retrieved; an audit can help identify such cases.

Develop a big data strategy and implement robust big data policies. Big data requires robust management and proper structuring.

Improve workflows and benefit from efficiency savings. Check where workflows are complex and time-consuming and where there is scope for improving efficiency.

Realize the value of big data through improved access and reuse. Check whether there are data holdings that have not been used in a while.
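Two of the audit checks listed above, finding redundant data and finding data that has not been used in a while, can be sketched in a few lines. This is a minimal illustration under stated assumptions: the inventory is assumed to be available as in-memory dictionaries (asset name to content, and asset name to last access date), and the function names and the 365-day idle limit are invented for the example.

```python
import hashlib
from collections import defaultdict
from datetime import date, timedelta


def content_hash(data):
    """Fingerprint the content so identical copies produce the same digest."""
    return hashlib.sha256(data).hexdigest()


def find_redundant(assets):
    """Group asset names whose content is byte-for-byte identical.

    assets: dict mapping asset name -> bytes content.
    Returns a list of name groups that contain more than one asset.
    """
    by_hash = defaultdict(list)
    for name, data in assets.items():
        by_hash[content_hash(data)].append(name)
    return [sorted(names) for names in by_hash.values() if len(names) > 1]


def find_stale(last_accessed, today, max_idle_days=365):
    """List assets that were last accessed before the idle cutoff.

    last_accessed: dict mapping asset name -> datetime.date of last access.
    """
    cutoff = today - timedelta(days=max_idle_days)
    return sorted(name for name, accessed in last_accessed.items()
                  if accessed < cutoff)


if __name__ == "__main__":
    # Hypothetical inventory for illustration.
    assets = {"a.csv": b"x", "b.csv": b"x", "c.csv": b"y"}
    accessed = {"a.csv": date(2010, 1, 1), "c.csv": date(2012, 3, 1)}
    print(find_redundant(assets))
    print(find_stale(accessed, today=date(2012, 6, 1)))
```

Hashing catches only exact duplicates; near-duplicates and data that is "unimportant" in a business sense still need human judgment during the audit.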

Source: http://www.data-audit.eu/docs/DAF_briefing_paper.pdf

Managing Big Data Through Internal Audit

The following are big data issues that internal audit can help mitigate:

Complex Big Data

Most companies collect large volumes of data, but they don't have comprehensive approaches for centralizing the information. Internal audit can help companies manage big data by streamlining and collating data effectively.

Big Data Security

Maintaining effective data security is increasingly recognized as a critical risk area for organizations. Loss of control over data security can have severe ramifications for an organization, including regulatory penalties, loss of reputation, and damage to business operations and profitability. Auditing can help organizations secure and control data collected.

Big Data Accessibility

Giving the right person access to big data at the right time is another challenge organizations face. Segregation of duties (SoD) is an important aspect that internal audit can check.
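A segregation-of-duties check can be sketched as a search for users who hold two roles that should never be combined. The role names and conflict pairs below are hypothetical; in practice they would come from the organization's own access policy.

```python
# Hypothetical pairs of roles that one person should not hold together.
CONFLICTING_ROLES = [
    ("create_vendor", "approve_payment"),
    ("load_data", "approve_data_release"),
]


def sod_violations(user_roles, conflicts=CONFLICTING_ROLES):
    """Return (user, role_a, role_b) for every conflicting pair a user holds.

    user_roles: dict mapping user name -> set of role names.
    """
    violations = []
    for user, roles in sorted(user_roles.items()):
        for first, second in conflicts:
            if first in roles and second in roles:
                violations.append((user, first, second))
    return violations


if __name__ == "__main__":
    access = {
        "alice": {"create_vendor", "approve_payment"},
        "bob": {"load_data"},
    }
    for user, first, second in sod_violations(access):
        print(f"{user} holds both {first} and {second}")
```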

Big Data Quality

The more data one accumulates, the harder it is to keep everything consistent and correct. Internal audit can check the quality of big data.
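As one illustration of what checking data quality can mean in practice, the sketch below counts records with missing required fields and records that reuse a key. The field names and the report layout are assumptions made for this example, not a standard.

```python
def quality_report(records, required_fields, key_field):
    """Count basic consistency problems in a list of dict records."""
    missing = 0
    duplicates = 0
    seen_keys = set()
    for record in records:
        # A required field counts as missing if it is absent, None or empty.
        if any(record.get(field) in (None, "") for field in required_fields):
            missing += 1
        key = record.get(key_field)
        if key in seen_keys:
            duplicates += 1
        else:
            seen_keys.add(key)
    return {
        "total": len(records),
        "missing_required": missing,
        "duplicate_keys": duplicates,
    }


if __name__ == "__main__":
    # Hypothetical customer records.
    rows = [
        {"id": 1, "name": "A"},
        {"id": 2, "name": ""},
        {"id": 1, "name": "C"},
    ]
    print(quality_report(rows, required_fields=["id", "name"], key_field="id"))
```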

Big Data Understanding

Understanding and interpreting big data remains one of the primary concerns for many organizations. Auditors can help simplify an organization's data effectively.

Source: http://www.acl.com/pdfs/wp_AA_Best_Practices.pdf, http://smartdatacollective.com/brett-stupakevich/48184/4-biggest-problems-big-data
