
PIPELINING AND VECTOR PROCESSING

- Parallel Processing
- Pipelining
- Arithmetic Pipeline
- Instruction Pipeline
- RISC Pipeline
- Vector Processing
- Array Processors

Computer Organization

Computer Architectures Lab


Parallel Processing

PARALLEL PROCESSING

Execution of concurrent events in the computing process to achieve faster computational speed

Levels of Parallel Processing

- Job or Program level
- Task or Procedure level
- Inter-Instruction level
- Intra-Instruction level


PARALLEL COMPUTERS

Architectural Classification

Flynn's classification

Based on the multiplicity of Instruction Streams and Data Streams

Instruction Stream

- Sequence of instructions read from memory

Data Stream

- Operations performed on the data in the processor

| Number of Instruction Streams | Single Data Stream | Multiple Data Streams |
|---|---|---|
| Single | SISD | SIMD |
| Multiple | MISD | MIMD |
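The classification can be read as a lookup on two counts. The following is a minimal sketch, not part of the original slides; the function name `flynn_class` and its parameters are assumptions introduced for illustration.

```python
def flynn_class(instruction_streams: int, data_streams: int) -> str:
    """Return the Flynn category for the given stream counts.

    Hypothetical helper: 1 means "single", anything larger means "multiple".
    """
    if instruction_streams < 1 or data_streams < 1:
        raise ValueError("stream counts must be at least 1")
    i = "S" if instruction_streams == 1 else "M"  # single or multiple instruction streams
    d = "S" if data_streams == 1 else "M"         # single or multiple data streams
    return f"{i}I{d}D"


print(flynn_class(1, 1))   # SISD
print(flynn_class(1, 64))  # SIMD
```

A machine with one control unit driving 64 processing elements maps to SIMD because only the data-stream count exceeds one.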


COMPUTER ARCHITECTURES FOR PARALLEL PROCESSING

- Von Neumann based
  - SISD
    - Superscalar processors
    - Superpipelined processors
    - VLIW
  - MISD
    - Nonexistence
  - SIMD
    - Array processors
    - Systolic arrays
    - Associative processors
  - MIMD
    - Shared-memory multiprocessors
      - Bus based
      - Crossbar switch based
      - Multistage IN based
    - Message-passing multicomputers
      - Hypercube
      - Mesh
      - Reconfigurable
- Dataflow
- Reduction


SISD COMPUTER SYSTEMS

[Figure: SISD organization. Control Unit, Processor Unit, and Memory, linked by the instruction stream and the data stream.]

Characteristics

- Standard von Neumann machine
- Instructions and data are stored in memory
- One operation at a time

Limitations

Von Neumann bottleneck

Maximum speed of the system is limited by the Memory Bandwidth (bits/sec or bytes/sec)

- Limitation on Memory Bandwidth
- Memory is shared by CPU and I/O


SISD PERFORMANCE IMPROVEMENTS

- Multiprogramming
- Spooling
- Multifunction processor
- Pipelining
- Exploiting instruction-level parallelism
  - Superscalar
  - Superpipelining
  - VLIW (Very Long Instruction Word)


MISD COMPUTER SYSTEMS

[Figure: MISD organization. Memory modules (M), control units (CU), processors (P), and Memory, linked by the instruction streams and a data stream.]

Characteristics

- There is no computer at present that can be classified as MISD


SIMD COMPUTER SYSTEMS

[Figure: SIMD organization. Memory, data bus, Control Unit, instruction stream, data stream, processor units, alignment network, and memory modules.]

Characteristics

- Only one copy of the program exists
- A single controller executes one instruction at a time


TYPES OF SIMD COMPUTERS

Array Processors

- The control unit broadcasts instructions to all PEs, and all active PEs execute the same instructions
- ILLIAC IV, GF-11, Connection Machine, DAP, MPP

Systolic Arrays

- Regular arrangement of a large number of very simple processors constructed on VLSI circuits
- CMU Warp, Purdue CHiP

Associative Processors

- Content addressing
- Data transformation operations over many sets of arguments with a single instruction
- STARAN, PEPE


MIMD COMPUTER SYSTEMS

[Figure: MIMD organization. Processor (P) and memory (M) pairs connected through an Interconnection Network to Shared Memory.]

Characteristics

- Multiple processing units

- Execution of multiple instructions on multiple data

Types of MIMD computer systems

- Shared memory multiprocessors
- Message-passing multicomputers


SHARED MEMORY MULTIPROCESSORS

[Figure: memory modules (M) attached to an Interconnection Network (IN).]

Interconnection Network (IN): Buses, Multistage IN, Crossbar Switch

Characteristics

All processors have equally direct access to one large memory address space

Example systems

- Bus and cache-based systems: Sequent Balance, Encore Multimax
- Multistage IN-based systems: Ultracomputer, Butterfly, RP3, HEP
- Crossbar switch-based systems: C.mmp, Alliant FX/8

Limitations

- Memory access latency
- Hot spot problem


MESSAGE-PASSING MULTICOMPUTER

[Figure: Message-Passing Network with point-to-point connections.]

Characteristics

- Interconnected computers
- Each processor has its own memory and communicates with the others via message passing

Example systems

- Tree structure: Teradata, DADO
- Mesh-connected: Rediflow, Series 2010, J-Machine
- Hypercube: Cosmic Cube, iPSC, NCUBE, FPS T Series, Mark III

Limitations

- Communication overhead
- Hard to program


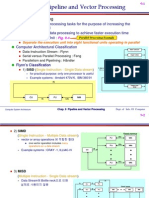

Pipelining

PIPELINING

A technique of decomposing a sequential process into suboperations, with each suboperation executed in a dedicated segment that operates concurrently with all other segments.

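Example: Ai * Bi + Ci for i = 1, 2, 3, ... , 7

[Figure: three-segment pipeline. Segment 1 holds Ai and Bi in R1 and R2. Segment 2 contains the Multiplier, with registers R3 and R4; Ci comes from Memory. Segment 3 contains the Adder, with register R5.]

| Segment | Register transfers | Suboperation |
|---|---|---|
| 1 | R1 ← Ai, R2 ← Bi | Load Ai and Bi |
| 2 | R3 ← R1 * R2, R4 ← Ci | Multiply and load Ci |
| 3 | R5 ← R3 + R4 | Add |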


OPERATIONS IN EACH PIPELINE STAGE

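| Clock Pulse Number | Segment 1: R1 | Segment 1: R2 | Segment 2: R3 | Segment 2: R4 | Segment 3: R5 |
|---|---|---|---|---|---|
| 1 | A1 | B1 | - | - | - |
| 2 | A2 | B2 | A1 * B1 | C1 | - |
| 3 | A3 | B3 | A2 * B2 | C2 | A1 * B1 + C1 |
| 4 | A4 | B4 | A3 * B3 | C3 | A2 * B2 + C2 |
| 5 | A5 | B5 | A4 * B4 | C4 | A3 * B3 + C3 |
| 6 | A6 | B6 | A5 * B5 | C5 | A4 * B4 + C4 |
| 7 | A7 | B7 | A6 * B6 | C6 | A5 * B5 + C5 |
| 8 | - | - | A7 * B7 | C7 | A6 * B6 + C6 |
| 9 | - | - | - | - | A7 * B7 + C7 |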
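The register contents per clock pulse can be reproduced with a small simulation. This is a sketch under stated assumptions: the function name `run_pipeline` and the use of `None` for an empty register are illustrative choices, not part of the original slides.

```python
def run_pipeline(a, b, c):
    """Simulate the three-segment pipeline computing a[i] * b[i] + c[i].

    Returns (results, trace); each trace row is (clock, R1, R2, R3, R4, R5),
    with None standing for an empty register (an assumption of this sketch).
    """
    n = len(a)
    r1 = r2 = r3 = r4 = r5 = None
    results, trace = [], []
    for clock in range(1, n + 3):  # n tasks need n + 2 clock pulses
        # Update the last segment first so each one reads the previous pulse's values.
        r5 = r3 + r4 if r3 is not None else None          # Segment 3: add
        if r1 is not None:
            r3, r4 = r1 * r2, c[clock - 2]                # Segment 2: multiply, load Ci
        else:
            r3 = r4 = None
        if clock <= n:
            r1, r2 = a[clock - 1], b[clock - 1]           # Segment 1: load Ai, Bi
        else:
            r1 = r2 = None
        trace.append((clock, r1, r2, r3, r4, r5))
        if r5 is not None:
            results.append(r5)
    return results, trace


results, trace = run_pipeline([1, 2, 3, 4, 5, 6, 7], [2] * 7, [1] * 7)
print(results)     # [3, 5, 7, 9, 11, 13, 15]
print(len(trace))  # 9
```

The first result appears at clock pulse 3, and seven tasks finish in nine pulses, which matches the k + n - 1 count for k = 3 segments.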


GENERAL PIPELINE

General Structure of a 4-Segment Pipeline

[Figure: Input → S1 → R1 → S2 → R2 → S3 → R3 → S4 → R4, with a common Clock driving registers R1 to R4.]

Space-Time Diagram

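| Segment \ Clock cycle | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| 1 | T1 | T2 | T3 | T4 | T5 | T6 | - | - | - |
| 2 | - | T1 | T2 | T3 | T4 | T5 | T6 | - | - |
| 3 | - | - | T1 | T2 | T3 | T4 | T5 | T6 | - |
| 4 | - | - | - | T1 | T2 | T3 | T4 | T5 | T6 |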
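A space-time diagram for any number of segments and tasks follows one rule: task Tj occupies segment s during clock cycle j + s - 1. The sketch below generates the rows; the function name `space_time` and the "-" marker for an idle segment are assumptions made for illustration.

```python
def space_time(k, n):
    """Return k rows (one per segment) of k + n - 1 entries (one per clock cycle)."""
    cycles = k + n - 1
    table = []
    for seg in range(k):
        row = []
        for cycle in range(cycles):
            task = cycle - seg  # zero-based index of the task in this segment, if any
            row.append(f"T{task + 1}" if 0 <= task < n else "-")
        table.append(row)
    return table


for seg, row in enumerate(space_time(k=4, n=6), start=1):
    print(f"Segment {seg}: " + " ".join(f"{cell:>2}" for cell in row))
```

With k = 4 and n = 6 each row has nine entries, and task T6 leaves segment 4 in clock cycle 9.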


PIPELINE SPEEDUP

n: Number of tasks to be performed

Conventional Machine (Non-Pipelined)

- tn: Clock cycle
- Time required to complete the n tasks = n * tn

Pipelined Machine (k stages)

- tp: Clock cycle (time to complete each suboperation)
- Time required to complete the n tasks = (k + n - 1) * tp

Speedup

Sk: Speedup

$$S_k = \frac{n \, t_n}{(k + n - 1) \, t_p}$$

$$\lim_{n \to \infty} S_k = \frac{t_n}{t_p} \quad (= k, \text{ if } t_n = k \, t_p)$$
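The limit follows by dividing the numerator and denominator of the speedup by n. A short derivation, using only the symbols defined on this slide:

```latex
\[
S_k = \frac{n\,t_n}{(k+n-1)\,t_p}
    = \frac{t_n}{\left(1 + \dfrac{k-1}{n}\right) t_p}
\quad\Longrightarrow\quad
\lim_{n\to\infty} S_k = \frac{t_n}{t_p}
\]
```

Since (k - 1)/n vanishes as n grows, the fixed cost of filling the pipeline is amortized over the tasks, and with tn = k * tp the speedup approaches k.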


PIPELINE AND MULTIPLE FUNCTION UNITS

Example

- 4-stage pipeline
- Suboperation in each stage: tp = 20 ns
- 100 tasks to be executed
- 1 task in the non-pipelined system: 20 * 4 = 80 ns

Pipelined System

(k + n - 1) * tp = (4 + 99) * 20 = 2060 ns

Non-Pipelined System

n * k * tp = 100 * 80 = 8000 ns

Speedup

Sk = 8000 / 2060 = 3.88

A 4-stage pipeline is basically identical to a system with 4 identical function units.

Multiple Functional Units

[Figure: instructions Ii, Ii+1, Ii+2, Ii+3 issued to four parallel function units P1, P2, P3, P4.]
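The arithmetic in this example can be checked with a few lines of code. This is a sketch, assuming as the example does that one non-pipelined task takes k * tp; the function name `pipeline_times` is hypothetical.

```python
def pipeline_times(n, k, tp):
    """Return (pipelined time, non-pipelined time, speedup) for n tasks.

    Assumes tn = k * tp, i.e. a non-pipelined task costs all k suboperations.
    """
    pipelined = (k + n - 1) * tp
    non_pipelined = n * k * tp
    return pipelined, non_pipelined, non_pipelined / pipelined


pipelined_ns, non_pipelined_ns, speedup = pipeline_times(n=100, k=4, tp=20)
print(pipelined_ns, non_pipelined_ns, round(speedup, 2))  # 2060 8000 3.88
```

Raising n moves the speedup toward k = 4 without reaching it, because the k - 1 cycles needed to fill the pipeline are never recovered.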


Arithmetic Pipeline

ARITHMETIC PIPELINE

Floating-point adder

$X = A \times 2^a$, $Y = B \times 2^b$

[1] Compare the exponents
[2] Align the mantissa
[3] Add/sub the mantissa
[4] Normalize the result

[Figure: four-segment floating-point adder. Inputs are the exponents a, b and the mantissas A, B; registers (R) separate the segments.]

- Segment 1: Compare exponents by subtraction (produces the difference)
- Segment 2: Choose exponent; align mantissa
- Segment 3: Add or subtract mantissas
- Segment 4: Adjust exponent; normalize result


4-STAGE FLOATING POINT ADDER

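$A = a \times 2^p$, $B = b \times 2^q$ (inputs: exponents p, q and fractions a, b)

Stages:

- S1: Exponent subtractor, fraction selector, and right shifter. Produces r = max(p, q) and t = |p - q|; the fraction with min(p, q) goes to the right shifter, and the other fraction passes through.
- S2: Fraction adder. Produces c.
- S3: Leading zero counter and left shifter. Normalizes c into d.
- S4: Exponent adder. Produces s from r.

$C = A + B = c \times 2^r = d \times 2^s$, where $r = \max(p, q)$ and $0.5 \le d < 1$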
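The four stages can be sketched in software on (fraction, exponent) pairs. This is an illustrative model, not the hardware design: the function name `fp_add` is hypothetical, fractions are ordinary Python floats, and the normalization loop also handles a sum of magnitude 1 or more by shifting right, which is an assumption added so the sketch covers that case.

```python
def fp_add(a, p, b, q):
    """Add A = a * 2**p and B = b * 2**q; return (d, s) with C = d * 2**s."""
    # S1: exponent subtractor, fraction selector, right shifter
    r = max(p, q)
    t = abs(p - q)
    if p >= q:
        other, selected = a, b   # b belongs to the smaller exponent
    else:
        other, selected = b, a
    aligned = selected / (2 ** t)  # right shift by t bit positions

    # S2: fraction adder
    c = other + aligned

    # S3 and S4: normalize the fraction and adjust the exponent
    if c == 0:
        return 0.0, 0
    d, s = c, r
    while abs(d) >= 1:    # fraction overflow: shift right, increment exponent
        d /= 2
        s += 1
    while abs(d) < 0.5:   # leading zeros: shift left, decrement exponent
        d *= 2
        s -= 1
    return d, s


print(fp_add(0.75, 3, 0.5, 1))  # (0.875, 3), i.e. 6 + 1 = 7
```

In the example, t = 2, so the fraction 0.5 is shifted right to 0.125 before the add; the sum 0.875 already lies in [0.5, 1) and needs no normalization.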


Instruction Pipeline

INSTRUCTION CYCLE

Six Phases* in an Instruction Cycle

[1] Fetch an instruction from memory
[2] Decode the instruction
[3] Calculate the effective address of the operand
[4] Fetch the operands from memory
[5] Execute the operation
[6] Store the result in the proper place

* Some instructions skip some phases
* Effective address calculation can be done as part of the decoding phase
* Storage of the operation result into a register is done automatically in the execution phase

==> 4-Stage Pipeline

[1] FI: Fetch an instruction from memory
[2] DA: Decode the instruction and calculate the effective address of the operand
[3] FO: Fetch the operand
[4] EX: Execute the operation


INSTRUCTION PIPELINE

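Execution of Three Instructions in a 4-Stage Pipeline

Conventional

| Instruction \ Step | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| i | FI | DA | FO | EX | - | - | - | - | - | - | - | - |
| i+1 | - | - | - | - | FI | DA | FO | EX | - | - | - | - |
| i+2 | - | - | - | - | - | - | - | - | FI | DA | FO | EX |

Pipelined

| Instruction \ Step | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| i | FI | DA | FO | EX | - | - |
| i+1 | - | FI | DA | FO | EX | - |
| i+2 | - | - | FI | DA | FO | EX |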


INSTRUCTION EXECUTION IN A 4-STAGE PIPELINE

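[Flowchart]

- Segment 1: Fetch instruction from memory
- Segment 2: Decode instruction and calculate effective address
  - Branch? yes: update PC and empty pipe; no: continue
- Segment 3: Fetch operand from memory
- Segment 4: Execute instruction
  - Interrupt? yes: interrupt handling, then update PC and empty pipe; no: continue

Timing with a branch at instruction 3

| Instruction \ Step | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | FI | DA | FO | EX | - | - | - | - | - | - | - | - | - |
| 2 | - | FI | DA | FO | EX | - | - | - | - | - | - | - | - |
| 3 (Branch) | - | - | FI | DA | FO | EX | - | - | - | - | - | - | - |
| 4 | - | - | - | FI | - | - | FI | DA | FO | EX | - | - | - |
| 5 | - | - | - | - | - | - | - | FI | DA | FO | EX | - | - |
| 6 | - | - | - | - | - | - | - | - | FI | DA | FO | EX | - |
| 7 | - | - | - | - | - | - | - | - | - | FI | DA | FO | EX |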
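The effect of the branch on the timing can be modeled in a few lines. This is a sketch under stated assumptions: the branch is resolved in its EX step, the one instruction already fetched behind it is discarded and fetched again in the following step, and the function name `branch_timing` is hypothetical.

```python
STAGES = ["FI", "DA", "FO", "EX"]


def branch_timing(n, branch):
    """Return {instruction: {step: stage}} for n instructions, where
    instruction number `branch` (1-based) is a branch."""
    if not 1 <= branch <= n:
        raise ValueError("branch must be an instruction number from 1 to n")
    timing = {}
    start = 1              # step in which the next instruction is fetched
    branch_ex_step = None  # step in which the branch executes
    for i in range(1, n + 1):
        timing[i] = {}
        if i == branch + 1:
            timing[i][start] = "FI"     # fetched while the branch is decoded, then discarded
            start = branch_ex_step + 1  # pipe emptied; fetch again after the branch executes
        for offset, stage in enumerate(STAGES):
            timing[i][start + offset] = stage
        if i == branch:
            branch_ex_step = start + len(STAGES) - 1
        start += 1
    return timing


timing = branch_timing(n=7, branch=3)
print(timing[4])        # {4: 'FI', 7: 'FI', 8: 'DA', 9: 'FO', 10: 'EX'}
print(max(timing[7]))   # 13
```

Without the branch, seven instructions would finish in 4 + 7 - 1 = 10 steps; emptying the pipe adds three steps, for a total of 13.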
